Shuhei Kurita

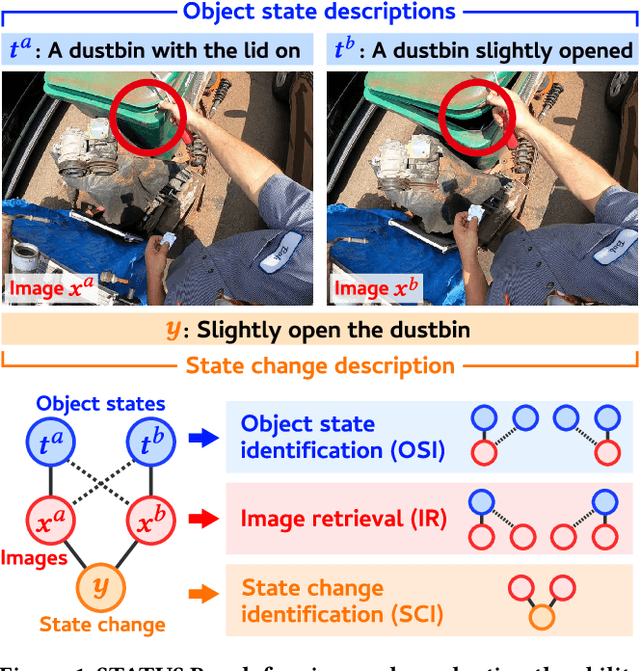

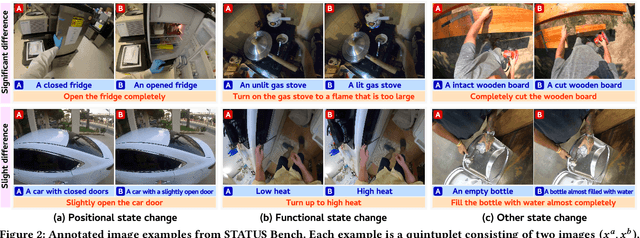

STATUS Bench: A Rigorous Benchmark for Evaluating Object State Understanding in Vision-Language Models

Oct 26, 2025

Abstract: Object state recognition aims to identify the specific condition of objects, such as their positional states (e.g., open or closed) and functional states (e.g., on or off). While recent Vision-Language Models (VLMs) are capable of performing a variety of multimodal tasks, it remains unclear how precisely they can identify object states. To address this question, we introduce the STAte and Transition UnderStanding Benchmark (STATUS Bench), the first benchmark for rigorously evaluating the ability of VLMs to understand subtle variations in object states in diverse situations. Specifically, STATUS Bench introduces a novel evaluation scheme that requires VLMs to perform three tasks simultaneously: object state identification (OSI), image retrieval (IR), and state change identification (SCI). These tasks are defined over our fully hand-crafted dataset of image pairs, their corresponding object state descriptions, and state change descriptions. Furthermore, we introduce a large-scale training dataset, STATUS Train, which consists of 13 million semi-automatically created descriptions. This dataset serves as the largest resource to facilitate further research in this area. In our experiments, we demonstrate that STATUS Bench enables rigorous consistency evaluation and reveal that current state-of-the-art VLMs still struggle significantly to capture subtle object state distinctions. Surprisingly, under the proposed rigorous evaluation scheme, most open-weight VLMs exhibited chance-level zero-shot performance. After fine-tuning on STATUS Train, Qwen2.5-VL achieved performance comparable to Gemini 2.0 Flash. These findings underscore the necessity of STATUS Bench and STATUS Train for advancing object state recognition in VLM research.
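To illustrate why a joint evaluation over OSI, IR, and SCI can be much stricter than per-task scoring, here is a minimal sketch. It assumes that a sample counts as correct only when all three task predictions are correct; the abstract does not spell out the exact scoring rule, so this aggregation, the `SampleResult` type, and the toy results are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SampleResult:
    """Per-sample correctness flags (hypothetical structure, not the paper's format)."""
    osi_correct: bool  # object state identification
    ir_correct: bool   # image retrieval
    sci_correct: bool  # state change identification


def per_task_accuracy(results):
    """Accuracy of each task scored independently."""
    n = len(results)
    return {
        "OSI": sum(r.osi_correct for r in results) / n,
        "IR": sum(r.ir_correct for r in results) / n,
        "SCI": sum(r.sci_correct for r in results) / n,
    }


def joint_accuracy(results):
    """Assumed strict rule: a sample is correct only if all three tasks are correct."""
    hits = sum(r.osi_correct and r.ir_correct and r.sci_correct for r in results)
    return hits / len(results)


# Toy data: each task looks decent in isolation, yet few samples are fully consistent.
toy_results = [
    SampleResult(True, True, True),
    SampleResult(True, False, True),
    SampleResult(True, True, False),
    SampleResult(False, True, True),
]

print(per_task_accuracy(toy_results))
print(joint_accuracy(toy_results))
```

In this toy case every task reaches 75% accuracy on its own, while the joint score is 25%, which is the kind of gap a consistency-oriented scheme is meant to expose.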

Developing Vision-Language-Action Model from Egocentric Videos

Sep 26, 2025

Abstract: Egocentric videos capture how humans manipulate objects and tools, providing diverse motion cues for learning object manipulation. Unlike the costly, expert-driven manual teleoperation commonly used in training Vision-Language-Action models (VLAs), egocentric videos offer a scalable alternative. However, prior studies that leverage such videos for training robot policies typically rely on auxiliary annotations, such as detailed hand-pose recordings. Consequently, it remains unclear whether VLAs can be trained directly from raw egocentric videos. In this work, we address this challenge by leveraging EgoScaler, a framework that extracts 6DoF object manipulation trajectories from egocentric videos without requiring auxiliary recordings. We apply EgoScaler to four large-scale egocentric video datasets and automatically refine noisy or incomplete trajectories, thereby constructing a new large-scale dataset for VLA pre-training. Our experiments with a state-of-the-art $\pi_0$ architecture in both simulated and real-robot environments yield three key findings: (i) pre-training on our dataset improves task success rates by over 20% compared to training from scratch, (ii) the performance is competitive with that achieved using real-robot datasets, and (iii) combining our dataset with real-robot data yields further improvements. These results demonstrate that egocentric videos constitute a promising and scalable resource for advancing VLA research.

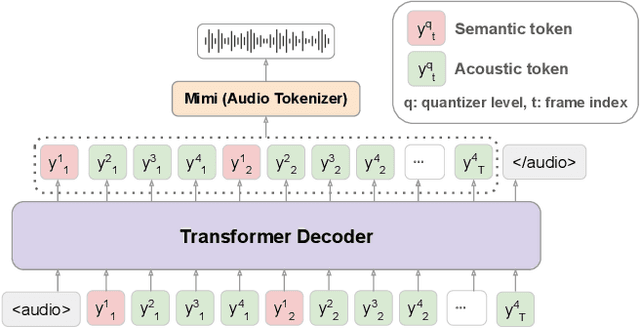

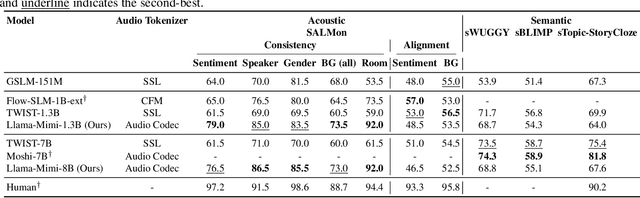

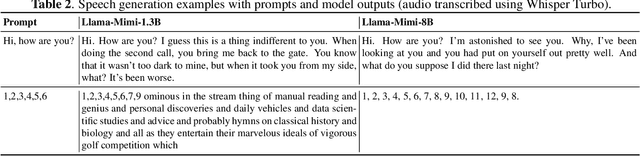

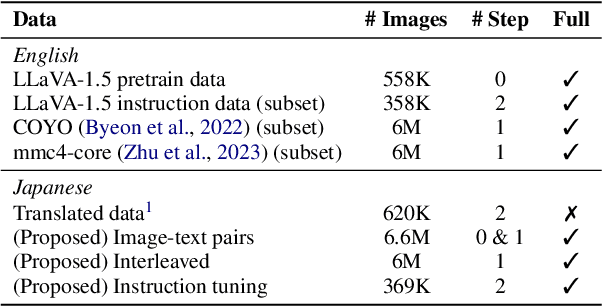

Llama-Mimi: Speech Language Models with Interleaved Semantic and Acoustic Tokens

Sep 18, 2025

Abstract: We propose Llama-Mimi, a speech language model that uses a unified tokenizer and a single Transformer decoder to jointly model sequences of interleaved semantic and acoustic tokens. Comprehensive evaluation shows that Llama-Mimi achieves state-of-the-art performance in acoustic consistency and possesses the ability to preserve speaker identity. Our analysis further demonstrates that increasing the number of quantizers improves acoustic fidelity but degrades linguistic performance, highlighting the inherent challenge of maintaining long-term coherence. We additionally introduce an LLM-as-a-Judge-based evaluation to assess the spoken content quality of generated outputs. Our models, code, and speech samples are publicly available.
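The idea of modeling interleaved semantic and acoustic tokens with a single decoder can be sketched as a simple flattening step. The sketch below assumes a frame-major layout in which each frame contributes one token per quantizer, with quantizer 0 treated as the semantic level; the abstract does not state the exact ordering, so the layout and the function names are assumptions for illustration only.

```python
def interleave(codes):
    """Flatten quantizer-major codes into one frame-major sequence.

    codes: list of Q lists, each holding T token ids (one list per quantizer).
    Returns a list of (quantizer_index, token_id) pairs ordered frame by frame.
    """
    num_frames = len(codes[0])
    if any(len(level) != num_frames for level in codes):
        raise ValueError("every quantizer level must have the same number of frames")
    return [
        (q, level[t])
        for t in range(num_frames)
        for q, level in enumerate(codes)
    ]


def deinterleave(flat, num_quantizers):
    """Invert interleave(): regroup the flat sequence by quantizer level."""
    codes = [[] for _ in range(num_quantizers)]
    for q, token in flat:
        codes[q].append(token)
    return codes


# Toy example: 3 quantizers (level 0 assumed semantic, levels 1-2 acoustic), 2 frames.
toy_codes = [[1, 2], [10, 20], [100, 200]]
flat = interleave(toy_codes)
print(flat)
print(deinterleave(flat, num_quantizers=3) == toy_codes)
```

Under this layout the sequence length grows linearly with the number of quantizers, which is consistent with the trade-off the abstract reports between acoustic fidelity and long-term coherence.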

LegalViz: Legal Text Visualization by Text To Diagram Generation

Feb 10, 2025

Abstract: Legal documents, including judgments and court orders, require highly sophisticated legal knowledge to understand. To make this expert knowledge accessible to non-experts, we explore the problem of visualizing legal texts as easy-to-understand diagrams and propose LegalViz, a novel dataset covering 23 languages and 7,010 pairs of legal documents and visualizations, written in the DOT graph description language of Graphviz. LegalViz provides a simple diagram from a complicated legal corpus, identifying at a glance the legal entities, transactions, legal sources, and statements that are essential to each judgment. In addition, we provide new evaluation metrics for legal diagram visualization that consider graph structures, textual similarities, and legal contents. We conducted empirical studies on few-shot prompting and fine-tuning of large language models for generating legal diagrams and evaluated them with these metrics, including legal content-based evaluation across 23 languages. Models trained with LegalViz outperform existing models including GPTs, confirming the effectiveness of our dataset.
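As a rough illustration of what a diagram in the DOT language might look like, the following sketch builds a small directed graph from entities and relations. The case content, node names, and the `to_dot` helper are invented for this example and are not taken from the LegalViz dataset; only the basic DOT syntax (`digraph`, `label` attributes, `->` edges) is standard Graphviz.

```python
def escape(label):
    """Escape double quotes so the label stays a valid DOT string."""
    return label.replace('"', '\\"')


def to_dot(entities, relations, graph_name="legal_case"):
    """Render entities and labeled relations as a DOT digraph string.

    entities: dict mapping node id -> display label
    relations: list of (source id, target id, edge label) tuples
    """
    lines = [f"digraph {graph_name} {{"]
    for node_id, label in entities.items():
        lines.append(f'  {node_id} [label="{escape(label)}"];')
    for src, dst, label in relations:
        lines.append(f'  {src} -> {dst} [label="{escape(label)}"];')
    lines.append("}")
    return "\n".join(lines)


# Hypothetical case, made up purely for illustration.
example_entities = {
    "plaintiff": "Plaintiff (Company A)",
    "defendant": "Defendant (Company B)",
    "court": "Court",
}
example_relations = [
    ("plaintiff", "defendant", "claims damages"),
    ("court", "plaintiff", "dismisses claim"),
]

print(to_dot(example_entities, example_relations))
```

The resulting text can be rendered with any Graphviz front end, for example by saving it to a `.gv` file and running the `dot` command-line tool.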

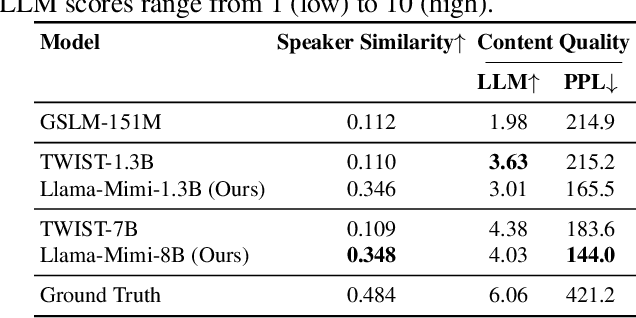

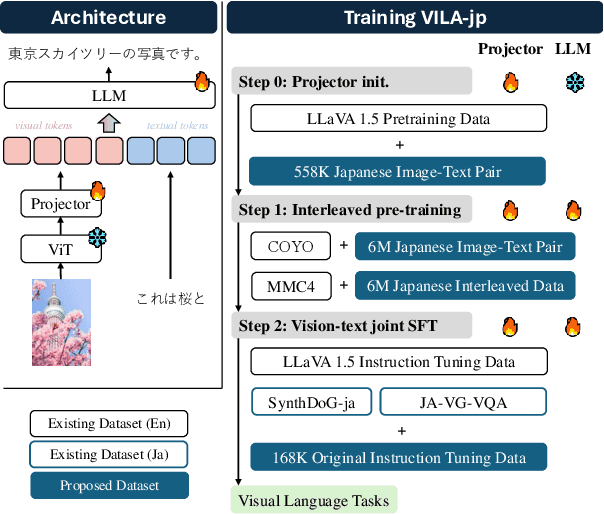

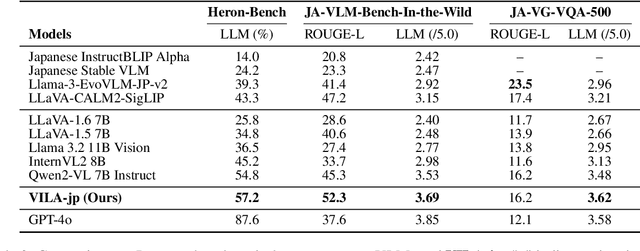

Constructing Multimodal Datasets from Scratch for Rapid Development of a Japanese Visual Language Model

Oct 30, 2024

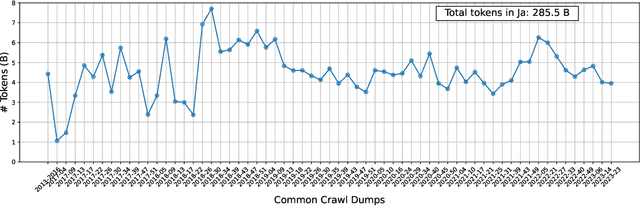

Abstract: To develop high-performing Visual Language Models (VLMs), it is essential to prepare multimodal resources, such as image-text pairs, interleaved data, and instruction data. While multimodal resources for English are abundant, there is a significant lack of corresponding resources for non-English languages, such as Japanese. To address this problem, we take Japanese as a non-English language and propose a method for rapidly creating Japanese multimodal datasets from scratch. We collect Japanese image-text pairs and interleaved data from web archives and generate Japanese instruction data directly from images using an existing VLM. Our experimental results show that a VLM trained on these native datasets outperforms those relying on machine-translated content.

AdaCoder: Adaptive Prompt Compression for Programmatic Visual Question Answering

Jul 28, 2024

Abstract: Visual question answering aims to provide responses to natural language questions given visual input. Recently, visual programmatic models (VPMs), which generate executable programs to answer questions through large language models (LLMs), have attracted research interest. However, they often require long input prompts to provide the LLM with sufficient API usage details to generate relevant code. To address this limitation, we propose AdaCoder, an adaptive prompt compression framework for VPMs. AdaCoder operates in two phases: a compression phase and an inference phase. In the compression phase, given a preprompt that describes all API definitions in the Python language with example snippets of code, a set of compressed preprompts is generated, each depending on a specific question type. In the inference phase, given an input question, AdaCoder predicts the question type and chooses the appropriate corresponding compressed preprompt to generate code to answer the question. Notably, AdaCoder employs a single frozen LLM and pre-defined prompts, negating the necessity of additional training and maintaining adaptability across different powerful black-box LLMs such as GPT and Claude. In experiments, we apply AdaCoder to ViperGPT and demonstrate that it reduces token length by 71.1%, while maintaining or even improving the performance of visual question answering.
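The inference phase described above (predict the question type, then pick the matching compressed preprompt) can be sketched as a lookup. Everything concrete here is a placeholder: the question types, the preprompt strings, and especially the keyword-based `predict_question_type`, which stands in for the type prediction step in AdaCoder and is not the paper's method.

```python
# Hypothetical output of the compression phase: one short preprompt per question type.
COMPRESSED_PREPROMPTS = {
    "counting": "# API subset for counting objects (placeholder text)",
    "existence": "# API subset for checking object existence (placeholder text)",
    "general": "# Generic API subset (placeholder text)",
}


def predict_question_type(question):
    """Keyword heuristic used only as a stand-in for the real type predictor."""
    q = question.strip().lower()
    if q.startswith("how many"):
        return "counting"
    if q.startswith(("is there", "are there")):
        return "existence"
    return "general"


def build_prompt(question):
    """Select the compressed preprompt for the predicted type and append the question."""
    question_type = predict_question_type(question)
    preprompt = COMPRESSED_PREPROMPTS[question_type]
    return f"{preprompt}\n# Question: {question}\n"


print(build_prompt("How many dogs are in the image?"))
```

The point of the design is that only the preprompt relevant to the predicted type is sent to the code-generating LLM, so prompt length shrinks without any model training.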

Answerability Fields: Answerable Location Estimation via Diffusion Models

Jul 26, 2024

Abstract: In an era characterized by advancements in artificial intelligence and robotics, enabling machines to interact with and understand their environment is a critical research endeavor. In this paper, we propose Answerability Fields, a novel approach to predicting answerability within complex indoor environments. Leveraging a 3D question answering dataset, we construct a comprehensive Answerability Fields dataset, encompassing diverse scenes and questions from ScanNet. Using a diffusion model, we successfully infer and evaluate these Answerability Fields, demonstrating the importance of objects and their locations in answering questions within a scene. Our results showcase the efficacy of Answerability Fields in guiding scene-understanding tasks, laying the foundation for their application in enhancing interactions between intelligent agents and their environments.

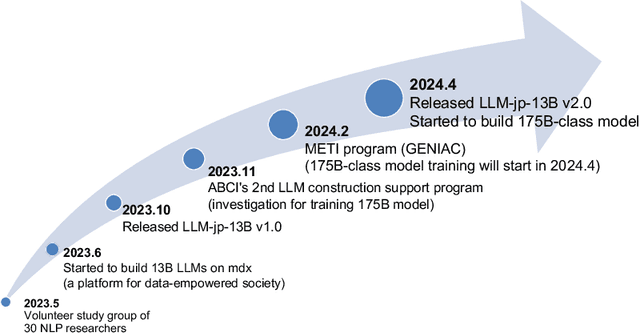

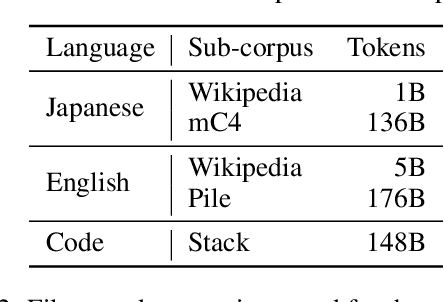

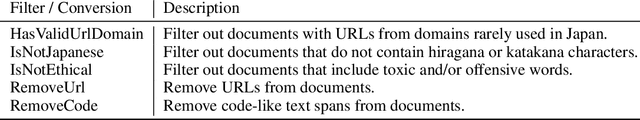

LLM-jp: A Cross-organizational Project for the Research and Development of Fully Open Japanese LLMs

Jul 04, 2024

Abstract: This paper introduces LLM-jp, a cross-organizational project for the research and development of Japanese large language models (LLMs). LLM-jp aims to develop strong open-source Japanese LLMs, and as of this writing, more than 1,500 participants from academia and industry are working together for this purpose. This paper presents the background of the establishment of LLM-jp, summaries of its activities, and technical reports on the LLMs developed by LLM-jp. For the latest activities, visit https://llm-jp.nii.ac.jp/en/.

CityNav: Language-Goal Aerial Navigation Dataset with Geographic Information

Jun 20, 2024

Abstract: Vision-and-language navigation (VLN) aims to guide autonomous agents through real-world environments by integrating visual and linguistic cues. While substantial progress has been made in understanding these interactive modalities in ground-level navigation, aerial navigation remains largely underexplored. This is primarily due to the scarcity of resources suitable for real-world, city-scale aerial navigation studies. To bridge this gap, we introduce CityNav, a new dataset for language-goal aerial navigation using a 3D point cloud representation from real-world cities. CityNav includes 32,637 natural language descriptions paired with human demonstration trajectories, collected from participants via a new web-based 3D simulator developed for this research. Each description specifies a navigation goal, leveraging the names and locations of landmarks within real-world cities. We also provide baseline models of navigation agents that incorporate an internal 2D spatial map representing landmarks referenced in the descriptions. We benchmark the latest aerial navigation baselines and our proposed model on the CityNav dataset. The results using this dataset reveal the following key findings: (i) Our aerial agent models trained on human demonstration trajectories outperform those trained on shortest path trajectories, highlighting the importance of human-driven navigation strategies; (ii) The integration of a 2D spatial map significantly enhances navigation efficiency at city scale. Our dataset and code are available at https://water-cookie.github.io/city-nav-proj/

Map-based Modular Approach for Zero-shot Embodied Question Answering

May 26, 2024

Abstract: Building robots capable of interacting with humans through natural language in the visual world presents a significant challenge in the field of robotics. To overcome this challenge, Embodied Question Answering (EQA) has been proposed as a benchmark task to measure the ability to identify an object while navigating through a previously unseen environment in response to human-posed questions. Although some methods have been proposed, their evaluations have been limited to simulations, without experiments in real-world scenarios. Furthermore, all of these methods are constrained by a limited vocabulary for question-and-answer interactions, making them unsuitable for practical applications. In this work, we propose a map-based modular EQA method that enables real robots to navigate unknown environments through frontier-based map creation and address unknown QA pairs using foundation models that support open vocabulary. Unlike the questions of the previous EQA dataset on Matterport 3D (MP3D), questions in our real-world experiments contain various question formats and vocabularies not included in the training data. We conduct comprehensive experiments on virtual environments (MP3D-EQA) and two real-world house environments and demonstrate that our method can perform EQA even in the real world.
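Frontier-based exploration is commonly built on one simple test: a frontier is a known-free cell that borders unexplored space. The sketch below shows that generic test on a small occupancy grid. The cell encoding, the 4-neighborhood, and the `find_frontiers` name are assumptions for illustration; the abstract does not describe the paper's actual mapping pipeline at this level of detail.

```python
FREE, OCCUPIED, UNKNOWN = 0, 1, -1  # assumed cell encoding for this sketch


def find_frontiers(grid):
    """Return (row, col) of every free cell with at least one unknown 4-neighbor."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break  # one unknown neighbor is enough
    return frontiers


# Toy map: the right-hand column is mostly unexplored.
toy_grid = [
    [FREE, FREE, UNKNOWN],
    [FREE, OCCUPIED, UNKNOWN],
    [FREE, FREE, FREE],
]

print(find_frontiers(toy_grid))
```

A robot would typically pick one of the returned cells as its next navigation goal, re-scan, and repeat until no frontiers remain or the question can be answered.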
