Shirley Ho

Test-time Generalization for Physics through Neural Operator Splitting

Jan 31, 2026

Abstract:Neural operators have shown promise in learning solution maps of partial differential equations (PDEs), but they often struggle to generalize when test inputs lie outside the training distribution, such as novel initial conditions, unseen PDE coefficients or unseen physics. Prior works address this limitation with large-scale multiple physics pretraining followed by fine-tuning, but this still requires examples from the new dynamics, falling short of true zero-shot generalization. In this work, we propose a method to enhance generalization at test time, i.e., without modifying pretrained weights. Building on DISCO, which provides a dictionary of neural operators trained across different dynamics, we introduce a neural operator splitting strategy that, at test time, searches over compositions of training operators to approximate unseen dynamics. On challenging out-of-distribution tasks including parameter extrapolation and novel combinations of physics phenomena, our approach achieves state-of-the-art zero-shot generalization results, while being able to recover the underlying PDE parameters. These results underscore test-time computation as a key avenue for building flexible, compositional, and generalizable neural operators.
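The idea of composing known operators to approximate unseen dynamics can be illustrated with classical Lie operator splitting on a scalar ODE. The sketch below is not the paper's method: it assumes a hypothetical dictionary of hand-written exact sub-flows (`decay`, `forcing`, `growth`) in place of learned neural operators, and it uses a brute-force search over unordered subsets as a stand-in for the test-time search described in the abstract.

```python
import math
from itertools import combinations

# Hypothetical "dictionary" of exact sub-flows, each advancing u by dt
# under one simple dynamic. These stand in for pretrained operators.
def decay(u, dt, rate=1.0):
    return u * math.exp(-rate * dt)   # du/dt = -rate * u

def forcing(u, dt, b=0.5):
    return u + b * dt                 # du/dt = b

def growth(u, dt, rate=0.3):
    return u * math.exp(rate * dt)    # du/dt = rate * u

DICTIONARY = {"decay": decay, "forcing": forcing, "growth": growth}

def lie_split_rollout(names, u0, dt, n_steps):
    """Lie splitting: apply each named sub-flow in sequence at every step."""
    u, traj = u0, [u0]
    for _ in range(n_steps):
        for name in names:
            u = DICTIONARY[name](u, dt)
        traj.append(u)
    return traj

def exact_decay_forcing(u0, t, rate=1.0, b=0.5):
    """Closed-form solution of du/dt = -rate * u + b (the 'unseen' dynamics)."""
    return (u0 - b / rate) * math.exp(-rate * t) + b / rate

def best_composition(observed, u0, dt):
    """Pick the subset of sub-flows whose split rollout best fits the data."""
    n_steps = len(observed) - 1

    def mse(names):
        traj = lie_split_rollout(names, u0, dt, n_steps)
        return sum((p - o) ** 2 for p, o in zip(traj, observed)) / len(observed)

    candidates = [c for r in (1, 2) for c in combinations(DICTIONARY, r)]
    return min(candidates, key=mse)

u0, dt, n = 2.0, 0.01, 100
observed = [exact_decay_forcing(u0, k * dt) for k in range(n + 1)]
best = best_composition(observed, u0, dt)
print(best)
```

In this toy setting the search selects the decay and forcing sub-flows, whose composition matches the combined dynamics up to a first-order splitting error in `dt`. Lie splitting is order-dependent in general; the sketch only tries one ordering per subset.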

Semantic search for 100M+ galaxy images using AI-generated captions

Dec 12, 2025

Abstract:Finding scientifically interesting phenomena through slow, manual labeling campaigns severely limits our ability to explore the billions of galaxy images produced by telescopes. In this work, we develop a pipeline to create a semantic search engine from completely unlabeled image data. Our method leverages Vision-Language Models (VLMs) to generate descriptions for galaxy images, then contrastively aligns a pre-trained multimodal astronomy foundation model with these embedded descriptions to produce searchable embeddings at scale. We find that current VLMs provide descriptions that are sufficiently informative to train a semantic search model that outperforms direct image similarity search. Our model, AION-Search, achieves state-of-the-art zero-shot performance on finding rare phenomena despite training on randomly selected images with no deliberate curation for rare cases. Furthermore, we introduce a VLM-based re-ranking method that nearly doubles the recall for our most challenging targets in the top-100 results. For the first time, AION-Search enables flexible semantic search scalable to 140 million galaxy images, enabling discovery from previously infeasible searches. More broadly, our work provides an approach for making large, unlabeled scientific image archives semantically searchable, expanding data exploration capabilities in fields from Earth observation to microscopy. The code, data, and app are publicly available at https://github.com/NolanKoblischke/AION-Search
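Once images and text queries share an embedding space, retrieval reduces to ranking stored embeddings by similarity to the query embedding. The sketch below shows that ranking step with cosine similarity. It is a minimal illustration, not AION-Search's implementation: the three-dimensional vectors, the image names, and the `top_k` helper are all made up for the example.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, index, k=2):
    """Return the k item names whose embeddings are most similar to the query."""
    ranked = sorted(index.items(), key=lambda kv: cosine(query, kv[1]), reverse=True)
    return [name for name, _ in ranked[:k]]

# Hypothetical image embeddings (real ones would come from the aligned model).
index = {
    "galaxy_a": [0.9, 0.1, 0.0],
    "galaxy_b": [0.1, 0.9, 0.1],
    "galaxy_c": [0.7, 0.3, 0.1],
}
# Hypothetical embedding of a text query.
query = [1.0, 0.2, 0.0]

results = top_k(query, index, k=2)
print(results)  # ['galaxy_a', 'galaxy_c']
```

At the scale of 140 million images, an exhaustive sort like this would typically be replaced by an approximate nearest-neighbor index; the ranking criterion stays the same.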

Walrus: A Cross-Domain Foundation Model for Continuum Dynamics

Nov 19, 2025

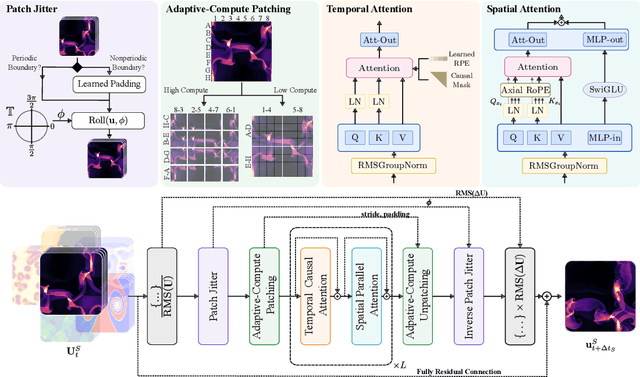

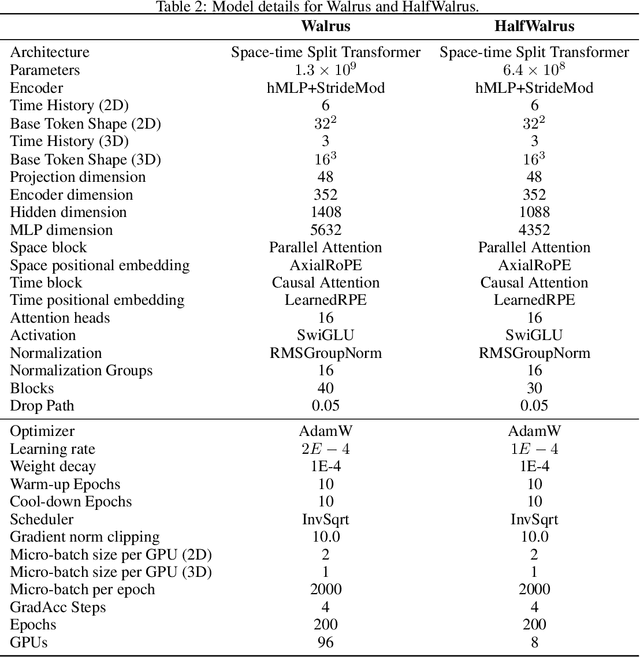

Abstract:Foundation models have transformed machine learning for language and vision, but achieving comparable impact in physical simulation remains a challenge. Data heterogeneity and unstable long-term dynamics inhibit learning from sufficiently diverse dynamics, while varying resolutions and dimensionalities challenge efficient training on modern hardware. Through empirical and theoretical analysis, we incorporate new approaches to mitigate these obstacles, including a harmonic-analysis-based stabilization method, load-balanced distributed 2D and 3D training strategies, and compute-adaptive tokenization. Using these tools, we develop Walrus, a transformer-based foundation model developed primarily for fluid-like continuum dynamics. Walrus is pretrained on nineteen diverse scenarios spanning astrophysics, geoscience, rheology, plasma physics, acoustics, and classical fluids. Experiments show that Walrus outperforms prior foundation models on both short and long term prediction horizons on downstream tasks and across the breadth of pretraining data, while ablation studies confirm the value of our contributions to forecast stability, training throughput, and transfer performance over conventional approaches. Code and weights are released for community use.

Accelerating scientific discovery with the common task framework

Nov 06, 2025

Abstract:Machine learning (ML) and artificial intelligence (AI) algorithms are transforming and empowering the characterization and control of dynamic systems in the engineering, physical, and biological sciences. These emerging modeling paradigms require comparative metrics to evaluate a diverse set of scientific objectives, including forecasting, state reconstruction, generalization, and control, while also considering limited data scenarios and noisy measurements. We introduce a common task framework (CTF) for science and engineering, which features a growing collection of challenge data sets with a diverse set of practical and common objectives. The CTF is a critically enabling technology that has contributed to the rapid advance of ML/AI algorithms in traditional applications such as speech recognition, language processing, and computer vision. There is a critical need for the objective metrics of a CTF to compare the diverse algorithms being rapidly developed and deployed in practice today across science and engineering.

Lost in Latent Space: An Empirical Study of Latent Diffusion Models for Physics Emulation

Jul 03, 2025

Abstract:The steep computational cost of diffusion models at inference hinders their use as fast physics emulators. In the context of image and video generation, this computational drawback has been addressed by generating in the latent space of an autoencoder instead of the pixel space. In this work, we investigate whether a similar strategy can be effectively applied to the emulation of dynamical systems and at what cost. We find that the accuracy of latent-space emulation is surprisingly robust to a wide range of compression rates (up to 1000x). We also show that diffusion-based emulators are consistently more accurate than non-generative counterparts and compensate for uncertainty in their predictions with greater diversity. Finally, we cover practical design choices, spanning from architectures to optimizers, that we found critical to train latent-space emulators.

The Well: a Large-Scale Collection of Diverse Physics Simulations for Machine Learning

Nov 30, 2024

Abstract:Machine learning based surrogate models offer researchers powerful tools for accelerating simulation-based workflows. However, as standard datasets in this space often cover small classes of physical behavior, it can be difficult to evaluate the efficacy of new approaches. To address this gap, we introduce the Well: a large-scale collection of datasets containing numerical simulations of a wide variety of spatiotemporal physical systems. The Well draws from domain experts and numerical software developers to provide 15TB of data across 16 datasets covering diverse domains such as biological systems, fluid dynamics, and acoustic scattering, as well as magneto-hydrodynamic simulations of extra-galactic fluids or supernova explosions. These datasets can be used individually or as part of a broader benchmark suite. To facilitate usage of the Well, we provide a unified PyTorch interface for training and evaluating models. We demonstrate the function of this library by introducing example baselines that highlight the new challenges posed by the complex dynamics of the Well. The code and data are available at https://github.com/PolymathicAI/the_well.

ASURA-FDPS-ML: Star-by-star Galaxy Simulations Accelerated by Surrogate Modeling for Supernova Feedback

Oct 30, 2024

Abstract:We introduce new high-resolution galaxy simulations accelerated by a surrogate model that reduces the computation cost by approximately 75 percent. Massive stars with a Zero Age Main Sequence mass of about 8 solar masses and above explode as core-collapse supernovae (CCSNe), which play a critical role in galaxy formation. The energy released by CCSNe is essential for regulating star formation and driving feedback processes in the interstellar medium (ISM). However, the short integration timesteps required for SNe feedback present significant bottlenecks in star-by-star galaxy simulations that aim to capture individual stellar dynamics and the inhomogeneous shell expansion of SNe within the turbulent ISM. Our new framework combines direct numerical simulations and surrogate modeling, including machine learning and Gibbs sampling. The star formation history and the time evolution of outflow rates in the galaxy match those obtained from resolved direct numerical simulations. Our new approach achieves high-resolution fidelity while reducing computational costs, effectively bridging the physical scale gap and enabling multi-scale simulations.

Accelerating Giant Impact Simulations with Machine Learning

Aug 16, 2024

Abstract:Constraining planet formation models based on the observed exoplanet population requires generating large samples of synthetic planetary systems, which can be computationally prohibitive. A significant bottleneck is simulating the giant impact phase, during which planetary embryos evolve gravitationally and combine to form planets, which may themselves experience later collisions. To accelerate giant impact simulations, we present a machine learning (ML) approach to predicting collisional outcomes in multiplanet systems. We develop an ML model, trained on more than 500,000 $N$-body simulations of three-planet systems, that can accurately predict which two planets will experience a collision, along with the state of the post-collision planets, from a short integration of the system's initial conditions. Our model greatly improves on non-ML baselines that rely on metrics from dynamics theory, which struggle to accurately predict which pair of planets will experience a collision. By combining it with a model for predicting long-term stability, we create an efficient ML-based giant impact emulator, which can predict the outcomes of giant impact simulations with a speedup of up to four orders of magnitude. We expect our model to enable analyses that would not otherwise be computationally feasible. As such, we release our full training code, along with an easy-to-use API for our collision outcome model and giant impact emulator.

Contextual Counting: A Mechanistic Study of Transformers on a Quantitative Task

May 30, 2024

Abstract:Transformers have revolutionized machine learning across diverse domains, yet understanding their behavior remains crucial, particularly in high-stakes applications. This paper introduces the contextual counting task, a novel toy problem aimed at enhancing our understanding of Transformers in quantitative and scientific contexts. This task requires precise localization and computation within datasets, akin to object detection or region-based scientific analysis. We present theoretical and empirical analysis using both causal and non-causal Transformer architectures, investigating the influence of various positional encodings on performance and interpretability. In particular, we find that causal attention is much better suited for the task, and that using no positional embeddings leads to the best accuracy, though rotary embeddings are competitive and easier to train. We also show that out-of-distribution performance is tightly linked to which tokens the model uses as a bias term.

Surrogate Modeling for Computationally Expensive Simulations of Supernovae in High-Resolution Galaxy Simulations

Nov 14, 2023

Abstract:Some stars explode at the end of their lives as supernovae (SNe). SNe release a substantial amount of matter and energy into the interstellar medium, resulting in significant feedback to star formation and gas dynamics in a galaxy. While such feedback plays a crucial role in galaxy formation and evolution, in simulations of galaxy formation it has only been implemented using simple sub-grid models, rather than by numerically solving the evolution of gas elements around SNe in detail, due to a lack of resolution. We develop a method combining machine learning and Gibbs sampling to predict how a supernova (SN) affects the surrounding gas. The fidelity of our model in the thermal energy and momentum distribution exceeds that of low-resolution SN simulations. Our method can replace the SN sub-grid models and help properly simulate unresolved SN feedback in galaxy formation simulations. We find that employing our new approach reduces the necessary computational cost to $\sim$1 percent of that required to directly resolve SN feedback.
