Siavash Golkar

Walrus: A Cross-Domain Foundation Model for Continuum Dynamics

Nov 19, 2025

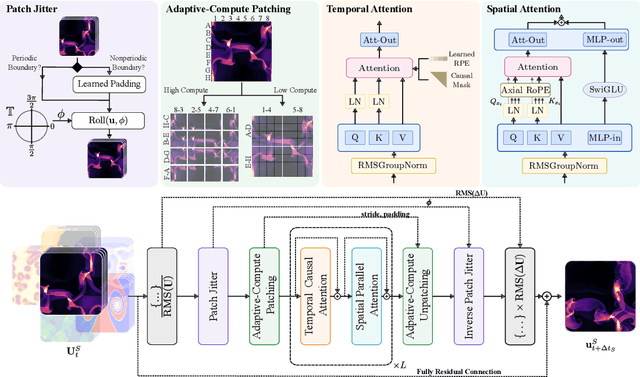

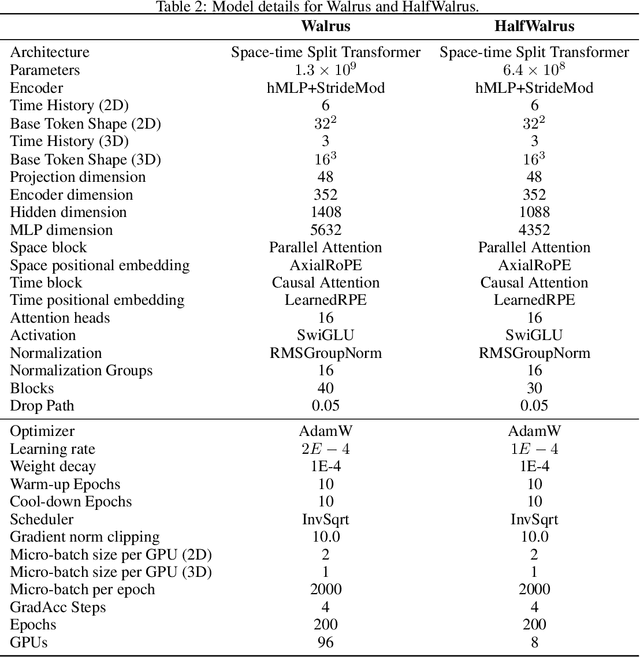

Abstract:Foundation models have transformed machine learning for language and vision, but achieving comparable impact in physical simulation remains a challenge. Data heterogeneity and unstable long-term dynamics inhibit learning from sufficiently diverse dynamics, while varying resolutions and dimensionalities challenge efficient training on modern hardware. Through empirical and theoretical analysis, we incorporate new approaches to mitigate these obstacles, including a harmonic-analysis-based stabilization method, load-balanced distributed 2D and 3D training strategies, and compute-adaptive tokenization. Using these tools, we develop Walrus, a transformer-based foundation model developed primarily for fluid-like continuum dynamics. Walrus is pretrained on nineteen diverse scenarios spanning astrophysics, geoscience, rheology, plasma physics, acoustics, and classical fluids. Experiments show that Walrus outperforms prior foundation models on both short and long term prediction horizons on downstream tasks and across the breadth of pretraining data, while ablation studies confirm the value of our contributions to forecast stability, training throughput, and transfer performance over conventional approaches. Code and weights are released for community use.

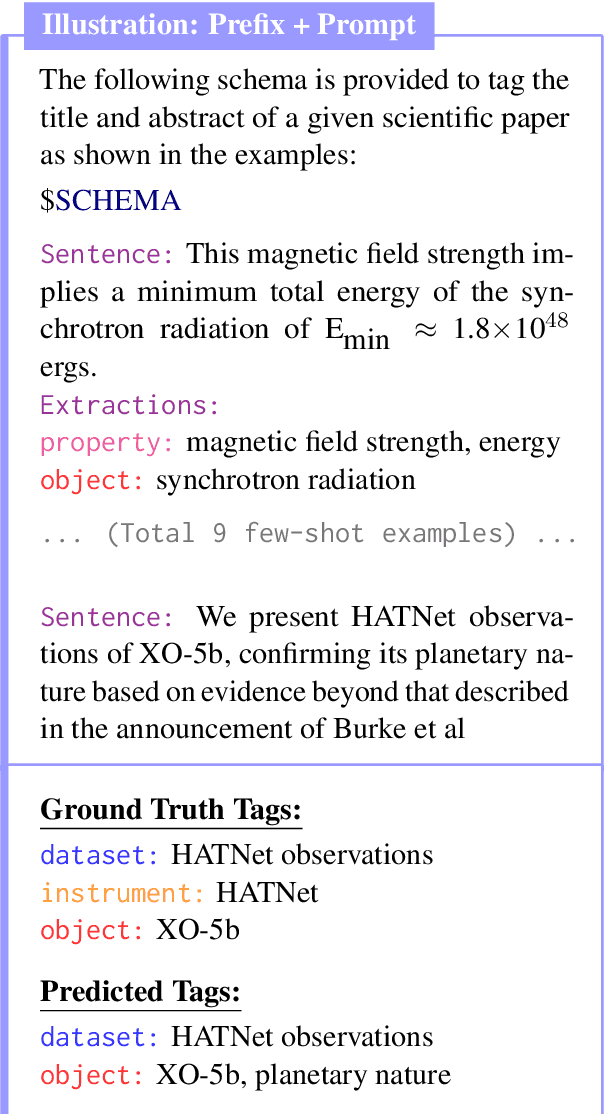

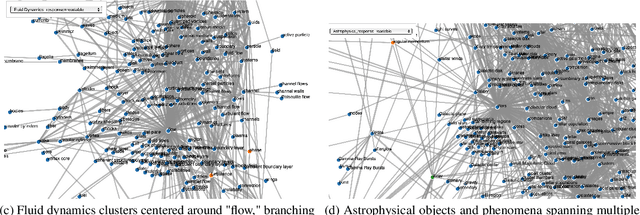

What's In Your Field? Mapping Scientific Research with Knowledge Graphs and Large Language Models

Mar 12, 2025

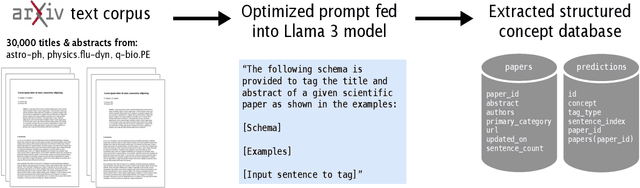

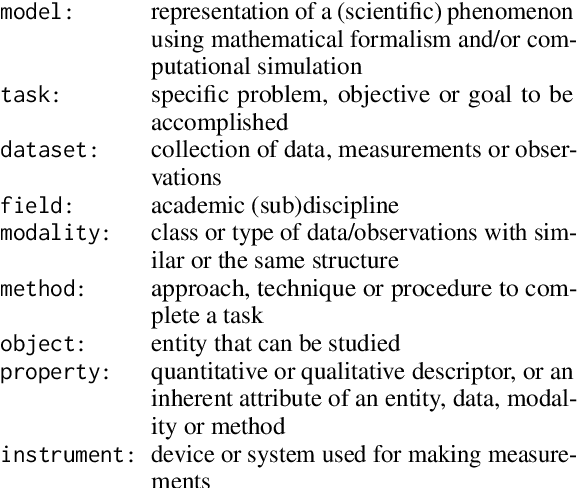

Abstract:The scientific literature's exponential growth makes it increasingly challenging to navigate and synthesize knowledge across disciplines. Large language models (LLMs) are powerful tools for understanding scientific text, but they fail to capture detailed relationships across large bodies of work. Unstructured approaches, like retrieval augmented generation, can sift through such corpora to recall relevant facts; however, when millions of facts influence the answer, unstructured approaches become cost prohibitive. Structured representations offer a natural complement -- enabling systematic analysis across the whole corpus. Recent work enhances LLMs with unstructured or semistructured representations of scientific concepts; to complement this, we try extracting structured representations using LLMs. By combining LLMs' semantic understanding with a schema of scientific concepts, we prototype a system that answers precise questions about the literature as a whole. Our schema applies across scientific fields and we extract concepts from it using only 20 manually annotated abstracts. To demonstrate the system, we extract concepts from 30,000 papers on arXiv spanning astrophysics, fluid dynamics, and evolutionary biology. The resulting database highlights emerging trends and, by visualizing the knowledge graph, offers new ways to explore the ever-growing landscape of scientific knowledge. Demo: abby101/surveyor-0 on HF Spaces. Code: https://github.com/chiral-carbon/kg-for-science.

Contextual Counting: A Mechanistic Study of Transformers on a Quantitative Task

May 30, 2024

Abstract:Transformers have revolutionized machine learning across diverse domains, yet understanding their behavior remains crucial, particularly in high-stakes applications. This paper introduces the contextual counting task, a novel toy problem aimed at enhancing our understanding of Transformers in quantitative and scientific contexts. This task requires precise localization and computation within datasets, akin to object detection or region-based scientific analysis. We present theoretical and empirical analysis using both causal and non-causal Transformer architectures, investigating the influence of various positional encodings on performance and interpretability. In particular, we find that causal attention is much better suited for the task, and that using no positional embeddings leads to the best accuracy, though rotary embeddings are competitive and easier to train. We also show that out-of-distribution performance is tightly linked to which tokens the model uses as a bias term.

Neuronal Temporal Filters as Normal Mode Extractors

Jan 06, 2024

Abstract:To generate actions in the face of physiological delays, the brain must predict the future. Here we explore how prediction may lie at the core of brain function by considering a neuron predicting the future of a scalar time series input. Assuming that the dynamics of the lag vector (a vector composed of several consecutive elements of the time series) are locally linear, Normal Mode Decomposition decomposes the dynamics into independently evolving (eigen-)modes allowing for straightforward prediction. We propose that a neuron learns the top mode and projects its input onto the associated subspace. Under this interpretation, the temporal filter of a neuron corresponds to the left eigenvector of a generalized eigenvalue problem. We mathematically analyze the operation of such an algorithm on noisy observations of synthetic data generated by a linear system. Interestingly, the shape of the temporal filter varies with the signal-to-noise ratio (SNR): a noisy input yields a monophasic filter and a growing SNR leads to multiphasic filters with progressively greater number of phases. Such variation in the temporal filter with input SNR resembles that observed experimentally in biological neurons.

xVal: A Continuous Number Encoding for Large Language Models

Oct 04, 2023

Abstract:Large Language Models have not yet been broadly adapted for the analysis of scientific datasets due in part to the unique difficulties of tokenizing numbers. We propose xVal, a numerical encoding scheme that represents any real number using just a single token. xVal represents a given real number by scaling a dedicated embedding vector by the number value. Combined with a modified number-inference approach, this strategy renders the model end-to-end continuous when considered as a map from the numbers of the input string to those of the output string. This leads to an inductive bias that is generally more suitable for applications in scientific domains. We empirically evaluate our proposal on a number of synthetic and real-world datasets. Compared with existing number encoding schemes, we find that xVal is more token-efficient and demonstrates improved generalization.
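The core idea in the xVal abstract, a single number token whose embedding is scaled by the number's value, can be illustrated with a minimal sketch. The `[NUM]` token name, the whitespace tokenizer, and the random embedding table below are illustrative assumptions, not details taken from the paper; a real model would learn the table and would likely normalize the numeric values first.

```python
import math
import random

NUM_TOKEN = "[NUM]"  # hypothetical name for the single shared number token


def split_numbers(text):
    """Split on whitespace and replace each finite numeric literal with NUM_TOKEN.

    Returns two parallel lists: the tokens and a per-token scale
    (the numeric value for numbers, 1.0 for ordinary words).
    """
    tokens, scales = [], []
    for piece in text.split():
        try:
            value = float(piece)
        except ValueError:
            value = None
        if value is not None and math.isfinite(value):
            tokens.append(NUM_TOKEN)
            scales.append(value)
        else:
            tokens.append(piece)
            scales.append(1.0)
    return tokens, scales


def make_table(vocab, dim, seed=0):
    """Random stand-in for a learned embedding table (assumption)."""
    rng = random.Random(seed)
    return {tok: [rng.gauss(0.0, 1.0) for _ in range(dim)] for tok in vocab}


def embed(tokens, scales, table):
    """Look up each token's vector and multiply it by that token's scale."""
    return [[s * x for x in table[t]] for t, s in zip(tokens, scales)]


tokens, scales = split_numbers("mass 2.5 kg")
table = make_table(sorted(set(tokens)), dim=4)
vectors = embed(tokens, scales, table)
```

Because every number shares one embedding direction, doubling the input value doubles the embedded vector, which is the sense in which the input side of the map is continuous in this sketch.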

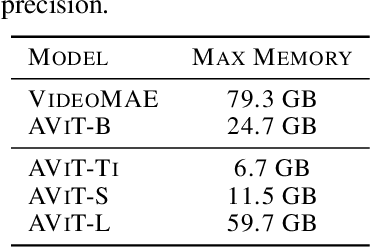

Multiple Physics Pretraining for Physical Surrogate Models

Oct 04, 2023

Abstract:We introduce multiple physics pretraining (MPP), an autoregressive task-agnostic pretraining approach for physical surrogate modeling. MPP involves training large surrogate models to predict the dynamics of multiple heterogeneous physical systems simultaneously by learning features that are broadly useful across diverse physical tasks. In order to learn effectively in this setting, we introduce a shared embedding and normalization strategy that projects the fields of multiple systems into a single shared embedding space. We validate the efficacy of our approach on both pretraining and downstream tasks over a broad fluid mechanics-oriented benchmark. We show that a single MPP-pretrained transformer is able to match or outperform task-specific baselines on all pretraining sub-tasks without the need for finetuning. For downstream tasks, we demonstrate that finetuning MPP-trained models results in more accurate predictions across multiple time-steps on new physics compared to training from scratch or finetuning pretrained video foundation models. We open-source our code and model weights trained at multiple scales for reproducibility and community experimentation.

AstroCLIP: Cross-Modal Pre-Training for Astronomical Foundation Models

Oct 04, 2023

Abstract:We present AstroCLIP, a strategy to facilitate the construction of astronomical foundation models that bridge the gap between diverse observational modalities. We demonstrate that a cross-modal contrastive learning approach between images and optical spectra of galaxies yields highly informative embeddings of both modalities. In particular, we apply our method on multi-band images and optical spectra from the Dark Energy Spectroscopic Instrument (DESI), and show that: (1) these embeddings are well-aligned between modalities and can be used for accurate cross-modal searches, and (2) these embeddings encode valuable physical information about the galaxies -- in particular redshift and stellar mass -- that can be used to achieve competitive zero- and few-shot predictions without further finetuning. Additionally, in the process of developing our approach, we also construct a novel, transformer-based model and pretraining approach for processing galaxy spectra.
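To make "cross-modal contrastive learning" concrete, here is a minimal sketch of a symmetric CLIP-style (InfoNCE) loss over paired image and spectrum embeddings. The abstract does not specify the exact loss, so this particular form, the function names, and the `temperature` value are assumptions for illustration; embeddings are assumed to be non-zero vectors.

```python
import math


def normalize(v):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def diagonal_cross_entropy(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_sum = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum - row[i]
    return total / len(logits)


def symmetric_contrastive_loss(image_emb, spectrum_emb, temperature=0.1):
    """Average of image-to-spectrum and spectrum-to-image cross-entropy.

    Row i of each input is assumed to describe the same galaxy.
    """
    img = [normalize(v) for v in image_emb]
    spec = [normalize(v) for v in spectrum_emb]
    # Cosine similarity between every image and every spectrum.
    logits = [[sum(a * b for a, b in zip(i, s)) / temperature for s in spec]
              for i in img]
    logits_t = [list(col) for col in zip(*logits)]
    return 0.5 * (diagonal_cross_entropy(logits) + diagonal_cross_entropy(logits_t))


images = [[1.0, 0.0], [0.0, 1.0]]
aligned = symmetric_contrastive_loss(images, [[2.0, 0.0], [0.0, 3.0]])
swapped = symmetric_contrastive_loss(images, [[0.0, 3.0], [2.0, 0.0]])
```

In this toy example the loss is small when each image points in the same direction as its own spectrum and large when the pairs are swapped, which is the pressure that aligns the two embedding spaces.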

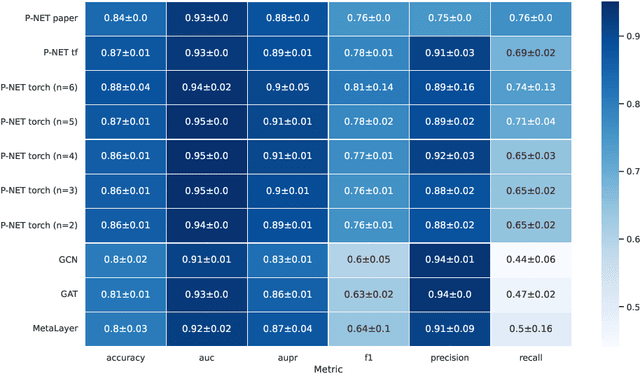

Reusability report: Prostate cancer stratification with diverse biologically-informed neural architectures

Sep 28, 2023

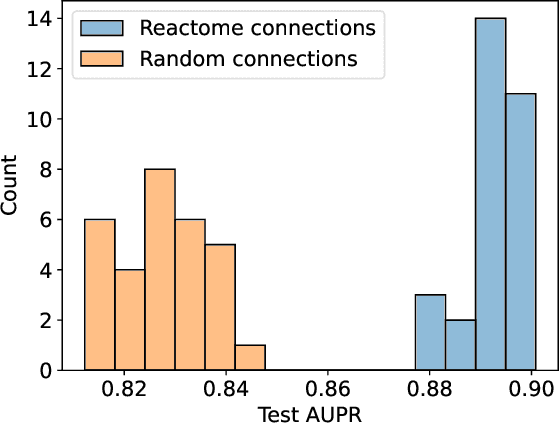

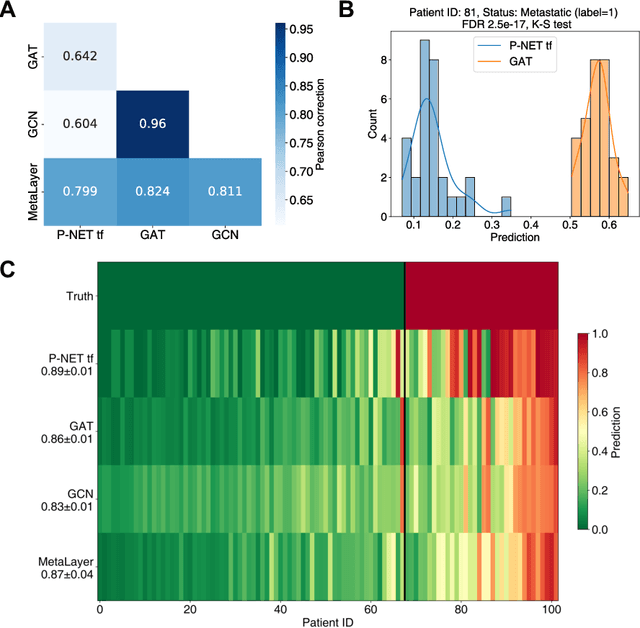

Abstract:In Elmarakeby et al., "Biologically informed deep neural network for prostate cancer discovery", a feedforward neural network with biologically informed, sparse connections (P-NET) was presented to model the state of prostate cancer. We verified the reproducibility of the study conducted by Elmarakeby et al., using both their original codebase and our own re-implementation using more up-to-date libraries. We quantified the contribution of network sparsification by Reactome biological pathways, and confirmed its importance to P-NET's superior performance. Furthermore, we explored alternative neural architectures and approaches to incorporating biological information into the networks. We experimented with three types of graph neural networks on the same training data, and investigated the clinical prediction agreement between different models. Our analyses demonstrated that deep neural networks with distinct architectures make incorrect predictions for individual patients that are persistent across different initializations of a specific neural architecture. This suggests that different neural architectures are sensitive to different aspects of the data, an important yet under-explored challenge for clinical prediction tasks.

A normative framework for deriving neural networks with multi-compartmental neurons and non-Hebbian plasticity

Feb 20, 2023

Abstract:An established normative approach for understanding the algorithmic basis of neural computation is to derive online algorithms from principled computational objectives and evaluate their compatibility with anatomical and physiological observations. Similarity matching objectives have served as successful starting points for deriving online algorithms that map onto neural networks (NNs) with point neurons and Hebbian/anti-Hebbian plasticity. These NN models account for many anatomical and physiological observations; however, the objectives have limited computational power and the derived NNs do not explain multi-compartmental neuronal structures and non-Hebbian forms of plasticity that are prevalent throughout the brain. In this article, we review and unify recent extensions of the similarity matching approach to address more complex objectives, including a broad range of unsupervised and self-supervised learning tasks that can be formulated as generalized eigenvalue problems or nonnegative matrix factorization problems. Interestingly, the online algorithms derived from these objectives naturally map onto NNs with multi-compartmental neurons and local, non-Hebbian learning rules. Therefore, this unified extension of the similarity matching approach provides a normative framework that facilitates understanding the multi-compartmental neuronal structures and non-Hebbian plasticity found throughout the brain.

An online algorithm for contrastive Principal Component Analysis

Nov 14, 2022

Abstract:Finding informative low-dimensional representations that can be computed efficiently in large datasets is an important problem in data analysis. Recently, contrastive Principal Component Analysis (cPCA) was proposed as a more informative generalization of PCA that takes advantage of contrastive learning. However, the performance of cPCA is sensitive to hyper-parameter choice and there is currently no online algorithm for implementing cPCA. Here, we introduce a modified cPCA method, which we denote cPCA*, that is more interpretable and less sensitive to the choice of hyper-parameter. We derive an online algorithm for cPCA* and show that it maps onto a neural network with local learning rules, so it can potentially be implemented in energy efficient neuromorphic hardware. We evaluate the performance of our online algorithm on real datasets and highlight the differences and similarities with the original formulation.
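For orientation, the sketch below illustrates the commonly stated offline form of cPCA, which takes the leading eigenvector of the contrast matrix C_target − α·C_background. It is not the cPCA* variant or the online algorithm from the paper, neither of which the abstract specifies. The function names, the power-iteration solver, and the toy data are all assumptions made for illustration.

```python
import math


def covariance(points):
    """Covariance matrix (dividing by n) of a list of equal-length points."""
    n, d = len(points), len(points[0])
    mean = [sum(p[j] for p in points) / n for j in range(d)]
    return [[sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in points) / n
             for j in range(d)] for i in range(d)]


def top_eigenvector(matrix, iterations=500):
    """Eigenvector for the largest algebraic eigenvalue of a symmetric matrix.

    Uses power iteration on matrix + shift*I; the shift (a Gershgorin bound)
    makes all eigenvalues non-negative so the largest one dominates.
    """
    d = len(matrix)
    shift = max(sum(abs(x) for x in row) for row in matrix)
    v = [1.0 / math.sqrt(d)] * d  # assumed not orthogonal to the answer
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(d)) + shift * v[i]
             for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # all-zero matrix: every direction is equivalent
            return v
        v = [x / norm for x in w]
    return v


def cpca_direction(target, background, alpha):
    """Leading direction of C_target - alpha * C_background."""
    c_t, c_b = covariance(target), covariance(background)
    d = len(c_t)
    contrast = [[c_t[i][j] - alpha * c_b[i][j] for j in range(d)]
                for i in range(d)]
    return top_eigenvector(contrast)


# Toy data: both sets vary strongly along x; only the target varies along y.
target = [(3.0, 1.0), (-3.0, 1.0), (3.0, -1.0), (-3.0, -1.0)]
background = [(3.0, 0.1), (-3.0, 0.1), (3.0, -0.1), (-3.0, -0.1)]
pca_dir = cpca_direction(target, background, alpha=0.0)   # ordinary PCA
cpca_dir = cpca_direction(target, background, alpha=1.0)  # contrastive
```

With `alpha=0.0` the direction follows the shared high-variance x axis, while `alpha=1.0` cancels that shared variance and picks out the y axis that is specific to the target. The result depends on α, which is the hyper-parameter sensitivity the abstract refers to.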
