Robert J. Wood

John A. Paulson School of Engineering and Applied Sciences

Taxonomy and Modular Tool System for Versatile and Effective Non-Prehensile Manipulations

Dec 11, 2025

Abstract:General-purpose robotic end-effectors of limited complexity, like the parallel-jaw gripper, are appealing for their balance of simplicity and effectiveness in a wide range of manipulation tasks. However, while many such manipulators offer versatility in grasp-like interactions, they are not optimized for non-prehensile actions like pressing, rubbing, or scraping, which many common tasks require. To perform such tasks, humans use a range of body parts or tools with different rigidity, friction, and other properties, choosing whichever is most effective for the task at hand. Here, we discuss a taxonomy for the key properties of a non-actuated end-effector, laying the groundwork for a systematic understanding of the affordances of non-prehensile manipulators. We then present a modular tool system, based on the taxonomy, that a standard two-fingered gripper can use to extend its versatility and effectiveness in performing such actions. We demonstrate the application of the tool system in aerospace and household scenarios that require a range of non-prehensile and prehensile manipulations.

WiSER-X: Wireless Signals-based Efficient Decentralized Multi-Robot Exploration without Explicit Information Exchange

Dec 27, 2024

Abstract:We introduce a Wireless Signal based Efficient multi-Robot eXploration (WiSER-X) algorithm for a decentralized team of robots exploring an unknown environment under communication bandwidth constraints. WiSER-X relies only on local inter-robot relative position estimates, which can be obtained by exchanging signal pings from onboard sensors such as WiFi and Ultra-Wide Band, among others, to inform the exploration decisions of individual robots and minimize redundant coverage overlap. WiSER-X also enables asynchronous termination without requiring a shared map between the robots. It adapts to heterogeneous robot behaviors, and even to complete failures, in unknown environments while still ensuring complete coverage. Simulations show that WiSER-X yields 58% less overlap than a zero-information-sharing baseline (baseline algorithm-1) and only 23% more overlap than a full-information-sharing baseline (baseline algorithm-2).

Hardware-in-the-Loop for Characterization of Embedded State Estimation for Flying Microrobots

Nov 10, 2024

Abstract:Autonomous flapping-wing micro-aerial vehicles (FWMAVs) have a host of potential applications such as environmental monitoring, artificial pollination, and search and rescue operations. One of the challenges for achieving these applications is implementing an onboard sensor suite, given the small size and limited payload capacity of FWMAVs. The current solution for accurate state estimation is the use of offboard motion capture cameras, which restricts vehicle operation to a special flight arena. In addition, the small payload capacity and highly nonlinear oscillating dynamics of FWMAVs make state estimation using onboard sensors challenging due to limited compute power and sensor noise. In this paper, we develop a novel hardware-in-the-loop (HWIL) testing pipeline that recreates flight trajectories of the Harvard RoboBee, a 100 mg FWMAV. We apply this testing pipeline to evaluate a potential suite of sensors for robust altitude and attitude estimation by implementing and characterizing a Complementary Extended Kalman Filter. The HWIL system includes a mechanical noise generator, such that both trajectories and oscillations can be emulated and evaluated. Our onboard sensing package works towards the future goal of enabling fully autonomous control for micro-aerial vehicles.
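
As a rough illustration of the complementary-filtering idea named in this abstract, the sketch below fuses a gyroscope and an accelerometer to estimate a single tilt angle. It is a plain scalar complementary filter, not the Complementary Extended Kalman Filter characterized in the paper; the function name, the blend factor alpha = 0.98, the axis convention, and the simulated sensor values are illustrative assumptions.

```python
import math


def complementary_filter(pitch_prev: float, gyro_rate: float,
                         accel_x: float, accel_z: float,
                         dt: float, alpha: float = 0.98) -> float:
    """One update of a scalar complementary filter for pitch, in radians.

    Illustrative sketch only (not the paper's estimator). Assumed axis
    convention: a positive pitch tilts the measured gravity vector toward +x.
    """
    # Gyro path: integrating the angular rate is smooth but drifts with bias.
    pitch_gyro = pitch_prev + gyro_rate * dt
    # Accelerometer path: tilt from the gravity direction is noisy but drift-free.
    pitch_accel = math.atan2(accel_x, accel_z)
    # Trust the gyro at high frequency and the accelerometer at low frequency.
    return alpha * pitch_gyro + (1.0 - alpha) * pitch_accel


# Usage: a stationary sensor held at 0.2 rad, read at 100 Hz for 10 s,
# with a gyro that reports a constant 0.01 rad/s bias instead of zero.
true_pitch, gyro_bias, g = 0.2, 0.01, 9.81
pitch = 0.0
for _ in range(1000):
    pitch = complementary_filter(pitch, gyro_bias,
                                 g * math.sin(true_pitch),
                                 g * math.cos(true_pitch), dt=0.01)
print(f"{pitch:.4f}")
```

In this toy run the estimate settles about 0.0049 rad above the true angle, the steady-state offset alpha * bias * dt / (1 - alpha) caused by the gyro bias, whereas integrating the gyro alone would drift by 0.1 rad over the same 10 s. Raising alpha smooths the estimate but enlarges that offset and slows the correction.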

Reprogrammable sequencing for physically intelligent under-actuated robots

Sep 05, 2024

Abstract:Programming physical intelligence into mechanisms holds great promise for machines that can accomplish tasks such as navigating unstructured environments while using minimal computational resources and electronic components. In this study, we introduce a novel design approach for physically intelligent under-actuated mechanisms capable of autonomously adjusting their motion in response to environmental interactions. Specifically, multistability is harnessed to sequence the motion of different degrees of freedom in a programmed order. A key aspect of this approach is that these sequences can be passively reprogrammed through mechanical stimuli that arise from interactions with the environment. To showcase our approach, we construct a four-degree-of-freedom robot capable of autonomously navigating mazes and moving away from obstacles. Remarkably, this robot operates without relying on traditional computational architectures and uses only a single linear actuator.

Follow Anything: Open-set detection, tracking, and following in real-time

Aug 10, 2023

Abstract:Tracking and following objects of interest is critical to several robotics use cases, ranging from industrial automation to logistics and warehousing, to healthcare and security. In this paper, we present a robotic system to detect, track, and follow any object in real time. Our approach, dubbed "follow anything" (FAn), is an open-vocabulary and multimodal model: it is not restricted to concepts seen at training time and can be applied to novel classes at inference time using text, images, or click queries. Leveraging rich visual descriptors from large-scale pre-trained models (foundation models), FAn can detect and segment objects by matching multimodal queries (text, images, clicks) against an input image sequence. These detected and segmented objects are tracked across image frames, all while accounting for occlusion and object re-emergence. We demonstrate FAn on a real-world robotic system (a micro aerial vehicle) and report its ability to seamlessly follow the objects of interest in a real-time control loop. FAn can be deployed on a laptop with a lightweight (6-8 GB) graphics card, achieving a throughput of 6-20 frames per second. To enable rapid adoption, deployment, and extensibility, we open-source all our code on our project webpage (https://github.com/alaamaalouf/FollowAnything). We also encourage the reader to watch our 5-minute explainer video (https://www.youtube.com/watch?v=6Mgt3EPytrw).
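
To make the query-matching step concrete, the sketch below picks, from a set of segmented regions, the one whose descriptor is most similar to a query descriptor under cosine similarity. This is a generic nearest-descriptor match under stated assumptions, not FAn's actual code: the descriptors are assumed to have been computed already by some pre-trained model, the 3-dimensional vectors are toy stand-ins for real feature vectors, and the names `match_query` and `threshold` are hypothetical.

```python
import math
from typing import Dict, List, Optional


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two descriptor vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def match_query(query: List[float], segments: Dict[int, List[float]],
                threshold: float = 0.5) -> Optional[int]:
    """Return the id of the best-matching segment, or None if none clears threshold.

    Hypothetical helper: `segments` maps a segment id to a descriptor assumed
    to be precomputed by a pre-trained vision model.
    """
    best_id: Optional[int] = None
    best_score = threshold
    for seg_id, descriptor in segments.items():
        score = cosine_similarity(query, descriptor)
        if score >= best_score:
            best_id, best_score = seg_id, score
    return best_id


# Toy descriptors for three segmented regions in one frame.
segments = {0: [1.0, 0.0, 0.0], 1: [0.9, 0.1, 0.0], 2: [0.0, 1.0, 0.0]}
print(match_query([0.0, 0.95, 0.05], segments))  # prints 2: closest region
print(match_query([0.0, 0.0, 1.0], segments))    # prints None: no region is similar enough
```

The threshold is the design choice worth noting: it lets a matcher of this kind report that the queried object is absent (for example, while it is occluded) rather than locking onto the least-bad region.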

Cetacean Translation Initiative: a roadmap to deciphering the communication of sperm whales

Apr 17, 2021

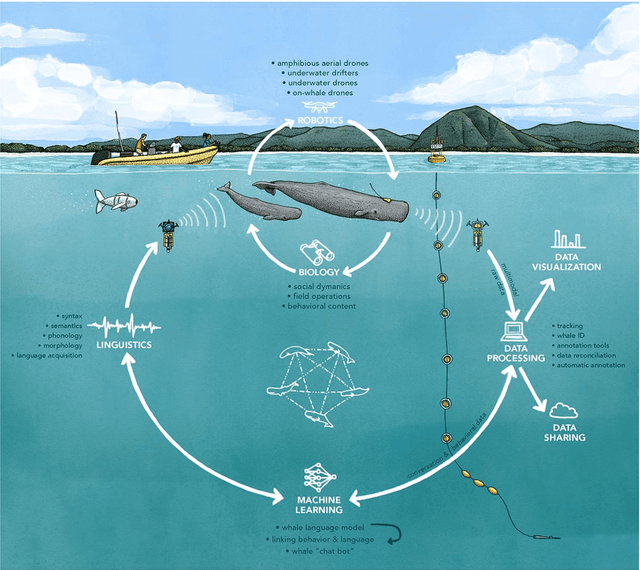

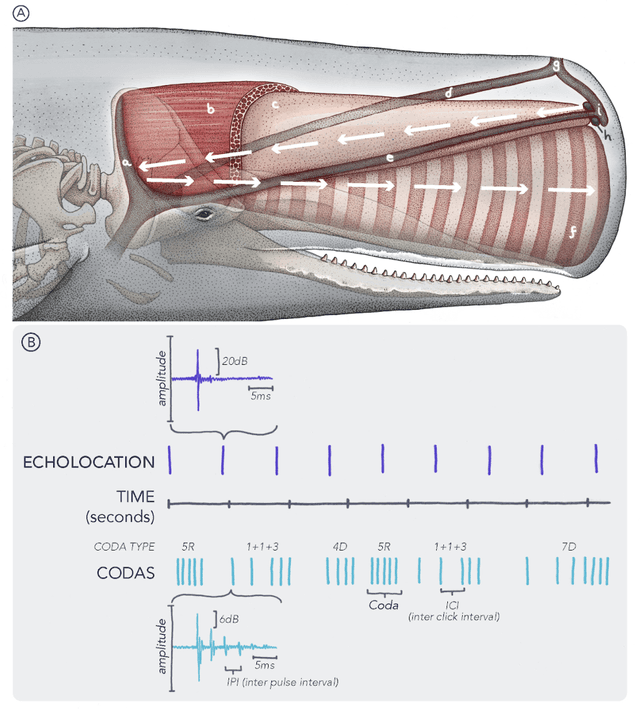

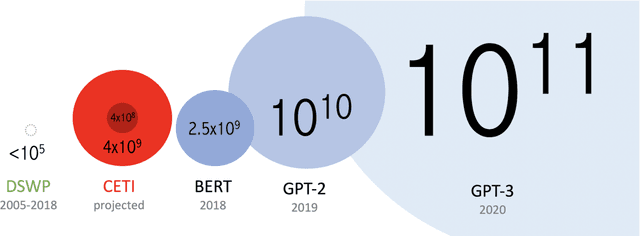

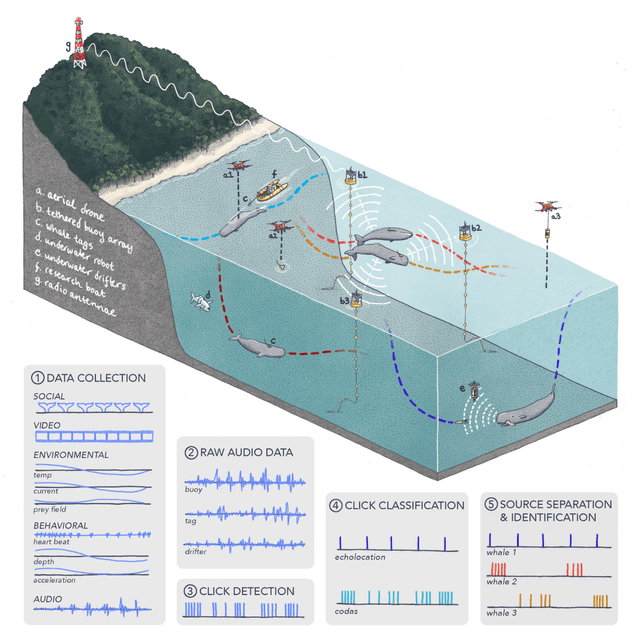

Abstract:The past decade has witnessed a groundbreaking rise of machine learning for human language analysis, with current methods capable of automatically and accurately recovering various aspects of syntax and semantics, including sentence structure and grounded word meaning, from large data collections. Recent research has shown the promise of such tools for analyzing acoustic communication in non-human species. We posit that machine learning will be the cornerstone of future collection, processing, and analysis of multimodal streams of data in animal communication studies, including bioacoustic, behavioral, biological, and environmental data. Cetaceans are unique non-human model species: they possess sophisticated acoustic communication but use a very different encoding system that evolved in an aquatic rather than terrestrial medium. Sperm whales in particular, with their highly developed neuroanatomical features, cognitive abilities, social structures, and discrete click-based encoding, make an excellent starting point for advanced machine learning tools that can later be applied to other animals. This paper details a roadmap toward this goal based on existing technology and a multidisciplinary scientific community effort. We outline the key elements required for collecting and processing massive bioacoustic data from sperm whales, detecting their basic communication units and language-like higher-level structures, and validating these models through interactive playback experiments. The technological capabilities developed by such an undertaking are likely to yield cross-applications and advancements in broader communities investigating non-human communication and animal behavioral research.

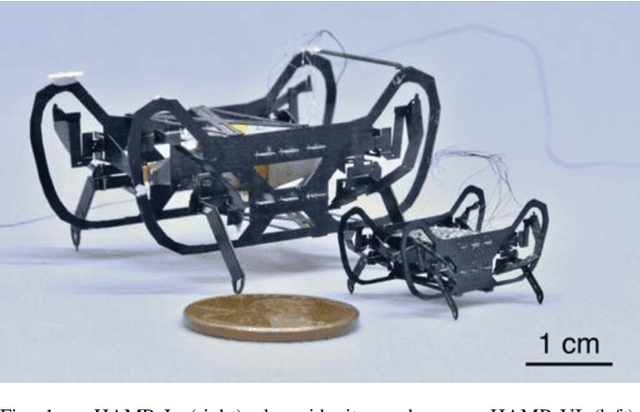

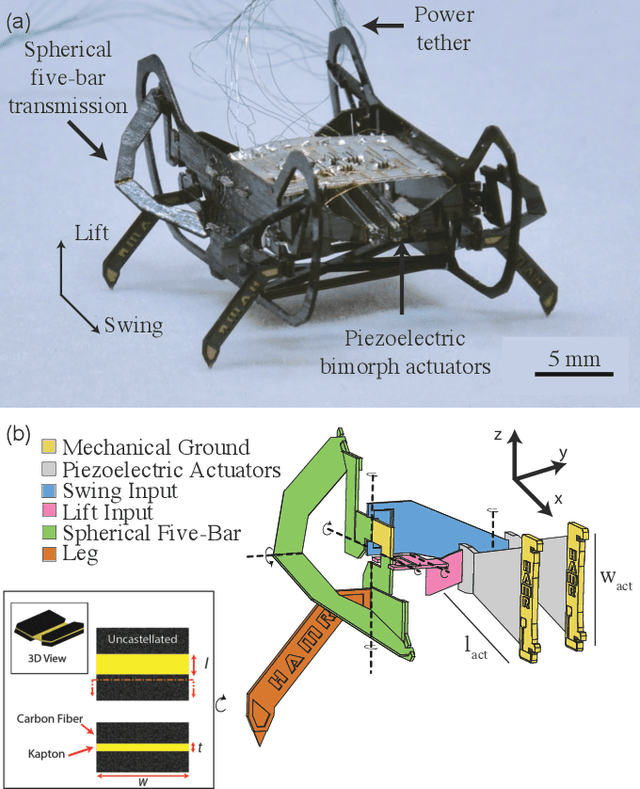

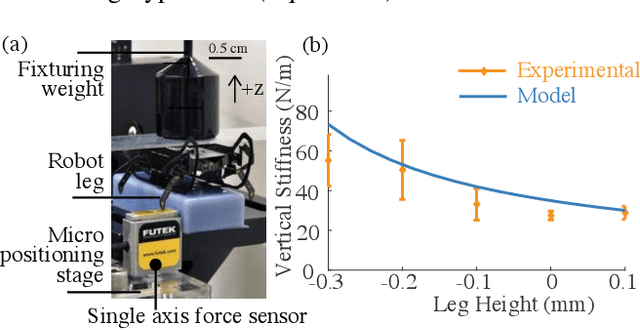

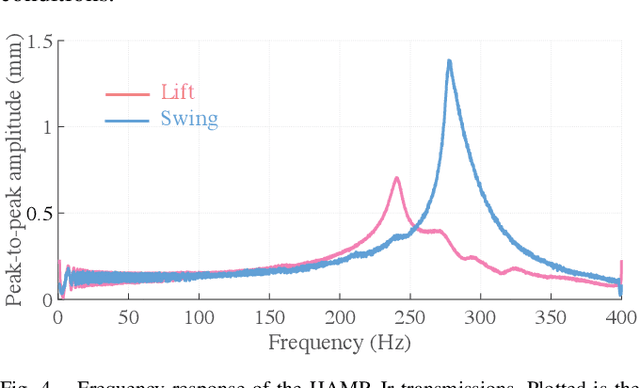

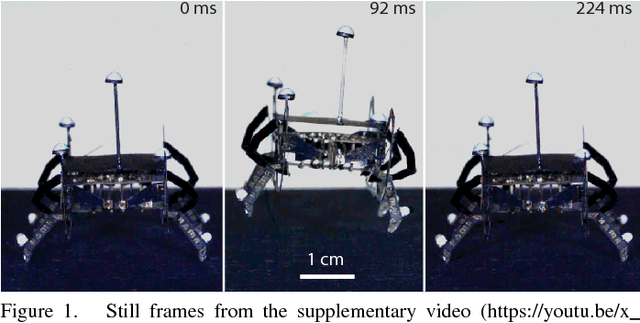

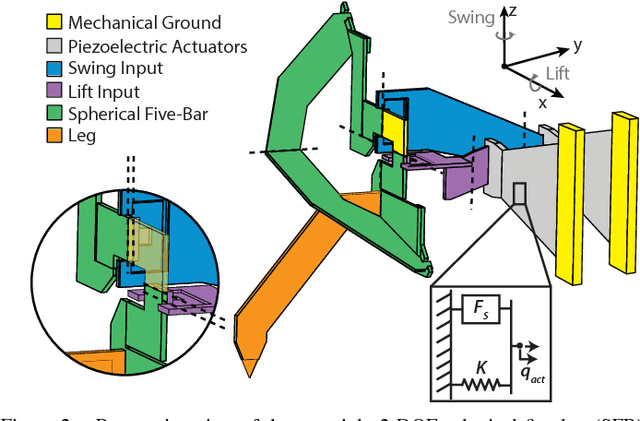

Scaling down an insect-size microrobot, HAMR-VI into HAMR-Jr

Mar 06, 2020

Abstract:Here we present HAMR-Jr, a 22.5 mm, 320 mg quadrupedal microrobot. With eight independently actuated degrees of freedom, HAMR-Jr is, to our knowledge, the most mechanically dexterous legged robot at its scale and is capable of high-speed locomotion (13.91 body lengths per second) at a variety of stride frequencies (1-200 Hz) using multiple gaits. We achieved this using a flexible design and fabrication process that allows scaling with minimal changes to our workflow. We further characterized HAMR-Jr's open-loop locomotion and compared it with the larger-scale HAMR-VI microrobot to demonstrate the effectiveness of scaling laws in predicting running performance.

Contact-Implicit Optimization of Locomotion Trajectories for a Quadrupedal Microrobot

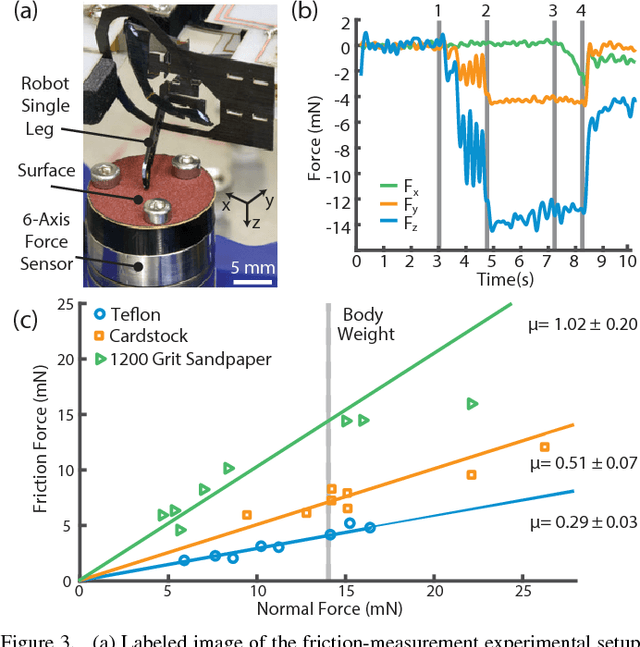

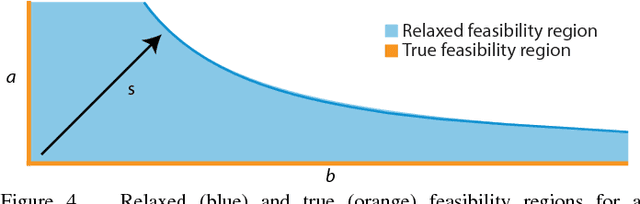

Jan 25, 2019

Abstract:Planning locomotion trajectories for legged microrobots is challenging because of their complex morphology, high-frequency passive dynamics, and discontinuous contact interactions with their environment. Consequently, such research is often driven by time-consuming experimental methods. As an alternative, we present a framework for systematically modeling, planning, and controlling legged microrobots. We develop a three-dimensional dynamic model of a 1.5 g quadrupedal microrobot with complexity (e.g., number of degrees of freedom) similar to that of larger-scale legged robots. We then adapt a recently developed variational contact-implicit trajectory optimization method to generate feasible whole-body locomotion plans for this microrobot, and we demonstrate that these plans can be tracked with simple joint-space controllers. We plan and execute periodic gaits at multiple stride frequencies and on various surfaces. These gaits achieve high per-cycle velocities, including a maximum of 10.87 mm/cycle, which is 15% faster than previously measured velocities for this microrobot. Furthermore, we plan and execute a vertical jump of 9.96 mm, which is 78% of the microrobot's center-of-mass height. To the best of our knowledge, this is the first end-to-end demonstration of planning and tracking whole-body dynamic locomotion on a millimeter-scale legged microrobot.

Framing Human-Robot Task Communication as a POMDP

Apr 01, 2012

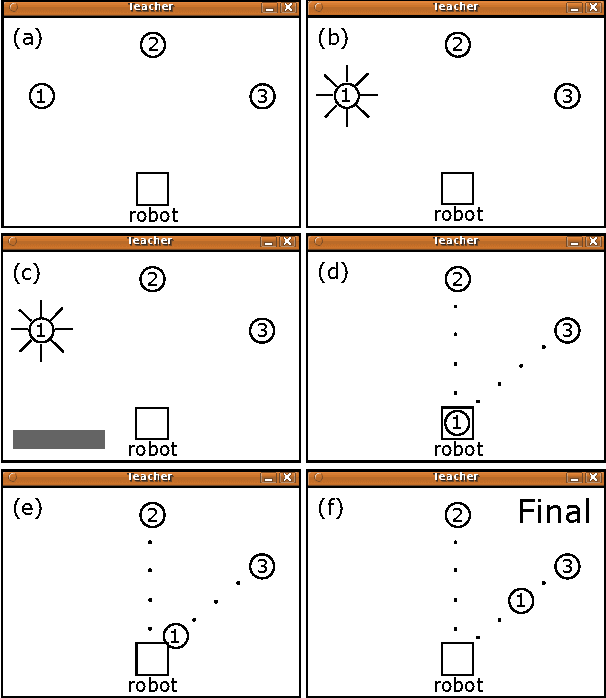

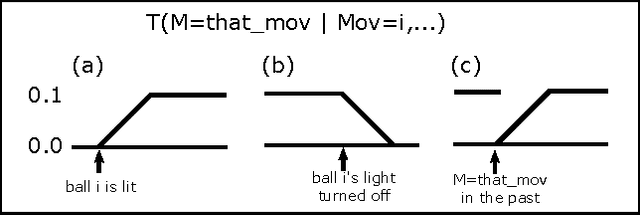

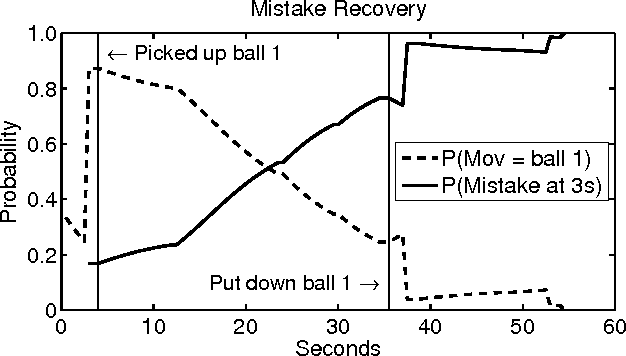

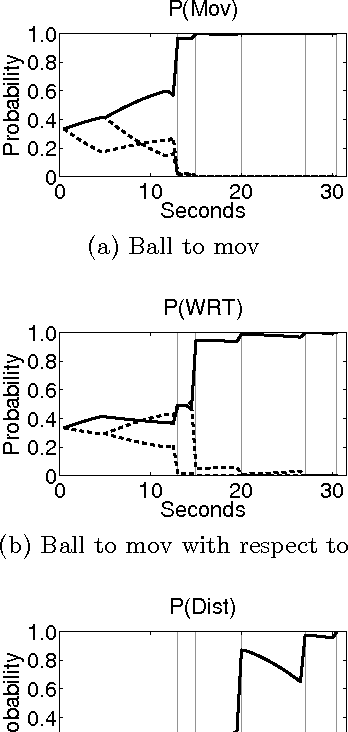

Abstract:As general-purpose robots become more capable, pre-programming all tasks at the factory will become less practical. We would like non-technical human owners to be able to communicate the details of a new task through interaction with their robot; we call this interaction "task communication". During task communication, the robot must infer the details of the task from unstructured human signals, and it must choose actions that facilitate this inference. In this paper, we propose using a partially observable Markov decision process (POMDP) to represent the task communication problem, with the unobservable task details and the unobservable intentions of the human teacher captured in the state, all signals from the human represented as observations, and the cost function chosen to penalize uncertainty. We work through an example representation of task communication as a POMDP and present results from a user experiment comparing an interactive virtual robot with a human-controlled virtual robot, for a task involving a single object movement and binary approval input from the teacher. The results suggest that the proposed POMDP representation produces robots that are robust to teacher error, can accurately infer task details, and are perceived to be intelligent.
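
The heart of any such representation is a belief update: after each teacher signal, the robot revises its probability over candidate task details. The sketch below shows one Bayes-rule update for a single-object-movement task with binary approval, plus belief entropy as one possible uncertainty penalty. The candidate goal locations, the symmetric `error_rate` teacher model, and the entropy cost are illustrative assumptions rather than the paper's model, and the sketch omits action selection and the teacher's hidden intentions.

```python
import math
from typing import Dict


def update_belief(belief: Dict[str, float], action: str, approved: bool,
                  error_rate: float = 0.1) -> Dict[str, float]:
    """Bayes-rule update of a belief over candidate task details.

    Assumed observation model: if the robot's action matches the true task,
    the teacher approves with probability 1 - error_rate; otherwise the
    teacher approves (by mistake) with probability error_rate.
    """
    unnormalized = {}
    for task, prior in belief.items():
        p_approve = 1.0 - error_rate if task == action else error_rate
        likelihood = p_approve if approved else 1.0 - p_approve
        unnormalized[task] = prior * likelihood
    total = sum(unnormalized.values())
    return {task: p / total for task, p in unnormalized.items()}


def entropy(belief: Dict[str, float]) -> float:
    """Shannon entropy in nats; one possible way to score uncertainty."""
    return -sum(p * math.log(p) for p in belief.values() if p > 0)


# Hypothetical task: which of three locations should the object be moved to?
prior = {"A": 1 / 3, "B": 1 / 3, "C": 1 / 3}
posterior = update_belief(prior, action="B", approved=True)
print({k: round(v, 3) for k, v in posterior.items()})
print(round(entropy(prior), 3), round(entropy(posterior), 3))
```

Here one approval of location B raises its probability to about 0.82 and cuts the entropy from ln 3 (about 1.10 nats) to about 0.60. Because the assumed teacher model allows for mistakes, a single erroneous signal never drives the other hypotheses to zero, which is one way a belief-based robot can tolerate teacher error.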

Learning from Humans as an I-POMDP

Apr 01, 2012

Abstract:The interactive partially observable Markov decision process (I-POMDP) is a recently developed framework which extends the POMDP to the multi-agent setting by including agent models in the state space. This paper argues for formulating the problem of an agent learning interactively from a human teacher as an I-POMDP, where the agent programming to be learned is captured by random variables in the agent's state space, all signals from the human teacher are treated as observed random variables, and the human teacher, modeled as a distinct agent, is explicitly represented in the agent's state space. The main benefits of this approach are: i. a principled action selection mechanism, ii. a principled belief update mechanism, iii. support for the most common teacher signals, and iv. the anticipated production of complex beneficial interactions. The proposed formulation, its benefits, and several open questions are presented.
