Framing Human-Robot Task Communication as a POMDP

Apr 01, 2012

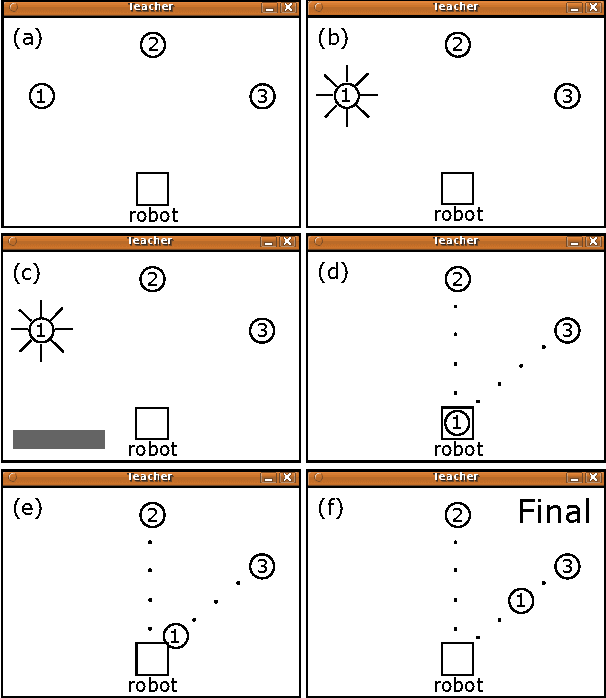

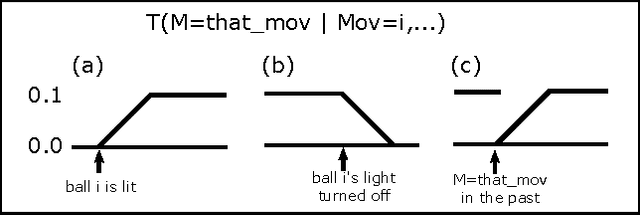

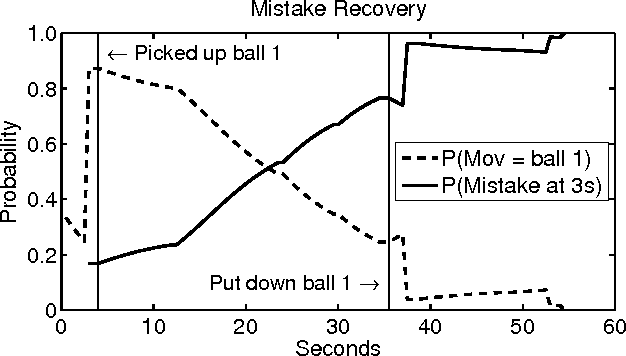

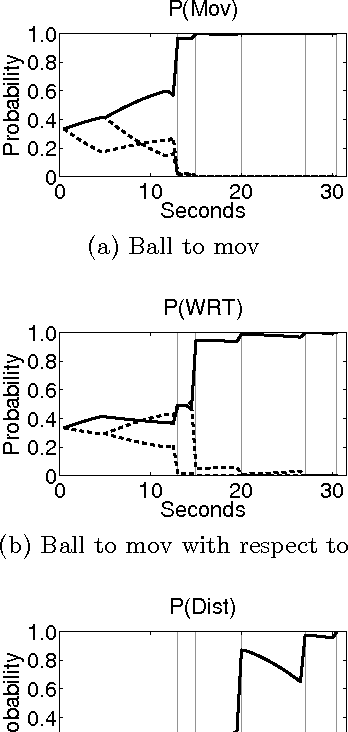

As general-purpose robots become more capable, pre-programming every task at the factory will become less practical. We would like non-technical owners to be able to communicate the details of a new task through interaction with their robot; we call this interaction "task communication". During task communication, the robot must infer the details of the task from unstructured human signals, and it must choose actions that facilitate this inference. In this paper we propose representing the task communication problem as a partially observable Markov decision process (POMDP): the unobservable task details and the unobservable intentions of the human teacher are captured in the state, all signals from the human are represented as observations, and the cost function is chosen to penalize uncertainty. We work through an example representation of task communication as a POMDP and present results from a user experiment in which an interactive virtual robot is compared with a human-controlled virtual robot on a task involving a single object movement and binary approval input from the teacher. The results suggest that the proposed POMDP representation produces robots that are robust to teacher error, that can accurately infer task details, and that are perceived to be intelligent.
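
To make the ingredients named in the abstract concrete, the following is a minimal sketch of a belief update over a hidden task detail, driven by binary approval from the teacher, with Shannon entropy standing in for an uncertainty-penalizing cost. It is not the paper's model. The discrete set of candidate goal locations, the fixed `error_rate` for teacher mistakes, and all function names are assumptions made for illustration, and the teacher's hidden intentions, which the paper also places in the state, are left out.

```python
import math


def approval_likelihoods(n_goals, moved_to, approved, error_rate=0.1):
    """P(observation | goal = g) for each candidate goal g.

    Assumed teacher model: the teacher approves when the object was moved
    to the true goal, and gives the wrong signal with probability error_rate.
    """
    likelihoods = []
    for g in range(n_goals):
        p_approve = 1.0 - error_rate if g == moved_to else error_rate
        likelihoods.append(p_approve if approved else 1.0 - p_approve)
    return likelihoods


def update_belief(belief, likelihoods):
    """Bayes rule: posterior is proportional to likelihood times prior."""
    unnormalized = [b * l for b, l in zip(belief, likelihoods)]
    total = sum(unnormalized)
    if total == 0.0:
        raise ValueError("observation has zero probability under the belief")
    return [u / total for u in unnormalized]


def entropy(belief):
    """Shannon entropy in bits, used here as a stand-in uncertainty cost."""
    return -sum(p * math.log2(p) for p in belief if p > 0.0)


# Four hypothetical goal locations, with a uniform prior over them.
belief = [0.25, 0.25, 0.25, 0.25]

# The robot moves the object to location 2 and the teacher disapproves.
belief = update_belief(belief, approval_likelihoods(4, moved_to=2, approved=False))

# The robot then tries location 1 and the teacher approves.
belief = update_belief(belief, approval_likelihoods(4, moved_to=1, approved=True))

print(belief, entropy(belief))
```

Because the assumed teacher model gives every observation nonzero probability, a single mistaken approval shifts the belief without ruling out any goal, which is one simple way a representation of this kind can tolerate teacher error.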
