Neel Doshi

Stow: Robotic Packing of Items into Fabric Pods

May 07, 2025

Abstract: This paper presents a compliant manipulation system capable of placing items onto densely packed shelves. The wide diversity of items and strict business requirements for high production rates and low defect generation have prevented warehouse robots from performing this task. Our innovations in hardware, perception, decision-making, motion planning, and control have enabled this system to perform over 500,000 stows in a large e-commerce fulfillment center. The system achieves human levels of packing density and speed while prioritizing work on overhead shelves to enhance the safety of humans working alongside the robots.

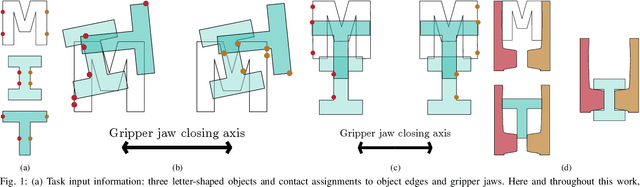

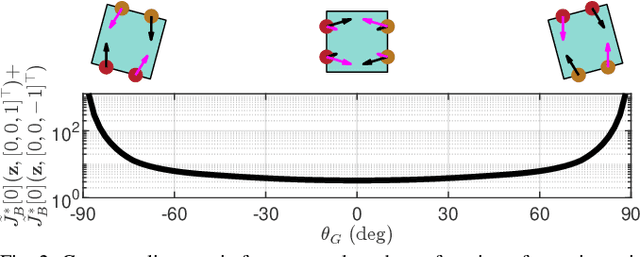

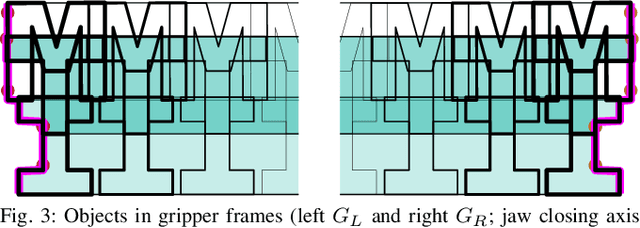

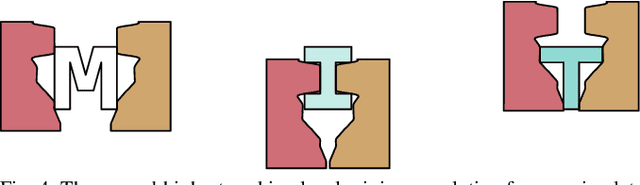

Parallel-Jaw Gripper and Grasp Co-Optimization for Sets of Planar Objects

Oct 27, 2023

Abstract: We propose a framework for optimizing a planar parallel-jaw gripper for use with multiple objects. While optimizing general-purpose grippers and optimizing contact locations for grasps are both well studied, co-optimizing grasps and the gripper geometry that executes them has received less attention. Our framework addresses this gap by synthesizing grippers optimized to stably grasp sets of polygonal objects. Given a fixed number of contacts and their assignments to object faces and gripper jaws, our framework optimizes contact locations along these faces, the gripper pose for each grasp, and the gripper shape. Our key insights are to pose shape and contact constraints in frames fixed to the gripper jaws, and to leverage the linearity of constraints in our grasp stability and gripper shape models via an augmented Lagrangian formulation. Together, these enable a tractable nonlinear program implementation. We apply our method to several examples. The first illustrative problem shows the discovery of a geometrically simple solution where possible. In another, space is constrained, forcing multiple objects to be contacted by the same gripper features. Finally, a toolset-grasping example shows that our framework applies to complex, real-world objects. We also validate the toolset grasps in a physical experiment.
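The augmented Lagrangian idea mentioned above can be illustrated on a much smaller problem. The sketch below is a generic textbook version, not the paper's gripper formulation: it assumes a hypothetical toy objective f(x, y) = x² + y² with a single linear equality constraint x + y = 1, minimizes the augmented Lagrangian L = f + λh + (ρ/2)h² by gradient descent in an inner loop, and updates the multiplier λ in an outer loop. All function names and parameter values are illustrative assumptions.

```python
# Generic augmented Lagrangian method on a hypothetical toy problem
# (not the paper's nonlinear program):
#   minimize f(x, y) = x**2 + y**2   subject to   h(x, y) = x + y - 1 = 0


def f_grad(x: float, y: float) -> tuple[float, float]:
    """Gradient of the toy objective f(x, y) = x^2 + y^2."""
    return 2.0 * x, 2.0 * y


def h(x: float, y: float) -> float:
    """Linear equality constraint h(x, y) = x + y - 1."""
    return x + y - 1.0


def augmented_lagrangian(rho: float = 10.0, outer_iters: int = 20,
                         inner_iters: int = 200, step: float = 0.02):
    x, y, lam = 0.0, 0.0, 0.0
    for _ in range(outer_iters):
        # Inner loop: gradient descent on L(x, y) = f + lam*h + (rho/2)*h^2.
        for _ in range(inner_iters):
            gx, gy = f_grad(x, y)
            # dL/dh = lam + rho*h; since grad h = (1, 1), both coordinates
            # receive the same constraint term.
            c = lam + rho * h(x, y)
            x -= step * (gx + c)
            y -= step * (gy + c)
        # Outer loop: first-order multiplier update.
        lam += rho * h(x, y)
    return x, y, lam


x_opt, y_opt, lam_opt = augmented_lagrangian()
print(x_opt, y_opt, lam_opt)
```

For this toy problem the analytic optimum is x = y = 0.5 with multiplier λ = -1, which the iteration approaches. Because the constraint is linear, each inner problem is a well-conditioned quadratic, which is the kind of structure the abstract says the authors exploit.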

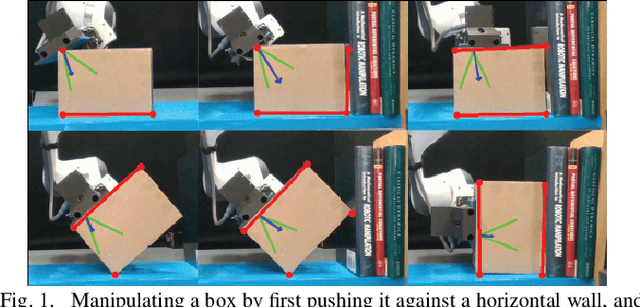

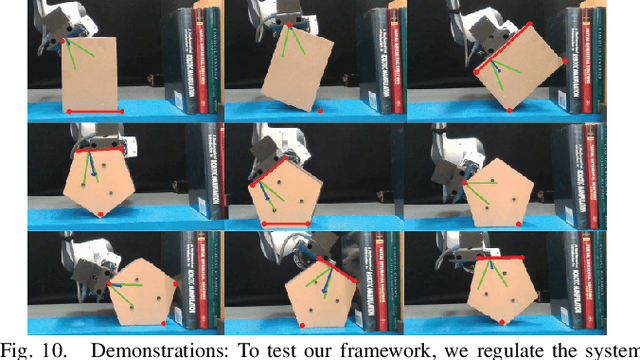

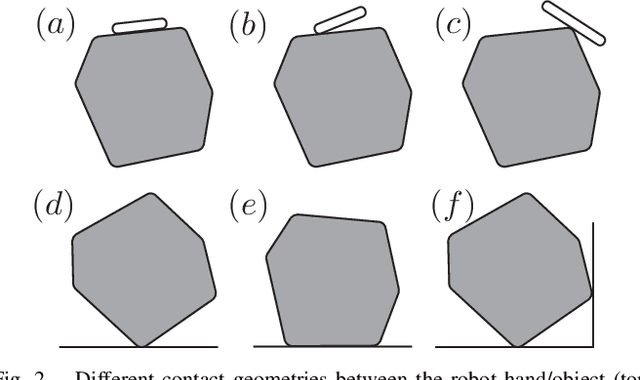

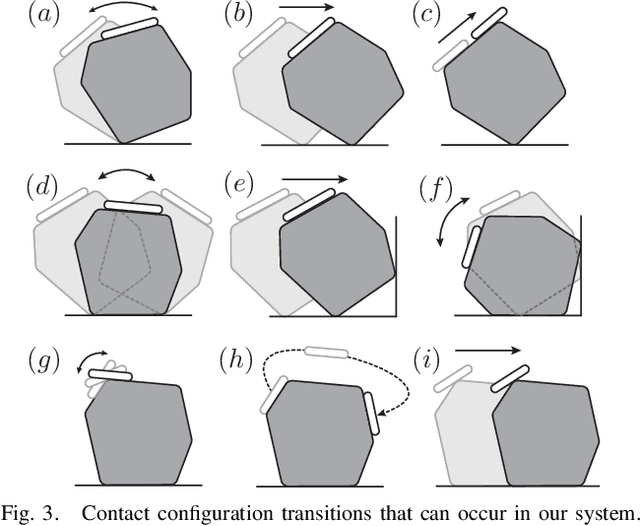

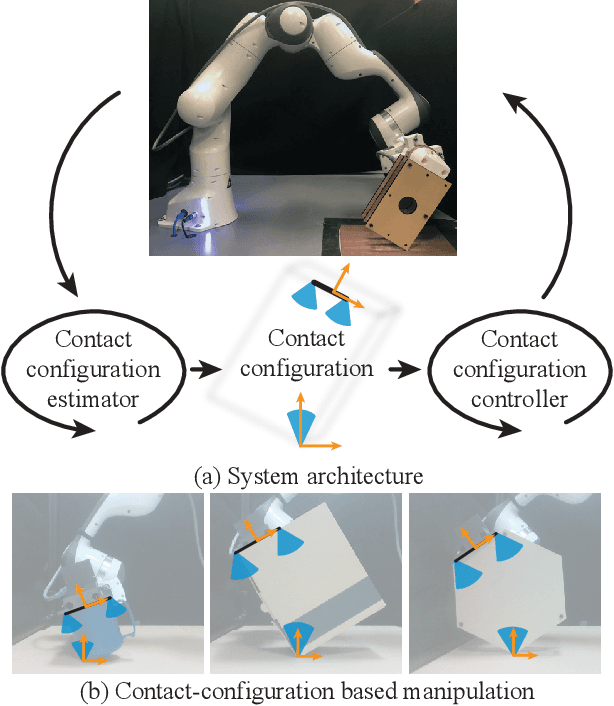

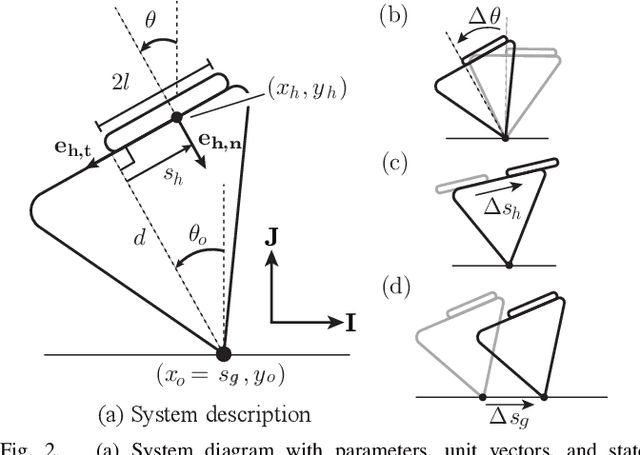

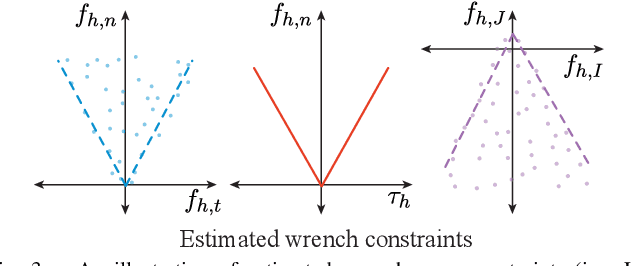

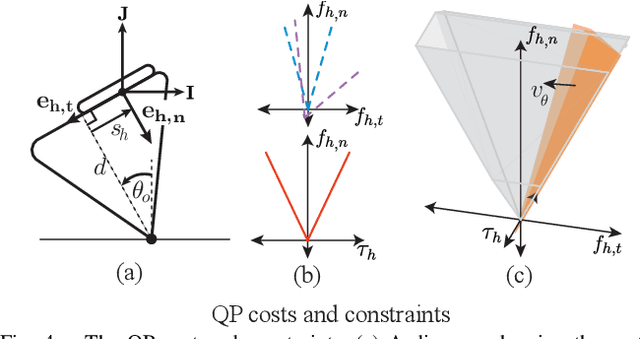

Object manipulation through contact configuration regulation: multiple and intermittent contacts

Oct 01, 2023

Abstract: In this work, we build on our method for manipulating unknown objects via contact configuration regulation: the estimation and control of the location, geometry, and mode of all contacts between the robot, object, and environment. We further develop our estimator and controller to enable manipulation through more complex contact interactions, including intermittent contact between the robot and object, and multiple contacts between the object and environment. In addition, we support a larger set of contact geometries at each interface. This is accomplished through a factor-graph-based estimation framework that reasons about the complementary kinematic and wrench constraints of contact to predict the current contact configuration. We are aided by a limited amount of visual feedback, which, when combined with the available F/T sensing and robot proprioception, allows us to differentiate contact modes that were previously indistinguishable. We implement this revamped framework on our manipulation platform and demonstrate that it allows the robot to perform a wider set of manipulation tasks, such as using a wall as a support to reorient an object or regulating the contact geometry between the object and the ground. Finally, we conduct ablation studies to understand the contributions of visual and tactile feedback to our manipulation framework. Our code can be found at: https://github.com/mcubelab/pbal.

Manipulation of unknown objects via contact configuration regulation

Mar 02, 2022

Abstract: We present an approach to robotic manipulation of unknown objects through regulation of the object's contact configuration: the location, geometry, and mode of all contacts between the object, robot, and environment. A contact configuration constrains the forces and motions that can be applied to the object; however, synthesizing these constraints generally requires knowledge of the object's pose and geometry. We develop an object-agnostic approach for estimation and control that circumvents this need. Our framework directly estimates a set of wrench and motion constraints which it uses to regulate the contact configuration. We use this to reactively manipulate unknown planar objects in the gravity plane. A video describing our work can be found on our project page: http://mcube.mit.edu/research/contactConfig.html.
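One ingredient of a contact configuration is the contact mode. The sketch below shows the standard Coulomb friction-cone test for a single planar point contact, assuming a known friction coefficient `mu` and measured normal and tangential forces; the function and enum names are hypothetical, and this is not the authors' object-agnostic estimator. It also shows why force alone can be ambiguous: a contact whose friction is saturated is consistent with sliding but cannot be proven to be sliding without motion information.

```python
from enum import Enum


class ContactMode(Enum):
    SEPARATED = "separated"
    STICKING = "sticking"
    SLIDING = "sliding"


def classify_planar_contact(f_normal: float, f_tangent: float, mu: float,
                            tol: float = 1e-6) -> ContactMode:
    """Classify a planar point contact from its force under Coulomb friction.

    f_normal:  normal force (positive means pressing into the surface).
    f_tangent: tangential (friction) force along the surface.
    mu:        assumed Coulomb friction coefficient.
    """
    if f_normal <= tol:
        # No compressive force: the bodies are not pressing together.
        return ContactMode.SEPARATED
    if abs(f_tangent) < mu * f_normal - tol:
        # Strictly inside the friction cone: the contact can stick.
        return ContactMode.STICKING
    # On (or, due to sensor noise, beyond) the cone boundary: friction is
    # saturated, which is consistent with sliding, although force alone
    # cannot rule out sticking exactly at the limit.
    return ContactMode.SLIDING


print(classify_planar_contact(10.0, 2.0, mu=0.5))
```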

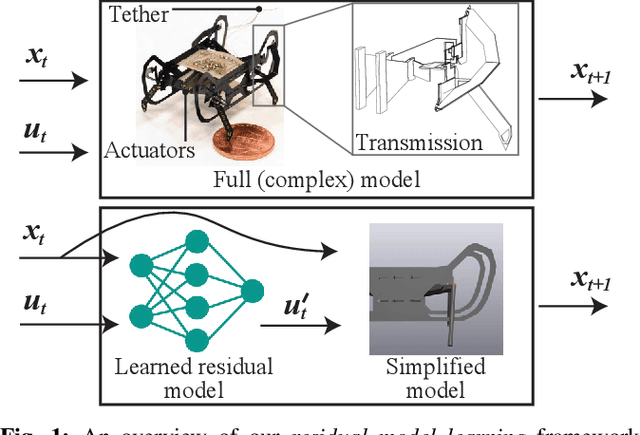

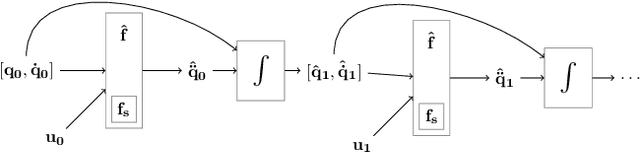

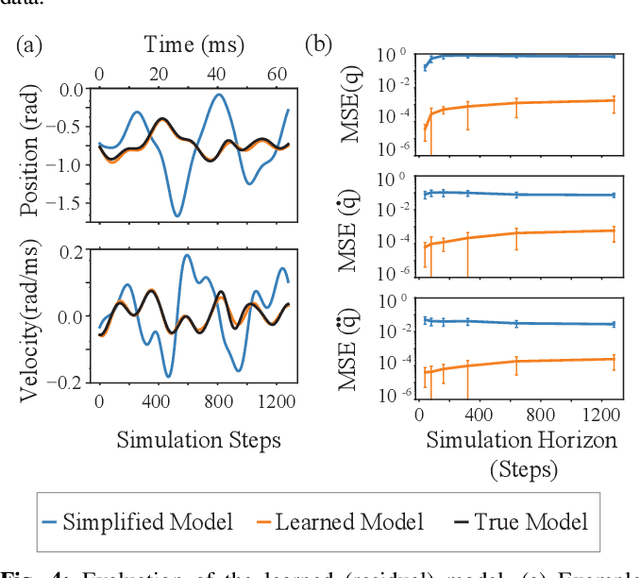

Residual Model Learning for Microrobot Control

Apr 01, 2021

Abstract: A majority of microrobots are constructed from compliant materials that are difficult to model analytically, limiting the utility of traditional model-based controllers. Challenges in collecting data on microrobots and large errors between simulated models and real robots make current model-based learning and sim-to-real transfer methods difficult to apply. We propose a novel framework, residual model learning (RML), that leverages approximate models to substantially reduce the sample complexity of learning an accurate robot model. We show that, using RML, we can learn a model of the Harvard Ambulatory MicroRobot (HAMR) from just 12 seconds of passively collected interaction data. The learned model is accurate enough to serve as a "proxy simulator" for learning walking and turning behaviors with model-free reinforcement learning algorithms. RML provides a general framework for learning from extremely small amounts of interaction data, and our experiments with HAMR demonstrate that RML substantially outperforms existing techniques.
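The core idea of residual model learning, keeping an approximate model and learning only its error, can be sketched on a toy one-dimensional system. Everything below is a hypothetical stand-in: `approx_model` plays the role of the analytic approximation, `true_system` plays the role of the real robot (with damping the approximation omits), and the residual is fit by scalar least squares on a single feature `x`. The paper's actual model class and features are not described in the abstract, so these choices are assumptions.

```python
import random

DT = 0.01  # hypothetical time step


def approx_model(x: float, u: float) -> float:
    """Hypothetical approximate (analytic) model: x_next = x + DT * u."""
    return x + DT * u


def true_system(x: float, u: float) -> float:
    """Stand-in for the real robot, with damping the approximate model omits."""
    return x + DT * (u - 3.0 * x)


def fit_residual(data) -> float:
    """Least-squares fit of the residual r = x_next - approx_model(x, u) as r ~ w * x."""
    num = sum(x * (x_next - approx_model(x, u)) for x, u, x_next in data)
    den = sum(x * x for x, _, _ in data)
    return num / den


# Small batch of (state, input, next state) samples from the "real" system.
random.seed(0)
data = []
for _ in range(50):
    x, u = random.uniform(-1.0, 1.0), random.uniform(-1.0, 1.0)
    data.append((x, u, true_system(x, u)))

w = fit_residual(data)


def learned_model(x: float, u: float) -> float:
    """Approximate model plus the learned residual correction."""
    return approx_model(x, u) + w * x


print(w)
```

Because the approximate model already captures most of the dynamics, only one parameter has to be learned here, which hints at why a residual formulation can need far less data than learning the full model from scratch.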

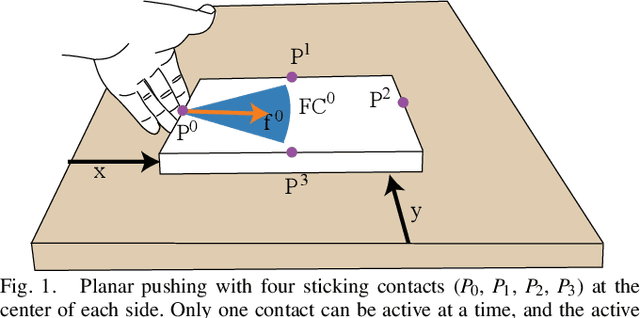

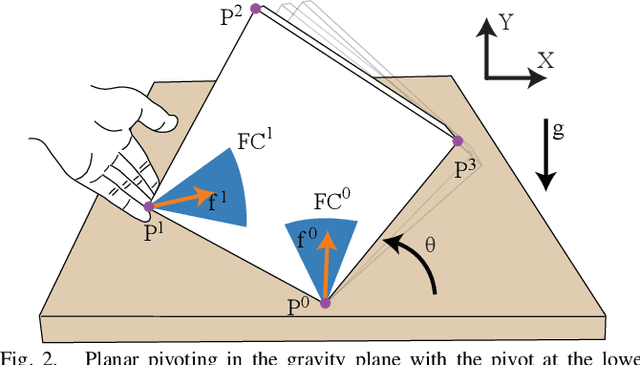

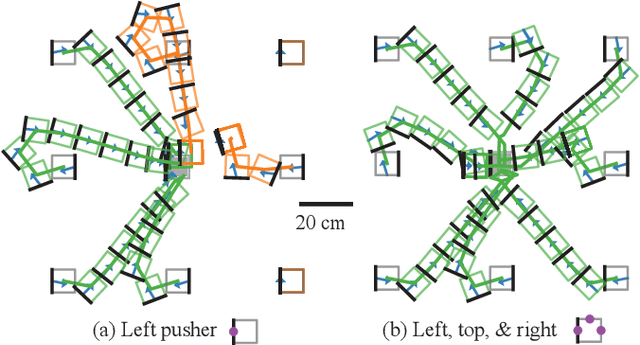

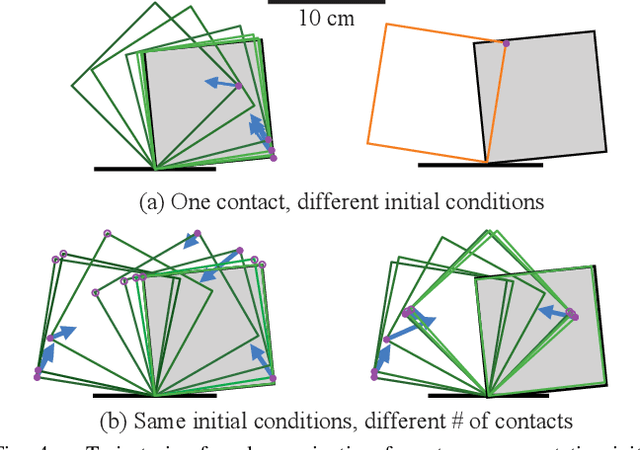

Hybrid Differential Dynamic Programming for Planar Manipulation Primitives

Nov 10, 2019

Abstract: We present a hybrid differential dynamic programming algorithm for closed-loop execution of manipulation primitives with frictional contact switches. Planning and control of these primitives is challenging because they are hybrid, under-actuated, and stochastic. We address this by planning a trajectory over a finite horizon, considering a small number of contact switches, and generating a stabilizing controller. We evaluate the performance and computational cost of our framework in ablation studies for two primitives: planar pushing and planar pivoting. We can plan pose-to-pose trajectories from most configurations with only one or two hybrid switches and in reasonable time (one to five seconds). We further demonstrate that our controller stabilizes these hybrid trajectories on a real pushing system. A video describing our work can be found at https://youtu.be/YGSe4cUfq6Q.
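A full hybrid DDP implementation is well beyond a short example, but the backward pass that produces DDP's feedback controller can be shown in its simplest case. For linear dynamics and quadratic cost, with no contact switches, the DDP backward pass reduces to the discrete-time Riccati recursion of finite-horizon LQR. The sketch below assumes a hypothetical scalar, open-loop-unstable system x[t+1] = a·x[t] + b·u[t]; it is not the authors' hybrid algorithm.

```python
def lqr_backward_pass(a: float, b: float, q: float, r: float, qf: float,
                      horizon: int) -> list[float]:
    """Finite-horizon backward pass for x[t+1] = a*x[t] + b*u[t].

    Cost: sum_t (q*x^2 + r*u^2) + qf*x[T]^2. With linear dynamics and quadratic
    cost, the DDP backward pass reduces to this Riccati recursion.
    Returns time-varying feedback gains K[t] for the policy u[t] = -K[t] * x[t].
    """
    p = qf  # value function at the final step: V(x) = p * x^2
    gains = [0.0] * horizon
    for t in reversed(range(horizon)):
        # Q(x, u) = q x^2 + r u^2 + p (a x + b u)^2
        #         = q_xx x^2 + 2 q_ux x u + q_uu u^2
        q_xx = q + p * a * a
        q_ux = p * a * b
        q_uu = r + p * b * b
        k = q_ux / q_uu  # minimizing over u gives u = -k * x
        gains[t] = k
        # Value function after substituting the minimizing u.
        p = q_xx - q_ux * k
    return gains


gains = lqr_backward_pass(a=1.2, b=1.0, q=1.0, r=1.0, qf=1.0, horizon=50)
print(gains[0], 1.2 - 1.0 * gains[0])  # gain and closed-loop pole
```

With a = 1.2 the open-loop system is unstable, while the closed-loop factor a - b·K[0] has magnitude below one, which is the sense in which such a backward pass yields a stabilizing controller around a trajectory.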

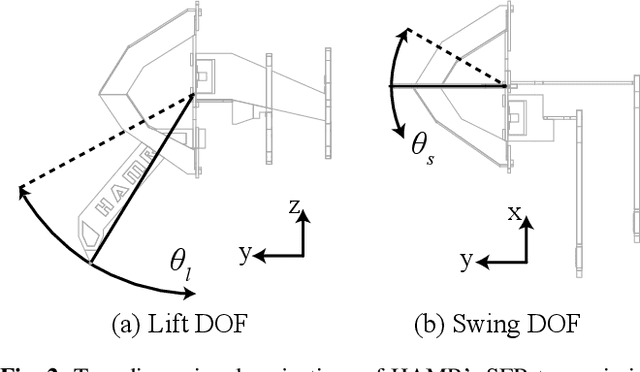

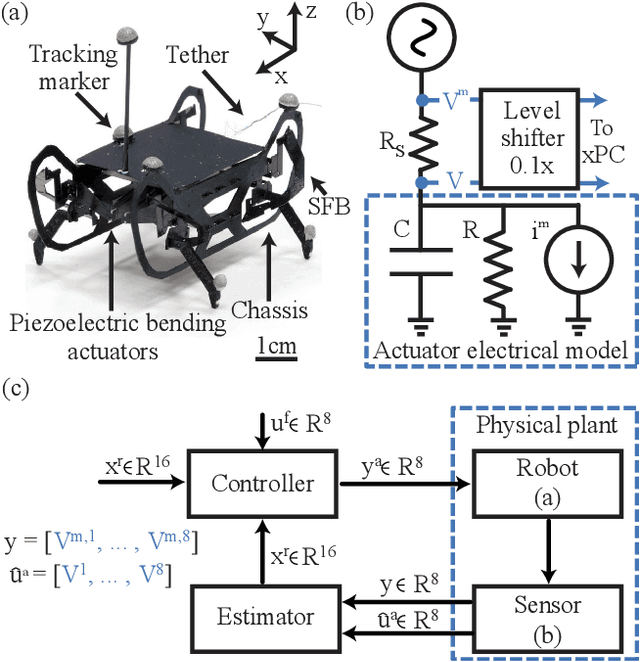

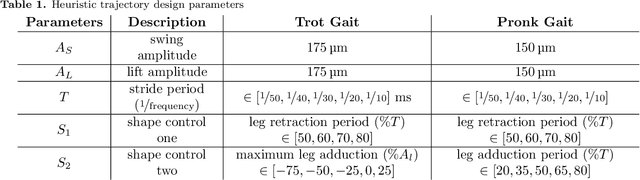

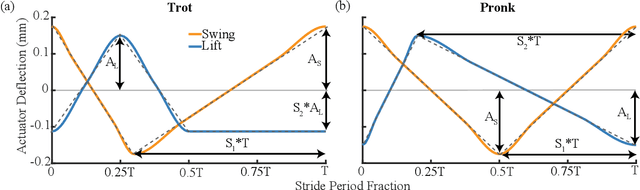

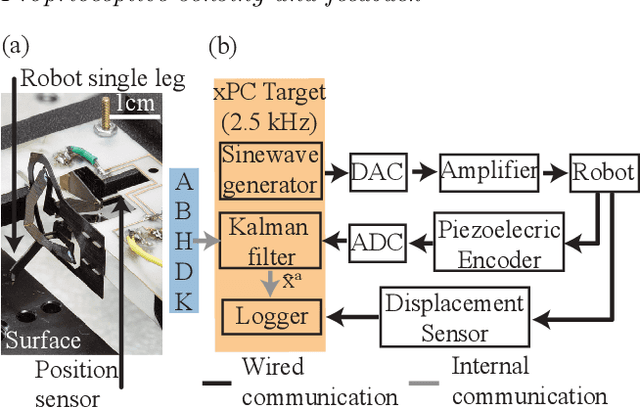

Effective Locomotion at Multiple Stride Frequencies Using Proprioceptive Feedback on a Legged Microrobot

Apr 17, 2019

Abstract: Limitations in actuation, sensing, and computation have forced small legged robots to rely on carefully tuned, mechanically mediated leg trajectories for effective locomotion. Recent advances in manufacturing, however, have enabled the development of small legged robots capable of operating at multiple stride frequencies using multi-degree-of-freedom leg trajectories. Proprioceptive sensing and control are key to extending the capabilities of these robots to a broad range of operating conditions. In this work, we leverage concomitant sensing for piezoelectric actuation to develop a computationally efficient framework for estimation and control of leg trajectories on a quadrupedal microrobot. We demonstrate accurate position estimation (<16% root-mean-square error) and control (<16% root-mean-square tracking error) during locomotion across a wide range of stride frequencies (10-50 Hz). This capability enables the exploration of two bioinspired parametric leg trajectories designed to reduce leg slip and increase locomotion performance (e.g., speed and cost of transport). Using this approach, we demonstrate high-performance locomotion at stride frequencies (10-30 Hz) where the robot's natural dynamics result in poor open-loop locomotion. Furthermore, we validate the biological hypotheses that inspired our trajectories and identify regions of highly dynamic locomotion, low cost of transport (3.33), and minimal leg slippage (<10%).
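Two of the metrics above can be made concrete. Cost of transport is conventionally the dimensionless ratio CoT = P / (m·g·v) of power to weight times speed. The abstract does not say how its percentage RMS errors are normalized, so the second function assumes normalization by the reference signal's peak-to-peak range; that choice, the function names, and any input values are assumptions, and the code does not reproduce the paper's reported numbers.

```python
import math


def cost_of_transport(power_w: float, mass_kg: float, speed_m_s: float,
                      g: float = 9.81) -> float:
    """Dimensionless cost of transport: CoT = P / (m * g * v)."""
    return power_w / (mass_kg * g * speed_m_s)


def normalized_rmse_percent(estimate, reference) -> float:
    """RMS error as a percentage of the reference's peak-to-peak range.

    The peak-to-peak normalization is an assumption for illustration.
    """
    n = len(reference)
    rmse = math.sqrt(sum((e - r) ** 2 for e, r in zip(estimate, reference)) / n)
    span = max(reference) - min(reference)
    return 100.0 * rmse / span


print(cost_of_transport(power_w=9.81, mass_kg=1.0, speed_m_s=1.0))
print(normalized_rmse_percent([0.0, 1.0], [0.0, 2.0]))
```

A lower cost of transport means less energy spent per unit weight per unit distance travelled, which is why it is used alongside speed as a locomotion performance measure.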

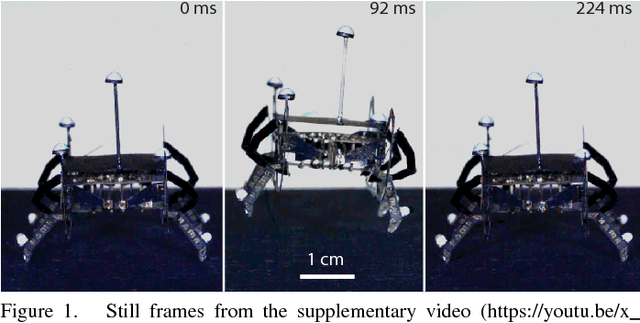

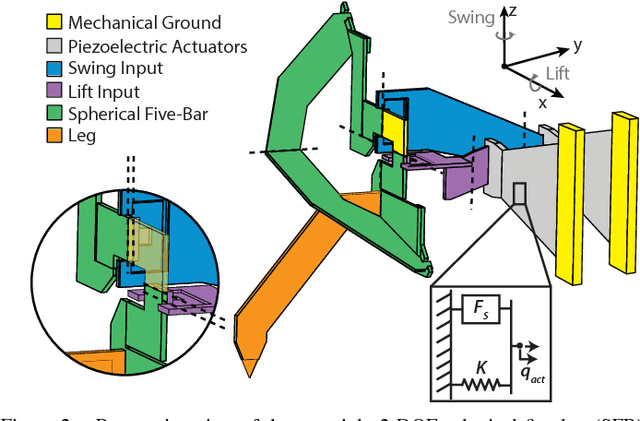

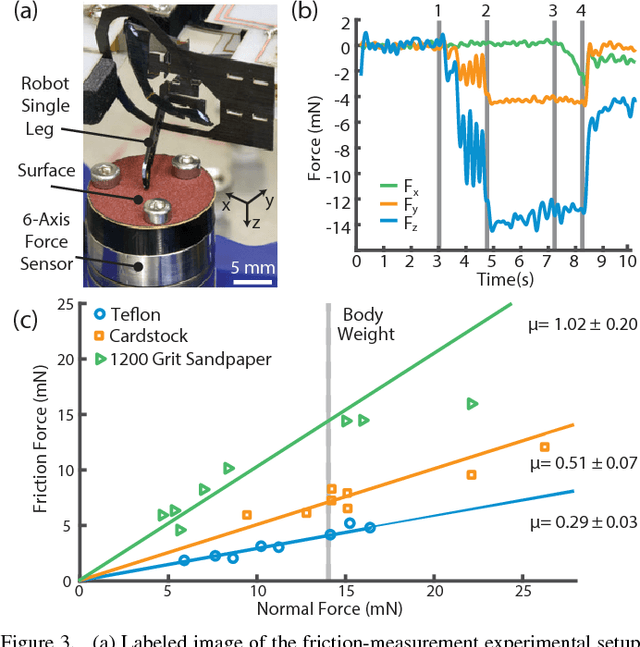

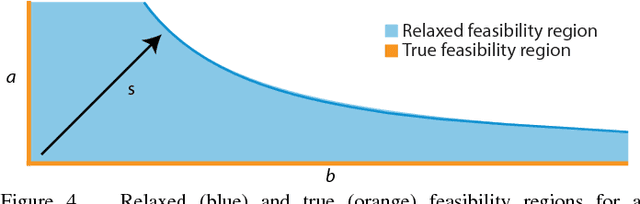

Contact-Implicit Optimization of Locomotion Trajectories for a Quadrupedal Microrobot

Jan 25, 2019

Abstract: Planning locomotion trajectories for legged microrobots is challenging because of their complex morphology, high-frequency passive dynamics, and discontinuous contact interactions with their environment. Consequently, such research is often driven by time-consuming experimental methods. As an alternative, we present a framework for systematically modeling, planning, and controlling legged microrobots. We develop a three-dimensional dynamic model of a 1.5-gram quadrupedal microrobot with complexity (e.g., number of degrees of freedom) similar to larger-scale legged robots. We then adapt a recently developed variational contact-implicit trajectory optimization method to generate feasible whole-body locomotion plans for this microrobot, and we demonstrate that these plans can be tracked with simple joint-space controllers. We plan and execute periodic gaits at multiple stride frequencies and on various surfaces. These gaits achieve high per-cycle velocities, including a maximum of 10.87 mm/cycle, which is 15% faster than previously measured velocities for this microrobot. Furthermore, we plan and execute a vertical jump of 9.96 mm, which is 78% of the microrobot's center-of-mass height. To the best of our knowledge, this is the first end-to-end demonstration of planning and tracking whole-body dynamic locomotion on a millimeter-scale legged microrobot.
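The reported percentages imply two other quantities that the abstract does not state directly: the previous best per-cycle velocity and the center-of-mass height. The short calculation below derives them from the stated numbers only; since the percentages are rounded, the results are approximations rather than values reported by the authors.

```python
# Quantities implied by the reported percentages (approximate, because the
# abstract's percentages are rounded).
max_velocity_mm_per_cycle = 10.87
speedup_fraction = 0.15
# 10.87 mm/cycle is 15% faster than the previous best, so divide by 1.15.
prior_velocity_mm_per_cycle = max_velocity_mm_per_cycle / (1.0 + speedup_fraction)

jump_height_mm = 9.96
fraction_of_com_height = 0.78
# A 9.96 mm jump is 78% of the center-of-mass height, so divide by 0.78.
com_height_mm = jump_height_mm / fraction_of_com_height

print(f"previous best: ~{prior_velocity_mm_per_cycle:.2f} mm/cycle")
print(f"center-of-mass height: ~{com_height_mm:.1f} mm")
```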
