Francois R. Hogan

Working Backwards: Learning to Place by Picking

Dec 04, 2023

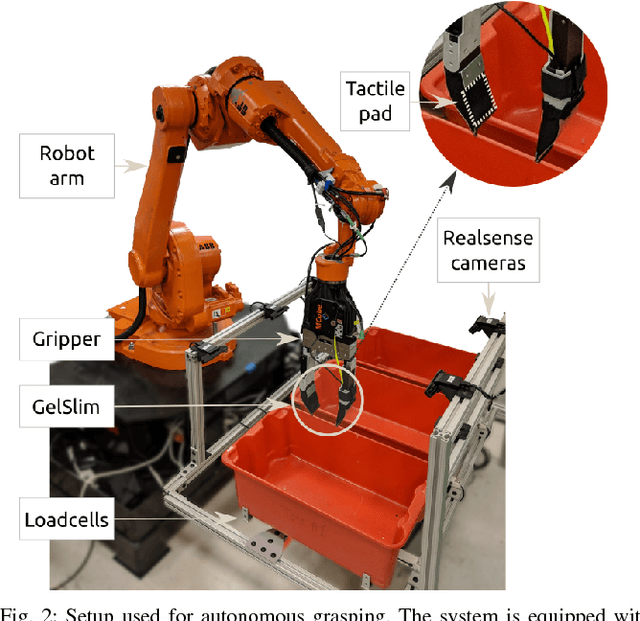

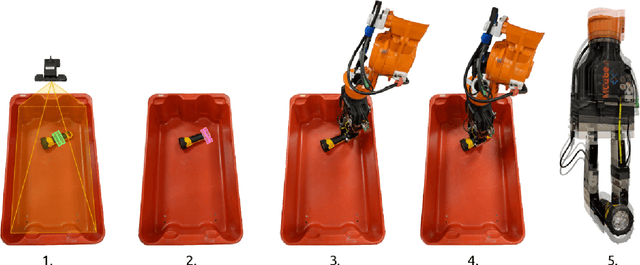

Abstract: We present Learning to Place by Picking (LPP), a method capable of autonomously collecting demonstrations for a family of placing tasks in which objects must be manipulated to specific locations. With LPP, we approach the learning of robotic object placement policies by reversing the grasping process and exploiting the inherent symmetry of the pick and place problems. Specifically, we obtain placing demonstrations from a set of grasp sequences of objects that are initially located at their target placement locations. Our system is capable of collecting hundreds of demonstrations without human intervention by using a combination of tactile sensing and compliant control for grasps. We train a policy directly from visual observations through behaviour cloning, using the autonomously-collected demonstrations. By doing so, the policy can generalize to object placement scenarios outside of the training environment without privileged information (e.g., placing a plate picked up from a table and not at the original placement location). We validate our approach on home robotic scenarios that include dishwasher loading and table setting. Our approach yields robotic placing policies that outperform policies trained with kinesthetic teaching, both in terms of performance and data efficiency, while requiring no human supervision.
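The core idea in the abstract above, obtaining placing demonstrations by reversing grasp sequences, can be illustrated with a small sketch. This is not the authors' implementation: the `Step` type, the `(x, y, z)` pose format, and both function names are hypothetical, and the real system works from visual observations with tactile sensing and compliant control rather than from bare poses.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical end-effector position (x, y, z); the real system uses images.
Pose = Tuple[float, float, float]


@dataclass(frozen=True)
class Step:
    pose: Pose
    gripper_closed: bool


def reverse_pick_to_place(pick: List[Step]) -> List[Step]:
    """Time-reverse a recorded pick so it reads as a placing demonstration.

    A pick goes approach -> close gripper -> retract. Played backwards,
    the same states read as carry object -> open gripper -> retreat.
    """
    return list(reversed(pick))


def to_behaviour_cloning_pairs(demo: List[Step]) -> List[Tuple[Step, Step]]:
    """Pair each state with the next state, used here as the action label."""
    return [(demo[i], demo[i + 1]) for i in range(len(demo) - 1)]


# A toy pick of an object that starts at its target placement location.
pick = [
    Step((0.0, 0.0, 0.3), False),  # hover above the object, gripper open
    Step((0.0, 0.0, 0.0), False),  # descend to the object
    Step((0.0, 0.0, 0.0), True),   # close the gripper
    Step((0.0, 0.0, 0.3), True),   # lift the object
]
place = reverse_pick_to_place(pick)
pairs = to_behaviour_cloning_pairs(place)
```

The reversed sequence starts with the object held above the target and ends with the gripper open and retracted, which is the shape of a placing demonstration.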

A Study of Human-Robot Handover through Human-Human Object Transfer

Nov 21, 2023

Abstract: In this preliminary study, we investigate changes in handover behaviour when transferring hazardous objects with the help of a high-resolution touch sensor. Participants were asked to hand over a safe and a hazardous object (an empty cup and a full cup, respectively) while instrumented with a modified STS sensor. Our data show a clear difference in handover duration between the full cup and the empty one, with the former being slower. Sensor data further suggest a change in tactile behaviour dependent on the object's risk factor. The results of this paper motivate a deeper study of tactile factors which could characterize a risky handover, allowing for safer human-robot interactions in the future.

A Long Horizon Planning Framework for Manipulating Rigid Pointcloud Objects

Nov 16, 2020

Abstract: We present a framework for solving long-horizon planning problems involving manipulation of rigid objects that operates directly from a point-cloud observation, i.e. without prior object models. Our method plans in the space of object subgoals and frees the planner from reasoning about robot-object interaction dynamics by relying on a set of generalizable manipulation primitives. We show that for rigid bodies, this abstraction can be realized using low-level manipulation skills that maintain sticking contact with the object and represent subgoals as 3D transformations. To enable generalization to unseen objects and improve planning performance, we propose a novel way of representing subgoals for rigid-body manipulation and a graph-attention based neural network architecture for processing point-cloud inputs. We experimentally validate these choices using simulated and real-world experiments on the YuMi robot. Results demonstrate that our method can successfully manipulate new objects into target configurations requiring long-term planning. Overall, our framework realizes the best of both task-and-motion planning (TAMP) and learning-based approaches. Project website: https://anthonysimeonov.github.io/rpo-planning-framework/.

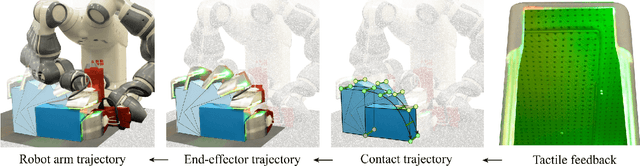

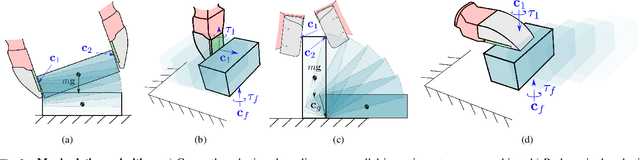

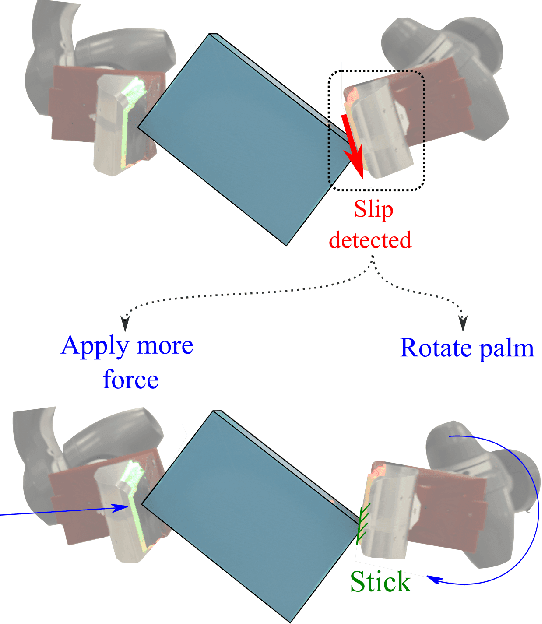

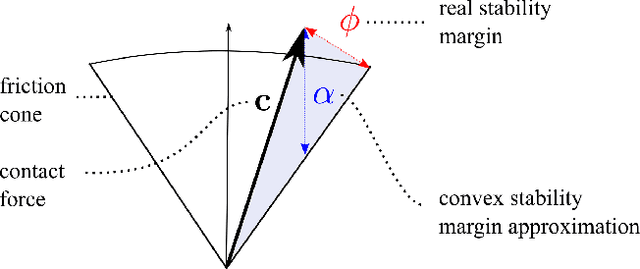

Tactile Dexterity: Manipulation Primitives with Tactile Feedback

Feb 08, 2020

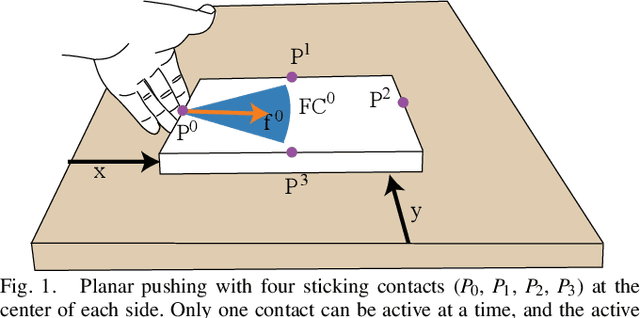

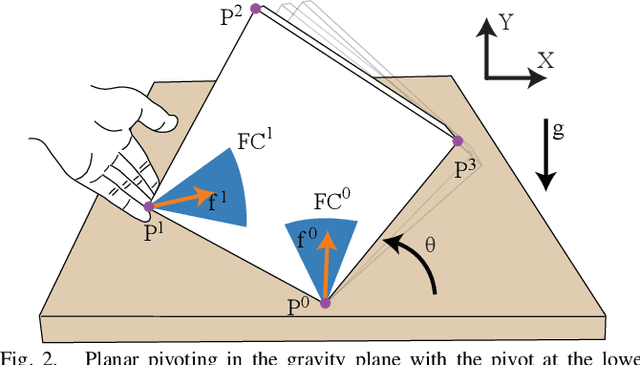

Abstract: This paper develops closed-loop tactile controllers for dexterous manipulation with dual-arm robotic palms. Tactile dexterity is an approach to dexterous manipulation that plans for robot/object interactions that render interpretable tactile information for control. We divide the role of tactile control into two goals: 1) control the contact state between the end-effector and the object (contact/no-contact, stick/slip, forces) and 2) control the object state by tracking the object with a tactile-based state estimator. Key to this formulation is the decomposition of manipulation plans into sequences of manipulation primitives with simple mechanics and efficient planners. We consider the scenario of manipulating an object from an initial pose to a target pose on a flat surface while correcting for external perturbations and uncertainty in the initial pose of the object. We validate the approach with an ABB YuMi dual-arm robot and demonstrate the ability of the tactile controller to handle external perturbations.

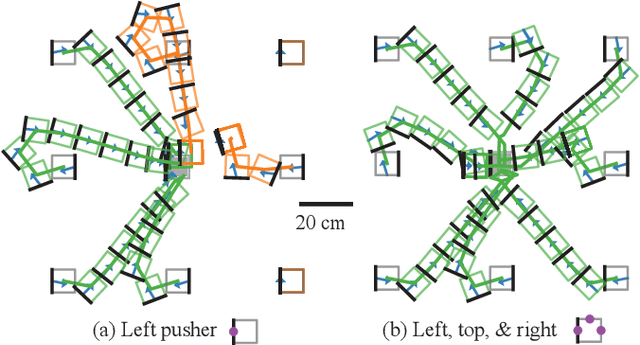

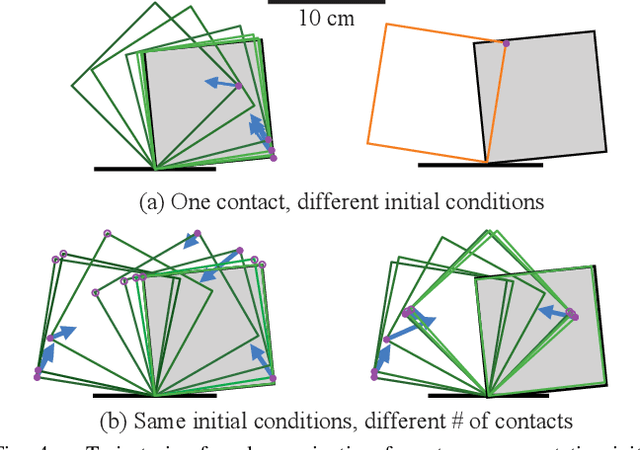

Hybrid Differential Dynamic Programming for Planar Manipulation Primitives

Nov 10, 2019

Abstract: We present a hybrid differential dynamic programming algorithm for closed-loop execution of manipulation primitives with frictional contact switches. Planning and control of these primitives is challenging as they are hybrid, under-actuated, and stochastic. We address this by planning a trajectory over a finite horizon, considering a small number of contact switches, and generating a stabilizing controller. We evaluate the performance and computational cost of our framework in ablation studies for two primitives: planar pushing and planar pivoting. We can plan pose-to-pose trajectories from most configurations with only a couple (one to two) hybrid switches and in reasonable time (one to five seconds). We further demonstrate that our controller stabilizes these hybrid trajectories on a real pushing system. A video describing our work can be found at https://youtu.be/YGSe4cUfq6Q.

A Data-Efficient Approach to Precise and Controlled Pushing

Oct 09, 2018

Abstract: Decades of research in control theory have shown that simple controllers, when provided with timely feedback, can control complex systems. Pushing is an example of a complex mechanical system that is difficult to model accurately due to unknown system parameters such as coefficients of friction and pressure distributions. In this paper, we explore the data-complexity required for controlling, rather than modeling, such a system. Results show that a model-based control approach, where the dynamical model is learned from data, is capable of performing complex pushing trajectories with a minimal amount of training data (10 data points). The dynamics of pushing interactions are modeled using a Gaussian process (GP) and are leveraged within a model predictive control approach that linearizes the GP and imposes actuator and task constraints for a planar manipulation task.

* Maria Bauza and Francois R. Hogan contributed equally to this work. 10 pages, 5 figures

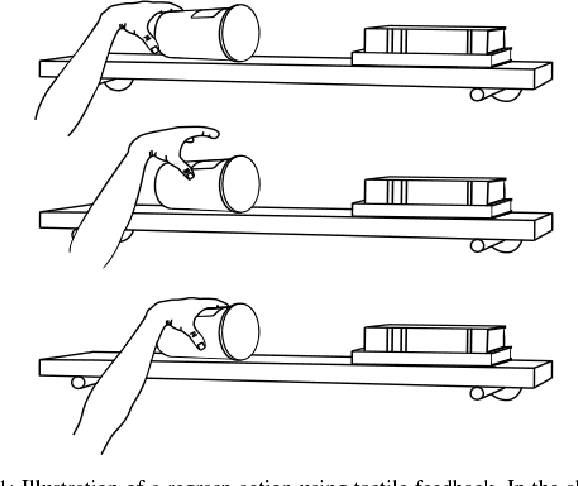

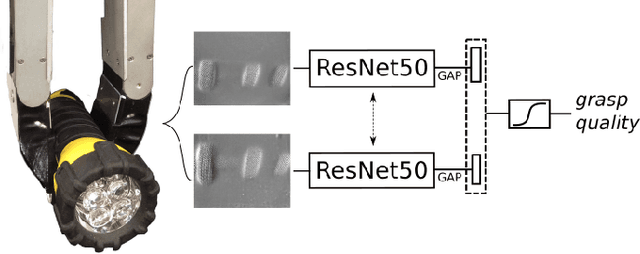

Tactile Regrasp: Grasp Adjustments via Simulated Tactile Transformations

Oct 09, 2018

Abstract: This paper presents a novel regrasp control policy that makes use of tactile sensing to plan local grasp adjustments. Our approach determines regrasp actions by virtually searching for local transformations of tactile measurements that improve the quality of the grasp. First, we construct a tactile-based grasp quality metric using a deep convolutional neural network trained on over 2800 grasps. The quality of each grasp, a continuous value between 0 and 1, is determined experimentally by measuring its resistance to external perturbations. Second, we simulate the tactile imprints associated with robot motions relative to the initial grasp by performing rigid-body transformations of the given tactile measurements. The newly generated tactile imprints are evaluated with the learned grasp quality network and the regrasp action is chosen to maximize the grasp quality. Results show that the grasp quality network can predict the outcome of grasps with an average accuracy of 85% on known objects and 75% on a cross validation set of 12 objects. The regrasp control policy improves the success rate of grasp actions by an average relative increase of 70% on a test set of 8 objects.

* Francois R. Hogan and Maria Bauza contributed equally to this work. 8 pages, 7 figures
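The regrasp search described in the abstract above (transform the tactile imprint, score each candidate, keep the best) can be sketched as follows. This is an illustration under strong assumptions, not the paper's method: `centered_quality` is a hypothetical stand-in for the learned grasp quality network, only integer translations are searched (no rotations), and the mapping from an imprint shift to an actual gripper motion is not modelled.

```python
from typing import Callable, List, Tuple

Imprint = List[List[float]]  # hypothetical 2D grid of contact intensities


def shift(imprint: Imprint, dr: int, dc: int) -> Imprint:
    """Translate the imprint by (dr, dc) cells, filling vacated cells with 0."""
    rows, cols = len(imprint), len(imprint[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                out[nr][nc] = imprint[r][c]
    return out


def centered_quality(imprint: Imprint) -> float:
    """Stand-in quality score in [0, 1]: higher when contact is near the centre."""
    rows, cols = len(imprint), len(imprint[0])
    cr, cc = (rows - 1) / 2, (cols - 1) / 2
    total = sum(sum(row) for row in imprint)
    if total == 0:
        return 0.0  # no contact at all
    max_dist = cr + cc
    if max_dist == 0:
        return 1.0  # single-cell sensor: contact is trivially centred
    mean_dist = sum(
        imprint[r][c] * (abs(r - cr) + abs(c - cc))
        for r in range(rows)
        for c in range(cols)
    ) / total
    return 1.0 - mean_dist / max_dist


def best_regrasp(
    imprint: Imprint,
    quality: Callable[[Imprint], float],
    max_shift: int = 1,
) -> Tuple[int, int]:
    """Return the imprint shift (dr, dc) whose simulated imprint scores highest."""
    candidates = [
        (dr, dc)
        for dr in range(-max_shift, max_shift + 1)
        for dc in range(-max_shift, max_shift + 1)
    ]
    return max(candidates, key=lambda a: quality(shift(imprint, a[0], a[1])))


# Toy 5x5 imprint with a single off-centre contact at row 1, column 1.
imprint = [[0.0] * 5 for _ in range(5)]
imprint[1][1] = 1.0
action = best_regrasp(imprint, centered_quality)
```

In this toy case the search selects the shift that moves the contact onto the centre cell. Swapping `centered_quality` for a trained model would leave the search loop unchanged.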

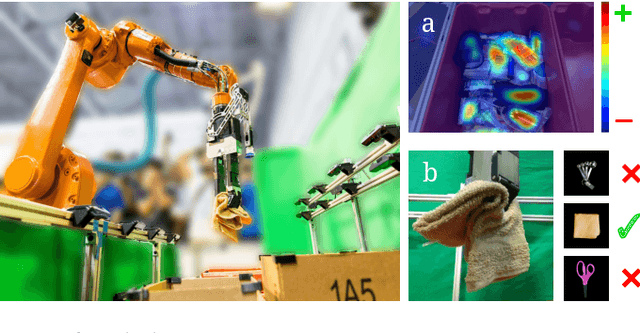

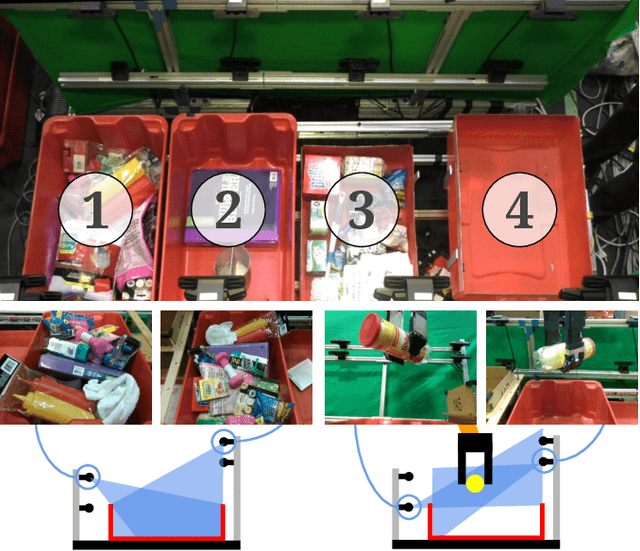

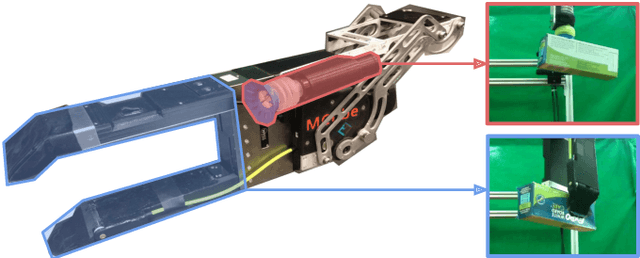

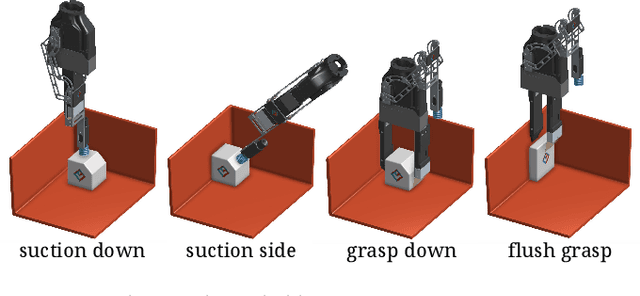

Robotic Pick-and-Place of Novel Objects in Clutter with Multi-Affordance Grasping and Cross-Domain Image Matching

Apr 01, 2018

Abstract: This paper presents a robotic pick-and-place system that is capable of grasping and recognizing both known and novel objects in cluttered environments. The key new feature of the system is that it handles a wide range of object categories without needing any task-specific training data for novel objects. To achieve this, it first uses a category-agnostic affordance prediction algorithm to select and execute among four different grasping primitive behaviors. It then recognizes picked objects with a cross-domain image classification framework that matches observed images to product images. Since product images are readily available for a wide range of objects (e.g., from the web), the system works out-of-the-box for novel objects without requiring any additional training data. Exhaustive experimental results demonstrate that our multi-affordance grasping achieves high success rates for a wide variety of objects in clutter, and our recognition algorithm achieves high accuracy for both known and novel grasped objects. The approach was part of the MIT-Princeton Team system that took 1st place in the stowing task at the 2017 Amazon Robotics Challenge. All code, datasets, and pre-trained models are available online at http://arc.cs.princeton.edu
