Elliott Donlon

Dense Tactile Force Distribution Estimation using GelSlim and inverse FEM

Apr 08, 2019

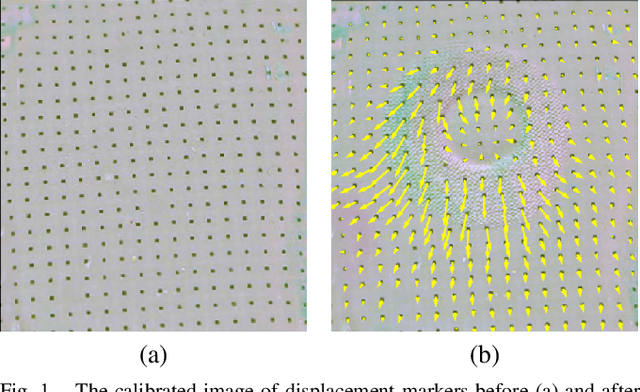

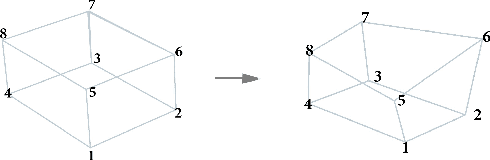

Abstract: In this paper, we present GelSlim 2.0, a new version of the GelSlim tactile sensor that can estimate the contact force distribution in real time. The sensor is vision-based and uses an array of markers to track deformations of a gel pad due to contact. A new hardware design makes the sensor more rugged and parametrically adjustable, and improves illumination. Leveraging the sensor's increased functionality, we propose to use the inverse Finite Element Method (iFEM), a numerical method that reconstructs the contact force distribution from marker displacements. The sensor provides the contact force distribution with high spatial density. Experiments and comparison with ground truth show that the reconstructed force distribution is physically reasonable with good accuracy.
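As a rough illustration of recovering forces from measured displacements, the sketch below assumes a linear elastic model in which nodal forces follow from displacements as f = K u, with K a stiffness matrix. The 1D spring chain, the function names, and the stiffness value are hypothetical stand-ins chosen for brevity; they are not the sensor's actual finite element model, which the abstract does not specify.

```python
def chain_stiffness(n, k):
    """Stiffness matrix of n nodes in a 1D chain: node 0 is tied to ground
    by a spring of stiffness k, and consecutive nodes are linked by springs
    of the same stiffness (toy stand-in for a gel-pad FEM mesh)."""
    K = [[0.0] * n for _ in range(n)]
    for i in range(n):
        # Every node feels the spring on its left; all but the last also
        # feel a spring on their right.
        K[i][i] = 2.0 * k if i < n - 1 else k
        if i > 0:
            K[i][i - 1] = -k
        if i < n - 1:
            K[i][i + 1] = -k
    return K


def forces_from_displacements(K, u):
    """Recover nodal forces f = K u from measured nodal displacements u."""
    return [sum(K_ij * u_j for K_ij, u_j in zip(row, u)) for row in K]


# Hypothetical measurement: displacements consistent with a single force
# applied at the middle node.
K = chain_stiffness(3, k=2.0)
f = forces_from_displacements(K, [0.1, 0.2, 0.2])
print(f)
```

In this toy case the recovered force is concentrated on the middle node, which matches the intuition that only the springs between the ground and that node are stretched.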

Maintaining Grasps within Slipping Bound by Monitoring Incipient Slip

Oct 31, 2018

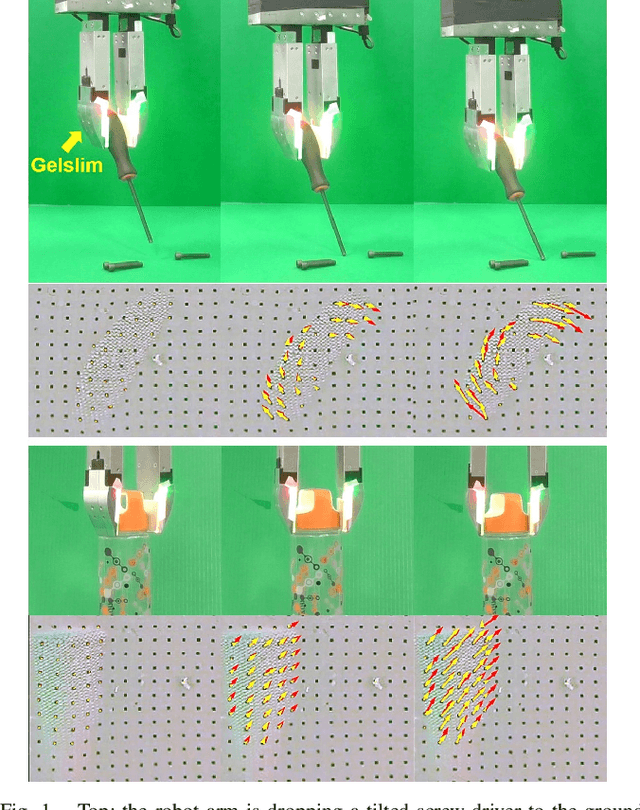

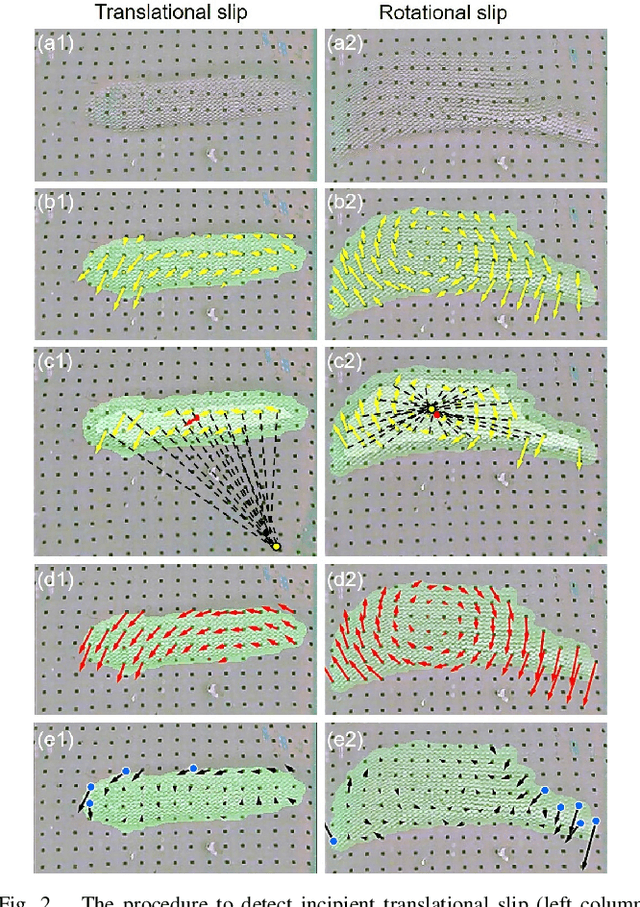

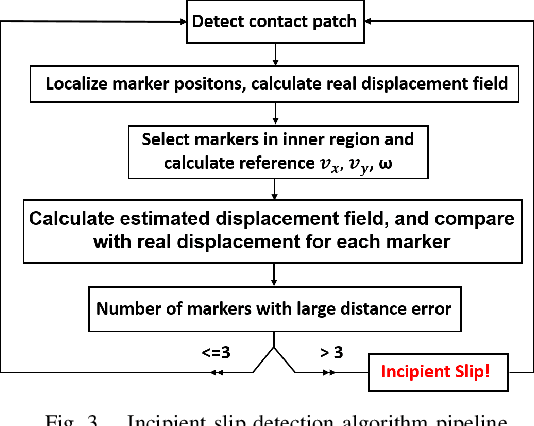

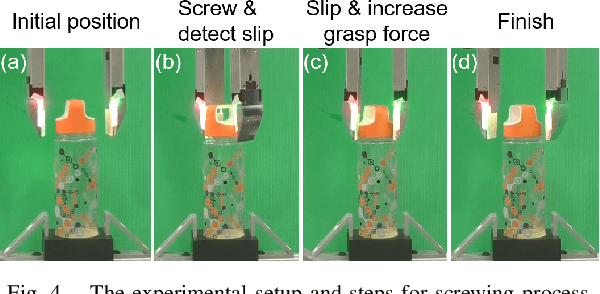

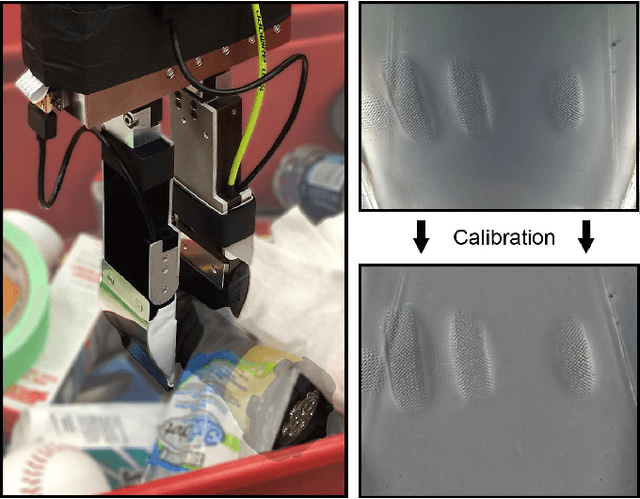

Abstract: In this paper, we propose an approach to detect incipient slip, i.e., to predict slip, using a high-resolution vision-based tactile sensor, GelSlim. The sensor uses a soft gel pad to dynamically capture the tactile imprints of the contacted object and their changes. The method assumes the object is mostly rigid and treats the motion of the object's imprint on the sensor surface as a 2D rigid-body motion. We use the deviation of the true motion field from that of a 2D planar rigid transformation as a measure of slip. The output is a dense slip field, which we use to detect when small areas of the contact patch start to slip (incipient slip). The method can detect both translational and rotational incipient slip at 24 Hz without any prior knowledge of the object. We test the method on 10 objects 240 times and achieve 86.25% detection accuracy. We further show how the slip feedback can be used to monitor the gripping force and avoid slip in a closed-loop bottle-cap screwing and unscrewing experiment. The method was demonstrated to help the robot apply a proper gripping force and stop screwing at the right point, before breaking objects. The method can be applied to many manipulation tasks in both structured and unstructured environments.
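The slip measure described above can be sketched as follows: fit the best 2D rigid transformation to the tracked point motion in the least-squares sense, then take each point's residual from that fit as its slip magnitude. This is a minimal sketch under assumptions; the function names and the example points are hypothetical, and the closed-form 2D fit used here is one standard way to obtain the rigid transformation, not necessarily the one used in the paper.

```python
import math


def fit_rigid_2d(src, dst):
    """Least-squares 2D rigid transform (rotation theta, translation tx, ty)
    mapping the points in src onto the points in dst."""
    n = len(src)
    cx_s = sum(p[0] for p in src) / n
    cy_s = sum(p[1] for p in src) / n
    cx_d = sum(p[0] for p in dst) / n
    cy_d = sum(p[1] for p in dst) / n
    s_dot = 0.0    # sum of dot products of centered point pairs
    s_cross = 0.0  # sum of 2D cross products of centered point pairs
    for (xs, ys), (xd, yd) in zip(src, dst):
        ax, ay = xs - cx_s, ys - cy_s
        bx, by = xd - cx_d, yd - cy_d
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    tx = cx_d - (c * cx_s - s * cy_s)
    ty = cy_d - (s * cx_s + c * cy_s)
    return theta, tx, ty


def slip_field(src, dst):
    """Per-point deviation of the observed motion from the fitted rigid motion."""
    theta, tx, ty = fit_rigid_2d(src, dst)
    c, s = math.cos(theta), math.sin(theta)
    residuals = []
    for (xs, ys), (xd, yd) in zip(src, dst):
        px = c * xs - s * ys + tx
        py = s * xs + c * ys + ty
        residuals.append(math.hypot(xd - px, yd - py))
    return residuals


# Hypothetical tracked points: three move rigidly, one (index 2) drifts.
before = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
after = [(0.0, 0.0), (1.0, 0.0), (1.2, 1.0), (0.0, 1.0)]
residuals = slip_field(before, after)
print(residuals.index(max(residuals)))
```

A detector could then flag incipient slip when the residuals in some region exceed a threshold; the threshold itself would need to be tuned and is not given in the abstract.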

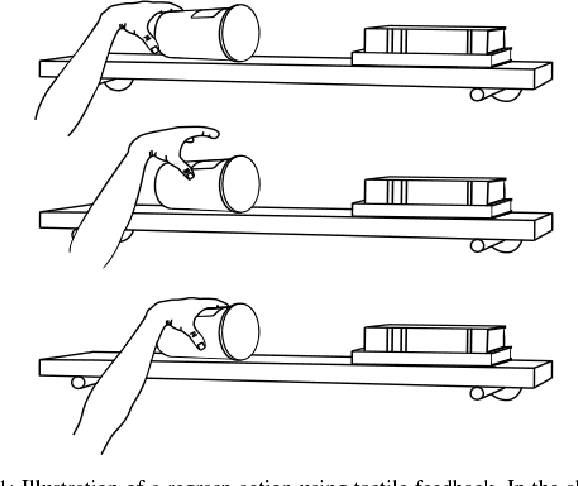

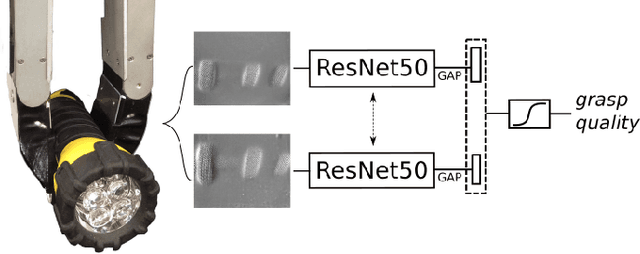

Tactile Regrasp: Grasp Adjustments via Simulated Tactile Transformations

Oct 09, 2018

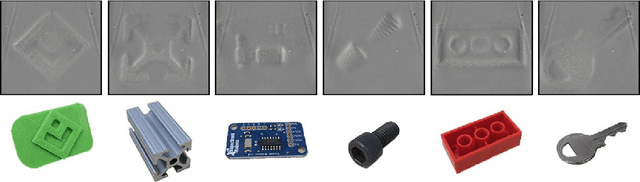

Abstract: This paper presents a novel regrasp control policy that makes use of tactile sensing to plan local grasp adjustments. Our approach determines regrasp actions by virtually searching for local transformations of tactile measurements that improve the quality of the grasp. First, we construct a tactile-based grasp quality metric using a deep convolutional neural network trained on over 2800 grasps. The quality of each grasp, a continuous value between 0 and 1, is determined experimentally by measuring its resistance to external perturbations. Second, we simulate the tactile imprints associated with robot motions relative to the initial grasp by performing rigid-body transformations of the given tactile measurements. The newly generated tactile imprints are evaluated with the learned grasp quality network and the regrasp action is chosen to maximize the grasp quality. Results show that the grasp quality network can predict the outcome of grasps with an average accuracy of 85% on known objects and 75% on a cross-validation set of 12 objects. The regrasp control policy improves the success rate of grasp actions by an average relative increase of 70% on a test set of 8 objects.

* Francois R. Hogan and Maria Bauza contributed equally to this work. 8 pages, 7 figures
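The search over simulated tactile transformations can be sketched as a small loop: transform the current imprint, score each candidate with a quality function, and keep the best-scoring action. Everything in this sketch is an illustrative assumption. The imprint is a small grid, the transformations are limited to integer translations (the paper uses rigid-body transformations), and `toy_quality` is a hand-written stand-in for the learned grasp quality network.

```python
import math


def shift_imprint(imprint, dx, dy):
    """Translate a 2D imprint by (dx, dy) cells, filling vacated cells with 0."""
    rows, cols = len(imprint), len(imprint[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            nr, nc = r + dy, c + dx
            if 0 <= nr < rows and 0 <= nc < cols:
                out[nr][nc] = imprint[r][c]
    return out


def toy_quality(imprint):
    """Hypothetical score in [0, 1]: higher when the contact mass is centered."""
    rows, cols = len(imprint), len(imprint[0])
    total = sum(sum(row) for row in imprint)
    if total == 0:
        return 0.0
    cr = sum(r * v for r, row in enumerate(imprint) for v in row) / total
    cc = sum(c * v for row in imprint for c, v in enumerate(row)) / total
    center_r, center_c = (rows - 1) / 2, (cols - 1) / 2
    dist = math.hypot(cr - center_r, cc - center_c)
    return 1.0 - dist / math.hypot(center_r, center_c)


def best_regrasp(imprint, quality, max_shift=2):
    """Return the (dx, dy) shift with the highest predicted quality, and that quality."""
    best, best_q = (0, 0), quality(imprint)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            q = quality(shift_imprint(imprint, dx, dy))
            if q > best_q:
                best, best_q = (dx, dy), q
    return best, best_q


# Hypothetical 5x5 imprint with contact only in the top-left corner.
imprint = [[0.0] * 5 for _ in range(5)]
imprint[0][0] = 1.0
print(best_regrasp(imprint, toy_quality))
```

Passing the quality function as an argument keeps the search independent of how quality is predicted, so a learned model could be substituted for the toy score.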

GelSlim: A High-Resolution, Compact, Robust, and Calibrated Tactile-sensing Finger

May 15, 2018

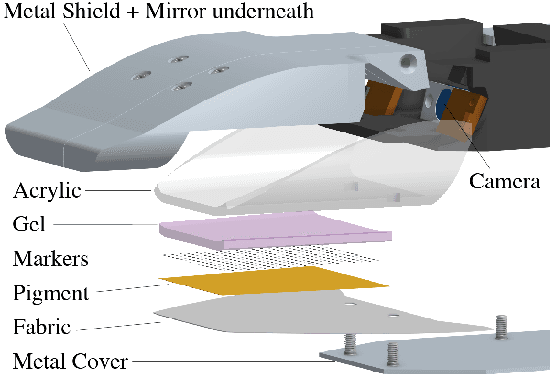

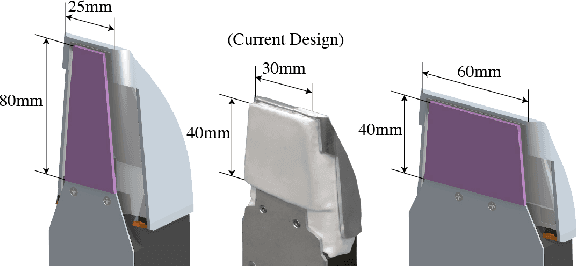

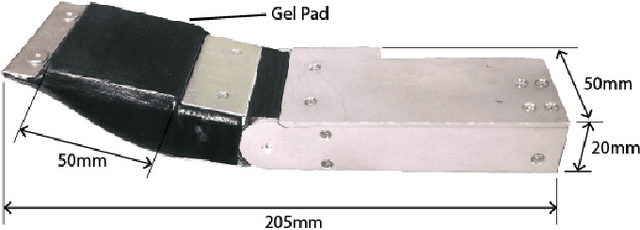

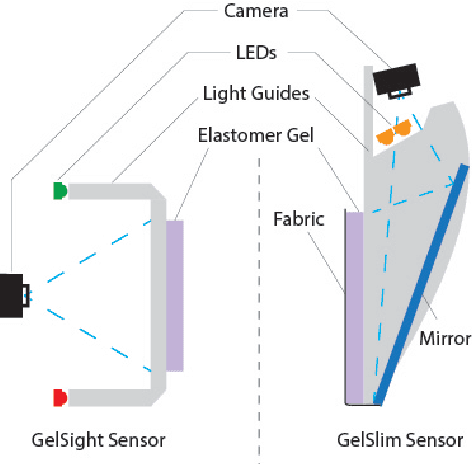

Abstract: This work describes the development of a high-resolution tactile-sensing finger for robot grasping. This finger, inspired by previous GelSight sensing techniques, features an integration that is slimmer, more robust, and with more homogeneous output than previous vision-based tactile sensors. To achieve a compact integration, we redesign the optical path from illumination source to camera by combining light guides and an arrangement of mirror reflections. We parameterize the optical path with geometric design variables and describe the tradeoffs between the finger thickness, the depth of field of the camera, and the size of the tactile sensing area. The sensor sustains the wear from continuous use -- and abuse -- in grasping tasks by combining tougher materials for the compliant soft gel, a textured fabric skin, a structurally rigid body, and a calibration process that maintains homogeneous illumination and contrast of the tactile images during use. Finally, we evaluate the sensor's durability along four metrics that track the signal quality during more than 3000 grasping experiments.

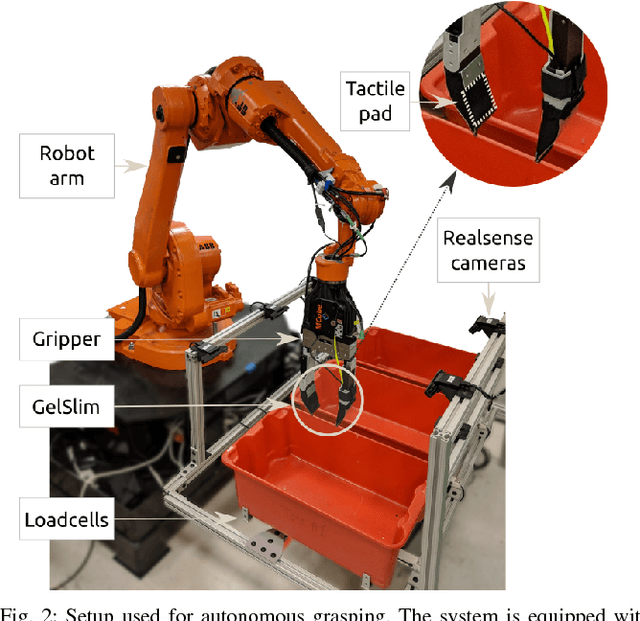

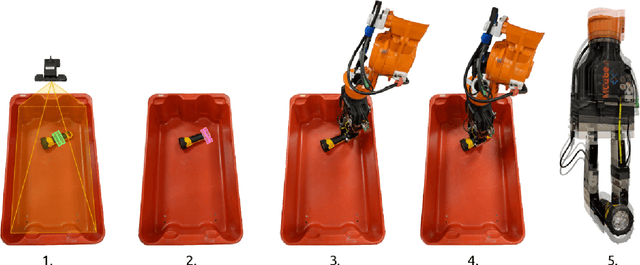

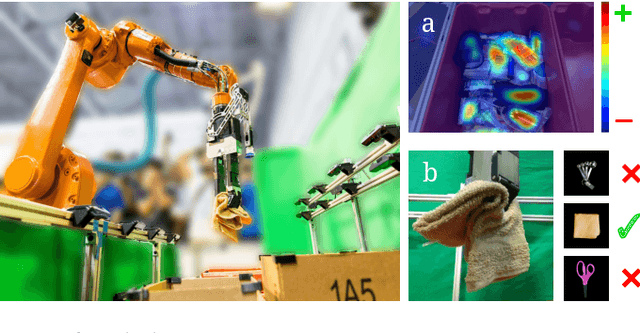

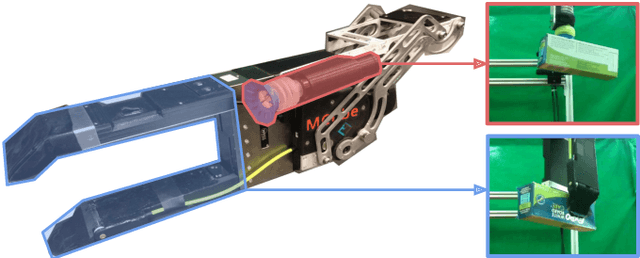

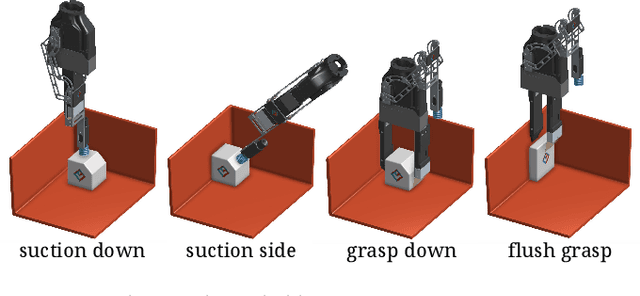

Robotic Pick-and-Place of Novel Objects in Clutter with Multi-Affordance Grasping and Cross-Domain Image Matching

Apr 01, 2018

Abstract: This paper presents a robotic pick-and-place system that is capable of grasping and recognizing both known and novel objects in cluttered environments. The key new feature of the system is that it handles a wide range of object categories without needing any task-specific training data for novel objects. To achieve this, it first uses a category-agnostic affordance prediction algorithm to select and execute among four different grasping primitive behaviors. It then recognizes picked objects with a cross-domain image classification framework that matches observed images to product images. Since product images are readily available for a wide range of objects (e.g., from the web), the system works out-of-the-box for novel objects without requiring any additional training data. Exhaustive experimental results demonstrate that our multi-affordance grasping achieves high success rates for a wide variety of objects in clutter, and our recognition algorithm achieves high accuracy for both known and novel grasped objects. The approach was part of the MIT-Princeton Team system that took 1st place in the stowing task at the 2017 Amazon Robotics Challenge. All code, datasets, and pre-trained models are available online at http://arc.cs.princeton.edu

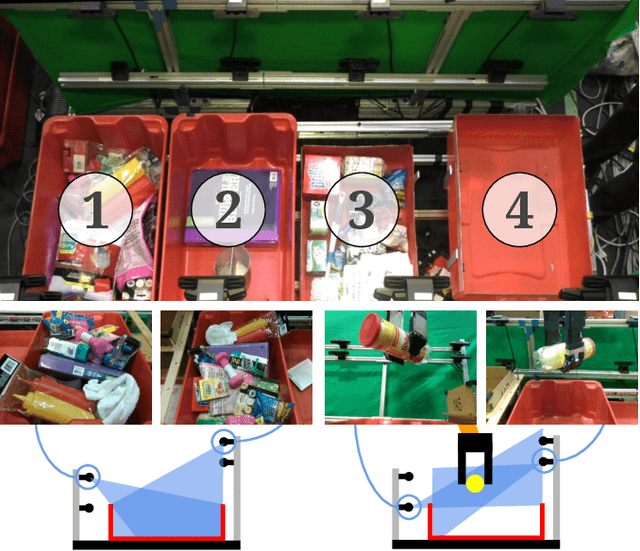

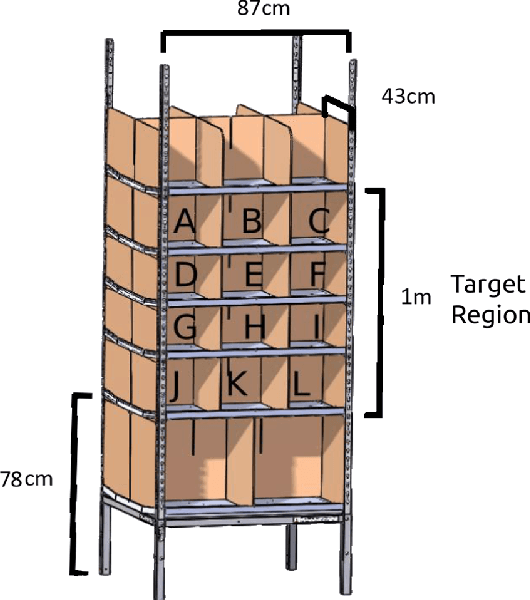

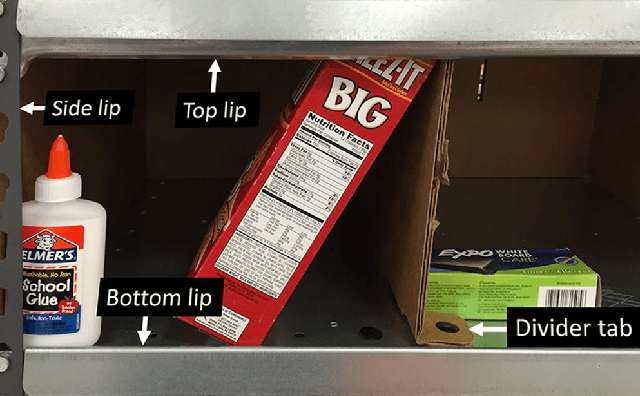

A Summary of Team MIT's Approach to the Amazon Picking Challenge 2015

Apr 13, 2016

Abstract: The Amazon Picking Challenge (APC), held alongside the International Conference on Robotics and Automation in May 2015 in Seattle, challenged roboticists from academia and industry to demonstrate fully automated solutions to the problem of picking objects from shelves in a warehouse fulfillment scenario. Packing density, object variability, speed, and reliability are the main complexities of the task. The picking challenge serves both as a motivation and an instrument to focus research efforts on a specific manipulation problem. In this document, we describe Team MIT's approach to the competition, including design considerations, contributions, and performance, and we compile the lessons learned. We also describe what we think are the main remaining challenges.
