Ravi Tejwani

Cross-Modality Embedding of Force and Language for Natural Human-Robot Communication

Feb 04, 2025

Abstract:A method for cross-modality embedding of force profile and words is presented for synergistic coordination of verbal and haptic communication. When two people carry a large, heavy object together, they coordinate through verbal communication about the intended movements and physical forces applied to the object. This natural integration of verbal and physical cues enables effective coordination. Similarly, human-robot interaction could achieve this level of coordination by integrating verbal and haptic communication modalities. This paper presents a framework for embedding words and force profiles in a unified manner, so that the two communication modalities can be integrated and coordinated in a way that is effective and synergistic. Here, it will be shown that, although language and physical force profiles are deemed completely different, the two can be embedded in a unified latent space and proximity between the two can be quantified. In this latent space, a force profile and words can a) supplement each other, b) integrate the individual effects, and c) substitute in an exchangeable manner. First, the need for cross-modality embedding is addressed, and the basic architecture and key building block technologies are presented. Methods for data collection and implementation challenges will be addressed, followed by experimental results and discussions.

Language Control in Robotics

May 04, 2023

Abstract:For robots performing assistive tasks for humans, it is crucial to synchronize their speech with their motions in order to achieve natural and effective human-robot interaction. When a robot's speech is out of sync with its motions, it can cause confusion, frustration, and misinterpretation of the robot's intended meaning. Humans are accustomed to using both verbal and nonverbal cues to understand and coordinate with each other, and robots that can align their speech with their actions can tap into this natural mode of communication. In this research, we propose a language controller for robots that controls the pace, tone, and pauses of their speech in step with their motion along the trajectory. The robot's speed is adjusted using an admittance controller based on the force input from the user, and the robot's speech speed is modulated using phase vocoders.
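The coupling described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the first-order admittance law `M * dv/dt + B * v = F`, the parameter values, and the helper names `admittance_step`, `speech_rate`, and `simulate` are all assumptions chosen for illustration. The phase-vocoder stage is left out; the sketch only computes the time-stretch factor that such a stage would receive.

```python
def admittance_step(velocity, force, mass=2.0, damping=8.0, dt=0.01):
    """One explicit-Euler step of the assumed admittance law M*dv/dt + B*v = F."""
    acceleration = (force - damping * velocity) / mass
    return velocity + acceleration * dt


def speech_rate(velocity, nominal_velocity=0.25, min_rate=0.5, max_rate=2.0):
    """Map robot speed to a speech time-stretch factor (hypothetical linear map, clamped)."""
    rate = abs(velocity) / nominal_velocity
    return max(min_rate, min(max_rate, rate))


def simulate(forces, dt=0.01):
    """Run the admittance law over a force sequence; return (velocity, rate) pairs."""
    velocity = 0.0
    trace = []
    for force in forces:
        velocity = admittance_step(velocity, force, dt=dt)
        trace.append((velocity, speech_rate(velocity)))
    return trace


# A constant 2 N push: velocity settles near F / B = 0.25 m/s,
# which this sketch treats as the nominal speed (rate factor close to 1).
trace = simulate([2.0] * 1000)
final_velocity, final_rate = trace[-1]
```

Under these assumptions a harder push raises the robot's speed and, through the same signal, the speech rate, so both stay tied to the user's force input.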

An Avatar Robot Overlaid with the 3D Human Model of a Remote Operator

Mar 05, 2023

Abstract:Although telepresence assistive robots have made significant progress, they still lack the sense of realism and physical presence of the remote operator. This results in a lack of trust in, and adoption of, such robots. In this paper, we introduce an Avatar Robot System, a mixed real/virtual robotic system that physically interacts with a person in proximity to the robot. The robot structure is overlaid with the 3D model of the remote caregiver and visualized through Augmented Reality (AR). In this way, the person receives haptic feedback as the robot touches him/her. We further present an Optimal Non-Iterative Alignment solver that solves non-iteratively for the optimally aligned pose of the 3D human model to the robot (shoulder to wrist). The proposed alignment solver is stateless, achieves optimal alignment, and is faster than the baseline solvers (as demonstrated in our evaluations). We also propose an evaluation framework that quantifies the alignment quality of the solvers through multifaceted metrics. We show that our solver consistently produces poses with alignments similar or superior to those of IK-based baselines, without their potential drawbacks.

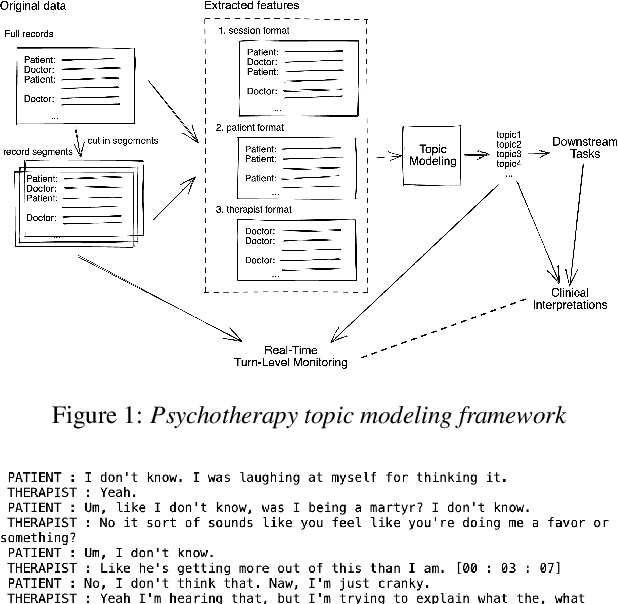

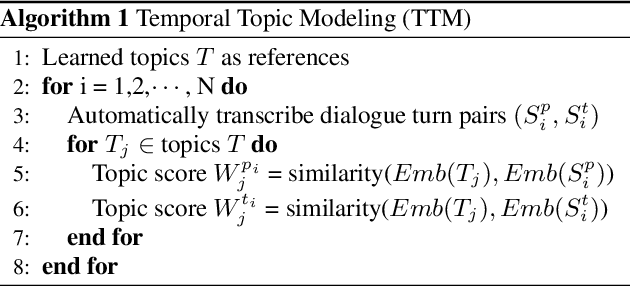

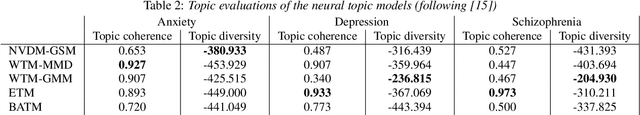

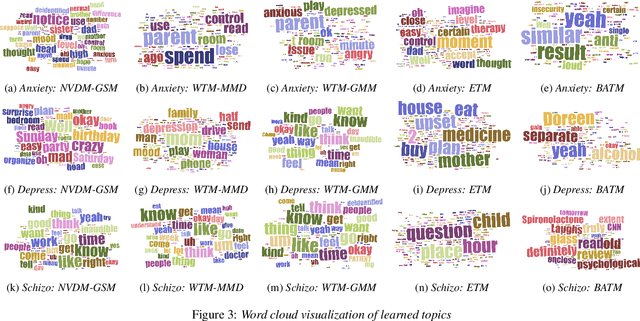

Neural Topic Modeling of Psychotherapy Sessions

Apr 13, 2022

Abstract:In this work, we compare different neural topic modeling methods in learning the topical propensities of different psychiatric conditions from the psychotherapy session transcripts parsed from speech recordings. We also incorporate temporal modeling to put this additional interpretability to action by parsing out topic similarities as a time series in a turn-level resolution. We believe this topic modeling framework can offer interpretable insights for the therapist to optimally decide his or her strategy and improve the psychotherapy effectiveness.

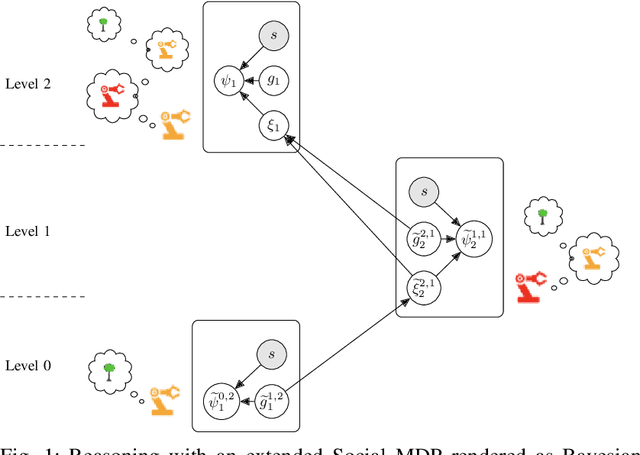

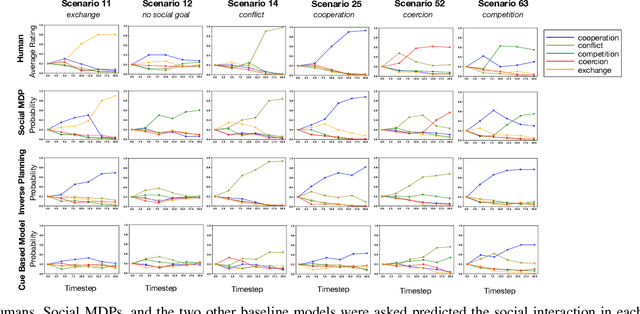

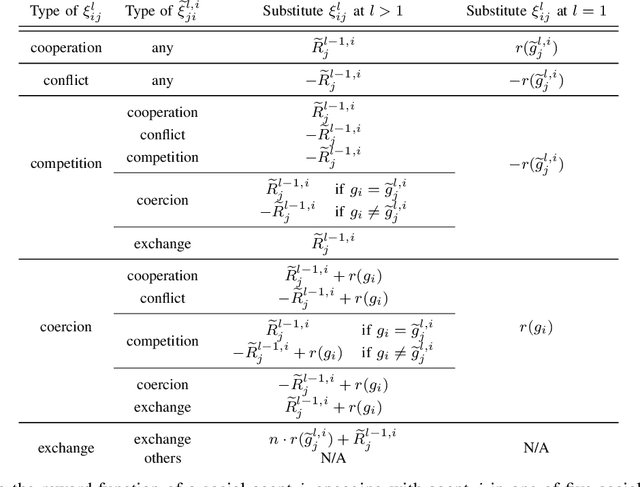

Incorporating Rich Social Interactions Into MDPs

Oct 22, 2021

Abstract:Much of what we do as humans is engage socially with other agents, a skill that robots must also eventually possess. We demonstrate that a rich theory of social interactions originating from microsociology and economics can be formalized by extending a nested MDP where agents reason about arbitrary functions of each other's hidden rewards. This extended Social MDP allows us to encode the five basic interactions that underlie microsociology: cooperation, conflict, coercion, competition, and exchange. The result is a robotic agent capable of executing social interactions zero-shot in new environments; like humans it can engage socially in novel ways even without a single example of that social interaction. Moreover, the judgments of these Social MDPs align closely with those of humans when considering which social interaction is taking place in an environment. This method both sheds light on the nature of social interactions, by providing concrete mathematical definitions, and brings rich social interactions into a mathematical framework that has proven to be natural for robotics, MDPs.

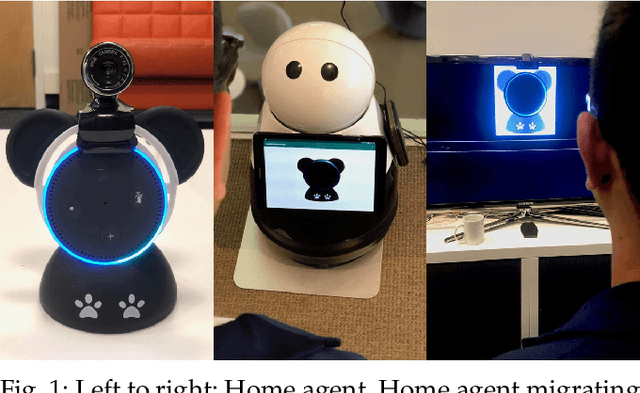

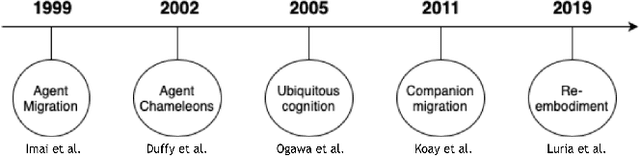

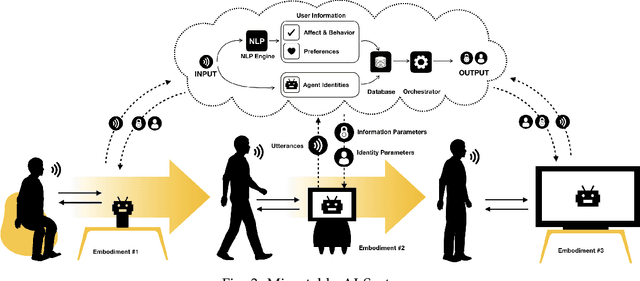

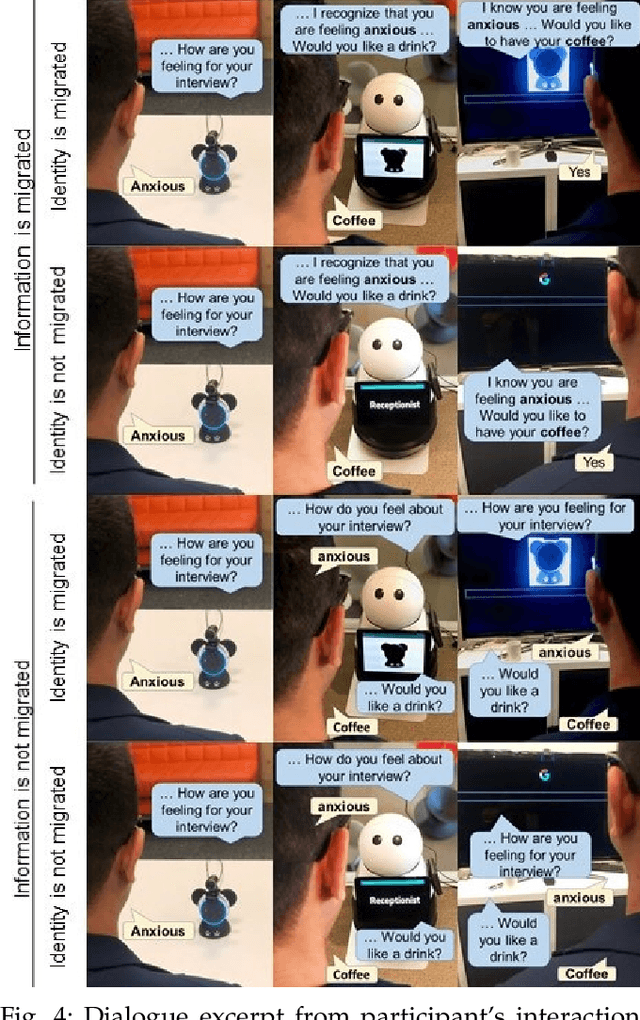

Migratable AI: Investigating users' affect on identity and information migration of a conversational AI agent

Oct 22, 2020

Abstract:Conversational AI agents are becoming ubiquitous and provide assistance to us in our everyday activities. In recent years, researchers have explored the migration of these agents across different embodiments in order to maintain the continuity of the task and improve user experience. In this paper, we investigate users' affective responses in different configurations of the migration parameters. We present a 2x2 between-subjects study in a task-based scenario using information migration and identity migration as parameters. We outline the affect processing pipeline applied to the video footage collected during the study and report users' responses in each condition. Our results show that users reported the highest joy and were most surprised when both the information and the identity were migrated, and reported the most anger when the information was migrated without the identity of their agent.

Migratable AI: Personalizing Dialog Conversations with migration context

Oct 22, 2020

Abstract:The migration of conversational AI agents across different embodiments in order to maintain the continuity of the task has recently been explored to further improve user experience. However, these migratable agents lack contextual understanding of the user information and the migrated device during dialog conversations with the user. This opens the question of how an agent might behave when migrated into an embodiment for contextually predicting the next utterance. We collected a dataset from dialog conversations between crowdsourced workers, with a migration context involving personal and non-personal utterances in different settings (public or private) of the embodiment into which the agent migrated. We trained generative and information retrieval models on the dataset with and without migration context and report the results of both qualitative metrics and human evaluation. We believe that the migration dataset will be useful for training future migratable AI systems.

Migratable AI

Jul 11, 2020

Abstract:Conversational AI agents are proliferating, embodying a range of devices such as smart speakers, smart displays, robots, cars, and more. We can envision a future where a personal conversational agent could migrate across different form factors and environments to always accompany and assist its user, supporting a far more continuous, personalized, and collaborative experience. This opens the question of what properties of a conversational AI agent migrate across forms, and how this would impact user perception. To explore this, we developed a Migratable AI system where a user's information and/or the agent's identity can be preserved as it migrates across form factors to help its user with a task. We designed a 2x2 between-subjects study to explore the effects of information migration and identity migration on user perceptions of trust, competence, likeability, and social presence. Our results suggest that identity migration had a positive effect on trust, competence, and social presence, while information migration had a positive effect on trust, competence, and likeability. Overall, users reported the highest trust, competence, likeability, and social presence towards the conversational agent when both identity and information were migrated across embodiments.

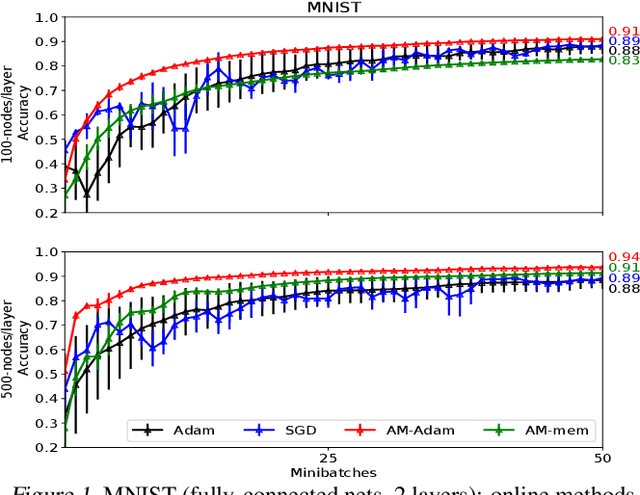

Beyond Backprop: Online Alternating Minimization with Auxiliary Variables

Oct 24, 2018

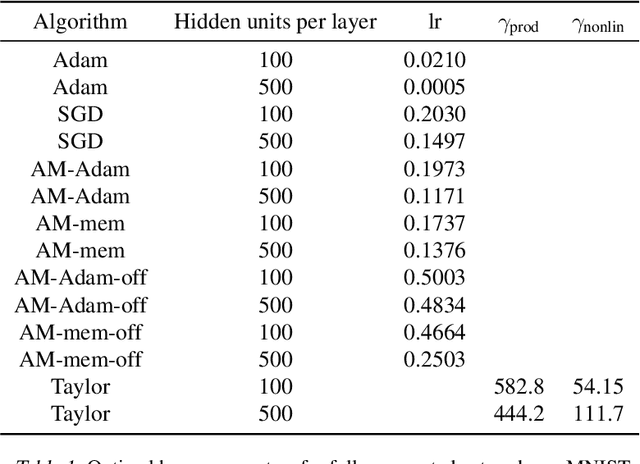

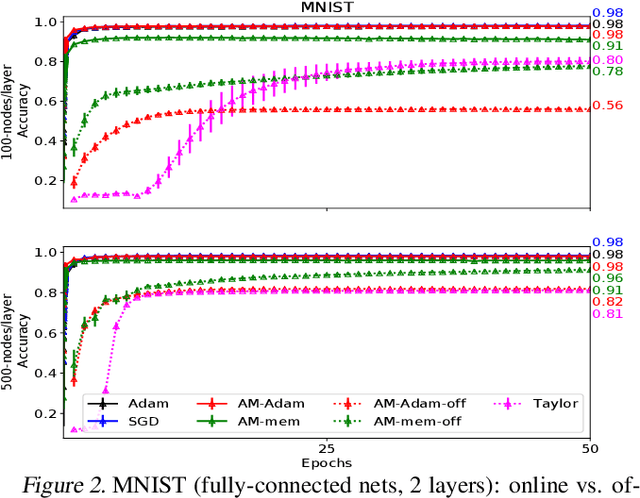

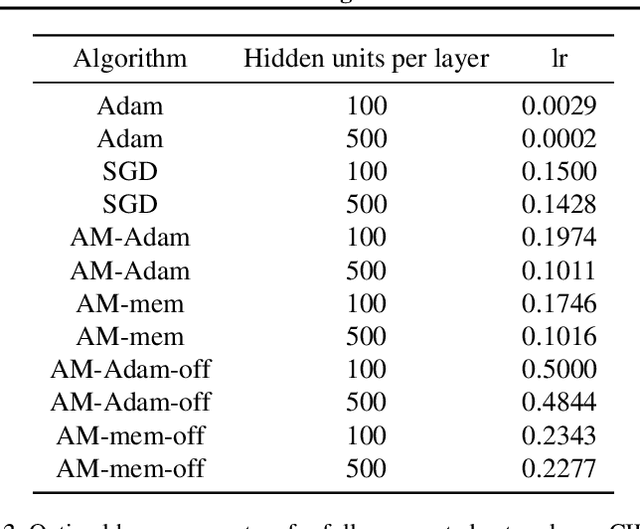

Abstract:We propose a novel online alternating minimization (AltMin) algorithm for training deep neural networks, provide theoretical convergence guarantees, and demonstrate its advantages on several classification tasks as compared both to standard backpropagation with stochastic gradient descent (backprop-SGD) and to offline alternating minimization. The key difference from backpropagation is an explicit optimization over hidden activations, which eliminates gradient chain computation in backprop and breaks the weight training problem into independent, local optimization subproblems; this makes it possible to avoid vanishing gradient issues, simplify the handling of non-differentiable nonlinearities, and perform parallel weight updates across the layers. Moreover, parallel local synaptic weight optimization with explicit activation propagation is a step closer to a more biologically plausible learning model than backpropagation, whose biological implausibility has been frequently criticized. Finally, the online nature of our approach allows it to handle very large datasets, as well as continual, lifelong learning, which is our key contribution on top of recently proposed offline alternating minimization schemes (e.g., (Carreira-Perpinan and Wang 2014), (Taylor et al. 2016)).

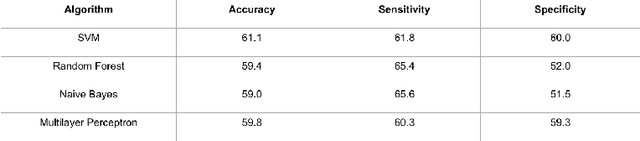

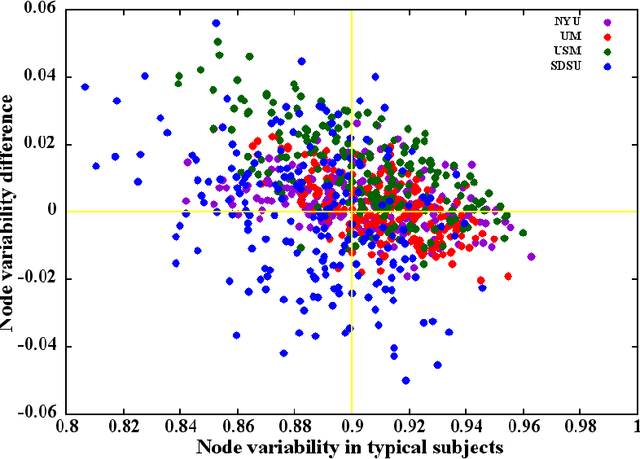

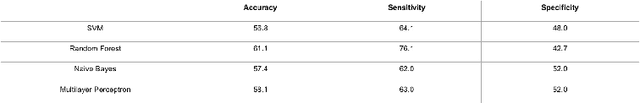

Autism Classification Using Brain Functional Connectivity Dynamics and Machine Learning

Dec 21, 2017

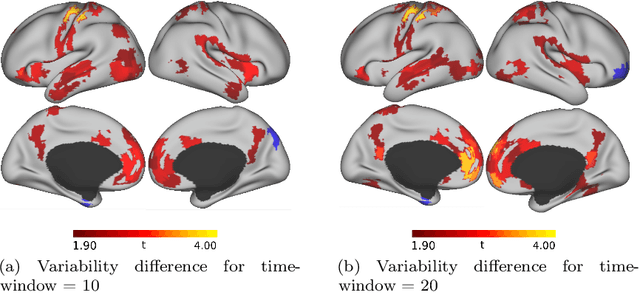

Abstract:The goal of the present study is to identify autism using machine learning techniques and resting-state brain imaging data, leveraging the temporal variability of the functional connections (FC) as the only information. We estimated and compared the FC variability across brain regions between typical, healthy subjects and the autistic population by analyzing brain imaging data from a world-wide multi-site database known as ABIDE (Autism Brain Imaging Data Exchange). Our analysis revealed that patients diagnosed with autism spectrum disorder (ASD) show increased FC variability in several brain regions that are associated with low FC variability in the typical brain. We then used the enhanced FC variability of brain regions as features for training machine learning models for ASD classification and achieved 65% accuracy in identification of ASD versus control subjects within the dataset. We also used node strength, estimated from the number of functional connections per node averaged over the whole scan, as features for ASD classification. The results reveal that the dynamic FC measures outperform or are comparable with the static FC measures in predicting ASD.
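One common way to quantify the temporal variability of functional connections is a sliding-window correlation, sketched below. The abstract does not say which estimator the authors used, so the windowing scheme, the window length and step, and the function names `sliding_window_fc` and `fc_variability` are assumptions for illustration. The input is synthetic random data, not ABIDE recordings.

```python
import numpy as np


def sliding_window_fc(ts, window, step=1):
    """Correlation matrix per window.

    ts has shape (timepoints, regions); the result has shape
    (n_windows, regions, regions).
    """
    n_time = ts.shape[0]
    starts = range(0, n_time - window + 1, step)
    return np.stack(
        [np.corrcoef(ts[s:s + window], rowvar=False) for s in starts]
    )


def fc_variability(ts, window, step=1):
    """Standard deviation of each pairwise correlation across windows."""
    return sliding_window_fc(ts, window, step).std(axis=0)


# Synthetic stand-in for one subject's scan: 200 timepoints, 4 regions.
rng = np.random.default_rng(0)
ts = rng.standard_normal((200, 4))
windows = sliding_window_fc(ts, window=30, step=5)
variability = fc_variability(ts, window=30, step=5)
```

Each off-diagonal entry of `variability` could then serve as a candidate feature for a classifier. How the study aggregated such values per brain region is not stated in the abstract.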
