Adam Liska

Pitfalls of Static Language Modelling

Feb 03, 2021

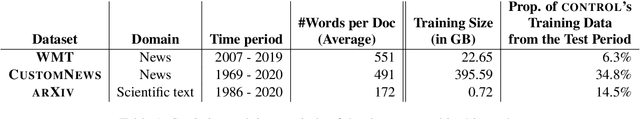

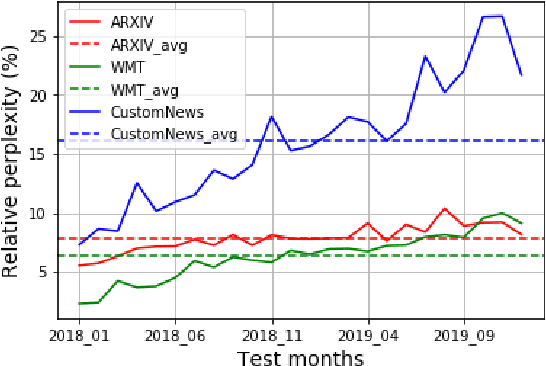

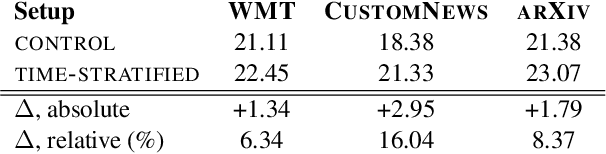

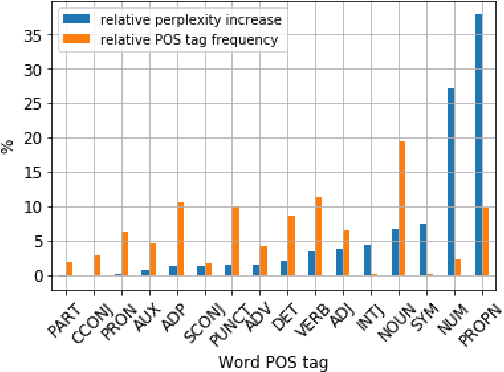

Abstract: Our world is open-ended, non-stationary and constantly evolving; thus what we talk about and how we talk about it changes over time. This inherent dynamic nature of language comes in stark contrast to the current static language modelling paradigm, which constructs training and evaluation sets from overlapping time periods. Despite recent progress, we demonstrate that state-of-the-art Transformer models perform worse in the realistic setup of predicting future utterances from beyond their training period -- a consistent pattern across three datasets from two domains. We find that, while increasing model size alone -- a key driver behind recent progress -- does not provide a solution for the temporal generalization problem, having models that continually update their knowledge with new information can indeed slow down the degradation over time. Hence, given the compilation of ever-larger language modelling training datasets, combined with the growing list of language-model-based NLP applications that require up-to-date knowledge about the world, we argue that now is the right time to rethink our static language modelling evaluation protocol, and develop adaptive language models that can remain up-to-date with respect to our ever-changing and non-stationary world.
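
The contrast the abstract draws, between evaluation sets that overlap the training period and evaluation on text from after it, can be illustrated with a minimal sketch. Everything here is an assumption for illustration: the function name `time_stratified_split`, the `(date, text)` document layout, and the toy cutoff are hypothetical and not taken from the paper.

```python
from datetime import date

def time_stratified_split(docs, cutoff):
    """Split (date, text) pairs so every evaluation document postdates training.

    Hypothetical helper: documents dated before `cutoff` go to training,
    documents dated on or after `cutoff` go to evaluation.
    """
    train = [d for d in docs if d[0] < cutoff]
    test = [d for d in docs if d[0] >= cutoff]
    return train, test

# Toy corpus with made-up dates and placeholder texts.
docs = [
    (date(2017, 5, 1), "a"),
    (date(2018, 3, 9), "b"),
    (date(2019, 1, 15), "c"),
    (date(2019, 11, 2), "d"),
]
train, test = time_stratified_split(docs, cutoff=date(2019, 1, 1))
```

A static protocol would instead shuffle all four documents before splitting, so training and evaluation would cover the same period.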

Autism Classification Using Brain Functional Connectivity Dynamics and Machine Learning

Dec 21, 2017

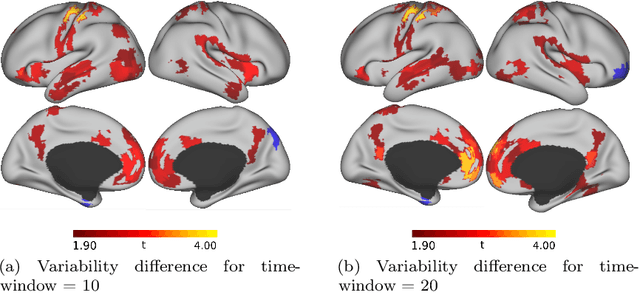

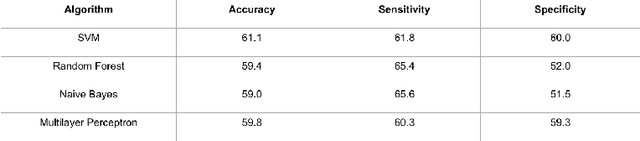

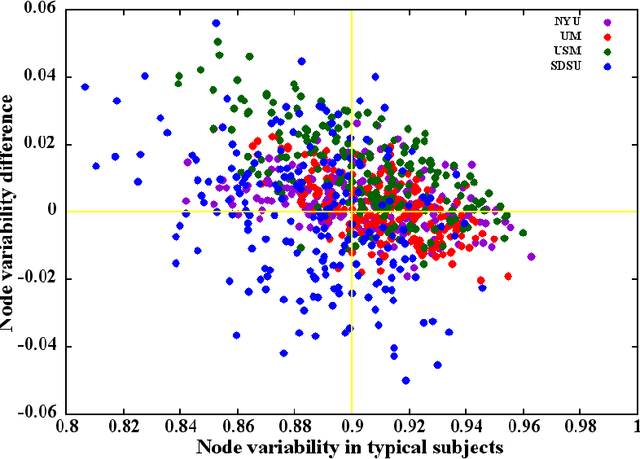

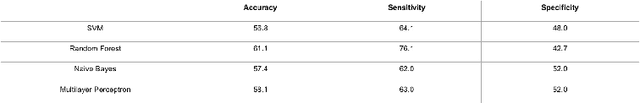

Abstract: The goal of the present study is to identify autism using machine learning techniques and resting-state brain imaging data, leveraging the temporal variability of the functional connections (FC) as the only information. We estimated and compared the FC variability across brain regions between typical, healthy subjects and the autistic population by analyzing brain imaging data from a world-wide multi-site database known as ABIDE (Autism Brain Imaging Data Exchange). Our analysis revealed that patients diagnosed with autism spectrum disorder (ASD) show increased FC variability in several brain regions that are associated with low FC variability in the typical brain. We then used the enhanced FC variability of brain regions as features for training machine learning models for ASD classification and achieved 65% accuracy in identification of ASD versus control subjects within the dataset. We also used node strength, estimated from the number of functional connections per node averaged over the whole scan, as features for ASD classification. The results reveal that the dynamic FC measures outperform or are comparable with the static FC measures in predicting ASD.
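
One common way to quantify the temporal variability of a functional connection is the standard deviation of a sliding-window correlation between two regional time series. The sketch below assumes that definition; the abstract does not say how variability was estimated, so the Pearson correlation, the window length, the step size, and the names `pearson` and `fc_variability` are all illustrative assumptions rather than the study's method.

```python
import math
import statistics

def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def fc_variability(ts_a, ts_b, window, step=1):
    """Standard deviation of the windowed correlation between two time series.

    Assumed definition of FC variability (not confirmed by the abstract).
    """
    corrs = [
        pearson(ts_a[s:s + window], ts_b[s:s + window])
        for s in range(0, len(ts_a) - window + 1, step)
    ]
    return statistics.pstdev(corrs)

# Synthetic signals: `stable` tracks `a` throughout, `flipped` reverses sign halfway.
a = [math.sin(0.3 * t) for t in range(60)]
stable = list(a)
flipped = [v if t < 30 else -v for t, v in enumerate(a)]

low = fc_variability(a, stable, window=10)    # coupling never changes
high = fc_variability(a, flipped, window=10)  # coupling switches sign mid-scan
```

Under this definition, a per-region or per-connection variability value like `high` would be the kind of feature passed to a classifier, while a static measure would use a single correlation over the whole scan.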

From Visual Attributes to Adjectives through Decompositional Distributional Semantics

Mar 24, 2015

Abstract: As automated image analysis progresses, there is increasing interest in richer linguistic annotation of pictures, with attributes of objects (e.g., furry, brown...) attracting most attention. By building on the recent "zero-shot learning" approach, and paying attention to the linguistic nature of attributes as noun modifiers, and specifically adjectives, we show that it is possible to tag images with attribute-denoting adjectives even when no training data containing the relevant annotation are available. Our approach relies on two key observations. First, objects can be seen as bundles of attributes, typically expressed as adjectival modifiers (a dog is something furry, brown, etc.), and thus a function trained to map visual representations of objects to nominal labels can implicitly learn to map attributes to adjectives. Second, objects and attributes come together in pictures (the same thing is a dog and it is brown). We can thus achieve better attribute (and object) label retrieval by treating images as "visual phrases", and decomposing their linguistic representation into an attribute-denoting adjective and an object-denoting noun. Our approach performs comparably to a method exploiting manual attribute annotation, it outperforms various competitive alternatives in both attribute and object annotation, and it automatically constructs attribute-centric representations that significantly improve performance in supervised object recognition.
