Rafael Cisneros

CNRS-AIST JRL

The Kinetics Observer: A Tightly Coupled Estimator for Legged Robots

Jun 19, 2024

Abstract: In this paper, we propose the "Kinetics Observer", a novel estimator addressing the challenge of state estimation for legged robots using proprioceptive sensors (encoders, IMU and force/torque sensors). Based on a Multiplicative Extended Kalman Filter, the Kinetics Observer allows the real-time simultaneous estimation of contact and perturbation forces, and of the robot's kinematics, which are accurate enough to perform proprioceptive odometry. Thanks to a visco-elastic model of the contacts linking their kinematics to those of the centroid of the robot, the Kinetics Observer ensures a tight coupling between the whole-body kinematics and dynamics of the robot. This coupling entails a redundancy of the measurements that enhances the robustness and the accuracy of the estimation. This estimator was tested on two humanoid robots performing long-distance walking on even terrain and non-coplanar multi-contact locomotion.

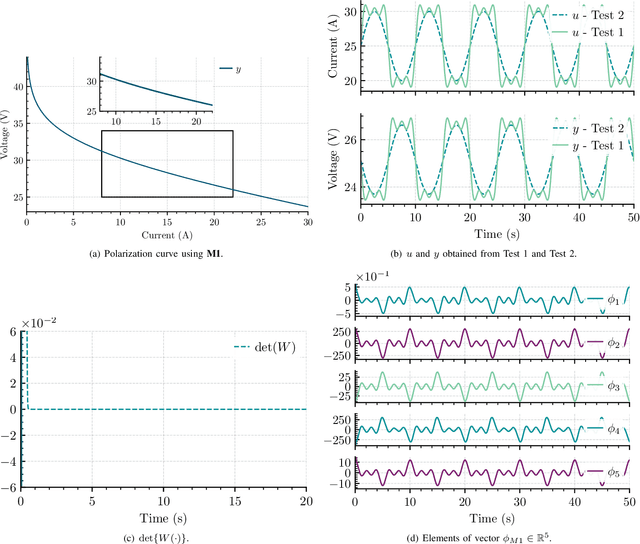

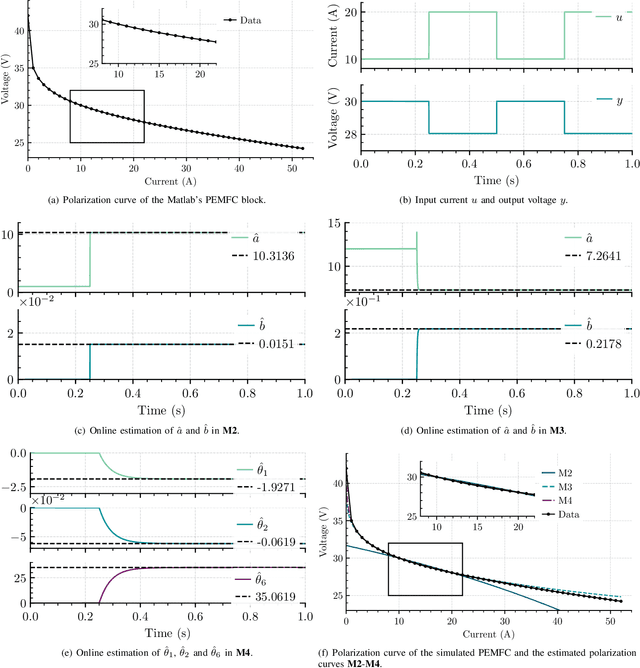

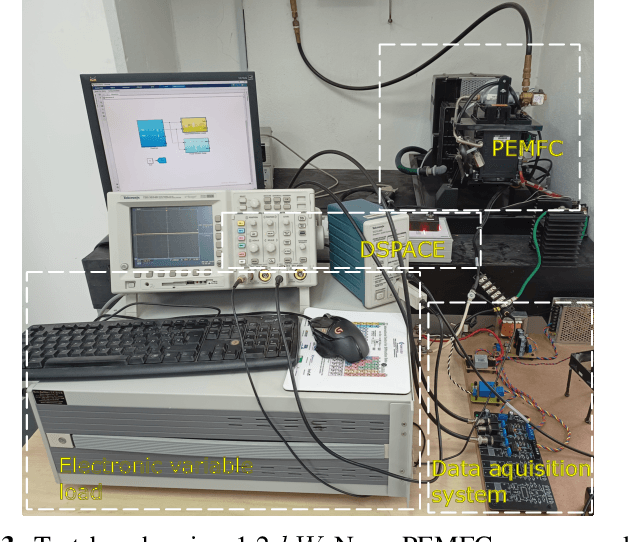

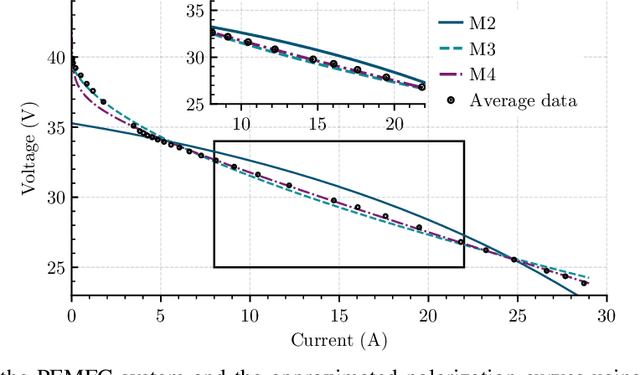

On-line Parameter Estimation of the Polarization Curve of a Fuel Cell with Guaranteed Convergence Properties: Theoretical and Experimental Results

Aug 12, 2023

Abstract: In this paper, we address the problem of on-line parameter estimation of a Proton Exchange Membrane Fuel Cell (PEMFC) polarization curve, that is, the static relation between the voltage and the current of the PEMFC. The task of designing this estimator -- even off-line -- is complicated by the fact that the uncertain parameters enter the curve in a highly nonlinear fashion, namely in the form of nonseparable nonlinearities. We consider several scenarios for the model of the polarization curve, starting from the standard full model and including several popular simplifications to this complicated mathematical function. In all cases, we derive separable regression equations -- either linearly or nonlinearly parameterized -- which are instrumental for the implementation of the parameter estimators. We concentrate our attention on on-line estimation schemes for which, under suitable excitation conditions, global parameter convergence is ensured. Due to these global convergence properties, the estimators are robust to unavoidable additive noise and structural uncertainty. Moreover, their on-line nature endows the schemes with the ability to track (slow) parameter variations that occur during the operation of the PEMFC. These two features -- unavailable in time-consuming off-line data-fitting procedures -- make the proposed estimators helpful for on-line time-saving characterization of a given PEMFC, and for the implementation of fault-detection procedures and model-based adaptive control strategies. Simulation and experimental results that validate the theoretical claims are presented.

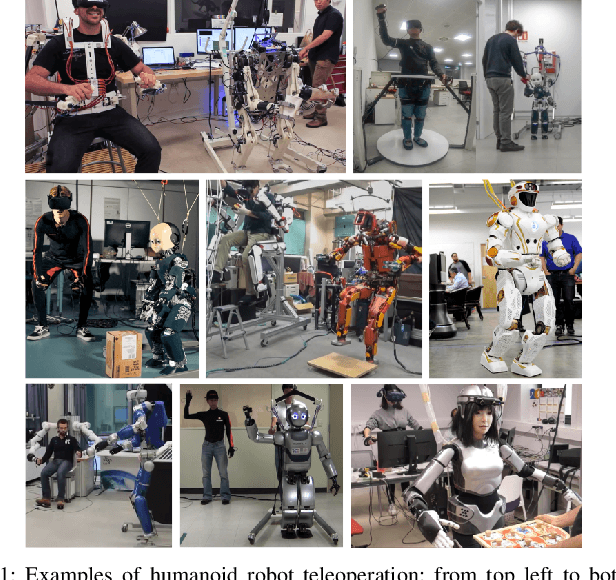

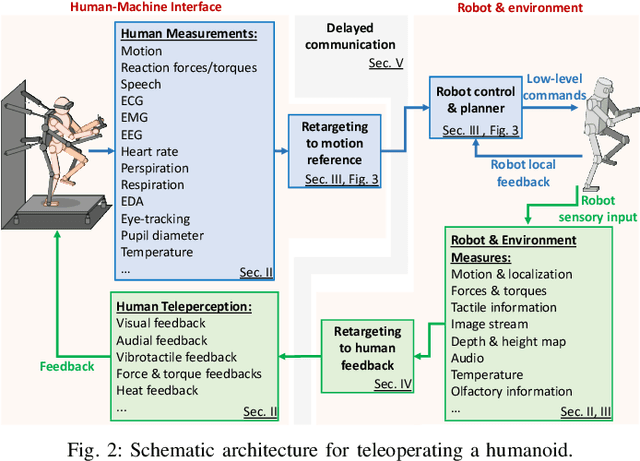

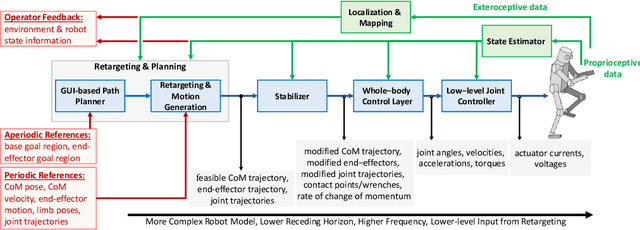

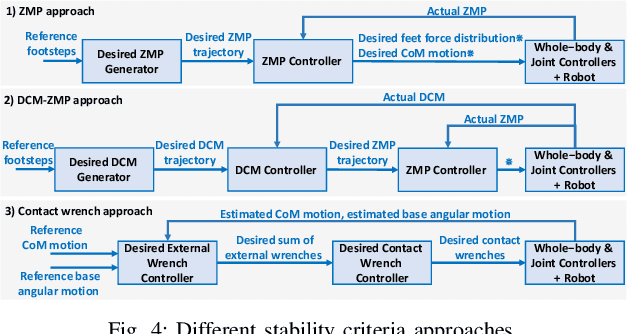

Teleoperation of Humanoid Robots: A Survey

Jan 11, 2023

Abstract: Teleoperation of humanoid robots enables the integration of the cognitive skills and domain expertise of humans with the physical capabilities of humanoid robots. The operational versatility of humanoid robots makes them the ideal platform for a wide range of applications when teleoperating in a remote environment. However, the complexity of humanoid robots imposes challenges for teleoperation, particularly in unstructured dynamic environments with limited communication. Many advancements have been achieved in the last decades in this area, but a comprehensive overview is still missing. This survey paper gives an extensive overview of humanoid robot teleoperation, presenting the general architecture of a teleoperation system and analyzing the different components. We also discuss different aspects of the topic, including technological and methodological advances, as well as potential applications. A web-based version of the paper can be found at https://humanoid-teleoperation.github.io/.

Enhanced Visual Feedback with Decoupled Viewpoint Control in Immersive Humanoid Robot Teleoperation using SLAM

Nov 03, 2022

Abstract: In immersive humanoid robot teleoperation, there are three main shortcomings that can alter the transparency of the visual feedback: (i) the lag between the motion of the operator's and robot's head due to network communication delays or slow robot joint motion, a latency that could cause a noticeable delay in the visual feedback, which jeopardizes the embodiment quality, can cause dizziness, and affects the interactivity, resulting in frequent motion pauses by the operator while the visual feedback settles; (ii) the mismatch between the camera's and the headset's fields of view (FOV), the former generally having a lower FOV; and (iii) a mismatch between the human's and the robot's neck ranges of motion, the latter also being generally lower. In order to overcome these drawbacks, we developed a decoupled viewpoint control solution for a humanoid platform which allows visual feedback with low latency and artificially increases the camera's FOV range to match that of the operator's headset. Our novel solution uses SLAM technology to enhance the visual feedback from a reconstructed mesh, complementing the areas that are not covered by the visual feedback from the robot. The visual feedback is presented as a point cloud in real-time to the operator. As a result, the operator is fed with real-time vision from the robot's head orientation by observing the pose of the point cloud. Balancing this kind of awareness and immersion is important in virtual reality based teleoperation, considering the safety and robustness of the control system. An experiment shows the effectiveness of our solution.

Learning Bipedal Walking On Planned Footsteps For Humanoid Robots

Jul 26, 2022

Abstract: Deep reinforcement learning (RL) based controllers for legged robots have demonstrated impressive robustness for walking in different environments for several robot platforms. To enable the application of RL policies for humanoid robots in real-world settings, it is crucial to build a system that can achieve robust walking in any direction, on 2D and 3D terrains, and be controllable by a user command. In this paper, we tackle this problem by learning a policy to follow a given step sequence. The policy is trained with the help of a set of procedurally generated step sequences (also called footstep plans). We show that simply feeding the upcoming 2 steps to the policy is sufficient to achieve omnidirectional walking, turning in place, standing, and climbing stairs. Our method employs curriculum learning on the complexity of terrains, and circumvents the need for reference motions or pre-trained weights. We demonstrate the application of our proposed method to learn RL policies for 2 new robot platforms - HRP5P and JVRC-1 - in the MuJoCo simulation environment. The code for training and evaluation is available online.

Lyapunov-Stable Orientation Estimator for Humanoid Robots

Oct 09, 2020

Abstract: In this paper, we present an observation scheme, with proven Lyapunov stability, for estimating a humanoid's floating base orientation. The idea is to use velocity-aided attitude estimation, which requires knowledge of the velocity of the system. This velocity can be obtained by taking into account the kinematic data provided by contact information with the environment and using the IMU and joint encoders. We demonstrate how this operation can be used in the case of a fixed or a moving contact, allowing it to be employed for locomotion. We show how to use this velocity estimation within a selected two-stage state tilt estimator: (i) the first, which has global and quick convergence, and (ii) the second, which has smooth and robust dynamics. We provide new specific proofs of almost global Lyapunov asymptotic stability and local exponential convergence for this observer. Finally, we assess its performance by employing a comparative simulation and by using it within a closed-loop stabilization scheme for HRP-5P and HRP-2KAI robots performing whole-body kinematic tasks and locomotion.
