Luigi Penco

Stability-Aware Retargeting for Humanoid Multi-Contact Teleoperation

Oct 05, 2025

Abstract:Teleoperation is a powerful method to generate reference motions and enable humanoid robots to perform a broad range of tasks. However, teleoperation becomes challenging when using hand contacts and non-coplanar surfaces, often leading to motor torque saturation or loss of stability through slipping. We propose a centroidal stability-based retargeting method that dynamically adjusts contact points and posture during teleoperation to enhance stability in these difficult scenarios. Central to our approach is an efficient analytical calculation of the stability margin gradient. This gradient is used to identify scenarios for which stability is highly sensitive to teleoperation setpoints and inform the local adjustment of these setpoints. We validate the framework in simulation and hardware by teleoperating manipulation tasks on a humanoid, demonstrating increased stability margins. We also demonstrate empirically that higher stability margins correlate with improved impulse resilience and joint torque margin.

A Behavior Architecture for Fast Humanoid Robot Door Traversals

Nov 05, 2024

Abstract:Towards the role of humanoid robots as squad mates in urban operations and other domains, we identified doors as a major area lacking capability development. In this paper, we focus on the ability of humanoid robots to navigate and deal with doors. Human-sized doors are ubiquitous in many environment domains and the humanoid form factor is uniquely suited to operate and traverse them. We present an architecture which incorporates GPU-accelerated perception and a tree-based interactive behavior coordination system with a whole-body motion and walking controller. Our system is capable of performing door traversals on a variety of door types. It supports rapid authoring of behaviors for unseen door types and techniques to achieve re-usability of those authored behaviors. The behaviors are modelled using trees and feature logical reactivity and action sequences that can be executed with layered concurrency to increase speed. Primitive actions are built on top of our existing whole-body controller, which supports manipulation while walking. We include a perception system using both neural networks and classical computer vision for door mechanism detection outside of the lab environment. We present operator-robot interdependence analysis charts to explore how human cognition is combined with artificial intelligence to produce complex robot behavior. Finally, we present and discuss real robot performances of fast door traversals on our Nadia humanoid robot. Videos online at https://www.youtube.com/playlist?list=PLXuyT8w3JVgMPaB5nWNRNHtqzRK8i68dy.

Mixed Reality Teleoperation Assistance for Direct Control of Humanoids

Nov 01, 2024

Abstract:Teleoperation plays a crucial role in enabling robot operations in challenging environments, yet existing limitations in effectiveness and accuracy necessitate the development of innovative strategies for improving teleoperated tasks. This article introduces a novel approach that utilizes mixed reality and assistive autonomy to enhance the efficiency and precision of humanoid robot teleoperation. By leveraging Probabilistic Movement Primitives, object detection, and Affordance Templates, the assistance combines user motion with autonomous capabilities, achieving task efficiency while maintaining human-like robot motion. Experiments and feasibility studies on the Nadia robot confirm the effectiveness of the proposed framework.

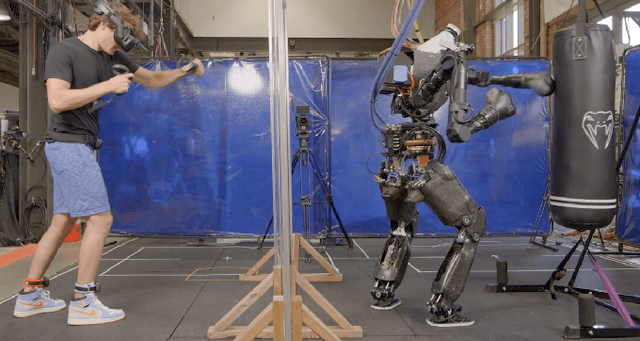

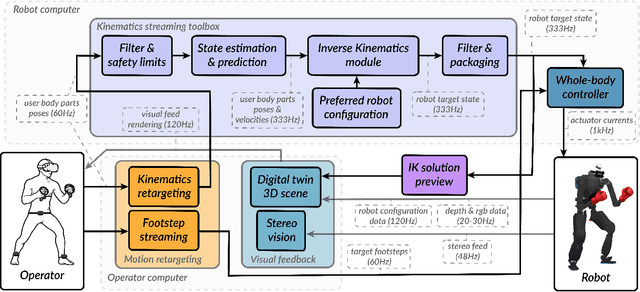

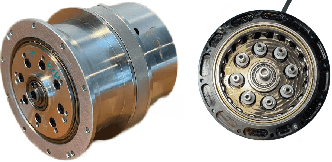

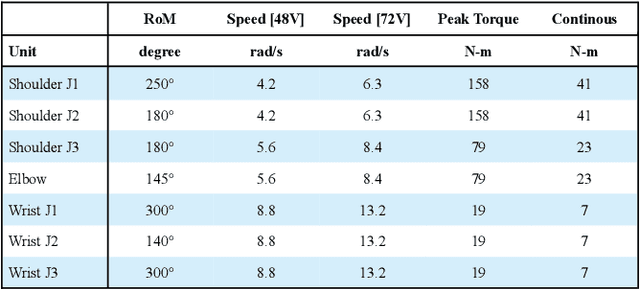

High-Speed and Impact Resilient Teleoperation of Humanoid Robots

Sep 06, 2024

Abstract:Teleoperation of humanoid robots has long been a challenging domain, necessitating advances in both hardware and software to achieve seamless and intuitive control. This paper presents an integrated solution based on several elements: calibration-free motion capture and retargeting, a low-latency, fast whole-body kinematics streaming toolbox, and high-bandwidth cycloidal actuators. Our motion retargeting approach stands out for its simplicity, requiring only 7 IMUs to generate full-body references for the robot. The kinematics streaming toolbox ensures real-time, responsive control of the robot's movements, significantly reducing latency and enhancing operational efficiency. Additionally, the use of cycloidal actuators makes it possible to withstand high speeds and impacts with the environment. Together, these approaches contribute to a teleoperation framework that offers unprecedented performance. Experimental results on the humanoid robot Nadia demonstrate the effectiveness of the integrated system.

Authoring and Operating Humanoid Behaviors On the Fly using Coactive Design Principles

Jul 25, 2023

Abstract:Humanoid robots have the potential to perform useful tasks in a world built for humans. However, communicating intention and teaming with a humanoid robot is a multi-faceted and complex problem. In this paper, we tackle the problems associated with quickly and interactively authoring new robot behavior that works on real hardware. We bring the powerful concepts of Affordance Templates and Coactive Design methodology to this problem to attempt to solve and explain it. In our approach we use interactive stance and hand pose goals along with other types of actions to author humanoid robot behavior on the fly. We then describe how our operator interface works to author behaviors on the fly and provide interdependence analysis charts for task approach and door opening. We present timings from real robot performances for traversing a push door and doing a pick and place task on our Nadia humanoid robot.

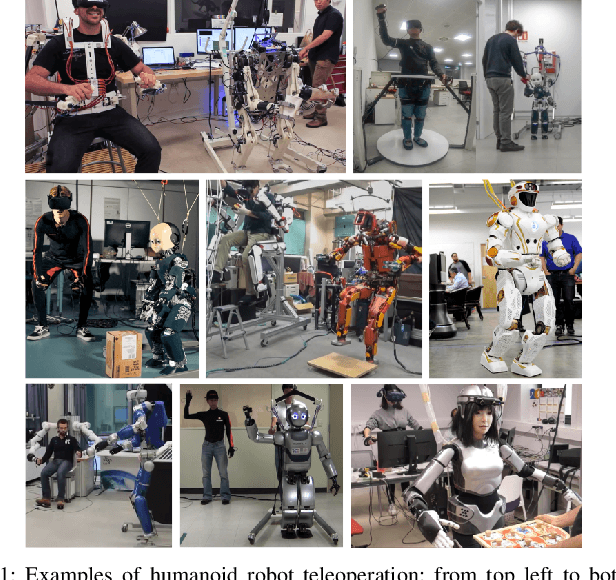

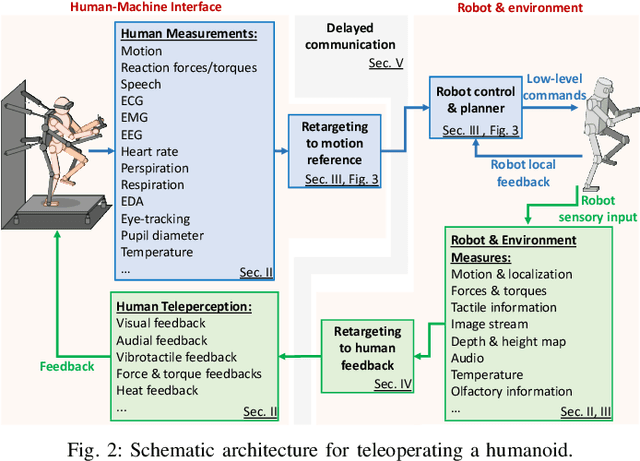

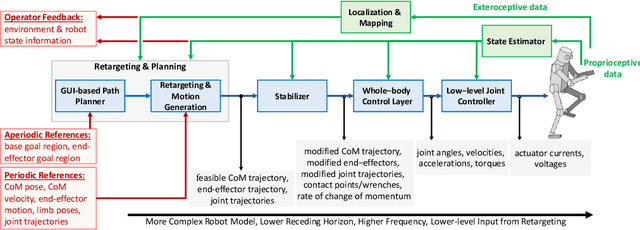

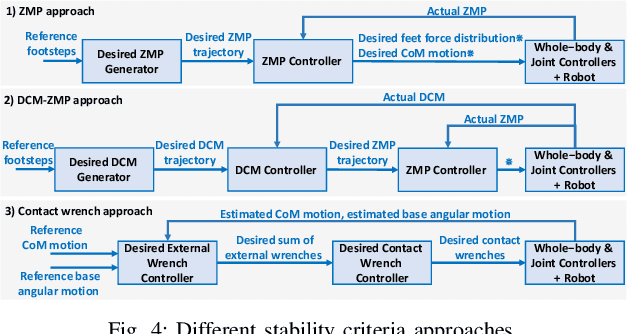

Teleoperation of Humanoid Robots: A Survey

Jan 11, 2023

Abstract:Teleoperation of humanoid robots enables the integration of the cognitive skills and domain expertise of humans with the physical capabilities of humanoid robots. The operational versatility of humanoid robots makes them the ideal platform for a wide range of applications when teleoperating in a remote environment. However, the complexity of humanoid robots imposes challenges for teleoperation, particularly in unstructured dynamic environments with limited communication. Many advancements have been achieved in the last decades in this area, but a comprehensive overview is still missing. This survey paper gives an extensive overview of humanoid robot teleoperation, presenting the general architecture of a teleoperation system and analyzing the different components. We also discuss different aspects of the topic, including technological and methodological advances, as well as potential applications. A web-based version of the paper can be found at https://humanoid-teleoperation.github.io/.

Prescient teleoperation of humanoid robots

Jul 12, 2021

Abstract:Humanoid robots could be versatile and intuitive human avatars that operate remotely in inaccessible places: the robot could reproduce in the remote location the movements of an operator equipped with a wearable motion capture device while sending visual feedback to the operator. While substantial progress has been made on transferring ("retargeting") human motions to humanoid robots, a major problem preventing the deployment of such systems in real applications is the presence of communication delays between the human input and the feedback from the robot: even a few hundred milliseconds of delay can irreversibly disturb the operator, let alone a few seconds. To overcome these delays, we introduce a system in which a humanoid robot executes commands before it actually receives them, so that the visual feedback appears to be synchronized to the operator, whereas the robot executed the commands in the past. To do so, the robot continuously predicts future commands by querying a machine learning model that is trained on past trajectories and conditioned on the last received commands. In our experiments, an operator was able to successfully control a humanoid robot (32 degrees of freedom) with stochastic delays up to 2 seconds in several whole-body manipulation tasks, including reaching different targets, picking up, and placing a box at distinct locations.
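
The delay-compensation idea in this abstract (act on a predicted future command instead of the last received one) can be illustrated with a toy sketch. The abstract describes a machine learning model trained on past trajectories; the code below swaps in a plain constant-velocity extrapolation purely for illustration, and the names `predict_command`, `received`, and `delay` are hypothetical, not taken from the paper.

```python
from collections import deque


def predict_command(history, delay):
    """Extrapolate the most recent command `delay` seconds ahead.

    history: sequence of (timestamp, value) pairs, oldest first.
    Assumption: constant-velocity extrapolation stands in for the
    learned predictor described in the abstract.
    """
    if not history:
        raise ValueError("need at least one received command")
    if len(history) < 2:
        # Only one sample: nothing to extrapolate from, hold the value.
        return history[-1][1]
    (t0, x0), (t1, x1) = history[-2], history[-1]
    if t1 == t0:
        # Identical timestamps: velocity is undefined, hold the value.
        return x1
    velocity = (x1 - x0) / (t1 - t0)
    return x1 + velocity * delay


# Keep only the two most recent commands (hypothetical 1-D setpoint).
received = deque(maxlen=2)
received.append((0.0, 0.10))
received.append((0.1, 0.12))

# Command the robot would execute now to compensate a 0.5 s delay.
predicted = predict_command(received, delay=0.5)
```

A learned model conditioned on the last received commands would replace `predict_command`; the surrounding loop (buffer recent commands, query a prediction for the current delay, execute it) would stay the same under this sketch's assumptions.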
