Jean-Baptiste Mouret

LARSEN

Extremum Flow Matching for Offline Goal Conditioned Reinforcement Learning

May 26, 2025

Abstract: Imitation learning is a promising approach for enabling generalist capabilities in humanoid robots, but its scaling is fundamentally constrained by the scarcity of high-quality expert demonstrations. This limitation can be mitigated by leveraging suboptimal, open-ended play data, which is often easier to collect and offers greater diversity. This work builds upon recent advances in generative modeling, specifically Flow Matching, an alternative to Diffusion models. We introduce a method for estimating the extremum of the learned distribution by leveraging the unique properties of Flow Matching, namely, deterministic transport and support for arbitrary source distributions. We apply this method to develop several goal-conditioned imitation and reinforcement learning algorithms based on Flow Matching, where policies are conditioned on both current and goal observations. We explore and compare different architectural configurations by combining core components, such as critic, planner, actor, or world model, in various ways. We evaluated our agents on the OGBench benchmark and analyzed how different demonstration behaviors during data collection affect performance in a 2D non-prehensile pushing task. Furthermore, we validated our approach on real hardware by deploying it on the Talos humanoid robot to perform complex manipulation tasks based on high-dimensional image observations, featuring a sequence of pick-and-place and articulated object manipulation in a realistic kitchen environment. Experimental videos and code are available at: https://hucebot.github.io/extremum_flow_matching_website/
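As a rough illustration of the "deterministic transport" property mentioned in this abstract, the sketch below integrates the standard straight-line conditional Flow Matching velocity toward a single target point. It is not the paper's method: in practice the velocity would come from a trained network, and the names `conditional_velocity` and `transport` are hypothetical, chosen only for this example.

```python
def conditional_velocity(x, t, x1):
    """Velocity of the straight-line conditional path toward x1 (valid for t < 1).

    Stand-in for a learned velocity network; assumed closed form for one target.
    """
    return [(b - a) / (1.0 - t) for a, b in zip(x, x1)]


def transport(x0, x1, steps=10):
    """Move a source sample x0 to the target x1 with explicit Euler integration."""
    x = list(x0)
    h = 1.0 / steps
    for k in range(steps):
        t = k * h
        v = conditional_velocity(x, t, x1)
        x = [a + h * vi for a, vi in zip(x, v)]
    return x


# The same source sample always ends at the same point (no noise is injected),
# and the source can be drawn from any distribution.
end_a = transport([0.0, 0.0], [1.0, -2.0])
end_b = transport([5.0, 5.0], [1.0, -2.0])
```

Because the integration is an ordinary differential equation rather than a stochastic process, repeating the call with the same source sample gives the same result, which is the property the abstract relies on.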

From Vocal Instructions to Household Tasks: The Inria Tiago++ in the euROBIN Service Robots Coopetition

Dec 20, 2024

Abstract: This paper describes the Inria team's integrated robotics system used in the 1st euROBIN coopetition, during which service robots performed voice-activated household tasks in a kitchen setting. The team developed a modified Tiago++ platform that leverages a whole-body control stack for autonomous and teleoperated modes, and an LLM-based pipeline for instruction understanding and task planning. The key contributions (open-sourced) are the integration of these components and the design of custom teleoperation devices, addressing practical challenges in the deployment of service robots.

Flying in air ducts

Oct 10, 2024

Abstract: Air ducts are integral to modern buildings but are challenging to access for inspection. Small quadrotor drones offer a potential solution, as they can navigate both horizontal and vertical sections and smoothly fly over debris. However, hovering inside air ducts is problematic due to the airflow generated by the rotors, which recirculates inside the duct and destabilizes the drone, whereas hovering is a key feature for many inspection missions. In this article, we map the aerodynamic forces that affect a hovering drone in a duct using a robotic setup and a force/torque sensor. Based on the collected aerodynamic data, we identify a recommended position for stable flight, which corresponds to the bottom third for a circular duct. We then develop a neural network-based positioning system that leverages low-cost time-of-flight sensors. By combining these aerodynamic insights and the data-driven positioning system, we show that a small quadrotor drone (here, 180 mm) can hover and fly inside small air ducts, starting with a diameter of 350 mm. These results open a new and promising application domain for drones.

Words2Contact: Identifying Support Contacts from Verbal Instructions Using Foundation Models

Jul 19, 2024

Abstract: This paper presents Words2Contact, a language-guided multi-contact placement pipeline leveraging large language models and vision language models. Our method is a key component for language-assisted teleoperation and human-robot cooperation, where human operators can use natural language to instruct the robots where to place their support contacts before whole-body reaching or manipulation. Words2Contact transforms the verbal instructions of a human operator into contact placement predictions; it also handles iterative corrections until the human is satisfied with the contact location identified in the robot's field of view. We benchmark state-of-the-art LLMs and VLMs for size and performance in contact prediction. We demonstrate the effectiveness of the iterative correction process, showing that even naive users quickly learn how to instruct the system to obtain accurate locations. Finally, we validate Words2Contact in real-world experiments with the Talos humanoid robot, instructed by human operators to place support contacts on different locations and surfaces to avoid falling when reaching for distant objects.

Flow Matching Imitation Learning for Multi-Support Manipulation

Jul 17, 2024

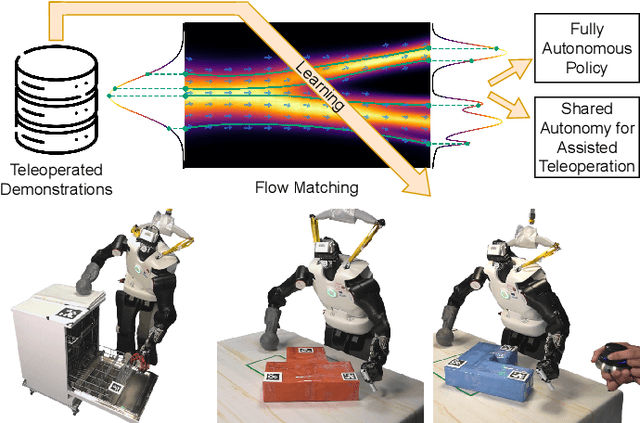

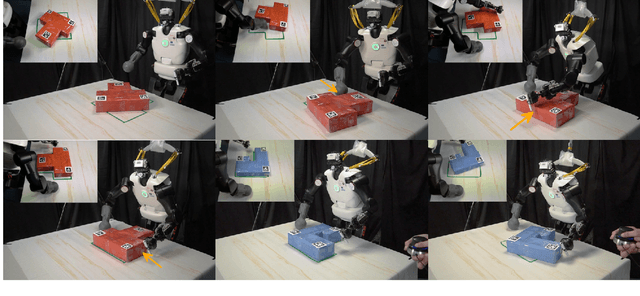

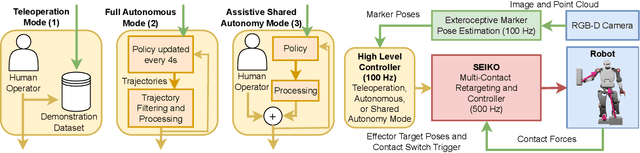

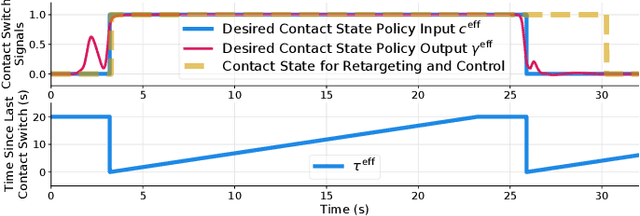

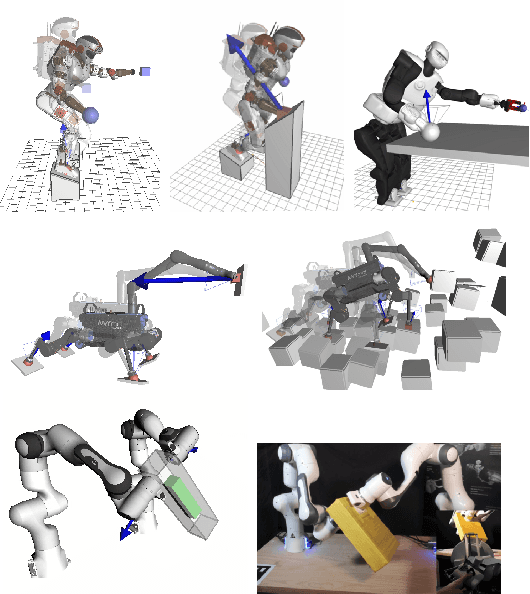

Abstract: Humanoid robots could benefit from using their upper bodies for support contacts, enhancing their workspace, stability, and ability to perform contact-rich and pushing tasks. In this paper, we propose a unified approach that combines an optimization-based multi-contact whole-body controller with Flow Matching, a recently introduced method capable of generating multi-modal trajectory distributions for imitation learning. In simulation, we show that Flow Matching is more appropriate for robotics than Diffusion and traditional behavior cloning. On a real full-size humanoid robot (Talos), we demonstrate that our approach can learn a whole-body non-prehensile box-pushing task and that the robot can close dishwasher drawers by adding contacts with its free hand when needed for balance. We also introduce a shared autonomy mode for assisted teleoperation, providing automatic contact placement for tasks not covered in the demonstrations. Full experimental videos are available at: https://hucebot.github.io/flow_multisupport_website/

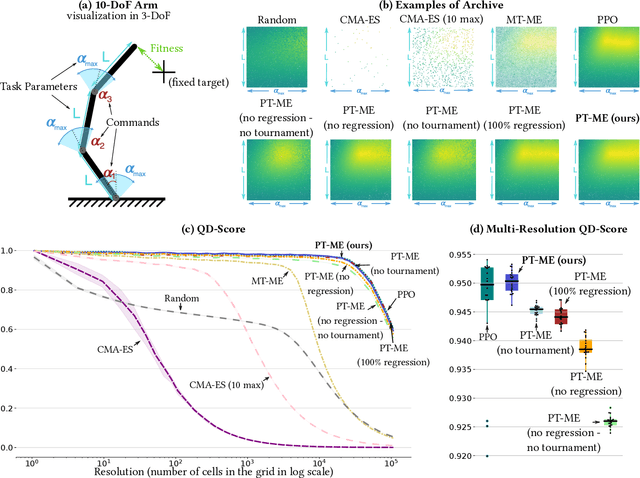

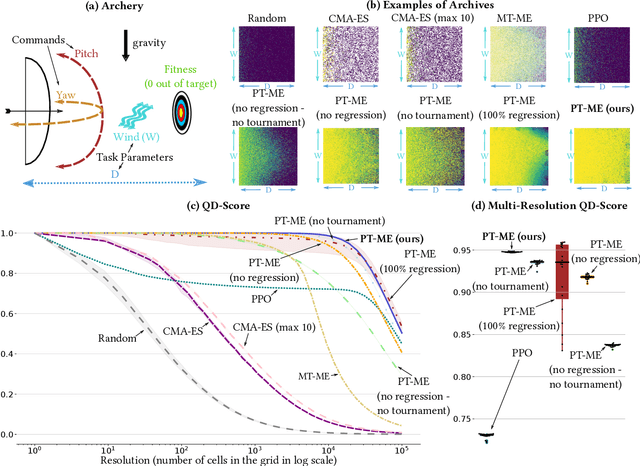

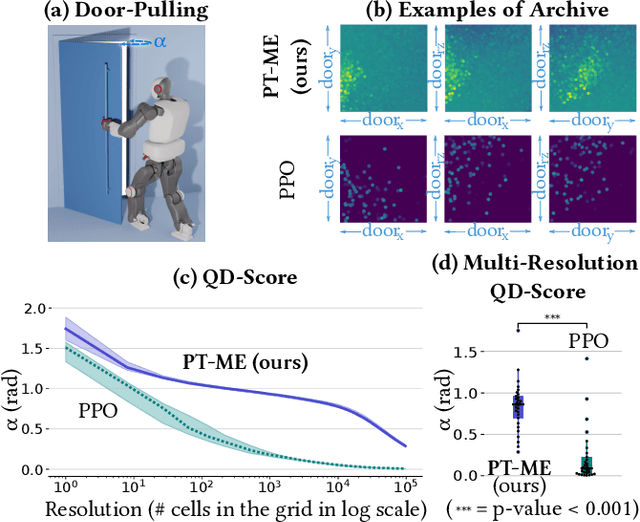

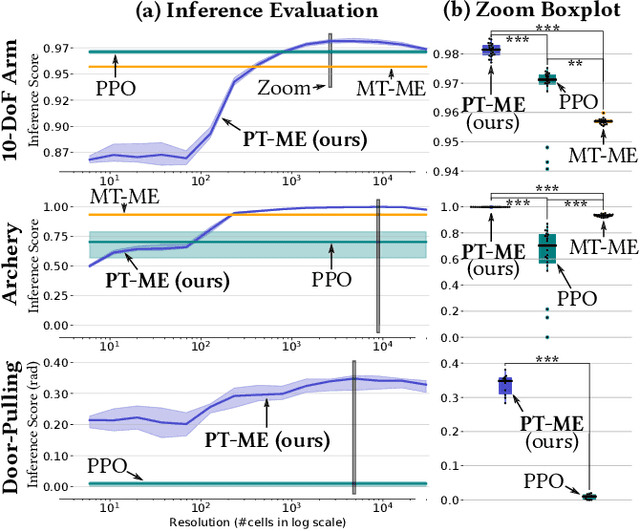

Parametric-Task MAP-Elites

Feb 02, 2024

Abstract: Optimizing a set of functions simultaneously by leveraging their similarity is called multi-task optimization. Current black-box multi-task algorithms only solve a finite set of tasks, even when the tasks originate from a continuous space. In this paper, we introduce Parametric-task MAP-Elites (PT-ME), a novel black-box algorithm to solve continuous multi-task optimization problems. This algorithm (1) solves a new task at each iteration, effectively covering the continuous space, and (2) exploits a new variation operator based on local linear regression. The resulting dataset of solutions makes it possible to create a function that maps any task parameter to its optimal solution. We show on two parametric-task toy problems and a more realistic and challenging robotic problem in simulation that PT-ME outperforms all baselines, including the deep reinforcement learning algorithm PPO.
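To make the idea of "a function that maps any task parameter to its optimal solution" more concrete, here is a minimal sketch that fits a local linear regression over the nearest stored solutions. It is an assumption-laden illustration, not the PT-ME operator itself: tasks and solutions are scalars, and `local_linear_predict`, `archive`, and `k` are hypothetical names.

```python
def local_linear_predict(archive, theta, k=5):
    """Predict a solution for task parameter `theta` from (task, solution) pairs.

    Fits an ordinary least-squares line through the k archive entries whose
    task parameter is closest to `theta`, then evaluates it at `theta`.
    """
    nearest = sorted(archive, key=lambda pair: abs(pair[0] - theta))[:k]
    n = len(nearest)
    mean_t = sum(t for t, _ in nearest) / n
    mean_x = sum(x for _, x in nearest) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in nearest)
    if var_t == 0.0:
        # All neighbours share the same task parameter: fall back to their mean.
        return mean_x
    slope = sum((t - mean_t) * (x - mean_x) for t, x in nearest) / var_t
    return mean_x + slope * (theta - mean_t)


# Toy archive where the best solution happens to be x = 2 * theta + 1.
archive = [(i / 10, 2 * (i / 10) + 1) for i in range(11)]
prediction = local_linear_predict(archive, 0.55)
```

With a denser archive, the local fit tracks nonlinear task-to-solution relationships more closely, which is one plausible reason to solve a new task at each iteration.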

Multi-Contact Whole Body Force Control for Position-Controlled Robots

Jan 16, 2024

Abstract: Many humanoid and multi-legged robots are position-controlled rather than torque-controlled, which prevents direct control of contact forces and hampers their ability to create multiple contacts to enhance their balance, such as placing a hand on a wall or a handrail. This paper introduces the SEIKO (Sequential Equilibrium Inverse Kinematic Optimization) pipeline, drawing inspiration from flexibility models used in series elastic actuators to indirectly control contact forces on traditional position-controlled robots. SEIKO formulates whole-body retargeting from Cartesian commands and admittance control using two quadratic programs solved in real time. We validated our pipeline with experiments on the real, full-scale humanoid robot Talos in various multi-contact scenarios, including pushing tasks, far-reaching tasks, stair climbing, and stepping on sloped surfaces. This work opens the possibility of stable, contact-rich behaviors while getting around many of the challenges of torque-controlled robots. Code and videos are available at https://hucebot.github.io/seiko_controller_website/

Feasibility Retargeting for Multi-contact Teleoperation and Physical Interaction

Aug 07, 2023

Abstract: This short paper outlines two recent works on multi-contact teleoperation and the development of the SEIKO (Sequential Equilibrium Inverse Kinematic Optimization) framework. SEIKO adapts commands from the operator in real time and ensures that the reference configuration sent to the underlying controller is feasible. Additionally, an admittance scheme is used to implement physical interaction, which is then combined with the operator's command and retargeted. SEIKO has been applied in simulations on various robots, including humanoid and quadruped robots designed for loco-manipulation. Furthermore, SEIKO has been tested on real hardware for bimanual heavy object carrying tasks.

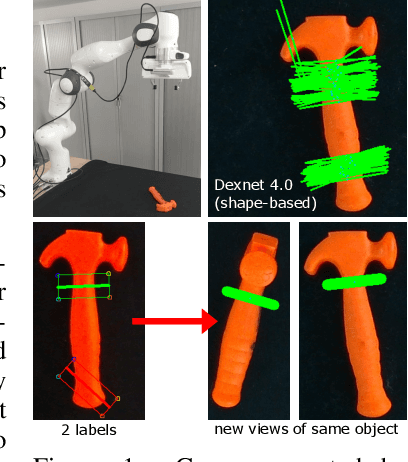

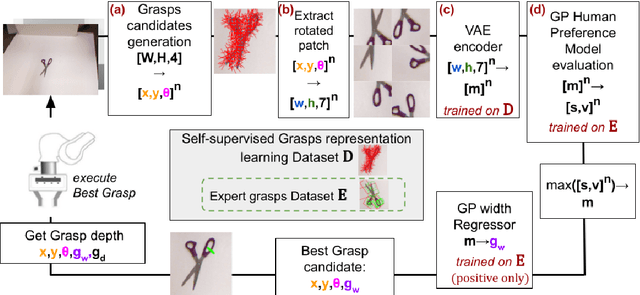

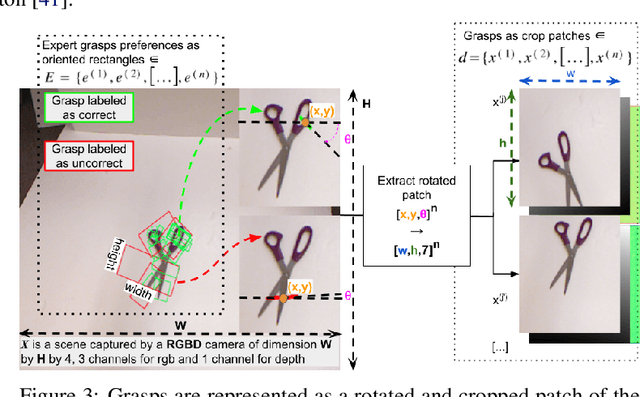

Data-efficient learning of object-centric grasp preferences

Mar 01, 2022

Abstract: Grasping has made impressive progress during the last few years thanks to deep learning. However, there are many objects for which it is not possible to choose a grasp by only looking at an RGB-D image, whether for physical reasons (e.g., a hammer with uneven mass distribution) or task constraints (e.g., food that should not be spoiled). In such situations, the preferences of experts need to be taken into account. In this paper, we introduce a data-efficient grasping pipeline (Latent Space GP Selector -- LGPS) that learns grasp preferences with only a few labels per object (typically 1 to 4) and generalizes to new views of this object. Our pipeline is based on learning a latent space of grasps with a dataset generated with any state-of-the-art grasp generator (e.g., Dex-Net). This latent space is then used as a low-dimensional input for a Gaussian process classifier that selects the preferred grasp among those proposed by the generator. The results show that our method outperforms both GR-ConvNet and GG-CNN (two state-of-the-art methods that are also based on labeled grasps) on the Cornell dataset, especially when only a few labels are used: only 80 labels are enough to correctly choose 80% of the grasps (885 scenes, 244 objects). Results are similar on our dataset (91 scenes, 28 objects).

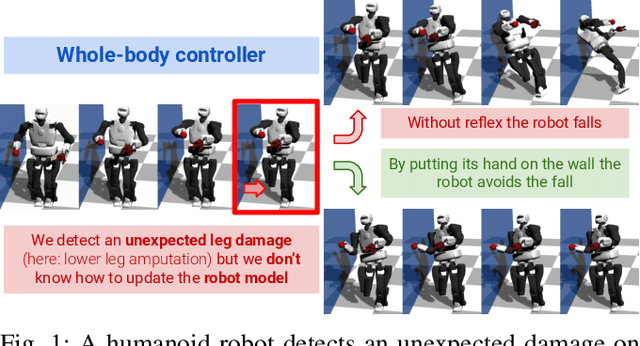

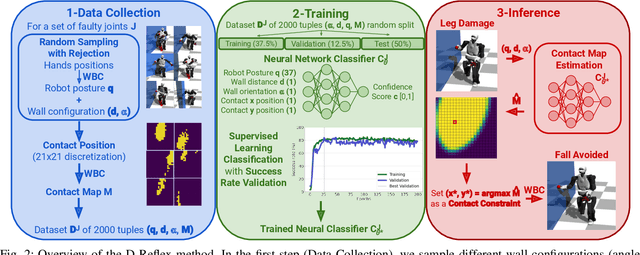

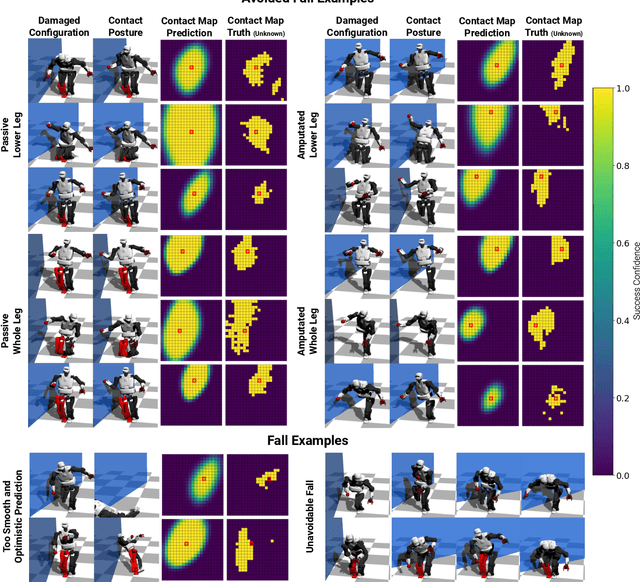

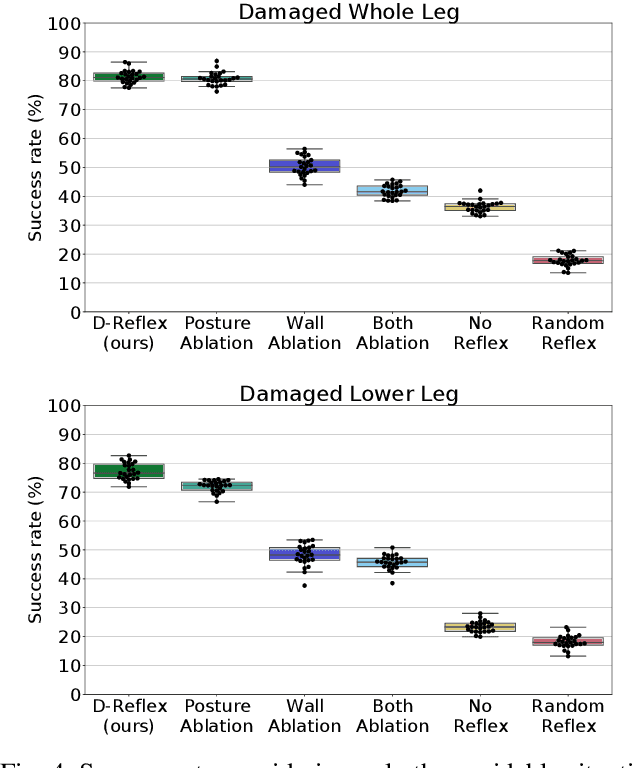

First do not fall: learning to exploit the environment with a damaged humanoid robot

Mar 01, 2022

Abstract: Humanoid robots could replace humans in hazardous situations, but most such situations are equally dangerous for them, which means that they have a high chance of being damaged and falling. We hypothesize that humanoid robots would be mostly used in buildings, which makes them likely to be close to a wall. To avoid a fall, they can therefore lean on the closest wall, as a human would, provided that they find within a few milliseconds where to put their hand(s). This article introduces a method, called D-Reflex, that learns a neural network that chooses this contact position given the wall orientation, the wall distance, and the posture of the robot. This contact position is then used by a whole-body controller to reach a stable posture. We show that D-Reflex allows a simulated TALOS robot (1.75 m, 100 kg, 30 degrees of freedom) to avoid more than 75% of the avoidable falls.
