Bhavyansh Mishra

A Behavior Architecture for Fast Humanoid Robot Door Traversals

Nov 05, 2024

Abstract: Working toward humanoid robots that can serve as squad mates in urban operations and other domains, we identified doors as a major area where capability development is lacking. In this paper, we focus on the ability of humanoid robots to navigate through and operate doors. Human-sized doors are ubiquitous in many environments, and the humanoid form factor is uniquely suited to operating and traversing them. We present an architecture that combines GPU-accelerated perception and a tree-based interactive behavior coordination system with a whole-body motion and walking controller. Our system is capable of performing door traversals on a variety of door types. It supports rapid authoring of behaviors for unseen door types, along with techniques for reusing those authored behaviors. The behaviors are modeled as trees and feature logical reactivity and action sequences that can be executed with layered concurrency to increase speed. Primitive actions are built on top of our existing whole-body controller, which supports manipulation while walking. We include a perception system that uses both neural networks and classical computer vision to detect door mechanisms outside of the lab environment. We present operator-robot interdependence analysis charts to explore how human cognition is combined with artificial intelligence to produce complex robot behavior. Finally, we present and discuss fast door traversals performed on our real Nadia humanoid robot. Videos are available online at https://www.youtube.com/playlist?list=PLXuyT8w3JVgMPaB5nWNRNHtqzRK8i68dy.
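
The tree-based behaviors with reactivity and layered concurrency described above can be illustrated with a minimal, generic behavior tree. This is not the authors' behavior system: the node types (`Sequence`, `Parallel`), the hypothetical door actions (`door_detected`, `walk_to_door`, `pre_grasp_arm`, `grasp_handle`), and their made-up tick durations are assumptions. The sketch shows how re-ticking from the root gives reactivity and how a parallel node lets walking and arm pre-positioning overlap.

```python
from enum import Enum
from typing import Callable, List


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"


class Node:
    def tick(self) -> Status:
        raise NotImplementedError


class Action(Node):
    """Leaf node: wraps a callable that reports its own status."""
    def __init__(self, name: str, fn: Callable[[], Status]) -> None:
        self.name = name
        self.fn = fn

    def tick(self) -> Status:
        return self.fn()


class Sequence(Node):
    """Ticks children in order and returns the first status that is not SUCCESS.
    Every tick restarts from the first child, so a condition that becomes
    false aborts the sequence immediately (reactivity)."""
    def __init__(self, children: List[Node]) -> None:
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status != Status.SUCCESS:
                return status
        return Status.SUCCESS


class Parallel(Node):
    """Ticks every child on every tick: FAILURE if any child fails,
    SUCCESS once all children succeed, RUNNING otherwise."""
    def __init__(self, children: List[Node]) -> None:
        self.children = children

    def tick(self) -> Status:
        statuses = [child.tick() for child in self.children]
        if Status.FAILURE in statuses:
            return Status.FAILURE
        if all(s == Status.SUCCESS for s in statuses):
            return Status.SUCCESS
        return Status.RUNNING


def timed_action(ticks_needed: int) -> Callable[[], Status]:
    """Hypothetical action that stays RUNNING until ticked ticks_needed times."""
    count = 0

    def fn() -> Status:
        nonlocal count
        count += 1
        return Status.SUCCESS if count >= ticks_needed else Status.RUNNING
    return fn


def build_door_tree(door_visible: bool = True, concurrent: bool = True) -> Node:
    """Hypothetical door-approach tree; all durations are made up."""
    approach: List[Node] = [Action("walk_to_door", timed_action(3)),
                            Action("pre_grasp_arm", timed_action(2))]
    return Sequence([
        Action("door_detected",
               lambda: Status.SUCCESS if door_visible else Status.FAILURE),
        Parallel(approach) if concurrent else Sequence(approach),
        Action("grasp_handle", timed_action(1)),
    ])


def run(tree: Node, max_ticks: int = 20) -> List[Status]:
    """Ticks the root until it stops RUNNING; returns the status history."""
    history: List[Status] = []
    for _ in range(max_ticks):
        status = tree.tick()
        history.append(status)
        if status != Status.RUNNING:
            break
    return history


print(len(run(build_door_tree())), len(run(build_door_tree(concurrent=False))))  # prints: 3 4
```

With these made-up durations the concurrent tree finishes in 3 ticks and the serial variant in 4, and if the detection condition fails the whole tree fails on its first tick.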

A Fast, Autonomous, Bipedal Walking Behavior over Rapid Regions

Jul 17, 2022

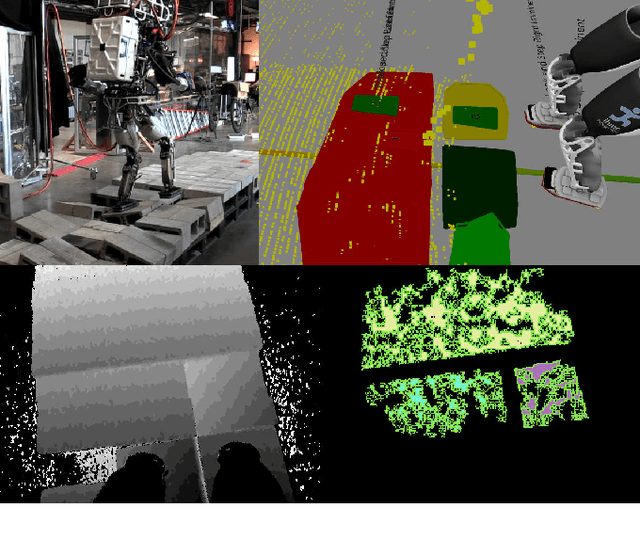

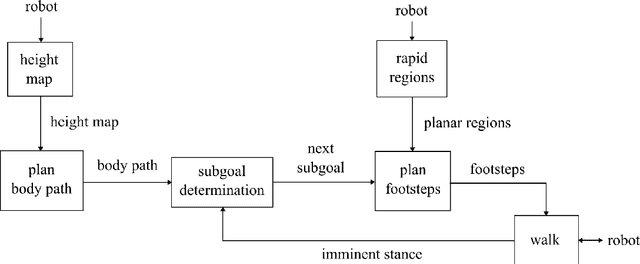

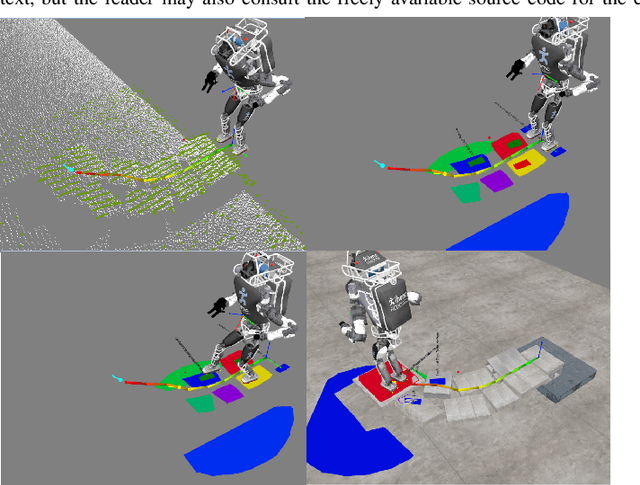

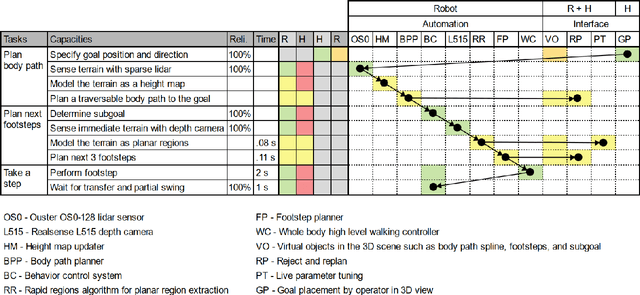

Abstract: In trying to build humanoid robots that perform useful tasks in a world built for humans, we address the problem of autonomous locomotion. Humanoid robot planning and control algorithms for walking over rough terrain are becoming increasingly capable. At the same time, commercially available depth cameras have become more accurate, and GPU computing has become a primary tool in AI research. In this paper, we present a newly constructed behavior control system for achieving fast, autonomous bipedal walking without pauses or deliberation. We achieve this using a recently published rapid planar regions perception algorithm, a height-map-based body path planner, an A* footstep planner, and a momentum-based walking controller. We combine these elements into a behavior control system supported by modern software development practices and simulation tools.
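
One of the components named above, the height-map-based body path planner, can be illustrated with a plain A* search. This sketch is an assumption, not the published planner: it treats the height map as an 8-connected grid, uses planar step length as the edge cost, and rejects any move whose height change exceeds a hypothetical `max_step_height` threshold (0.15 in height-map units). The straight-line heuristic never overestimates that cost, so the returned path is a shortest feasible one.

```python
import heapq
import math
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col) index into the height map

# 8-connected neighbourhood
MOVES = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def plan_body_path(height: List[List[float]], start: Cell, goal: Cell,
                   max_step_height: float = 0.15) -> Optional[List[Cell]]:
    """A* over a height-map grid. Moves whose height change exceeds
    max_step_height are treated as untraversable. Returns the cell path
    from start to goal, or None if the goal is unreachable."""
    rows, cols = len(height), len(height[0])

    def heuristic(cell: Cell) -> float:
        # Straight-line distance never overestimates the remaining path cost.
        return math.hypot(cell[0] - goal[0], cell[1] - goal[1])

    best_cost: Dict[Cell, float] = {start: 0.0}
    parent: Dict[Cell, Cell] = {}
    frontier = [(heuristic(start), 0.0, start)]
    closed = set()
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell in closed:
            continue  # stale heap entry
        if cell == goal:
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return path[::-1]
        closed.add(cell)
        r, c = cell
        for dr, dc in MOVES:
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            if abs(height[nr][nc] - height[r][c]) > max_step_height:
                continue  # step too tall for the hypothetical threshold
            new_cost = cost + math.hypot(dr, dc)
            if new_cost < best_cost.get((nr, nc), math.inf):
                best_cost[(nr, nc)] = new_cost
                parent[(nr, nc)] = cell
                heapq.heappush(frontier,
                               (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)))
    return None


# Toy terrain: a 0.5-high ridge in column 2 with a low gap in the bottom row.
terrain = [
    [0.0, 0.0, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.1, 0.0, 0.0],
]
print(plan_body_path(terrain, (0, 0), (0, 4)))
```

In this toy terrain the ridge forces the path to detour through cell (4, 2); raising that cell to 0.5 makes the goal unreachable, and the function returns `None`.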

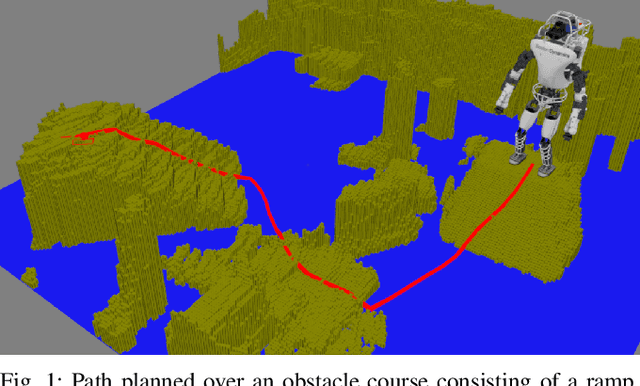

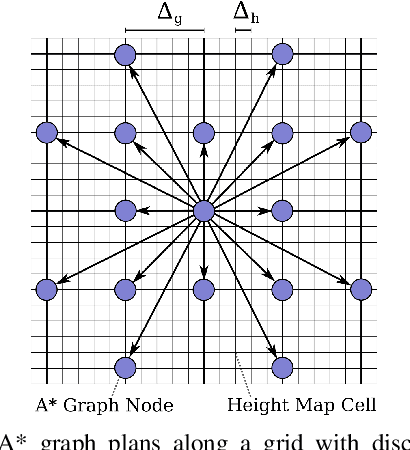

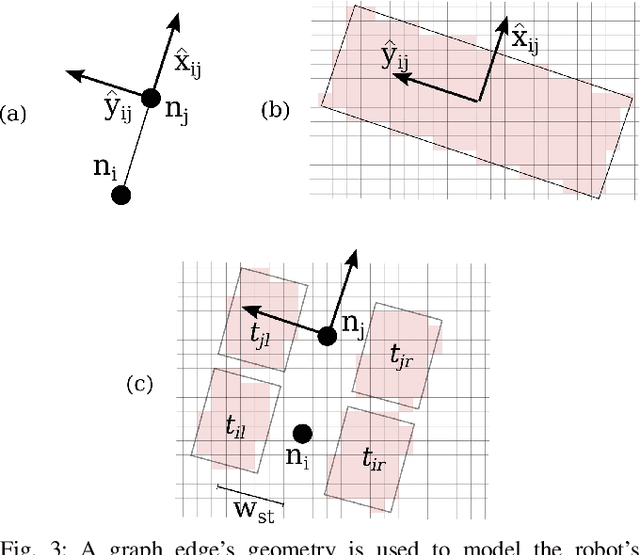

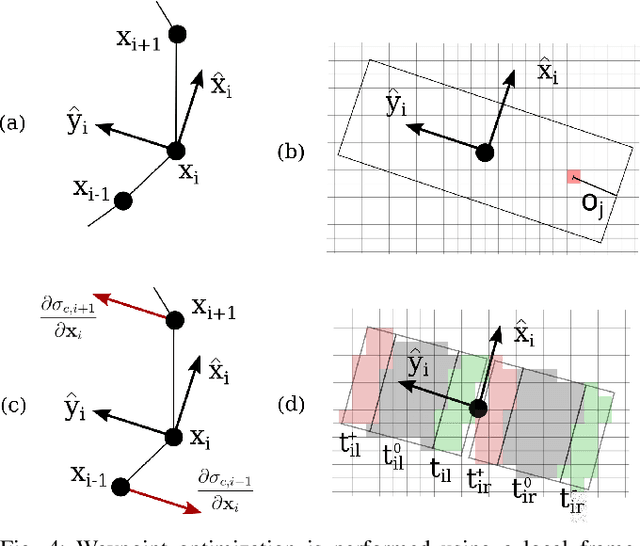

Humanoid Path Planning over Rough Terrain using Traversability Assessment

Mar 01, 2022

Abstract: We present a planning framework designed for humanoid navigation over challenging terrain. The framework plans a traversable, smooth, and collision-free path using a 2.5D height map. The planner consists of two stages. The first stage is an A* planner that reasons about traversability using terrain features. We present a novel cost function that encodes the bipedal gait directly into the graph structure, enabling natural paths that are robust to small gaps in traversability. The second stage is an optimization framework that smooths the path while further improving traversability. The planner is tested on a variety of terrains in simulation and is combined with a footstep planner and balance controller to create an integrated navigation framework, which is demonstrated on a DRC Boston Dynamics Atlas robot.
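
The second, smoothing stage can be sketched as a small optimization over the waypoints produced by the first stage. The objective here is an assumption, not the paper's formulation: it trades a data term that keeps each waypoint near the A* output against a term penalizing squared segment lengths, holds the endpoints fixed, and omits the traversability term the abstract mentions. The weights `w_data` and `w_smooth` are hypothetical. Because the second derivative of the objective along each coordinate is `w_data + 2 * w_smooth`, which equals 1 with the defaults, each unit gradient step lands exactly on that coordinate's minimizer (a Gauss-Seidel sweep).

```python
from typing import List, Tuple

Point = Tuple[float, float]


def smooth_path(path: List[Point], w_data: float = 0.5, w_smooth: float = 0.25,
                iterations: int = 500, tol: float = 1e-9) -> List[Point]:
    """Minimizes
        J(x) = w_data/2 * sum_i |x_i - y_i|^2 + w_smooth/2 * sum_i |x_{i+1} - x_i|^2
    over the interior waypoints x_i, where y is the input path and both
    endpoints stay fixed. Each interior coordinate takes a unit gradient
    step in place, sweeping along the path."""
    y = [tuple(p) for p in path]
    x = [list(p) for p in path]
    for _ in range(iterations):
        total_step = 0.0
        for i in range(1, len(x) - 1):
            for d in range(2):
                grad = (w_data * (x[i][d] - y[i][d])
                        + w_smooth * (2 * x[i][d] - x[i - 1][d] - x[i + 1][d]))
                x[i][d] -= grad
                total_step += abs(grad)
        if total_step < tol:  # converged: no waypoint moved noticeably
            break
    return [(p[0], p[1]) for p in x]


corner = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]
print(smooth_path(corner)[2])
```

On this L-shaped path the corner waypoint (2, 0) moves to (12/7, 2/7), roughly (1.714, 0.286), cutting the corner, while a straight path is returned unchanged.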