Sylvain Bertrand

ZEST: Zero-shot Embodied Skill Transfer for Athletic Robot Control

Jan 30, 2026

Abstract: Achieving robust, human-like whole-body control on humanoid robots for agile, contact-rich behaviors remains a central challenge, demanding heavy per-skill engineering and brittle controller tuning. We introduce ZEST (Zero-shot Embodied Skill Transfer), a streamlined motion-imitation framework that uses reinforcement learning to train policies from diverse sources (high-fidelity motion capture, noisy monocular video, and non-physics-constrained animation) and deploys them to hardware zero-shot. ZEST generalizes across behaviors and platforms without requiring contact labels, reference or observation windows, state estimators, or extensive reward shaping. Its training pipeline combines adaptive sampling, which focuses training on difficult motion segments, with an automatic curriculum based on a model-based assistive wrench; together, these enable dynamic, long-horizon maneuvers. We further provide a procedure for selecting joint-level gains from approximate analytical armature values for closed-chain actuators, along with a refined actuator model. Trained entirely in simulation with moderate domain randomization, ZEST demonstrates remarkable generality. On Boston Dynamics' Atlas humanoid, ZEST learns dynamic, multi-contact skills (e.g., army crawl, breakdancing) from motion capture. It transfers expressive dance and scene-interaction skills, such as box climbing, directly from videos to Atlas and the Unitree G1. Furthermore, it extends across morphologies to the Spot quadruped, enabling acrobatics such as a continuous backflip learned from animation. Together, these results demonstrate robust zero-shot deployment across heterogeneous data sources and embodiments, establishing ZEST as a scalable interface between biological movements and their robotic counterparts.
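As an illustration of the adaptive sampling idea in the ZEST abstract, the Python sketch below keeps a smoothed failure rate for each segment of a reference motion and draws episode start segments in proportion to it. It mixes in a uniform distribution so that easy segments are still revisited. The class name, the exponential-moving-average update, and the mixing and floor parameters are all assumptions for illustration; the abstract does not specify how ZEST measures difficulty or weights segments.

```python
import random


class AdaptiveSegmentSampler:
    """Hypothetical sketch: favor reference-motion segments where training fails."""

    def __init__(self, num_segments, ema=0.1, uniform_mix=0.2, floor=1e-3, seed=0):
        self.fail_rate = [0.5] * num_segments  # neutral prior before any rollouts
        self.ema = ema                          # smoothing factor for failure updates
        self.uniform_mix = uniform_mix          # share of probability spread uniformly
        self.floor = floor                      # avoids an all-zero weight vector
        self.rng = random.Random(seed)

    def probabilities(self):
        """Sampling distribution over segments (sums to 1)."""
        weights = [f + self.floor for f in self.fail_rate]
        total = sum(weights)
        n = len(weights)
        return [(1.0 - self.uniform_mix) * w / total + self.uniform_mix / n for w in weights]

    def sample(self):
        """Draw the segment index where the next training episode starts."""
        probs = self.probabilities()
        return self.rng.choices(range(len(probs)), weights=probs, k=1)[0]

    def update(self, segment, failed):
        """Blend the latest outcome (1 = failure, 0 = success) into the segment's rate."""
        target = 1.0 if failed else 0.0
        old = self.fail_rate[segment]
        self.fail_rate[segment] = (1.0 - self.ema) * old + self.ema * target
```

In a training loop, one would call `sample()` when resetting an episode and `update()` afterwards with whatever failure signal is used (for example, a fall or a tracking-error threshold, both hypothetical here). The uniform mix is a simple guard against forgetting segments the policy has already mastered.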

A Behavior Architecture for Fast Humanoid Robot Door Traversals

Nov 05, 2024

Abstract: Working toward humanoid robots that serve as squad mates in urban operations and other domains, we identified doors as a major area lacking capability development. In this paper, we focus on the ability of humanoid robots to navigate and deal with doors. Human-sized doors are ubiquitous in many environments, and the humanoid form factor is uniquely suited to operating and traversing them. We present an architecture that combines GPU-accelerated perception and a tree-based interactive behavior coordination system with a whole-body motion and walking controller. Our system can perform door traversals on a variety of door types. It supports rapid authoring of behaviors for unseen door types, along with techniques for reusing those authored behaviors. The behaviors are modelled as trees and feature logical reactivity and action sequences that can be executed with layered concurrency to increase speed. Primitive actions are built on top of our existing whole-body controller, which supports manipulation while walking. We include a perception system that uses both neural networks and classical computer vision to detect door mechanisms outside the lab environment. We present operator-robot interdependence analysis charts to explore how human cognition is combined with artificial intelligence to produce complex robot behavior. Finally, we present and discuss real robot performances of fast door traversals on our Nadia humanoid robot. Videos are available online at https://www.youtube.com/playlist?list=PLXuyT8w3JVgMPaB5nWNRNHtqzRK8i68dy.
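The reactive tree structure described in the door-traversal abstract can be sketched with a minimal behavior tree in Python. Here a reactive `Sequence` re-checks its children from the first one on every tick, so a condition that stops holding interrupts the actions after it, and a `Fallback` tries alternatives in order. The node classes, the blackboard dictionary, and the door primitives are hypothetical; the paper's layered concurrency (running actions in parallel) is not modeled.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2
    RUNNING = 3


class Action:
    """Leaf node wrapping a function of the shared blackboard dict."""

    def __init__(self, name, fn):
        self.name = name
        self.fn = fn

    def tick(self, bb):
        return self.fn(bb)


class Condition(Action):
    """Leaf node that succeeds or fails immediately based on a predicate."""

    def __init__(self, name, predicate):
        super().__init__(name, lambda bb: Status.SUCCESS if predicate(bb) else Status.FAILURE)


class Sequence:
    """Reactive sequence: re-ticks from the first child every tick, so a
    condition that stops holding halts the actions after it."""

    def __init__(self, *children):
        self.children = children

    def tick(self, bb):
        for child in self.children:
            status = child.tick(bb)
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback:
    """Tries children in order and returns the first result that is not FAILURE."""

    def __init__(self, *children):
        self.children = children

    def tick(self, bb):
        for child in self.children:
            status = child.tick(bb)
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE


def push_door(bb):
    # Hypothetical primitive: takes two ticks, then reports the door as open.
    bb["push_ticks"] += 1
    if bb["push_ticks"] >= 2:
        bb["door_open"] = True
        return Status.SUCCESS
    return Status.RUNNING


def walk_through(bb):
    # Hypothetical primitive: marks the traversal as done.
    bb["traversed"] = True
    return Status.SUCCESS


door_tree = Sequence(
    Fallback(
        Condition("door open", lambda bb: bb["door_open"]),
        Sequence(Condition("handle detected", lambda bb: bb["handle_seen"]),
                 Action("push door", push_door)),
    ),
    Action("walk through", walk_through),
)
```

Ticking `door_tree` with the blackboard `{"door_open": False, "handle_seen": True, "push_ticks": 0, "traversed": False}` returns `Status.RUNNING` on the first tick and `Status.SUCCESS` on the second, after which `traversed` is set. If no handle is detected, the tree fails without attempting the traversal.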

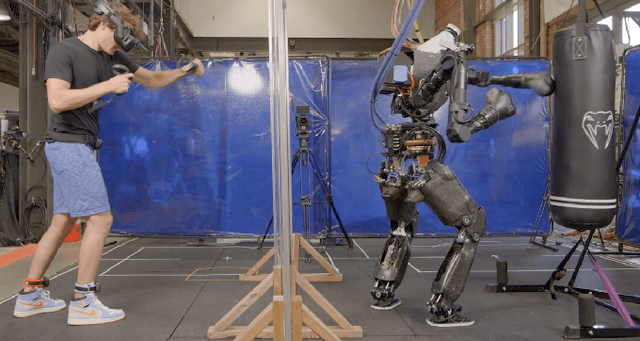

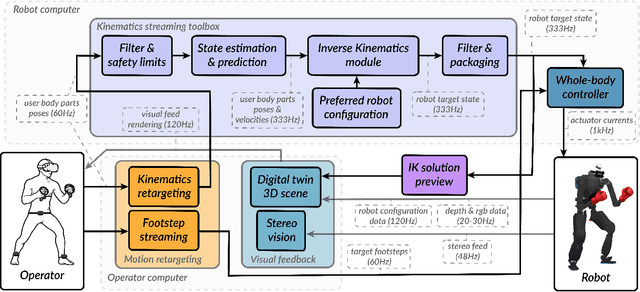

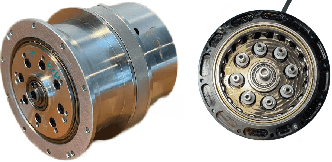

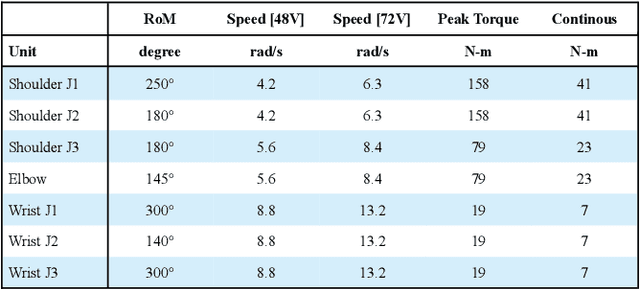

High-Speed and Impact Resilient Teleoperation of Humanoid Robots

Sep 06, 2024

Abstract: Teleoperation of humanoid robots has long been a challenging domain, requiring advances in both hardware and software to achieve seamless and intuitive control. This paper presents an integrated solution built on several elements: calibration-free motion capture and retargeting, a low-latency whole-body kinematics streaming toolbox, and high-bandwidth cycloidal actuators. Our motion retargeting approach stands out for its simplicity, requiring only 7 IMUs to generate full-body references for the robot. The kinematics streaming toolbox ensures real-time, responsive control of the robot's movements, significantly reducing latency and improving operational efficiency. Additionally, the cycloidal actuators allow the robot to withstand high speeds and impacts with the environment. Together, these approaches form a teleoperation framework that offers unprecedented performance. Experimental results on the humanoid robot Nadia demonstrate the effectiveness of the integrated system.
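One basic ingredient of IMU-based retargeting is expressing each body segment's orientation relative to its parent segment. The Python sketch below assumes world-frame unit quaternions (w, x, y, z) from two IMUs that are already aligned with their segments. That alignment is exactly the sensor-to-segment calibration a calibration-free method must avoid or handle differently, so this is not the paper's approach. The twist extraction maps the relative rotation onto a single, hypothetical robot hinge axis.

```python
import math


def quat_mul(a, b):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    w1, x1, y1, z1 = a
    w2, x2, y2, z2 = b
    return (
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    )


def quat_conj(q):
    """Conjugate (inverse for unit quaternions)."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def axis_angle_quat(axis, angle):
    """Unit quaternion for a rotation of `angle` radians about `axis`."""
    n = math.sqrt(sum(c * c for c in axis))
    s = math.sin(angle / 2.0) / n
    return (math.cos(angle / 2.0), axis[0] * s, axis[1] * s, axis[2] * s)


def relative_joint_rotation(q_parent, q_child):
    """Child segment orientation in the parent segment frame, from world-frame IMU
    quaternions; assumes each IMU is already aligned with its segment."""
    return quat_mul(quat_conj(q_parent), q_child)


def twist_angle(q, axis):
    """Signed rotation of q about a unit hinge axis (twist of a swing-twist split)."""
    w, x, y, z = q
    proj = x * axis[0] + y * axis[1] + z * axis[2]
    if w < 0.0:  # q and -q are the same rotation; pick w >= 0 for an angle in [-pi, pi]
        w, proj = -w, -proj
    return 2.0 * math.atan2(proj, w)
```

For instance, a parent IMU rotated 30 degrees and a child IMU rotated 90 degrees about the same vertical axis yield a 60 degree hinge angle about that axis.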

Physically Consistent Online Inertial Adaptation for Humanoid Loco-manipulation

May 13, 2024

Abstract: The ability to accomplish manipulation and locomotion tasks in the presence of significant time-varying external loads is a remarkable human skill that has yet to be convincingly replicated by humanoid robots. Such an ability will be a key requirement in the dull, dirty, and dangerous environments where we envision deploying our robots. External loads constitute a large model bias, which is typically unaccounted for. In this work, we enable our humanoid robot to engage in loco-manipulation tasks in the presence of significant model bias due to external loads. We propose an online estimation and control framework that couples a physically consistent extended Kalman filter for inertial parameter estimation with a whole-body controller. We showcase our results both in simulation and on hardware, where weights are mounted on Nadia's wrist links as a proxy for tasks in which large external loads are applied to the robot.
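A common way to state the "physically consistent" requirement on estimated inertial parameters is that the 4x4 pseudo-inertia matrix J = [[tr(I)/2 * E - I, h], [h^T, m]] must be positive definite, where m is the mass, h = m c is the first mass moment, I is the rotational inertia about the link frame origin, and E is the 3x3 identity. The NumPy sketch below checks that condition. Whether the paper's filter uses this parameterization, or enforces consistency in this way, is an assumption, and the extended Kalman filter itself is not shown.

```python
import numpy as np


def pseudo_inertia(mass, com, inertia_origin):
    """4x4 pseudo-inertia matrix from mass, COM (link frame), and the 3x3
    rotational inertia expressed about the link frame origin."""
    h = mass * np.asarray(com, dtype=float)  # first mass moment
    inertia = np.asarray(inertia_origin, dtype=float)
    J = np.zeros((4, 4))
    J[:3, :3] = 0.5 * np.trace(inertia) * np.eye(3) - inertia  # second-moment matrix
    J[:3, 3] = h
    J[3, :3] = h
    J[3, 3] = mass
    return J


def is_physically_consistent(mass, com, inertia_origin, tol=1e-9):
    """True if J is positive definite (strict physical consistency)."""
    J = pseudo_inertia(mass, com, inertia_origin)
    return bool(np.linalg.eigvalsh(J).min() > tol)
```

This single test covers positive mass, a positive-definite inertia, and the triangle inequalities on the principal moments. In an estimator, a check like this could flag or reject updates that drift into non-physical parameter sets.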

Efficient, Dynamic Locomotion through Step Placement with Straight Legs and Rolling Contacts

Oct 19, 2023

Abstract: For humans, fast, efficient walking over flat ground makes up the vast majority of daily locomotion, and the same will likely be true for an effective, real-world humanoid robot. In this work, we propose a relatively simple, model-based locomotion controller for efficient walking over near-flat ground that uses a novel combination of design features: an ALIP-based step adjustment strategy, stance leg length control as an alternative to center of mass height control, and rolling contact for heel-to-toe motion of the stance foot. We then present results of this controller on our robot Nadia, both in simulation and on hardware. These results validate the controller's ability to perform fast, reliable forward walking at 0.75 m/s, as well as backward walking, side-stepping, turning in place, and push recovery. We also compare the efficiency of the proposed control strategy against our baseline walking controller at three steady-state walking speeds. Lastly, we demonstrate some of the benefits of rolling contact in the stance foot, specifically the reduction in positive and negative work required over the stride.
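To make the ALIP-based step adjustment concrete, the sketch below uses the angular-momentum linear inverted pendulum (ALIP) model with constant COM height H. The COM position x relative to the stance contact and the angular momentum L about that contact evolve as dx/dt = L / (m H) and dL/dt = m g x. Choosing the next foot position so that L reaches a desired value at the end of the next step gives the closed-form offset in `alip_step_offset`. This is the standard form under the assumptions that step duration is fixed and L is continuous at touchdown; the paper's actual strategy may add constraints, lateral terms, or different targets.

```python
import math


def alip_predict(x0, L0, t, mass, height, g=9.81):
    """Closed-form ALIP flow: COM position x relative to the stance contact and
    angular momentum L about that contact after time t."""
    w = math.sqrt(g / height)
    x = math.cosh(w * t) * x0 + math.sinh(w * t) / (mass * height * w) * L0
    L = mass * height * w * math.sinh(w * t) * x0 + math.cosh(w * t) * L0
    return x, L


def alip_step_offset(L_end, L_des, step_time, mass, height, g=9.81):
    """Foot placement relative to the COM at touchdown (positive = ahead) so that
    L about the new contact equals L_des after the next step. Assumes L is
    continuous across the contact switch and a fixed step duration."""
    w = math.sqrt(g / height)
    return (math.cosh(w * step_time) * L_end - L_des) / (
        mass * height * w * math.sinh(w * step_time))
```

Right after touchdown, the COM sits at -u relative to the new foot. Feeding that state back through `alip_predict` for one more step recovers the desired L, which is a quick way to sanity-check the formula.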

Reachability Aware Capture Regions with Time Adjustment and Cross-Over for Step Recovery

Jul 22, 2023

Abstract: For humanoid robots to live up to their potential utility, they must be able to robustly recover from instabilities. In this work, we propose a number of balance enhancements that enable the robot both to achieve specific, desired footholds in the world and to adjust step positions and timing as necessary, while leveraging the ankle and hip. This includes improving the calculation of capture regions for bipedal locomotion to better account for how step constraints affect the ability to recover. We then explore a new strategy for performing cross-over steps to maintain stability, which greatly expands the range of tracking errors from which the robot can recover. Our final contribution is a strategy for time adaptation during the transfer phase to aid recovery. We present these results on our humanoid robot, Nadia, in both simulation and hardware, showing the robot walking over rough terrain, recovering from external disturbances, and taking cross-over steps to maintain balance.
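A much simplified, one-dimensional (sagittal) version of a capture region with step-time adjustment can be written directly from the linear inverted pendulum. With a constant CoP p in the stance foot, the capture point evolves as xi(t) = p + (xi0 - p) exp(omega t). Sweeping the CoP over the stance foot (the ankle strategy) and the swing duration over an allowed window gives an interval of touchdown capture points, which is then intersected with a kinematic reach limit. The function names, the 1D setting, and the interval reach model are assumptions; the hip strategy and cross-over steps are not modeled.

```python
import math


def capture_point_at(xi0, cop, t, omega):
    """LIP capture point after time t with the CoP held at `cop`."""
    return cop + (xi0 - cop) * math.exp(omega * t)


def capture_interval(xi0, cop_range, time_range, omega):
    """Touchdown capture points reachable by choosing the CoP within the stance foot
    (ankle strategy) and the swing duration within time_range. xi is affine in the
    CoP and monotone in t, so its extremes lie at the four corners."""
    corners = [capture_point_at(xi0, p, t, omega) for p in cop_range for t in time_range]
    return min(corners), max(corners)


def reachable_capture_region(xi0, cop_range, time_range, omega, reach_range):
    """Capture interval clipped to the kinematically reachable step interval,
    or None if no reachable foothold captures the robot in one step."""
    lo, hi = capture_interval(xi0, cop_range, time_range, omega)
    lo, hi = max(lo, reach_range[0]), min(hi, reach_range[1])
    return (lo, hi) if lo <= hi else None
```

Allowing the swing duration to vary widens the interval, which is one way to see why time adjustment can recover disturbances that a fixed step time cannot reach.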

A Virtual-Reality Driven Approach for Generating Humanoid Multi-Contact Trajectories

Mar 14, 2023

Abstract: We present a virtual reality (VR) framework designed to intuitively generate humanoid multi-contact maneuvers for use in unstructured environments. Our framework allows the operator to directly manipulate the inverse kinematics objectives that parameterize a trajectory. Kinematic objectives consisting of spatial poses, center-of-mass position, and joint positions are used in an optimization-based inverse kinematics solver to compute whole-body configurations while enforcing static contact stability. Virtual "anchors" allow the operator to freely drag and constrain the robot as well as modify objective weights and constraint sets. The interface's design novelty is a generalized use of anchors, which enables arbitrary postures and contact modes. The operator is aided by visual cues of actuation feasibility and tools for rapid anchor placement. We demonstrate our approach in simulation and on hardware using a NASA Valkyrie humanoid, focusing on multi-contact trajectories that are challenging to generate autonomously or through alternative teleoperation approaches.
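The role of objective weights in optimization-based inverse kinematics can be illustrated with a weighted, damped least-squares solver for a hypothetical planar three-link chain. A high-weight end-effector position objective and a low-weight joint posture objective are combined in one normal-equation step, H dq = g. This is a generic sketch, not the paper's solver; in particular, it omits the static contact stability constraints, the center-of-mass objective, and joint limits.

```python
import numpy as np

LINKS = np.array([1.0, 1.0, 0.5])  # hypothetical link lengths of a planar 3-link chain (m)


def forward_kinematics(q):
    """End-effector position of the planar chain for joint angles q (rad)."""
    angles = np.cumsum(q)
    return np.array([np.sum(LINKS * np.cos(angles)), np.sum(LINKS * np.sin(angles))])


def jacobian(q):
    """2x3 position Jacobian: joint i moves every link from i outward."""
    angles = np.cumsum(q)
    xs, ys = LINKS * np.cos(angles), LINKS * np.sin(angles)
    J = np.zeros((2, len(q)))
    for i in range(len(q)):
        J[0, i] = -np.sum(ys[i:])
        J[1, i] = np.sum(xs[i:])
    return J


def weighted_ik(target, q0, q_nominal, w_task=1.0, w_posture=1e-4,
                damping=1e-6, iters=200, max_step=0.2):
    """Repeatedly solve min w_task|J dq - e_task|^2 + w_posture|dq - e_post|^2 + damping|dq|^2."""
    q = np.array(q0, dtype=float)
    target = np.asarray(target, dtype=float)
    q_nominal = np.asarray(q_nominal, dtype=float)
    n = len(q)
    for _ in range(iters):
        J = jacobian(q)
        e_task = target - forward_kinematics(q)
        e_post = q_nominal - q
        H = w_task * J.T @ J + (w_posture + damping) * np.eye(n)
        g = w_task * J.T @ e_task + w_posture * e_post
        dq = np.linalg.solve(H, g)
        step = np.linalg.norm(dq)
        if step > max_step:  # clamp large steps so big initial errors stay stable
            dq *= max_step / step
        q += dq
    return q
```

Raising `w_posture` relative to `w_task` trades end-effector accuracy for staying near the nominal posture, which mirrors, in a very reduced form, what changing objective weights does in a whole-body IK.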

Proprioceptive State Estimation of Legged Robots with Kinematic Chain Modeling

Sep 12, 2022

Abstract: Legged robot locomotion is a challenging task due to a myriad of sub-problems, such as the hybrid dynamics of foot contact and the effects of the desired gait on the terrain. Accurate and efficient state estimation of the floating base and the leg joints can help alleviate many of these issues by providing feedback to robot controllers. Current state estimation methods rely heavily on a combination of visual and inertial measurements to provide real-time estimates and are thus handicapped in perceptually poor environments. In this work, we show that by leveraging the kinematic chain model of the robot via a factor graph formulation, we can estimate the state of the base and the leg joints using primarily proprioceptive inertial data. We combine preintegrated IMU measurements, forward kinematic computations, and contact detections in a factor-graph-based framework, allowing our state estimate to be constrained by the robot model. Experimental results in simulation and on hardware show that our approach outperforms current proprioceptive state estimation methods by 27% on average, while remaining generalizable to a variety of legged robot platforms. We demonstrate our results both quantitatively and qualitatively on a wide variety of trajectories.
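Preintegrated IMU measurements summarize many high-rate gyroscope and accelerometer samples into a single relative rotation, velocity, and position increment between two keyframes of a factor graph. The NumPy sketch below follows the common discrete preintegration recursion with bias-corrected samples. Gravity is excluded from these increments (it is reintroduced when the factor is evaluated), and noise covariance propagation and bias Jacobians are omitted. The function names and the constant sample period are illustrative assumptions, not the paper's code.

```python
import numpy as np


def skew(v):
    """Cross-product (hat) matrix of a 3-vector."""
    return np.array([[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]])


def so3_exp(phi):
    """Rodrigues' formula: rotation matrix for the rotation vector phi."""
    angle = np.linalg.norm(phi)
    if angle < 1e-12:
        return np.eye(3) + skew(phi)  # first-order approximation near zero
    K = skew(phi / angle)
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)


def preintegrate(gyro, accel, dt, gyro_bias=None, accel_bias=None):
    """Relative rotation, velocity, and position increments between two keyframes,
    expressed in the first keyframe's body frame (gravity not included)."""
    bg = np.zeros(3) if gyro_bias is None else np.asarray(gyro_bias, dtype=float)
    ba = np.zeros(3) if accel_bias is None else np.asarray(accel_bias, dtype=float)
    dR, dv, dp = np.eye(3), np.zeros(3), np.zeros(3)
    for w, a in zip(gyro, accel):
        a_k = dR @ (np.asarray(a, dtype=float) - ba)  # bias-corrected accel in frame i
        dp = dp + dv * dt + 0.5 * a_k * dt**2
        dv = dv + a_k * dt
        dR = dR @ so3_exp((np.asarray(w, dtype=float) - bg) * dt)
    return dR, dv, dp
```

Because the increments depend only on the IMU samples and biases, they can be computed once between keyframes and reused when the optimizer relinearizes, which is what keeps this kind of factor cheap next to the kinematic and contact factors.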

A Fast, Autonomous, Bipedal Walking Behavior over Rapid Regions

Jul 17, 2022

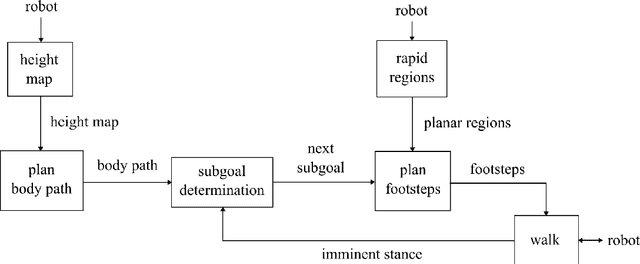

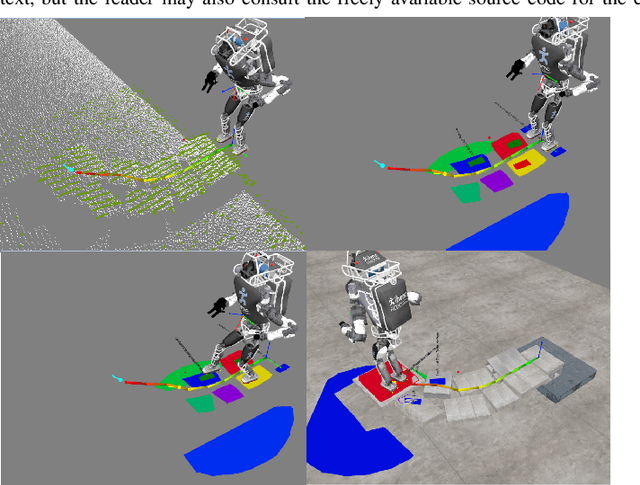

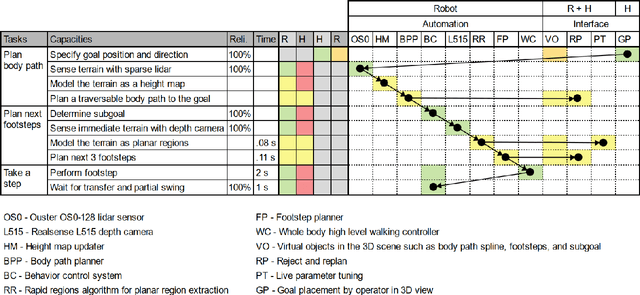

Abstract: In trying to build humanoid robots that perform useful tasks in a world built for humans, we address the problem of autonomous locomotion. Humanoid robot planning and control algorithms for walking over rough terrain are becoming increasingly capable. At the same time, commercially available depth cameras have become more accurate, and GPU computing has become a primary tool in AI research. In this paper, we present a newly constructed behavior control system for achieving fast, autonomous, bipedal walking without pauses or deliberation. We achieve this using a recently published rapid planar regions perception algorithm, a height-map-based body path planner, an A* footstep planner, and a momentum-based walking controller. We put these elements together to form a behavior control system supported by modern software development practices and simulation tools.
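An A* footstep planner searches over candidate footstep poses. As a simplified stand-in, the Python sketch below runs A* over an 8-connected occupancy grid, where each move plays the role of a footstep and a Euclidean distance heuristic keeps the search optimal. The grid representation and the function name are hypothetical; a real footstep planner would expand foot poses subject to kinematic limits and check candidate footholds against the perceived planar regions.

```python
import heapq
import math


def astar(grid, start, goal):
    """A* over an 8-connected occupancy grid (True = blocked).
    Returns (path, cost) with path as a list of (row, col) cells, or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Euclidean distance: admissible and consistent for unit/diagonal moves.
        return math.hypot(cell[0] - goal[0], cell[1] - goal[1])

    moves = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]
    open_heap = [(h(start), 0.0, start)]
    came_from = {start: None}
    best = {start: 0.0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1], g
        if g > best[cell]:
            continue  # stale heap entry superseded by a cheaper one
        for dr, dc in moves:
            nxt = (cell[0] + dr, cell[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols) or grid[nxt[0]][nxt[1]]:
                continue
            ng = g + math.hypot(dr, dc)
            if ng < best.get(nxt, math.inf):
                best[nxt] = ng
                came_from[nxt] = cell
                heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None
```

Swapping the grid moves for a small set of footstep offsets and the occupancy test for a foothold-quality check turns the same search loop into a basic footstep planner.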

Non-Linear Trajectory Optimization for Large Step-Ups: Application to the Humanoid Robot Atlas

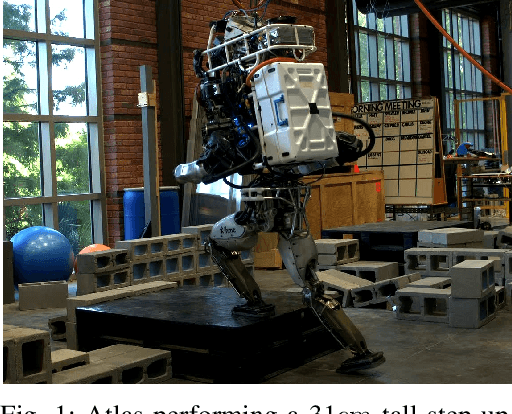

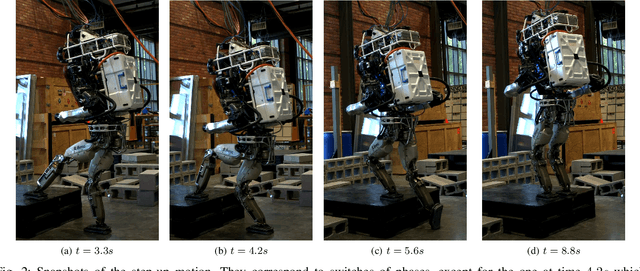

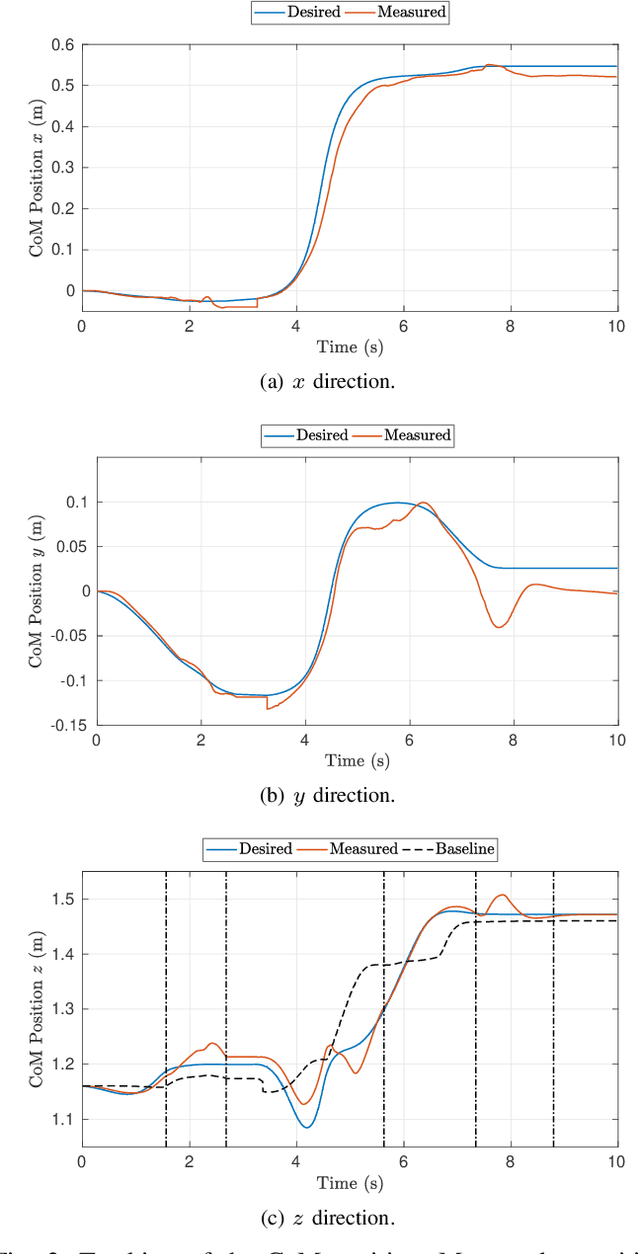

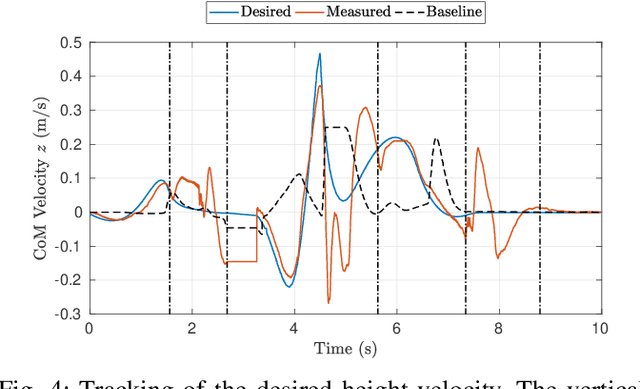

Apr 25, 2020

Abstract: Performing large step-ups is a challenging task for a humanoid robot. It requires the robot to move at the limit of its reachable workspace while straining to lift its body onto the obstacle. This paper presents a non-linear trajectory optimization method for generating step-up motions. We adopt a simplified model of the centroidal dynamics to generate feasible Center of Mass trajectories aimed at reducing the torques required for the step-up motion. The activation and deactivation of contacts at both feet are considered explicitly. The output of the planner is a Center of Mass trajectory plus an optimal duration for each walking phase. These desired values are stabilized by a whole-body controller that determines a set of desired joint torques. We experimentally demonstrate that, by using trajectory optimization techniques, the maximum torque required of the full-size humanoid robot Atlas can be reduced by up to 20% when performing a step-up motion.
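One reason phase durations are useful decision variables in step-up planning can be seen from the vertical component of the centroidal dynamics, m (z_ddot + g) = total vertical contact force. The toy sketch below assumes a minimum-jerk (quintic) Center of Mass rise of height dz over duration T, whose peak acceleration is (10 / sqrt(3)) dz / T^2, and computes the resulting peak vertical force; lengthening T lowers the peak. This is only an illustration, not the paper's non-linear trajectory optimization, and it ignores horizontal motion, contact switching, and the mapping to joint torques.

```python
import math

G = 9.81  # gravitational acceleration (m/s^2)


def min_jerk_accel(dz, T, t):
    """Vertical COM acceleration of a minimum-jerk rise of height dz over duration T."""
    tau = t / T
    return dz / T**2 * (60 * tau - 180 * tau**2 + 120 * tau**3)


def peak_vertical_force(mass, dz, T, samples=2001):
    """Peak total vertical contact force m (g + z_ddot) over the rise, sampled uniformly."""
    return max(mass * (G + min_jerk_accel(dz, T, T * k / (samples - 1)))
               for k in range(samples))
```

Since the peak acceleration scales as 1/T^2, halving a phase duration quadruples the extra force above body weight. An optimizer that treats durations as variables can exploit this trade-off when reducing peak torques.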
