Mehdi Benallegue

CNRS-AIST JRL

QP-Based Control of an Underactuated Aerial Manipulator under Constraints

Jan 13, 2026

Abstract:This paper presents a constraint-aware control framework for underactuated aerial manipulators, enabling accurate end-effector trajectory tracking while explicitly accounting for safety and feasibility constraints. The control problem is formulated as a quadratic program that computes dynamically consistent generalized accelerations subject to underactuation, actuator bounds, and system constraints. To enhance robustness against disturbances, modeling uncertainties, and steady-state errors, a passivity-based integral action is incorporated at the torque level without compromising feasibility. The effectiveness of the proposed approach is demonstrated through high-fidelity physics-based simulations, which include parameter perturbations, viscous joint friction, and realistic sensing and state-estimation effects. This demonstrates accurate tracking, smooth control inputs, and reliable constraint satisfaction under realistic operating conditions.

Humanoid Loco-Manipulations Pattern Generation and Stabilization Control

May 30, 2025

Abstract:In order for a humanoid robot to perform loco-manipulation such as moving an object while walking, it is necessary to account for sustained or alternating external forces other than ground-feet reaction, resulting from humanoid-object contact interactions. In this letter, we propose a bipedal control strategy for humanoid loco-manipulation that can cope with such external forces. First, the basic formulas of the bipedal dynamics, i.e., linear inverted pendulum mode and divergent component of motion, are derived, taking into account the effects of external manipulation forces. Then, we propose a pattern generator to plan center of mass trajectories consistent with the reference trajectory of the manipulation forces, and a stabilizer to compensate for the error between desired and actual manipulation forces. The effectiveness of our controller is assessed both in simulation and loco-manipulation experiments with real humanoid robots.

Robust Humanoid Walking on Compliant and Uneven Terrain with Deep Reinforcement Learning

Apr 18, 2025

Abstract:For the deployment of legged robots in real-world environments, it is essential to develop robust locomotion control methods for challenging terrains that may exhibit unexpected deformability and irregularity. In this paper, we explore the application of sim-to-real deep reinforcement learning (RL) for the design of bipedal locomotion controllers for humanoid robots on compliant and uneven terrains. Our key contribution is to show that a simple training curriculum for exposing the RL agent to randomized terrains in simulation can achieve robust walking on a real humanoid robot using only proprioceptive feedback. We train an end-to-end bipedal locomotion policy using the proposed approach, and show extensive real-robot demonstration on the HRP-5P humanoid over several difficult terrains inside and outside the lab environment. Further, we argue that the robustness of a bipedal walking policy can be improved if the robot is allowed to exhibit aperiodic motion with variable stepping frequency. We propose a new control policy to enable modification of the observed clock signal, leading to adaptive gait frequencies depending on the terrain and command velocity. Through simulation experiments, we show the effectiveness of this policy specifically for walking over challenging terrains by controlling swing and stance durations. The code for training and evaluation is available online at https://github.com/rohanpsingh/LearningHumanoidWalking. Demo video is available at https://www.youtube.com/watch?v=ZgfNzGAkk2Q.

Humanoid Robot RHP Friends: Seamless Combination of Autonomous and Teleoperated Tasks in a Nursing Context

Dec 30, 2024

Abstract:This paper describes RHP Friends, a social humanoid robot developed to enable assistive robotic deployments in human-coexisting environments. As a use-case application, we present its potential use in nursing by extending its capabilities to operate human devices and tools according to the task and by enabling remote assistance operations. To meet a wide variety of tasks and situations in environments designed by and for humans, we developed a system that seamlessly integrates the slim and lightweight robot and several technologies: locomanipulation, multi-contact motion, teleoperation, and object detection and tracking. We demonstrated the system's usage in a nursing application. The robot efficiently performed the daily task of patient transfer and a non-routine task, represented by a request to operate a circuit breaker. This demonstration, held at the 2023 International Robot Exhibition (IREX), was conducted three times a day over three days.

The Kinetics Observer: A Tightly Coupled Estimator for Legged Robots

Jun 19, 2024

Abstract:In this paper, we propose the "Kinetics Observer", a novel estimator addressing the challenge of state estimation for legged robots using proprioceptive sensors (encoders, IMU and force/torque sensors). Based on a Multiplicative Extended Kalman Filter, the Kinetics Observer allows the real-time simultaneous estimation of contact and perturbation forces, and of the robot's kinematics, which are accurate enough to perform proprioceptive odometry. Thanks to a visco-elastic model of the contacts linking their kinematics to the ones of the centroid of the robot, the Kinetics Observer ensures a tight coupling between the whole-body kinematics and dynamics of the robot. This coupling entails a redundancy of the measurements that enhances the robustness and the accuracy of the estimation. This estimator was tested on two humanoid robots performing long distance walking on even terrain and non-coplanar multi-contact locomotion.

Enhanced Visual Feedback with Decoupled Viewpoint Control in Immersive Humanoid Robot Teleoperation using SLAM

Nov 03, 2022

Abstract:In immersive humanoid robot teleoperation, there are three main shortcomings that can alter the transparency of the visual feedback: (i) the lag between the motion of the operator's and the robot's head due to network communication delays or slow robot joint motion, which can cause a noticeable delay in the visual feedback that jeopardizes the embodiment quality, can cause dizziness, and affects the interactivity, resulting in frequent pauses in the operator's motion while the visual feedback settles; (ii) the mismatch between the camera's and the headset's fields of view (FOV), the former generally having a lower FOV; and (iii) a mismatch between the human's and the robot's neck ranges of motion, the latter also being generally lower. To address these drawbacks, we developed a decoupled viewpoint control solution for a humanoid platform which allows visual feedback with low latency and artificially increases the camera's FOV range to match that of the operator's headset. Our novel solution uses SLAM technology to enhance the visual feedback from a reconstructed mesh, complementing the areas that are not covered by the visual feedback from the robot. The visual feedback is presented as a point cloud in real-time to the operator. As a result, the operator is fed with real-time vision from the robot's head orientation by observing the pose of the point cloud. Balancing this kind of awareness and immersion is important in virtual reality based teleoperation, considering the safety and robustness of the control system. An experiment shows the effectiveness of our solution.

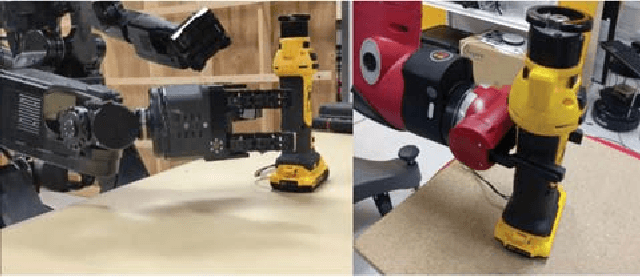

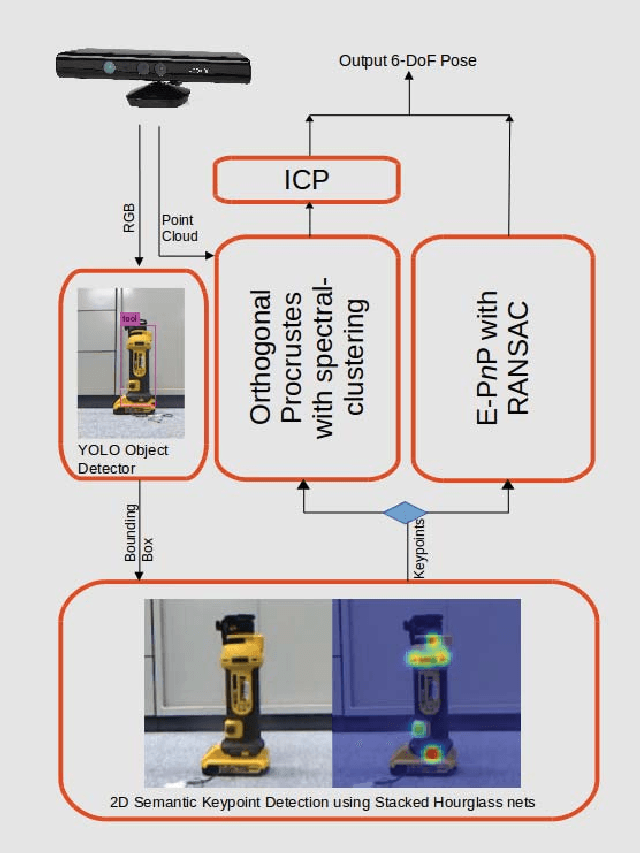

Instance-specific 6-DoF Object Pose Estimation from Minimal Annotations

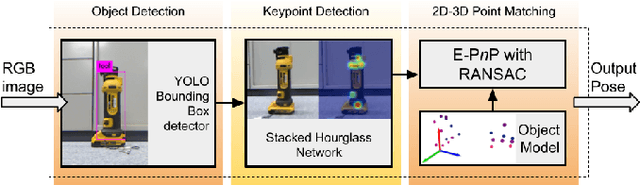

Jul 27, 2022

Abstract:In many robotic applications, the environment setting in which the 6-DoF pose estimation of a known, rigid object and its subsequent grasping is to be performed, remains nearly unchanging and might even be known to the robot in advance. In this paper, we refer to this problem as instance-specific pose estimation: the robot is expected to estimate the pose with a high degree of accuracy in only a limited set of familiar scenarios. Minor changes in the scene, including variations in lighting conditions and background appearance, are acceptable but drastic alterations are not anticipated. To this end, we present a method to rapidly train and deploy a pipeline for estimating the continuous 6-DoF pose of an object from a single RGB image. The key idea is to leverage known camera poses and rigid body geometry to partially automate the generation of a large labeled dataset. The dataset, along with sufficient domain randomization, is then used to supervise the training of deep neural networks for predicting semantic keypoints. Experimentally, we demonstrate the convenience and effectiveness of our proposed method to accurately estimate object pose requiring only a very small amount of manual annotation for training.

* GitHub code: https://github.com/rohanpsingh/ObjectKeypointTrainer

Learning Bipedal Walking On Planned Footsteps For Humanoid Robots

Jul 26, 2022

Abstract:Deep reinforcement learning (RL) based controllers for legged robots have demonstrated impressive robustness for walking in different environments for several robot platforms. To enable the application of RL policies for humanoid robots in real-world settings, it is crucial to build a system that can achieve robust walking in any direction, on 2D and 3D terrains, and be controllable by a user-command. In this paper, we tackle this problem by learning a policy to follow a given step sequence. The policy is trained with the help of a set of procedurally generated step sequences (also called footstep plans). We show that simply feeding the upcoming 2 steps to the policy is sufficient to achieve omnidirectional walking, turning in place, standing, and climbing stairs. Our method employs curriculum learning on the complexity of terrains, and circumvents the need for reference motions or pre-trained weights. We demonstrate the application of our proposed method to learn RL policies for 2 new robot platforms - HRP5P and JVRC-1 - in the MuJoCo simulation environment. The code for training and evaluation is available online.

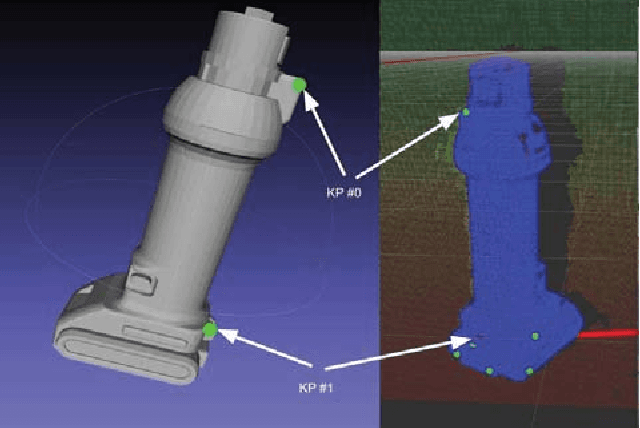

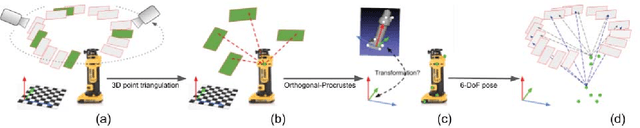

Rapid Pose Label Generation through Sparse Representation of Unknown Objects

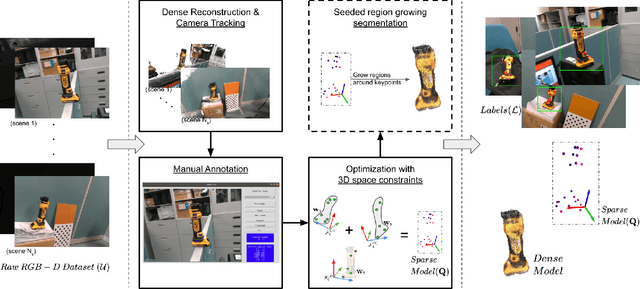

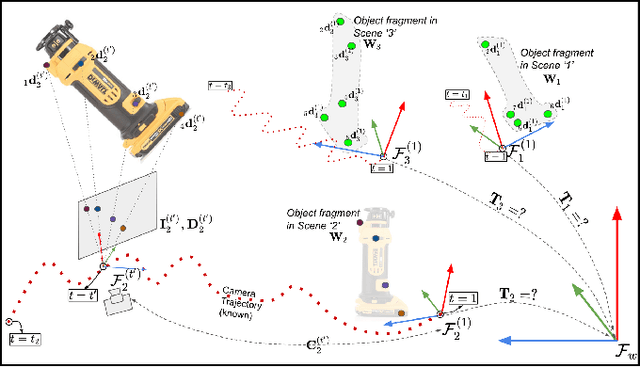

Nov 07, 2020

Abstract:Deep Convolutional Neural Networks (CNNs) have been successfully deployed on robots for 6-DoF object pose estimation through visual perception. However, obtaining labeled data on a scale required for the supervised training of CNNs is a difficult task - exacerbated if the object is novel and a 3D model is unavailable. To this end, this work presents an approach for rapidly generating real-world, pose-annotated RGB-D data for unknown objects. Our method not only circumvents the need for a prior 3D object model (textured or otherwise) but also bypasses complicated setups of fiducial markers, turntables, and sensors. With the help of a human user, we first source minimalistic labelings of an ordered set of arbitrarily chosen keypoints over a set of RGB-D videos. Then, by solving an optimization problem, we combine these labels under a world frame to recover a sparse, keypoint-based representation of the object. The sparse representation leads to the development of a dense model and the pose labels for each image frame in the set of scenes. We show that the sparse model can also be efficiently used for scaling to a large number of new scenes. We demonstrate the practicality of the generated labeled dataset by training a pipeline for 6-DoF object pose estimation and a pixel-wise segmentation network.

On the mechanical contribution of head stabilization to passive dynamics of anthropometric walkers

Oct 29, 2020

Abstract:During steady gait, humans stabilize their head around the vertical orientation. While there are sensori-cognitive explanations for this phenomenon, its mechanical effect on the body dynamics remains unexplored. In this study, we take advantage of the similarities that human steady gait shares with the locomotion of passive dynamics robots. We introduce a simplified anthropometric D model to reproduce a broad walking dynamics. In a previous study, we showed heuristically that the presence of a stabilized head-neck system significantly influences the dynamics of walking. This paper gives new insights that lead to understanding this mechanical effect. In particular, we introduce an original cart upper-body model that allows a better understanding of the mechanical interest of head stabilization when walking, and we study how this effect is sensitive to the choice of control parameters.
