Masaki Murooka

CNRS-AIST JRL

TACT: Humanoid Whole-body Contact Manipulation through Deep Imitation Learning with Tactile Modality

Jun 18, 2025

Abstract:Manipulation with whole-body contact by humanoid robots offers distinct advantages, including enhanced stability and reduced load. On the other hand, we need to address challenges such as the increased computational cost of motion generation and the difficulty of measuring broad-area contact. We therefore have developed a humanoid control system that allows a humanoid robot equipped with tactile sensors on its upper body to learn a policy for whole-body manipulation through imitation learning based on human teleoperation data. This policy, named tactile-modality extended ACT (TACT), has a feature to take multiple sensor modalities as input, including joint position, vision, and tactile measurements. Furthermore, by integrating this policy with retargeting and locomotion control based on a biped model, we demonstrate that the life-size humanoid robot RHP7 Kaleido is capable of achieving whole-body contact manipulation while maintaining balance and walking. Through detailed experimental verification, we show that inputting both vision and tactile modalities into the policy contributes to improving the robustness of manipulation involving broad and delicate contact.

Humanoid Loco-Manipulations Pattern Generation and Stabilization Control

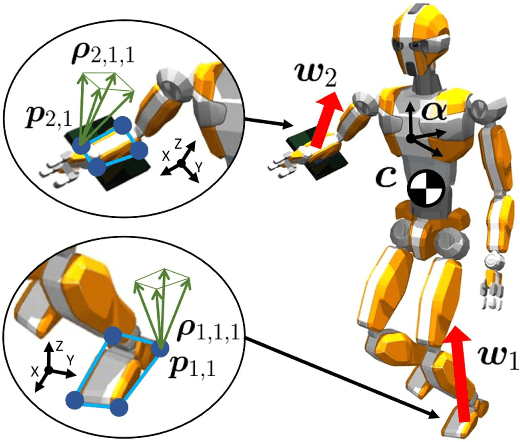

May 30, 2025

Abstract:In order for a humanoid robot to perform loco-manipulation such as moving an object while walking, it is necessary to account for sustained or alternating external forces other than ground-feet reaction, resulting from humanoid-object contact interactions. In this letter, we propose a bipedal control strategy for humanoid loco-manipulation that can cope with such external forces. First, the basic formulas of the bipedal dynamics, i.e., linear inverted pendulum mode and divergent component of motion, are derived, taking into account the effects of external manipulation forces. Then, we propose a pattern generator to plan center of mass trajectories consistent with the reference trajectory of the manipulation forces, and a stabilizer to compensate for the error between desired and actual manipulation forces. The effectiveness of our controller is assessed both in simulation and loco-manipulation experiments with real humanoid robots.
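For readers unfamiliar with the two quantities named in this abstract, the following is a minimal sketch of the textbook one-dimensional linear inverted pendulum mode (LIPM) and divergent component of motion (DCM) relations. It deliberately omits the external manipulation forces that the letter adds, so it is not the authors' formulation. The function names, the CoM height, and the numeric values are illustrative assumptions.

```python
import math

GRAVITY = 9.81  # gravitational acceleration [m/s^2]


def natural_frequency(com_height):
    """LIPM natural frequency omega = sqrt(g / h) for a constant CoM height h."""
    return math.sqrt(GRAVITY / com_height)


def com_acceleration(com_pos, zmp, omega):
    """LIPM dynamics without external forces: c_ddot = omega^2 * (c - z)."""
    return omega ** 2 * (com_pos - zmp)


def dcm(com_pos, com_vel, omega):
    """Divergent component of motion: xi = c + c_dot / omega."""
    return com_pos + com_vel / omega


def dcm_velocity(dcm_pos, zmp, omega):
    """First-order DCM dynamics: xi_dot = omega * (xi - z)."""
    return omega * (dcm_pos - zmp)


# Illustrative values (assumptions, not taken from the letter).
omega = natural_frequency(0.8)          # CoM height of 0.8 m
com_pos, com_vel, zmp = 0.0, 0.3, 0.05  # [m], [m/s], [m]
xi = dcm(com_pos, com_vel, omega)

# Differentiating xi = c + c_dot / omega gives xi_dot = c_dot + c_ddot / omega,
# which must agree with the first-order DCM dynamics.
xi_dot_from_com = com_vel + com_acceleration(com_pos, zmp, omega) / omega
xi_dot_from_dcm = dcm_velocity(xi, zmp, omega)
```

The DCM diverges from the zero-moment point at rate `omega`, so placing the ZMP exactly at the DCM makes `dcm_velocity` zero. This is the property that DCM-based walking controllers generally exploit.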

Humanoid Loco-manipulation Planning based on Graph Search and Reachability Maps

May 29, 2025

Abstract:In this letter, we propose an efficient and highly versatile loco-manipulation planning for humanoid robots. Loco-manipulation planning is a key technological brick enabling humanoid robots to autonomously perform object transportation by manipulating them. We formulate planning of the alternation and sequencing of footsteps and grasps as a graph search problem with a new transition model that allows for a flexible representation of loco-manipulation. Our transition model is quickly evaluated by relocating and switching the reachability maps depending on the motion of both the robot and object. We evaluate our approach by applying it to loco-manipulation use-cases, such as a bobbin rolling operation with regrasping, where the motion is automatically planned by our framework.

Centroidal Trajectory Generation and Stabilization based on Preview Control for Humanoid Multi-contact Motion

May 29, 2025

Abstract:Multi-contact motion is important for humanoid robots to work in various environments. We propose a centroidal online trajectory generation and stabilization control for humanoid dynamic multi-contact motion. The proposed method features the drastic reduction of the computational cost by using preview control instead of the conventional model predictive control that considers the constraints of all sample times. By combining preview control with centroidal state feedback for robustness to disturbances and wrench distribution for satisfying contact constraints, we show that the robot can stably perform a variety of multi-contact motions through simulation experiments.

Optimization-based Posture Generation for Whole-body Contact Motion by Contact Point Search on the Body Surface

May 29, 2025

Abstract:Whole-body contact is an effective strategy for improving the stability and efficiency of the motion of robots. For robots to automatically perform such motions, we propose a posture generation method that employs all available surfaces of the robot links. By representing the contact point on the body surface by two-dimensional configuration variables, the joint positions and contact points are simultaneously determined through a gradient-based optimization. By generating motions with the proposed method, we present experiments in which robots manipulate objects effectively utilizing whole-body contact.

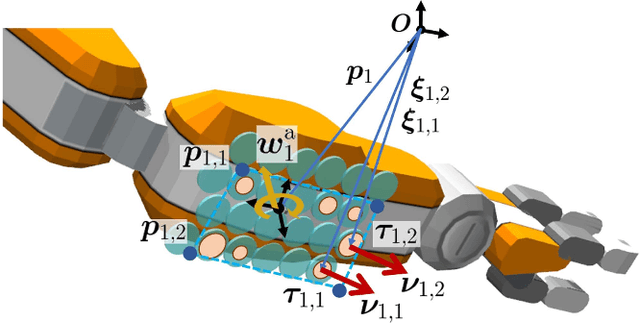

Whole-body Multi-contact Motion Control for Humanoid Robots Based on Distributed Tactile Sensors

May 26, 2025

Abstract:To enable humanoid robots to work robustly in confined environments, multi-contact motion that makes contacts not only at extremities, such as hands and feet, but also at intermediate areas of the limbs, such as knees and elbows, is essential. We develop a method to realize such whole-body multi-contact motion involving contacts at intermediate areas by a humanoid robot. Deformable sheet-shaped distributed tactile sensors are mounted on the surface of the robot's limbs to measure the contact force without significantly changing the robot body shape. The multi-contact motion controller developed earlier, which is dedicated to contact at extremities, is extended to handle contact at intermediate areas, and the robot motion is stabilized by feedback control using not only force/torque sensors but also distributed tactile sensors. Through verification on dynamics simulations, we show that the developed tactile feedback improves the stability of whole-body multi-contact motion against disturbances and environmental errors. Furthermore, the life-sized humanoid RHP Kaleido demonstrates whole-body multi-contact motions, such as stepping forward while supporting the body with forearm contact and balancing in a sitting posture with thigh contacts.

Learning Bimanual Manipulation via Action Chunking and Inter-Arm Coordination with Transformers

Mar 18, 2025

Abstract:Robots that operate autonomously in a human living environment must be able to handle various tasks flexibly. One crucial element is coordinated bimanual movements that enable functions that are difficult to perform with one hand alone. In recent years, learning-based models that focus on the possibilities of bimanual movements have been proposed. However, the high degree of freedom of the robot makes it challenging to reason about control, and the left and right robot arms need to adjust their actions depending on the situation, making it difficult to realize more dexterous tasks. To address the issue, we focus on coordination and efficiency between both arms, particularly for synchronized actions. Therefore, we propose a novel imitation learning architecture that predicts cooperative actions. We differentiate the architecture for both arms and add an intermediate encoder layer, Inter-Arm Coordinated transformer Encoder (IACE), that facilitates synchronization and temporal alignment to ensure smooth and coordinated actions. To verify the effectiveness of our architectures, we perform distinctive bimanual tasks. The experimental results showed that our model demonstrated a high success rate for comparison and suggested a suitable architecture for the policy learning of bimanual manipulation.

Humanoid Robot RHP Friends: Seamless Combination of Autonomous and Teleoperated Tasks in a Nursing Context

Dec 30, 2024

Abstract:This paper describes RHP Friends, a social humanoid robot developed to enable assistive robotic deployments in human-coexisting environments. As a use-case application, we present its potential use in nursing by extending its capabilities to operate human devices and tools according to the task and by enabling remote assistance operations. To meet a wide variety of tasks and situations in environments designed by and for humans, we developed a system that seamlessly integrates the slim and lightweight robot and several technologies: locomanipulation, multi-contact motion, teleoperation, and object detection and tracking. We demonstrated the system's usage in a nursing application. The robot efficiently performed the daily task of patient transfer and a non-routine task, represented by a request to operate a circuit breaker. This demonstration, held at the 2023 International Robot Exhibition (IREX), was conducted three times a day over three days.

The Kinetics Observer: A Tightly Coupled Estimator for Legged Robots

Jun 19, 2024

Abstract:In this paper, we propose the "Kinetics Observer", a novel estimator addressing the challenge of state estimation for legged robots using proprioceptive sensors (encoders, IMU and force/torque sensors). Based on a Multiplicative Extended Kalman Filter, the Kinetics Observer allows the real-time simultaneous estimation of contact and perturbation forces, and of the robot's kinematics, which are accurate enough to perform proprioceptive odometry. Thanks to a visco-elastic model of the contacts linking their kinematics to the ones of the centroid of the robot, the Kinetics Observer ensures a tight coupling between the whole-body kinematics and dynamics of the robot. This coupling entails a redundancy of the measurements that enhances the robustness and the accuracy of the estimation. This estimator was tested on two humanoid robots performing long distance walking on even terrain and non-coplanar multi-contact locomotion.

3D Object Segmentation for Shelf Bin Picking by Humanoid with Deep Learning and Occupancy Voxel Grid Map

Jan 16, 2020

Abstract:Picking objects in a narrow space such as shelf bins is an important task for a humanoid to extract a target object from the environment. In those situations, however, there are many occlusions between the camera and objects, and this makes it difficult to segment the target object three-dimensionally because of the lack of three-dimensional sensor inputs. We address this problem by accumulating segmentation results from multiple camera angles and generating a voxel model of the target object. Our approach consists of two components: the first is object probability prediction for the input image with convolutional networks, and the second is generating a voxel grid map designed for object segmentation. We evaluated the method with a picking task experiment for target objects in narrow shelf bins. Our method generates dense 3D object segments even with occlusions, and the real robot successfully picked target objects from the narrow space.
