Iori Kumagai

CNRS-AIST JRL

Humanoid Loco-Manipulations Pattern Generation and Stabilization Control

May 30, 2025

Abstract: In order for a humanoid robot to perform loco-manipulation such as moving an object while walking, it is necessary to account for sustained or alternating external forces other than ground-feet reaction, resulting from humanoid-object contact interactions. In this letter, we propose a bipedal control strategy for humanoid loco-manipulation that can cope with such external forces. First, the basic formulas of the bipedal dynamics, i.e., linear inverted pendulum mode and divergent component of motion, are derived, taking into account the effects of external manipulation forces. Then, we propose a pattern generator to plan center of mass trajectories consistent with the reference trajectory of the manipulation forces, and a stabilizer to compensate for the error between desired and actual manipulation forces. The effectiveness of our controller is assessed both in simulation and loco-manipulation experiments with real humanoid robots.
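To make the idea of folding manipulation forces into the pendulum dynamics concrete, here is a minimal one-dimensional sketch. It is not the formulation derived in the letter: it assumes a constant CoM height, a purely horizontal external force applied at CoM height, and hypothetical function and parameter names. Under those assumptions the CoM acceleration is ω²(c − p) + f/m and the divergent component of motion ξ = c + ċ/ω evolves as ξ̇ = ω(ξ − p) + f/(mω).

```python
import math

GRAVITY = 9.81  # m/s^2


def lipm_omega(com_height):
    """Natural frequency of the linear inverted pendulum."""
    return math.sqrt(GRAVITY / com_height)


def com_acceleration(com, zmp, ext_force, mass, com_height):
    """CoM acceleration with an extra horizontal force at CoM height (assumption)."""
    omega = lipm_omega(com_height)
    return omega ** 2 * (com - zmp) + ext_force / mass


def dcm(com, com_vel, com_height):
    """Divergent component of motion: xi = c + c_dot / omega."""
    return com + com_vel / lipm_omega(com_height)


def dcm_rate(com, com_vel, zmp, ext_force, mass, com_height):
    """DCM dynamics under the same simplified force model."""
    omega = lipm_omega(com_height)
    xi = dcm(com, com_vel, com_height)
    return omega * (xi - zmp) + ext_force / (mass * omega)


def static_zmp(com, ext_force, mass, com_height):
    """ZMP that keeps the CoM at rest under a constant external force."""
    omega = lipm_omega(com_height)
    return com + ext_force / (mass * omega ** 2)
```

In this toy model, a sustained force pushing the robot forward requires the ZMP to sit ahead of the CoM, which is the kind of offset a force-aware pattern generator would have to plan for.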

Humanoid Loco-manipulation Planning based on Graph Search and Reachability Maps

May 29, 2025

Abstract: In this letter, we propose an efficient and highly versatile loco-manipulation planning method for humanoid robots. Loco-manipulation planning is a key technological brick enabling humanoid robots to autonomously transport objects by manipulating them. We formulate planning of the alternation and sequencing of footsteps and grasps as a graph search problem with a new transition model that allows for a flexible representation of loco-manipulation. Our transition model is quickly evaluated by relocating and switching the reachability maps depending on the motion of both the robot and object. We evaluate our approach by applying it to loco-manipulation use-cases, such as a bobbin rolling operation with regrasping, where the motion is automatically planned by our framework.
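As a rough illustration of planning footsteps and grasps as a graph search, the toy sketch below works on a one-dimensional line. It is not the transition model proposed in the letter: the state layout, the action names, and the `REACHABLE_OFFSETS` set standing in for a reachability map are all hypothetical, and only the "relocating" of a single map with the stance position is mimicked.

```python
from collections import deque

# Hypothetical reachability map: object offsets, relative to the stance
# position, that the hand can reach.
REACHABLE_OFFSETS = frozenset({1, 2})


def reachable(stance, obj):
    """Relocate the map to the current stance and test the object position."""
    return (obj - stance) in REACHABLE_OFFSETS


def successors(state):
    """Yield (action, next_state) pairs; state = (stance, obj, grasping)."""
    stance, obj, grasping = state
    if grasping:
        for step in (-1, 1):  # footstep while keeping the grasp reachable
            if reachable(stance + step, obj):
                yield "step", (stance + step, obj, True)
        for shift in (-1, 1):  # move the object while standing still
            if reachable(stance, obj + shift):
                yield "move_object", (stance, obj + shift, True)
        yield "release", (stance, obj, False)
    else:
        for step in (-1, 1):
            yield "step", (stance + step, obj, False)
        if reachable(stance, obj):
            yield "grasp", (stance, obj, True)


def plan(start, goal_obj, limit=20):
    """Breadth-first search for a shortest action sequence, or None."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state[1] == goal_obj and not state[2]:
            actions = []
            while parent[state] is not None:
                state, action = parent[state]
                actions.append(action)
            return actions[::-1]
        for action, nxt in successors(state):
            in_bounds = abs(nxt[0]) <= limit and abs(nxt[1]) <= limit
            if in_bounds and nxt not in parent:
                parent[nxt] = (state, action)
                queue.append(nxt)
    return None
```

Calling `plan((0, 3, False), 5)` asks for a sequence that moves the object from position 3 to position 5 and releases it; the search interleaves footsteps and object moves because the object cannot be pushed beyond the reachable offsets without stepping.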

Humanoid Robot RHP Friends: Seamless Combination of Autonomous and Teleoperated Tasks in a Nursing Context

Dec 30, 2024

Abstract: This paper describes RHP Friends, a social humanoid robot developed to enable assistive robotic deployments in human-coexisting environments. As a use-case application, we present its potential use in nursing by extending its capabilities to operate human devices and tools according to the task and by enabling remote assistance operations. To meet a wide variety of tasks and situations in environments designed by and for humans, we developed a system that seamlessly integrates the slim and lightweight robot and several technologies: locomanipulation, multi-contact motion, teleoperation, and object detection and tracking. We demonstrated the system's usage in a nursing application. The robot efficiently performed the daily task of patient transfer and a non-routine task, represented by a request to operate a circuit breaker. This demonstration, held at the 2023 International Robot Exhibition (IREX), was conducted three times a day over three days.

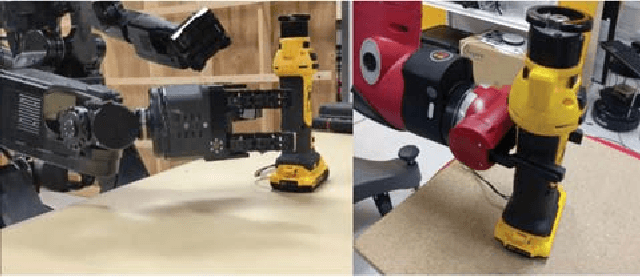

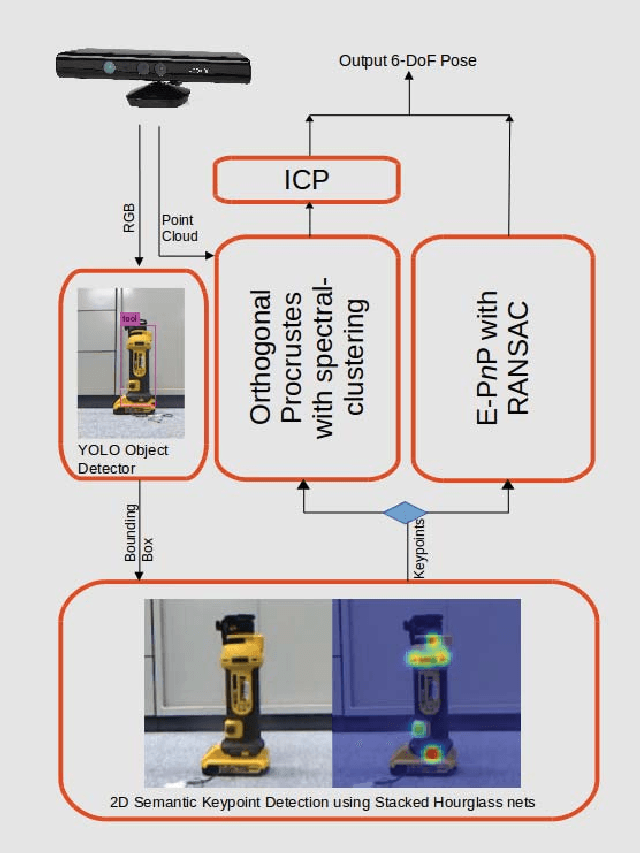

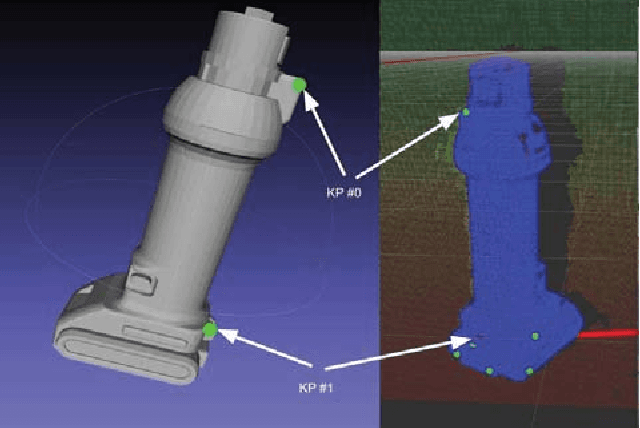

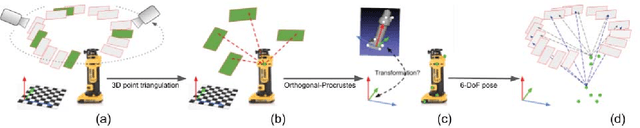

Instance-specific 6-DoF Object Pose Estimation from Minimal Annotations

Jul 27, 2022

Abstract: In many robotic applications, the environment setting in which the 6-DoF pose estimation of a known, rigid object and its subsequent grasping is to be performed, remains nearly unchanging and might even be known to the robot in advance. In this paper, we refer to this problem as instance-specific pose estimation: the robot is expected to estimate the pose with a high degree of accuracy in only a limited set of familiar scenarios. Minor changes in the scene, including variations in lighting conditions and background appearance, are acceptable but drastic alterations are not anticipated. To this end, we present a method to rapidly train and deploy a pipeline for estimating the continuous 6-DoF pose of an object from a single RGB image. The key idea is to leverage known camera poses and rigid body geometry to partially automate the generation of a large labeled dataset. The dataset, along with sufficient domain randomization, is then used to supervise the training of deep neural networks for predicting semantic keypoints. Experimentally, we demonstrate the convenience and effectiveness of our proposed method to accurately estimate object pose requiring only a very small amount of manual annotation for training.

* GitHub code: https://github.com/rohanpsingh/ObjectKeypointTrainer
