Qiegen Liu

Visible Singularities Guided Correlation Network for Limited-Angle CT Reconstruction

Jan 30, 2026

Abstract: Limited-angle computed tomography (LACT) offers the advantages of reduced radiation dose and shortened scanning time. Traditional reconstruction algorithms exhibit various inherent limitations in LACT. Currently, most deep learning-based LACT reconstruction methods focus on multi-domain fusion or the introduction of generic priors, failing to fully align with the core imaging characteristics of LACT, such as the directionality of artifacts and the directional loss of structural information, which are caused by the absence of projection angles in certain directions. Inspired by the theory of visible and invisible singularities and taking into account these core imaging characteristics, we propose a Visible Singularities Guided Correlation network for LACT reconstruction (VSGC). The design philosophy of VSGC consists of two core steps: first, extract visible-singularity (VS) edge features from LACT images and focus the model's attention on these VS; second, establish correlations between the VS edge features and other regions of the image. Additionally, a multi-scale loss function with an anisotropic constraint is employed to constrain the model to converge in multiple aspects. Finally, qualitative and quantitative validations are conducted on both simulated and real datasets to verify the effectiveness and feasibility of the proposed design. In particular, in comparison with alternative methods, VSGC delivers more prominent performance in small angular ranges, with a PSNR improvement of 2.45 dB and an SSIM enhancement of 1.5%. The code is publicly available at https://github.com/yqx7150/VSGC.
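
For readers who want to place the quoted PSNR gain in context, the standard PSNR definition can be sketched as follows. This is a minimal illustration, not code from the VSGC repository: the function name `psnr`, the flat-list image representation, and the sample pixel values are all assumptions made for the example.

```python
import math


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Images are given as flat sequences of floats (an assumption of this
    sketch); `peak` is the maximum possible pixel value.
    """
    if len(reference) != len(estimate):
        raise ValueError("images must have the same number of pixels")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# Hypothetical 4-pixel example with intensities in [0, 1].
reference = [0.0, 0.5, 1.0, 0.5]
estimate = [0.1, 0.5, 0.9, 0.5]
value_db = psnr(reference, estimate)

# At a fixed peak value, a gain of g dB means the MSE shrinks by 10 ** (g / 10).
mse_ratio_for_2_45_db = 10 ** (2.45 / 10)
```

Because PSNR is logarithmic in the mean squared error, a 2.45 dB gain at a fixed peak value corresponds to an MSE that is lower by a factor of roughly 1.76.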

LaminoDiff: Artifact-Free Computed Laminography in Non-Destructive Testing via Diffusion Model

Jan 12, 2026

Abstract: Computed Laminography (CL) is a key non-destructive testing technology for the visualization of internal structures in large planar objects. The inherent scanning geometry of CL inevitably results in inter-layer aliasing artifacts, limiting its practical application, particularly in electronic component inspection. While deep learning (DL) provides a powerful paradigm for artifact removal, its effectiveness is often limited by the domain gap between synthetic data and real-world data. In this work, we present LaminoDiff, a framework to integrate a diffusion model with a high-fidelity prior representation to bridge the domain gap in CL imaging. This prior, generated via a dual-modal CT-CL fusion strategy, is integrated into the proposed network as a conditional constraint. This integration ensures high-precision preservation of circuit structures and geometric fidelity while suppressing artifacts. Extensive experiments on both simulated and real PCB datasets demonstrate that LaminoDiff achieves high-fidelity reconstruction with competitive performance in artifact suppression and detail recovery. More importantly, the results facilitate reliable automated defect recognition.

Anatomy Aware Cascade Network: Bridging Epistemic Uncertainty and Geometric Manifold for 3D Tooth Segmentation

Jan 12, 2026

Abstract: Accurate three-dimensional (3D) tooth segmentation from Cone-Beam Computed Tomography (CBCT) is a prerequisite for digital dental workflows. However, achieving high-fidelity segmentation remains challenging due to adhesion artifacts in naturally occluded scans, which are caused by low contrast and indistinct inter-arch boundaries. To address these limitations, we propose the Anatomy Aware Cascade Network (AACNet), a coarse-to-fine framework designed to resolve boundary ambiguity while maintaining global structural consistency. Specifically, we introduce two mechanisms: the Ambiguity Gated Boundary Refiner (AGBR) and the Signed Distance Map guided Anatomical Attention (SDMAA). The AGBR employs an entropy-based gating mechanism to perform targeted feature rectification in high-uncertainty transition zones. Meanwhile, the SDMAA integrates implicit geometric constraints via a signed distance map to enforce topological consistency, preventing the loss of spatial details associated with standard pooling. Experimental results on a dataset of 125 CBCT volumes demonstrate that AACNet achieves a Dice Similarity Coefficient of 90.17% and a 95% Hausdorff Distance (HD95) of 3.63 mm, significantly outperforming state-of-the-art methods. Furthermore, the model exhibits strong generalization on an external dataset with an HD95 of 2.19 mm, validating its reliability for downstream clinical applications such as surgical planning. Code for AACNet is available at https://github.com/shiliu0114/AACNet.
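
Two quantities named in this abstract have simple textbook forms, sketched below: the Dice similarity coefficient used for evaluation, and a binary-entropy map of the kind an entropy-based gate could threshold. This is an illustrative sketch only and is not the AGBR module or any code from the AACNet repository: the function names, the flat-list mask representation, the empty-mask convention, and the threshold value are all assumptions.

```python
import math


def dice_coefficient(pred, target):
    """Dice similarity coefficient 2|A∩B| / (|A| + |B|) for binary masks.

    Masks are flat sequences of 0/1 values (an assumption of this sketch).
    """
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return 2.0 * intersection / total


def binary_entropy(p):
    """Shannon entropy in bits of a foreground probability p in [0, 1]."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def uncertainty_gate(probabilities, threshold=0.5):
    """Flag voxels whose predictive entropy exceeds a hypothetical threshold."""
    return [binary_entropy(p) > threshold for p in probabilities]


# Hypothetical 5-voxel masks and 4-voxel probability map.
dice = dice_coefficient([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])
gate = uncertainty_gate([0.02, 0.5, 0.97, 0.6])
```

In this toy example only the voxels with probabilities near 0.5 are flagged, which is the intuition behind restricting refinement to uncertain transition zones.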

WHU-PCPR: A cross-platform heterogeneous point cloud dataset for place recognition in complex urban scenes

Jan 10, 2026

Abstract: Point Cloud-based Place Recognition (PCPR) demonstrates considerable potential in applications such as autonomous driving, robot localization and navigation, and map update. In practical applications, point clouds used for place recognition are often acquired from different platforms and LiDARs across varying scenes. However, existing PCPR datasets lack diversity in scenes, platforms, and sensors, which limits the effective development of related research. To address this gap, we establish WHU-PCPR, a cross-platform heterogeneous point cloud dataset designed for place recognition. The dataset differentiates itself from existing datasets through its distinctive characteristics: 1) Cross-platform heterogeneous point clouds: collected from survey-grade vehicle-mounted Mobile Laser Scanning (MLS) systems and low-cost Portable helmet-mounted Laser Scanning (PLS) systems, each equipped with distinct mechanical and solid-state LiDAR sensors. 2) Complex localization scenes: encompassing real-time and long-term changes in both urban and campus road scenes. 3) Large-scale spatial coverage: featuring 82.3 km of trajectory over a 60-month period and an unrepeated route of approximately 30 km. Based on WHU-PCPR, we conduct extensive evaluation and in-depth analysis of several representative PCPR methods, and provide a concise discussion of key challenges and future research directions. The dataset and benchmark code are available at https://github.com/zouxianghong/WHU-PCPR.

Meta-information Guided Cross-domain Synergistic Diffusion Model for Low-dose PET Reconstruction

Dec 23, 2025

Abstract: Low-dose PET imaging is crucial for reducing patient radiation exposure but faces challenges like noise interference, reduced contrast, and difficulty in preserving physiological details. Existing methods often neglect both projection-domain physics knowledge and patient-specific meta-information, which are critical for functional-semantic correlation mining. In this study, we introduce a meta-information guided cross-domain synergistic diffusion model (MiG-DM) that integrates comprehensive cross-modal priors to generate high-quality PET images. Specifically, a meta-information encoding module transforms clinical parameters into semantic prompts by considering patient characteristics, dose-related information, and semi-quantitative parameters, enabling cross-modal alignment between textual meta-information and image reconstruction. Additionally, the cross-domain architecture combines projection-domain and image-domain processing. In the projection domain, a specialized sinogram adapter captures global physical structures through convolution operations equivalent to global image-domain filtering. Experiments on the UDPET public dataset and clinical datasets with varying dose levels demonstrate that MiG-DM outperforms state-of-the-art methods in enhancing PET image quality and preserving physiological details.

Iterative Diffusion-Refined Neural Attenuation Fields for Multi-Source Stationary CT Reconstruction: NAF Meets Diffusion Model

Nov 18, 2025

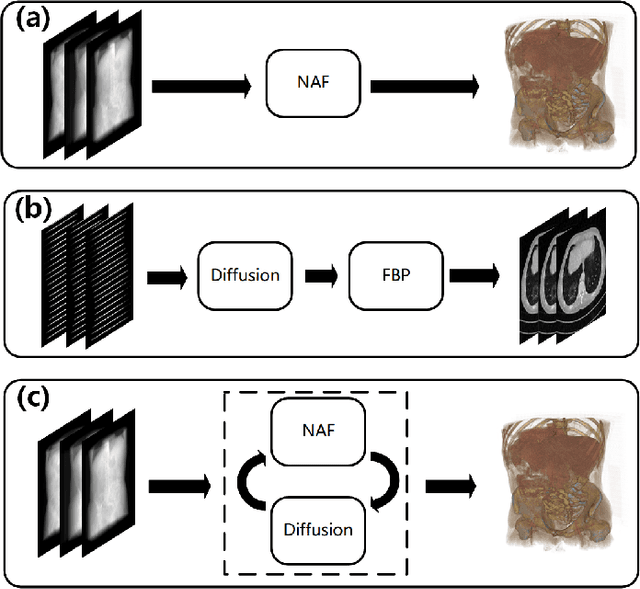

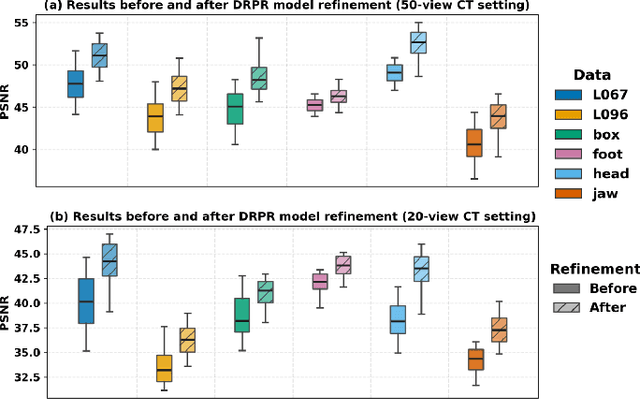

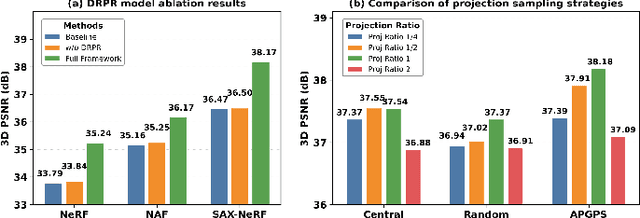

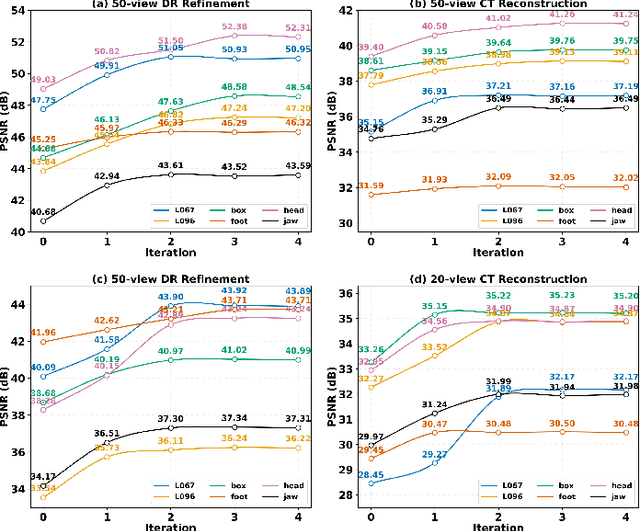

Abstract: Multi-source stationary computed tomography (CT) has recently attracted attention for its ability to achieve rapid image reconstruction, making it suitable for time-sensitive clinical and industrial applications. However, practical systems are often constrained by ultra-sparse-view sampling, which significantly degrades reconstruction quality. Traditional methods struggle under ultra-sparse-view settings, where interpolation becomes inaccurate and the resulting reconstructions are unsatisfactory. To address this challenge, this study proposes Diffusion-Refined Neural Attenuation Fields (Diff-NAF), an iterative framework tailored for multi-source stationary CT under ultra-sparse-view conditions. Diff-NAF combines a Neural Attenuation Field representation with a dual-branch conditional diffusion model. The process begins by training an initial NAF using ultra-sparse-view projections. New projections are then generated through an Angle-Prior Guided Projection Synthesis strategy that exploits inter-view priors, and are subsequently refined by a Diffusion-driven Reuse Projection Refinement Module. The refined projections are incorporated as pseudo-labels into the training set for the next iteration. Through iterative refinement, Diff-NAF progressively enhances projection completeness and reconstruction fidelity under ultra-sparse-view conditions, ultimately yielding high-quality CT reconstructions. Experimental results on multiple simulated 3D CT volumes and real projection data demonstrate that Diff-NAF achieves the best performance under ultra-sparse-view conditions.

ALL-PET: A Low-resource and Low-shot PET Foundation Model in the Projection Domain

Sep 11, 2025

Abstract: Building a large-scale foundation model for PET imaging is hindered by limited access to labeled data and insufficient computational resources. To overcome data scarcity and efficiency limitations, we propose ALL-PET, a low-resource, low-shot PET foundation model operating directly in the projection domain. ALL-PET leverages a latent diffusion model (LDM) with three key innovations. First, we design a Radon mask augmentation strategy (RMAS) that generates over 200,000 structurally diverse training samples by projecting randomized image-domain masks into sinogram space, significantly improving generalization with minimal data. This is extended by a dynamic multi-mask (DMM) mechanism that varies mask quantity and distribution, enhancing data diversity without added model complexity. Second, we implement positive/negative mask constraints to embed strict geometric consistency, reducing parameter burden while preserving generation quality. Third, we introduce transparent medical attention (TMA), a parameter-free, geometry-driven mechanism that enhances lesion-related regions in raw projection data. Lesion-focused attention maps are derived from coarse segmentation, covering both hypermetabolic and hypometabolic areas, and projected into sinogram space for physically consistent guidance. The system supports clinician-defined ROI adjustments, ensuring flexible, interpretable, and task-adaptive emphasis aligned with PET acquisition physics. Experimental results show ALL-PET achieves high-quality sinogram generation using only 500 samples, with performance comparable to models trained on larger datasets. ALL-PET generalizes across tasks including low-dose reconstruction, attenuation correction, delayed-frame prediction, and tracer separation, operating efficiently with memory use under 24 GB.
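
The idea of projecting a randomized image-domain mask into sinogram space can be illustrated with the one shape whose parallel-beam Radon transform has a simple closed form, a disk. This is a hedged sketch and not the RMAS implementation: the abstract does not specify the mask shapes, geometry, or sampling, so the disk mask, the parallel-beam geometry, the parameter ranges, and every function name below are assumptions.

```python
import math
import random


def disk_mask_sinogram(cx, cy, radius, num_angles, num_bins, half_width=1.0):
    """Analytic parallel-beam Radon transform of a disk indicator mask.

    For a disk of the given radius centred at (cx, cy), the line integral at
    angle theta and detector offset s is the chord length
    2 * sqrt(radius**2 - (s - c)**2), with c = cx*cos(theta) + cy*sin(theta).
    Returns a list of num_angles rows, each with num_bins detector values.
    """
    sinogram = []
    for a in range(num_angles):
        theta = math.pi * a / num_angles  # angles sampled over [0, pi)
        center = cx * math.cos(theta) + cy * math.sin(theta)
        row = []
        for b in range(num_bins):
            # Detector bin centres span [-half_width, half_width].
            s = -half_width + 2.0 * half_width * (b + 0.5) / num_bins
            d2 = radius ** 2 - (s - center) ** 2
            row.append(2.0 * math.sqrt(d2) if d2 > 0 else 0.0)
        sinogram.append(row)
    return sinogram


def random_disk_sinogram(rng, num_angles=8, num_bins=16):
    """Draw a random disk (hypothetical parameter ranges) and project it."""
    radius = rng.uniform(0.1, 0.3)
    cx = rng.uniform(-0.5, 0.5)
    cy = rng.uniform(-0.5, 0.5)
    return disk_mask_sinogram(cx, cy, radius, num_angles, num_bins)


# A centred disk produces the same profile at every angle.
centred = disk_mask_sinogram(0.0, 0.0, 0.5, num_angles=4, num_bins=5)
sample = random_disk_sinogram(random.Random(0))
```

An off-centre disk traces a sinusoidal band across the angles, which is why masks defined in the image domain stay geometrically consistent once they are expressed in sinogram space.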

UniSino: Physics-Driven Foundational Model for Universal CT Sinogram Standardization

Aug 25, 2025

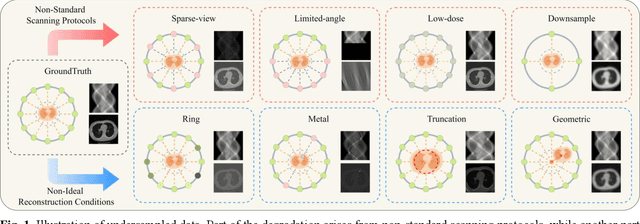

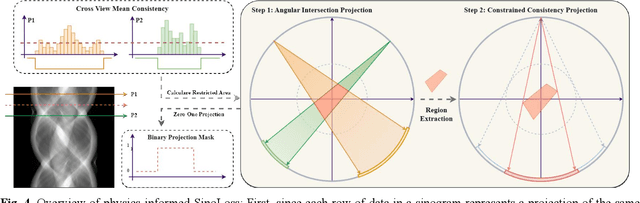

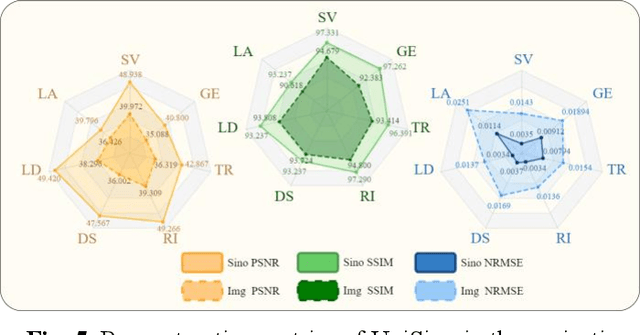

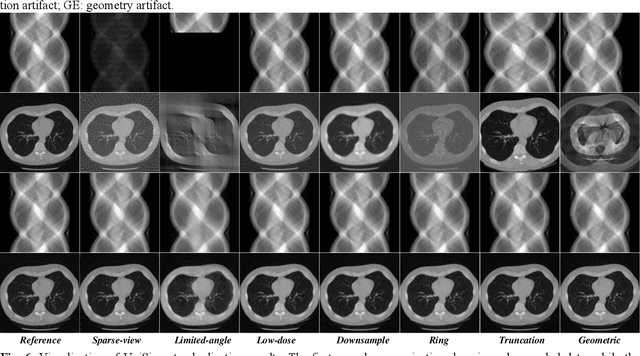

Abstract: During raw-data acquisition in CT imaging, diverse factors can degrade the collected sinograms, with undersampling and noise leading to severe artifacts and noise in reconstructed images and compromising diagnostic accuracy. Conventional correction methods rely on manually designed algorithms or fixed empirical parameters, but these approaches often lack generalizability across heterogeneous artifact types. To address these limitations, we propose UniSino, a foundation model for universal CT sinogram standardization. Unlike existing foundation models that operate in the image domain, UniSino directly standardizes data in the projection domain, which enables stronger generalization across diverse undersampling scenarios. Its training framework incorporates the physical characteristics of sinograms, enhancing generalization and enabling robust performance across multiple subtasks spanning four benchmark datasets. Experimental results demonstrate that UniSino achieves superior reconstruction quality in both single and mixed undersampling cases, demonstrating exceptional robustness and generalization in sinogram enhancement for CT imaging. The code is available at: https://github.com/yqx7150/UniSino.

PRO: Projection Domain Synthesis for CT Imaging

Jun 16, 2025

Abstract: Synthesizing high quality CT images remains a significant challenge due to the limited availability of annotated data and the complex nature of CT imaging. In this work, we present PRO, a novel framework that, to the best of our knowledge, is the first to perform CT image synthesis in the projection domain using latent diffusion models. Unlike previous approaches that operate in the image domain, PRO learns rich structural representations from raw projection data and leverages anatomical text prompts for controllable synthesis. This projection domain strategy enables more faithful modeling of underlying imaging physics and anatomical structures. Moreover, PRO functions as a foundation model, capable of generalizing across diverse downstream tasks by adjusting its generative behavior via prompt inputs. Experimental results demonstrated that incorporating our synthesized data significantly improves performance across multiple downstream tasks, including low-dose and sparse-view reconstruction, even with limited training data. These findings underscore the versatility and scalability of PRO in data generation for various CT applications. These results highlight the potential of projection domain synthesis as a powerful tool for data augmentation and robust CT imaging. Our source code is publicly available at: https://github.com/yqx7150/PRO.

Ordered-subsets Multi-diffusion Model for Sparse-view CT Reconstruction

May 15, 2025

Abstract: Score-based diffusion models have shown significant promise in the field of sparse-view CT reconstruction. However, the projection dataset is large and riddled with redundancy. Consequently, applying the diffusion model to unprocessed data results in lower learning effectiveness and higher learning difficulty, frequently leading to reconstructed images that lack fine details. To address these issues, we propose the ordered-subsets multi-diffusion model (OSMM) for sparse-view CT reconstruction. The OSMM divides the CT projection data into equal subsets and employs a multi-subsets diffusion model (MSDM) to learn from each subset independently. This targeted learning approach reduces complexity and enhances the reconstruction of fine details. Furthermore, the integration of a one-whole diffusion model (OWDM) with complete sinogram data acts as a global information constraint, which can reduce the possibility of generating erroneous or inconsistent sinogram information. Moreover, the OSMM's unsupervised learning framework provides strong robustness and generalizability, adapting seamlessly to varying sparsity levels of CT sinograms. This ensures consistent and reliable performance across different clinical scenarios. Experimental results demonstrate that OSMM outperforms traditional diffusion models in terms of image quality and noise resilience, offering a powerful and versatile solution for advanced CT imaging in sparse-view scenarios.
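
The step of dividing projection data into equal subsets can be sketched as a partition of the view (angle) indices of a sinogram. The abstract does not say how the subsets are formed, so the interleaved assignment below, borrowed from classical ordered-subsets reconstruction schemes, is an assumption, as are the function names and the list-of-rows sinogram layout.

```python
def ordered_subsets(num_views, num_subsets):
    """Split view indices 0..num_views-1 into equal, interleaved subsets.

    Subset k holds views k, k + num_subsets, k + 2*num_subsets, ...
    (interleaving is an assumption of this sketch).
    """
    if num_subsets <= 0:
        raise ValueError("num_subsets must be positive")
    if num_views % num_subsets != 0:
        raise ValueError("num_views must be divisible by num_subsets")
    return [list(range(start, num_views, num_subsets))
            for start in range(num_subsets)]


def split_sinogram(sinogram, num_subsets):
    """Return one sub-sinogram per subset; rows of `sinogram` are views."""
    subsets = ordered_subsets(len(sinogram), num_subsets)
    return [[sinogram[i] for i in indices] for indices in subsets]


# Hypothetical sinogram with 12 views and 2 detector bins per view.
toy_sinogram = [[float(v), float(v) + 0.5] for v in range(12)]
index_subsets = ordered_subsets(12, 3)
sub_sinograms = split_sinogram(toy_sinogram, 3)
```

With interleaving, each subset still covers the full angular range at a coarser spacing, so a model trained on any one subset sees every direction while the complete sinogram remains available as a global constraint.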
