Noah Maul

Pattern Recognition Lab, FAU Erlangen-Nürnberg, Germany; Siemens Healthcare GmbH, Forchheim, Germany

Comparison Study: Glacier Calving Front Delineation in Synthetic Aperture Radar Images With Deep Learning

Jan 09, 2025

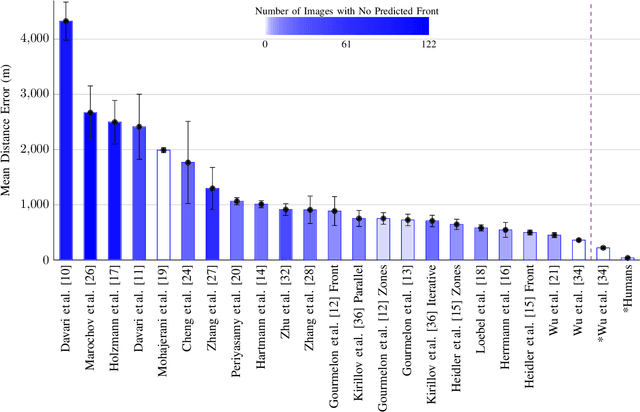

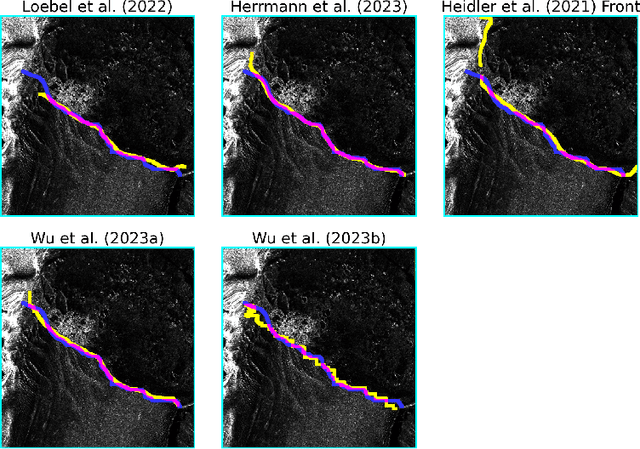

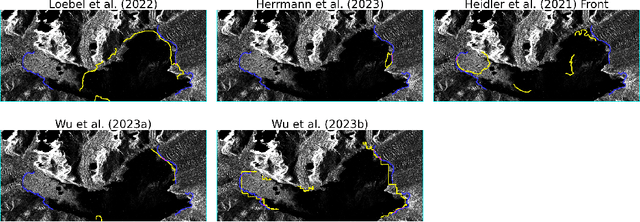

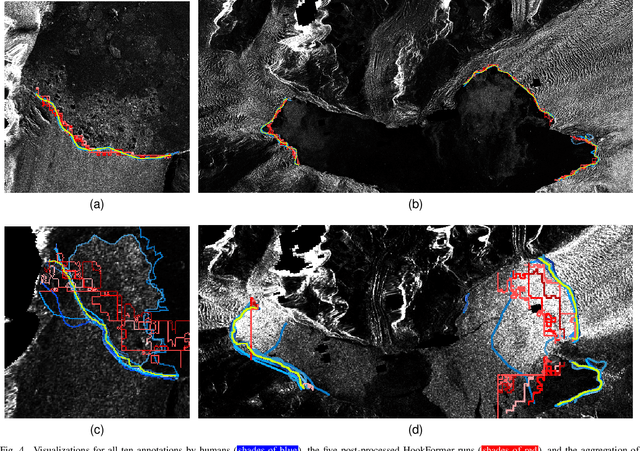

Abstract: Calving front position variation of marine-terminating glaciers is an indicator of ice mass loss and a crucial parameter in numerical glacier models. Deep Learning (DL) systems can automatically extract this position from Synthetic Aperture Radar (SAR) imagery, enabling continuous, weather- and illumination-independent, large-scale monitoring. This study presents the first comparison of DL systems on a common calving front benchmark dataset. A multi-annotator study with ten annotators is performed to contrast the best-performing DL system against human performance. The best DL model's outputs deviate 221 m on average, while the average deviation of the human annotators is 38 m. This significant difference shows that current DL systems do not yet match human performance and that further research is needed to enable fully automated monitoring of glacier calving fronts. The study of Vision Transformers, foundation models, and the inclusion and processing strategy of more information are identified as avenues for future research.

Neural Image Unfolding: Flattening Sparse Anatomical Structures using Neural Fields

Nov 27, 2024

Abstract: Tomographic imaging reveals internal structures of 3D objects and is crucial for medical diagnoses. Visualizing the morphology and appearance of non-planar sparse anatomical structures that extend over multiple 2D slices in tomographic volumes is inherently difficult but valuable for decision-making and reporting. Hence, various organ-specific unfolding techniques exist to map their densely sampled 3D surfaces to a distortion-minimized 2D representation. However, there is no versatile framework to flatten complex sparse structures including vascular, duct or bone systems. We deploy a neural field to fit the transformation of the anatomy of interest to a 2D overview image. We further propose distortion regularization strategies and combine geometric with intensity-based loss formulations to also display non-annotated and auxiliary targets. In addition to improved versatility, our unfolding technique outperforms mesh-based baselines for sparse structures w.r.t. peak distortion and our regularization scheme yields smoother transformations compared to Jacobian formulations from neural field-based image registration.

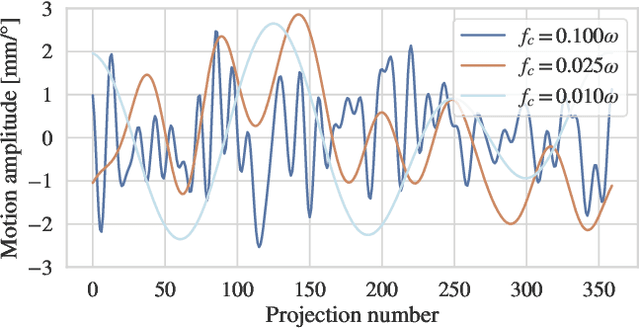

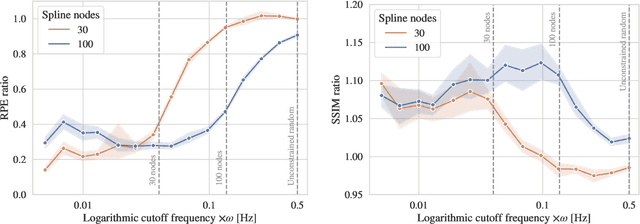

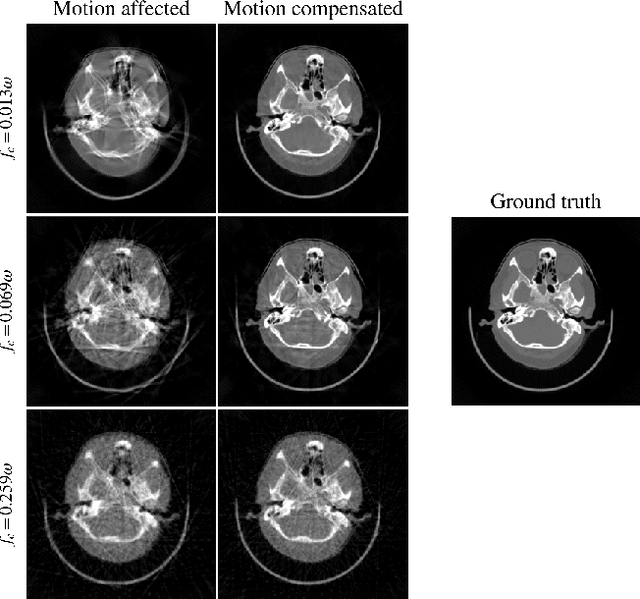

On the Influence of Smoothness Constraints in Computed Tomography Motion Compensation

May 29, 2024

Abstract: Computed tomography (CT) relies on precise patient immobilization during image acquisition. Nevertheless, motion artifacts in the reconstructed images can persist. Motion compensation methods aim to correct such artifacts post-acquisition, often incorporating temporal smoothness constraints on the estimated motion patterns. This study analyzes the influence of a spline-based motion model within an existing rigid motion compensation algorithm for cone-beam CT on the recoverable motion frequencies. Results demonstrate that the choice of motion model crucially influences recoverable frequencies. The optimization-based motion compensation algorithm is able to accurately fit the spline nodes for frequencies almost up to the node-dependent theoretical limit according to the Nyquist-Shannon theorem. Notably, a higher node count does not compromise reconstruction performance for slow motion patterns, but can extend the range of recoverable high frequencies for the investigated algorithm. Ultimately, the optimal motion model is dependent on the imaged anatomy, clinical use case, and scanning protocol and should be tailored carefully to the expected motion frequency spectrum to ensure accurate motion compensation.
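The node-dependent Nyquist-Shannon limit mentioned in this abstract can be illustrated with a small sketch. It assumes spline nodes spaced uniformly over the scan, endpoints included, so that the node spacing is T/(N-1); the paper's actual node placement may differ. The function name, the node count, and the scan duration are hypothetical and chosen only for illustration.

```python
import numpy as np

def nyquist_limit_hz(num_nodes, scan_duration_s):
    """Highest frequency representable by uniformly spaced nodes.

    Assumption: nodes cover the scan including both endpoints, so the
    node sampling rate is (num_nodes - 1) / scan_duration_s.
    """
    sampling_rate_hz = (num_nodes - 1) / scan_duration_s
    return 0.5 * sampling_rate_hz

# Hypothetical setup: 11 nodes over a 10 s scan, i.e. one node per second.
num_nodes, scan_duration_s = 11, 10.0
node_times = np.linspace(0.0, scan_duration_s, num_nodes)
limit_hz = nyquist_limit_hz(num_nodes, scan_duration_s)

# A 0.8 Hz motion lies above the limit. At the node positions it takes
# the same values as its alias at 0.8 Hz - 1.0 Hz = -0.2 Hz, so the
# nodes alone cannot tell the two motions apart.
fast_motion = np.sin(2.0 * np.pi * 0.8 * node_times)
alias_motion = np.sin(2.0 * np.pi * (0.8 - 1.0) * node_times)
aliased = np.allclose(fast_motion, alias_motion)
```

Under these assumptions, doubling the number of node intervals doubles the limit, which matches the abstract's observation that a higher node count extends the range of recoverable frequencies.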

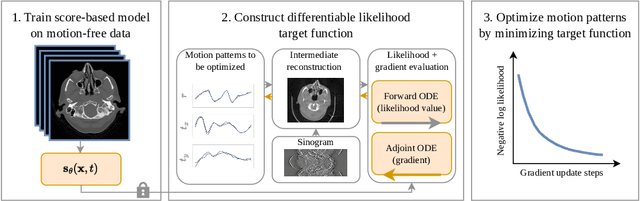

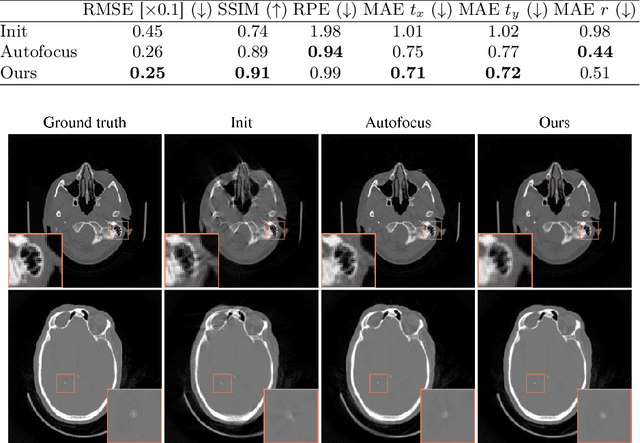

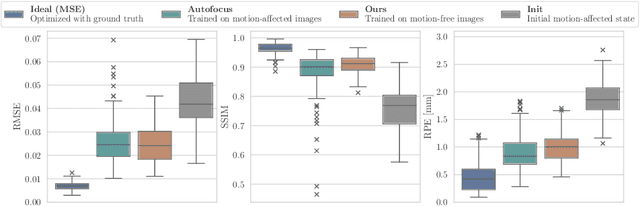

Differentiable Score-Based Likelihoods: Learning CT Motion Compensation From Clean Images

Apr 23, 2024

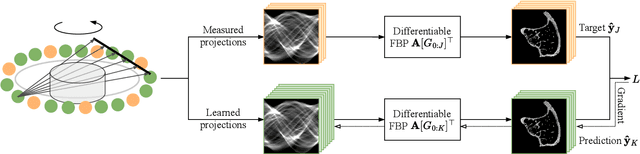

Abstract: Motion artifacts can compromise the diagnostic value of computed tomography (CT) images. Motion correction approaches require a per-scan estimation of patient-specific motion patterns. In this work, we train a score-based model to act as a probability density estimator for clean head CT images. Given the trained model, we quantify the deviation of a given motion-affected CT image from the ideal distribution through likelihood computation. We demonstrate that the likelihood can be utilized as a surrogate metric for motion artifact severity in the CT image, facilitating the application of an iterative, gradient-based motion compensation algorithm. By optimizing the underlying motion parameters to maximize likelihood, our method effectively reduces motion artifacts, bringing the image closer to the distribution of motion-free scans. Our approach achieves comparable performance to state-of-the-art methods while eliminating the need for a representative data set of motion-affected samples. This is particularly advantageous in real-world applications, where patient motion patterns may exhibit unforeseen variability, ensuring robustness without implicit assumptions about recoverable motion types.

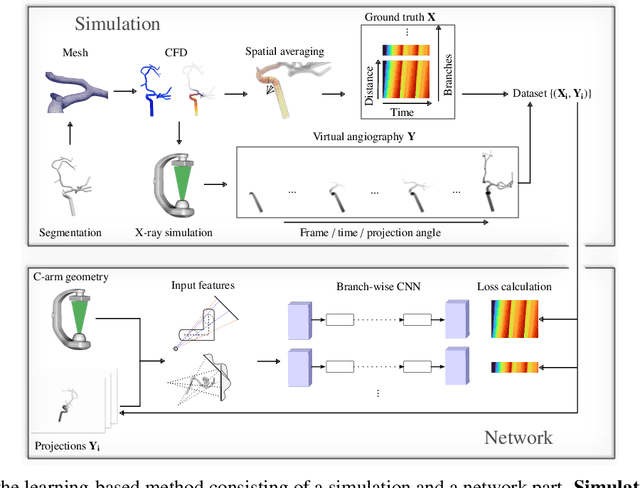

Physics-Informed Learning for Time-Resolved Angiographic Contrast Agent Concentration Reconstruction

Mar 04, 2024

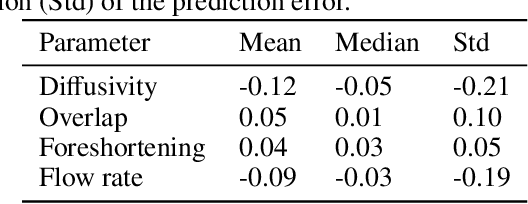

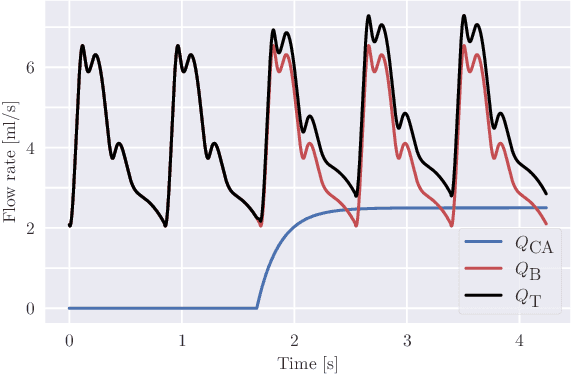

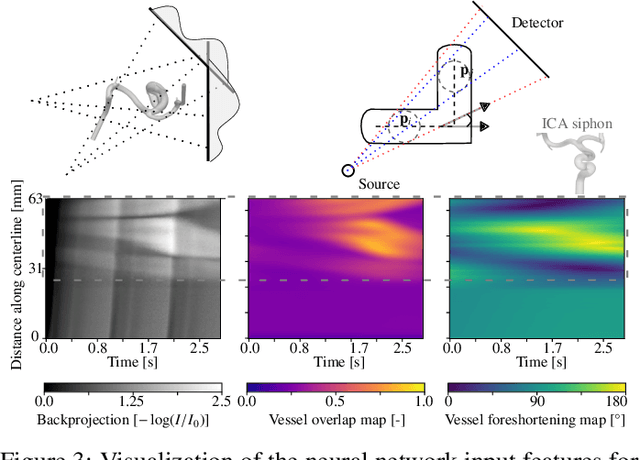

Abstract: Three-dimensional Digital Subtraction Angiography (3D-DSA) is a well-established X-ray-based technique for visualizing vascular anatomy. Recently, four-dimensional DSA (4D-DSA) reconstruction algorithms have been developed to enable the visualization of volumetric contrast flow dynamics through time-series of volumes. This reconstruction problem is ill-posed mainly due to vessel overlap in the projection direction and geometric vessel foreshortening, which leads to information loss in the recorded projection images. However, knowledge about the underlying fluid dynamics can be leveraged to constrain the solution space. In our work, we implicitly include this information in a neural network-based model that is trained on a dataset of image-based blood flow simulations. The model predicts the spatially averaged contrast agent concentration for each centerline point of the vasculature over time, lowering the overall computational demand. The trained network enables the reconstruction of relative contrast agent concentrations with a mean absolute error of 0.02 $\pm$ 0.02 and a mean absolute percentage error of 5.31 % $\pm$ 9.25 %. Moreover, the network is robust to varying degrees of vessel overlap and vessel foreshortening. Our approach demonstrates the potential of the integration of machine learning and blood flow simulations in time-resolved angiographic flow reconstruction.
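The two error measures reported in this abstract follow standard definitions, sketched below with NumPy. The function names and the sample concentration values are hypothetical; the abstract does not state how the authors aggregate over centerline points and time steps, so this sketch simply averages over all entries.

```python
import numpy as np

def mean_absolute_error(predicted, reference):
    """Average absolute difference between prediction and reference."""
    predicted = np.asarray(predicted, dtype=float)
    reference = np.asarray(reference, dtype=float)
    return float(np.mean(np.abs(predicted - reference)))

def mean_absolute_percentage_error(predicted, reference):
    """Average absolute difference relative to the reference, in percent.

    Undefined where the reference is zero; callers are assumed to
    exclude such entries beforehand.
    """
    predicted = np.asarray(predicted, dtype=float)
    reference = np.asarray(reference, dtype=float)
    return float(100.0 * np.mean(np.abs(predicted - reference) / np.abs(reference)))

# Hypothetical relative concentrations at two centerline points.
predicted = [0.5, 1.0]
reference = [0.4, 1.25]
mae_value = mean_absolute_error(predicted, reference)
mape_value = mean_absolute_percentage_error(predicted, reference)
```

Because the percentage error divides by the reference value, small reference concentrations can inflate it, which is one plausible reason for a spread as large as the one quoted above.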

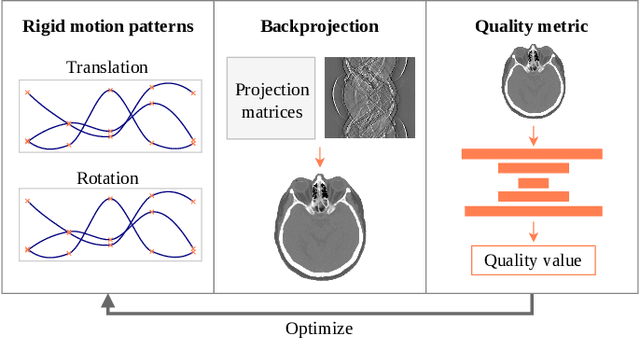

A gradient-based approach to fast and accurate head motion compensation in cone-beam CT

Jan 17, 2024

Abstract: Cone-beam computed tomography (CBCT) systems, with their portability, present a promising avenue for direct point-of-care medical imaging, particularly in critical scenarios such as acute stroke assessment. However, the integration of CBCT into clinical workflows faces challenges, primarily linked to long scan duration resulting in patient motion during scanning and leading to image quality degradation in the reconstructed volumes. This paper introduces a novel approach to CBCT motion estimation using a gradient-based optimization algorithm, which leverages generalized derivatives of the backprojection operator for cone-beam CT geometries. Building on that, a fully differentiable target function is formulated which grades the quality of the current motion estimate in reconstruction space. We drastically accelerate motion estimation, yielding a 19-fold speed-up compared to existing methods. Additionally, we investigate the architecture of networks used for quality metric regression and propose predicting voxel-wise quality maps, favoring autoencoder-like architectures over contracting ones. This modification improves gradient flow, leading to more accurate motion estimation. The presented method is evaluated through realistic experiments on head anatomy. It achieves a reduction in reprojection error from an initial average of 3 mm to 0.61 mm after motion compensation and consistently demonstrates superior performance compared to existing approaches. The analytic Jacobian for the backprojection operation, which is at the core of the proposed method, is made publicly available. In summary, this paper contributes to the advancement of CBCT integration into clinical workflows by proposing a robust motion estimation approach that enhances efficiency and accuracy, addressing critical challenges in time-sensitive scenarios.

Geometric Constraints Enable Self-Supervised Sinogram Inpainting in Sparse-View Tomography

Feb 13, 2023

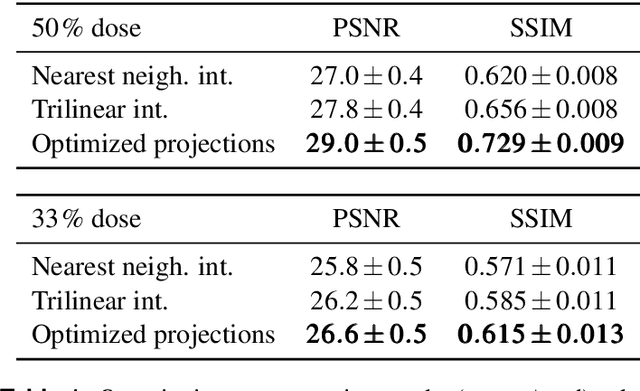

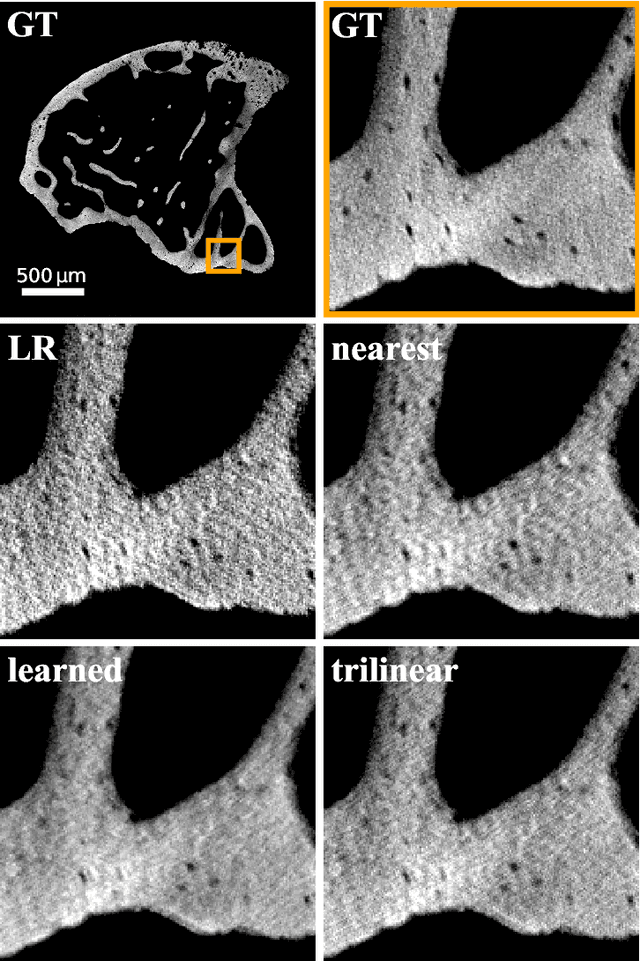

Abstract: The diagnostic quality of computed tomography (CT) scans is usually restricted by the induced patient dose, scan speed, and image quality. Sparse-angle tomographic scans reduce radiation exposure and accelerate data acquisition, but suffer from image artifacts and noise. Existing image processing algorithms can restore CT reconstruction quality but often require large training data sets or cannot be used for truncated objects. This work presents a self-supervised projection inpainting method that allows learning missing projective views via gradient-based optimization. By reconstructing independent stacks of projection data, a self-supervised loss is calculated in the CT image domain and used to directly optimize projection image intensities to match the missing tomographic views constrained by the projection geometry. Our experiments on real X-ray microscope (XRM) tomographic mouse tibia bone scans show that our method improves reconstructions by 3.1-7.4%/7.7-17.6% in terms of PSNR/SSIM with respect to the interpolation baseline. Our approach is applicable as a flexible self-supervised projection inpainting tool for tomographic applications.
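As a reading aid for the PSNR figures above, the sketch below computes PSNR from its standard definition and expresses one score as a percentage gain over another. Interpreting the quoted percentages as relative gains over the baseline's metric value is an assumption, as are the function names and sample values; SSIM is omitted because it needs a windowed implementation.

```python
import numpy as np

def psnr(reference, estimate, data_range):
    """Peak signal-to-noise ratio in dB: 10 * log10(range^2 / MSE)."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    mse = np.mean((reference - estimate) ** 2)
    return float(10.0 * np.log10(data_range ** 2 / mse))

def relative_gain_percent(metric_method, metric_baseline):
    """Gain of a method's score over a baseline score, in percent."""
    return 100.0 * (metric_method - metric_baseline) / metric_baseline

# Hypothetical example: a constant error of 0.1 on a [0, 1] intensity scale.
reference = np.zeros(4)
estimate = np.full(4, 0.1)
psnr_db = psnr(reference, estimate, data_range=1.0)

# Hypothetical scores: 22 dB for a method versus 20 dB for a baseline.
gain = relative_gain_percent(22.0, 20.0)
```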

Optimizing CT Scan Geometries With and Without Gradients

Feb 13, 2023

Abstract: In computed tomography (CT), the projection geometry used for data acquisition needs to be known precisely to obtain a clear reconstructed image. Rigid patient motion is a cause of misalignment between measured data and employed geometry. Commonly, such motion is compensated by solving an optimization problem that, e.g., maximizes the quality of the reconstructed image with respect to the projection geometry. So far, gradient-free optimization algorithms have been utilized to find the solution for this problem. Here, we show that gradient-based optimization algorithms are a possible alternative and compare the performance to their gradient-free counterparts on a benchmark motion compensation problem. Gradient-based algorithms converge substantially faster while being comparable to gradient-free algorithms in terms of capture range and robustness to the number of free parameters. Hence, gradient-based optimization is a viable alternative for the given type of problems.
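The distinction between the two optimizer families can be sketched on a toy problem. The quadratic cost below is a hypothetical stand-in for an image-quality objective over geometry parameters, not the benchmark from the paper, and the two routines (plain gradient descent and a coordinate pattern search) are generic textbook examples rather than the algorithms the authors compared.

```python
import numpy as np

# Hypothetical "true" parameters that minimize the toy cost.
TARGET = np.array([1.5, -0.5, 2.0])

def cost(x):
    """Toy cost: squared distance to the target parameters."""
    return float(np.sum((x - TARGET) ** 2))

def cost_gradient(x):
    """Analytic gradient of the toy cost."""
    return 2.0 * (x - TARGET)

def gradient_descent(x0, step=0.25, iterations=50):
    """Gradient-based: follow the negative gradient with a fixed step."""
    x = np.array(x0, dtype=float)
    for _ in range(iterations):
        x = x - step * cost_gradient(x)
    return x

def pattern_search(x0, step=1.0, tol=1e-4):
    """Gradient-free: probe each coordinate in both directions and
    halve the step whenever no probe lowers the cost."""
    x = np.array(x0, dtype=float)
    best = cost(x)
    while step > tol:
        improved = False
        for i in range(x.size):
            for delta in (step, -step):
                trial = x.copy()
                trial[i] += delta
                value = cost(trial)
                if value < best:
                    x, best, improved = trial, value, True
        if not improved:
            step *= 0.5
    return x

start = np.zeros(3)
x_gradient = gradient_descent(start)
x_pattern = pattern_search(start)
```

The pattern search needs only cost evaluations, whereas gradient descent needs a derivative of the cost with respect to the parameters, which in the CT setting means differentiating through the reconstruction. The toy problem says nothing about relative speed on real data; that comparison is the subject of the paper.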

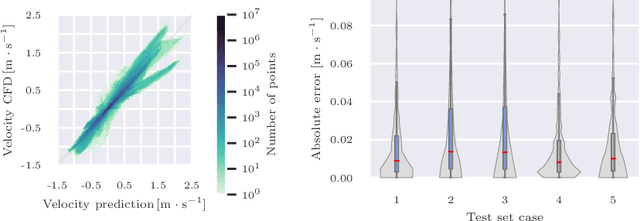

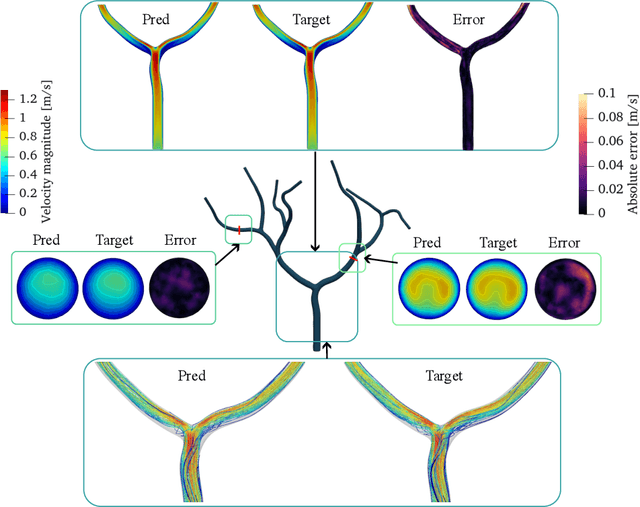

Transient Hemodynamics Prediction Using an Efficient Octree-Based Deep Learning Model

Feb 13, 2023

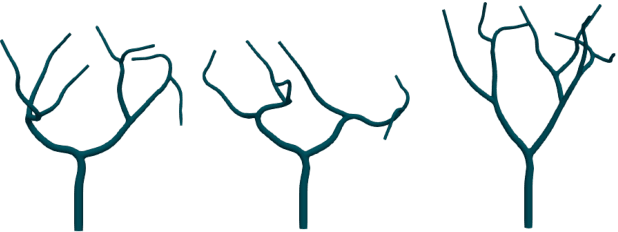

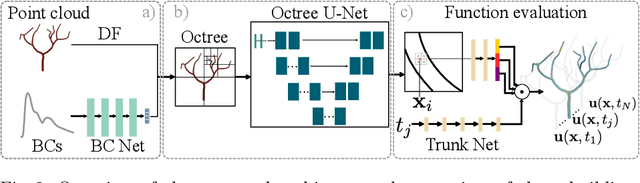

Abstract: Patient-specific hemodynamics assessment could support diagnosis and treatment of neurovascular diseases. Currently, conventional medical imaging modalities are not able to accurately acquire high-resolution hemodynamic information that would be required to assess complex neurovascular pathologies. Therefore, computational fluid dynamics (CFD) simulations can be applied to tomographic reconstructions to obtain clinically relevant information. However, three-dimensional (3D) CFD simulations require enormous computational resources and simulation-related expert knowledge that are usually not available in clinical environments. Recently, deep-learning-based methods have been proposed as CFD surrogates to improve computational efficiency. Nevertheless, the prediction of high-resolution transient CFD simulations for complex vascular geometries poses a challenge to conventional deep learning models. In this work, we present an architecture that is tailored to predict high-resolution (spatial and temporal) velocity fields for complex synthetic vascular geometries. For this, an octree-based spatial discretization is combined with an implicit neural function representation to efficiently handle the prediction of the 3D velocity field for each time step. The presented method is evaluated for the task of cerebral hemodynamics prediction before and during the injection of contrast agent in the internal carotid artery (ICA). Compared to CFD simulations, the velocity field can be estimated with a mean absolute error of 0.024 m/s, whereas the run time reduces from several hours on a high-performance cluster to a few seconds on a consumer graphics processing unit.

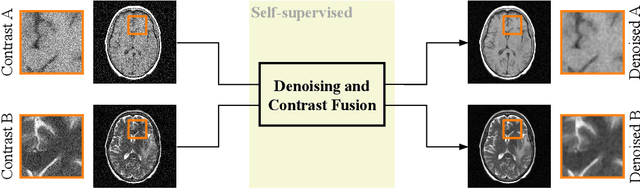

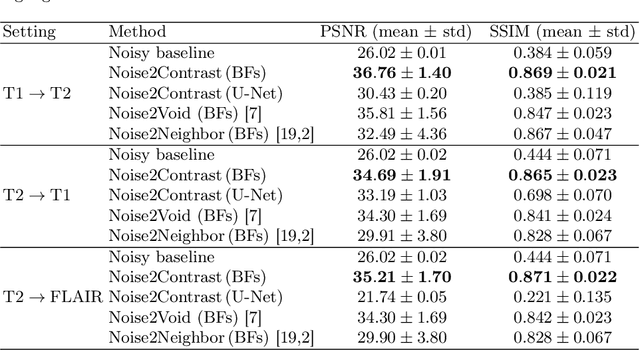

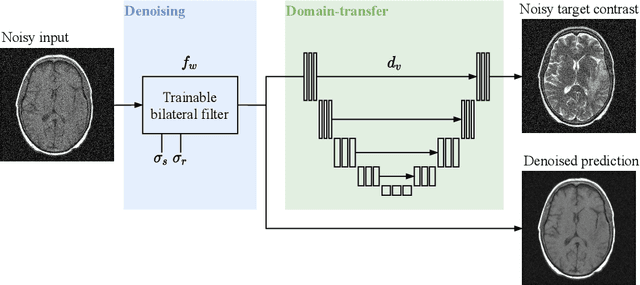

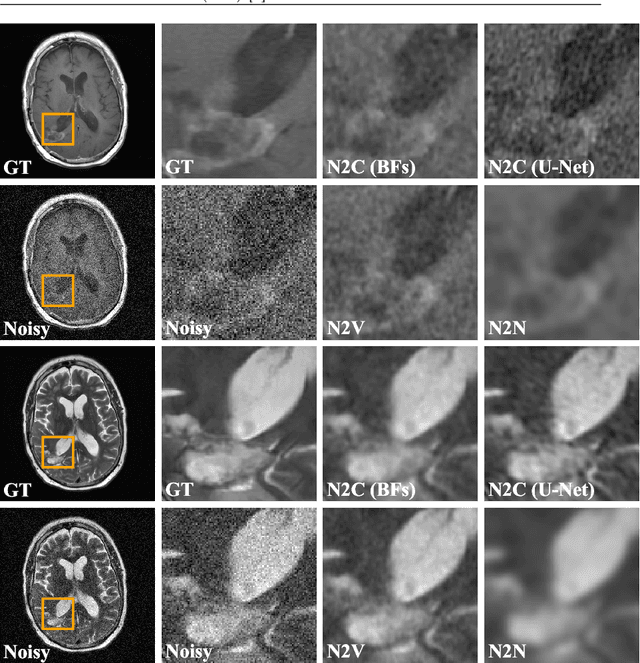

Noise2Contrast: Multi-Contrast Fusion Enables Self-Supervised Tomographic Image Denoising

Dec 09, 2022

Abstract: Self-supervised image denoising techniques have emerged as convenient methods that allow training denoising models without requiring ground-truth noise-free data. Existing methods usually optimize loss metrics that are calculated from multiple noisy realizations of similar images, e.g., from neighboring tomographic slices. However, those approaches fail to utilize the multiple contrasts that are routinely acquired in medical imaging modalities like MRI or dual-energy CT. In this work, we propose the new self-supervised training scheme Noise2Contrast that combines information from multiple measured image contrasts to train a denoising model. We stack denoising with domain-transfer operators to utilize the independent noise realizations of different image contrasts to derive a self-supervised loss. The trained denoising operator achieves convincing quantitative and qualitative results, outperforming state-of-the-art self-supervised methods by 4.7-11.0%/4.8-7.3% (PSNR/SSIM) on brain MRI data and by 43.6-50.5%/57.1-77.1% (PSNR/SSIM) on dual-energy CT X-ray microscopy data with respect to the noisy baseline. Our experiments on different real measured data sets indicate that Noise2Contrast training generalizes to other multi-contrast imaging modalities.
