Nina Weng

Weight Space Correlation Analysis: Quantifying Feature Utilization in Deep Learning Models

Dec 15, 2025

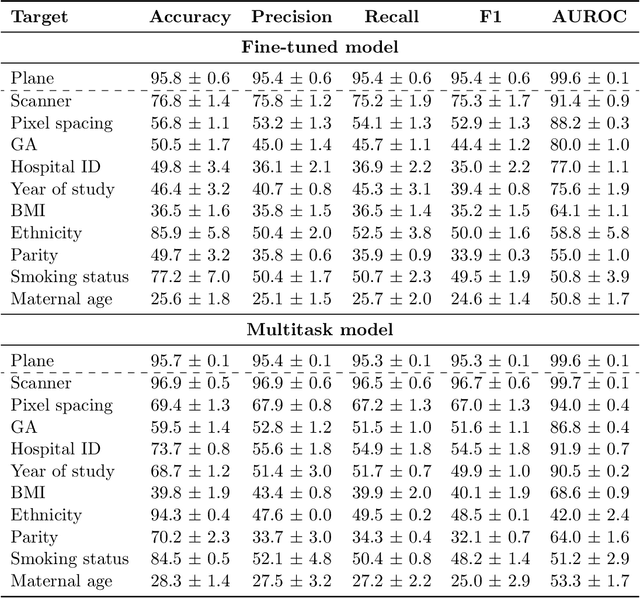

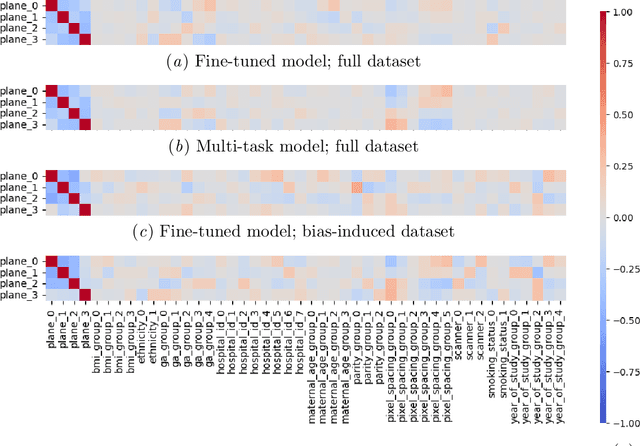

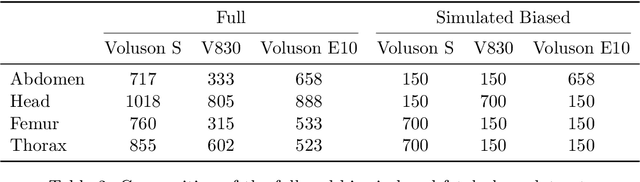

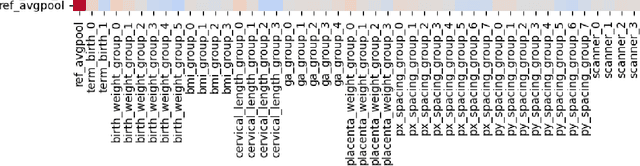

Abstract:Deep learning models in medical imaging are susceptible to shortcut learning, relying on confounding metadata (e.g., scanner model) that is often encoded in image embeddings. The crucial question is whether the model actively utilizes this encoded information for its final prediction. We introduce Weight Space Correlation Analysis, an interpretable methodology that quantifies feature utilization by measuring the alignment between the classification heads of a primary clinical task and auxiliary metadata tasks. We first validate our method by successfully detecting artificially induced shortcut learning. We then apply it to probe the feature utilization of an SA-SonoNet model trained for Spontaneous Preterm Birth (sPTB) prediction. Our analysis confirmed that while the embeddings contain substantial metadata, the sPTB classifier's weight vectors were highly correlated with clinically relevant factors (e.g., birth weight) but decoupled from clinically irrelevant acquisition factors (e.g. scanner). Our methodology provides a tool to verify model trustworthiness, demonstrating that, in the absence of induced bias, the clinical model selectively utilizes features related to the genuine clinical signal.
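
As a rough illustration of the idea in this abstract, the alignment between two classification heads can be measured directly on their weight vectors. The sketch below is not the paper's implementation: it assumes linear heads that share one embedding space, and it assumes absolute cosine similarity as the alignment measure, which the abstract does not specify. The function name `head_alignment` and the toy vectors are hypothetical.

```python
import numpy as np


def head_alignment(w_primary, w_aux):
    """Absolute cosine similarity between rows of two weight matrices.

    Assumption: each row is the weight vector of one output unit of a
    linear head, and both heads read the same embedding. Rows must be
    non-zero, otherwise the normalization divides by zero.
    """
    w_primary = np.atleast_2d(np.asarray(w_primary, dtype=float))
    w_aux = np.atleast_2d(np.asarray(w_aux, dtype=float))
    # Normalize every row to unit length.
    p = w_primary / np.linalg.norm(w_primary, axis=1, keepdims=True)
    a = w_aux / np.linalg.norm(w_aux, axis=1, keepdims=True)
    # Entry (i, j) compares primary row i with auxiliary row j.
    return np.abs(p @ a.T)


# Toy example: one clinical head compared with two metadata heads.
w_task = [[1.0, 0.0, 0.0]]
w_meta = [[1.0, 0.0, 0.0],   # same direction as the task head
          [0.0, 1.0, 0.0]]   # orthogonal to the task head
alignment = head_alignment(w_task, w_meta)  # shape (1, 2)
```

Under these assumptions, a value near 1 suggests that the primary head reads the same embedding direction as the metadata head, while a value near 0 suggests that the two are decoupled.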

Patronus: Bringing Transparency to Diffusion Models with Prototypes

Mar 28, 2025

Abstract:Diffusion-based generative models, such as Denoising Diffusion Probabilistic Models (DDPMs), have achieved remarkable success in image generation, but their step-by-step denoising process remains opaque, leaving critical aspects of the generation mechanism unexplained. To address this, we introduce Patronus, an interpretable diffusion model inspired by ProtoPNet. Patronus integrates a prototypical network into DDPMs, enabling the extraction of prototypes and conditioning of the generation process on their prototype activation vector. This design enhances interpretability by showing the learned prototypes and how they influence the generation process. Additionally, the model supports downstream tasks like image manipulation, enabling more transparent and controlled modifications. Moreover, Patronus could reveal shortcut learning in the generation process by detecting unwanted correlations between learned prototypes. Notably, Patronus operates entirely without any annotations or text prompts. This work opens new avenues for understanding and controlling diffusion models through prototype-based interpretability. Our code is available at https://github.com/nina-weng/patronus.

In the Picture: Medical Imaging Datasets, Artifacts, and their Living Review

Jan 18, 2025

Abstract:Datasets play a critical role in medical imaging research, yet issues such as label quality, shortcuts, and metadata are often overlooked. This lack of attention may harm the generalizability of algorithms and, consequently, negatively impact patient outcomes. While existing medical imaging literature reviews mostly focus on machine learning (ML) methods, with only a few focusing on datasets for specific applications, these reviews remain static -- they are published once and not updated thereafter. This fails to account for emerging evidence, such as biases, shortcuts, and additional annotations that other researchers may contribute after the dataset is published. We refer to these newly discovered findings of datasets as research artifacts. To address this gap, we propose a living review that continuously tracks public datasets and their associated research artifacts across multiple medical imaging applications. Our approach includes a framework for the living review to monitor data documentation artifacts, and an SQL database to visualize the citation relationships between research artifact and dataset. Lastly, we discuss key considerations for creating medical imaging datasets, review best practices for data annotation, discuss the significance of shortcuts and demographic diversity, and emphasize the importance of managing datasets throughout their entire lifecycle. Our demo is publicly available at http://130.226.140.142.

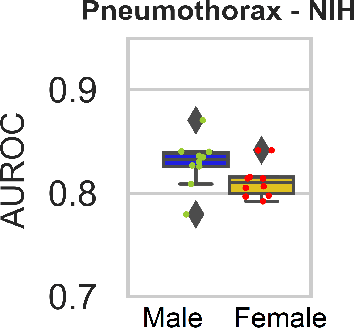

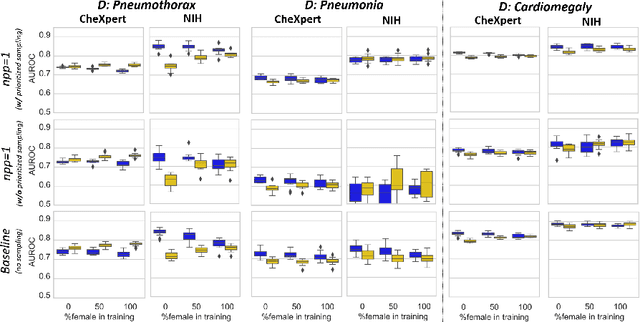

Slicing Through Bias: Explaining Performance Gaps in Medical Image Analysis using Slice Discovery Methods

Jun 17, 2024

Abstract:Machine learning models have achieved high overall accuracy in medical image analysis. However, performance disparities on specific patient groups pose challenges to their clinical utility, safety, and fairness. This can affect known patient groups - such as those based on sex, age, or disease subtype - as well as previously unknown and unlabeled groups. Furthermore, the root cause of such observed performance disparities is often challenging to uncover, hindering mitigation efforts. In this paper, to address these issues, we leverage Slice Discovery Methods (SDMs) to identify interpretable underperforming subsets of data and formulate hypotheses regarding the cause of observed performance disparities. We introduce a novel SDM and apply it in a case study on the classification of pneumothorax and atelectasis from chest x-rays. Our study demonstrates the effectiveness of SDMs in hypothesis formulation and yields an explanation of previously observed but unexplained performance disparities between male and female patients in widely used chest X-ray datasets and models. Our findings indicate shortcut learning in both classification tasks, through the presence of chest drains and ECG wires, respectively. Sex-based differences in the prevalence of these shortcut features appear to cause the observed classification performance gap, representing a previously underappreciated interaction between shortcut learning and model fairness analyses.

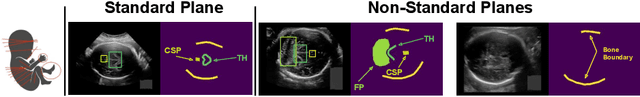

Diffusion-based Iterative Counterfactual Explanations for Fetal Ultrasound Image Quality Assessment

Mar 13, 2024

Abstract:Obstetric ultrasound image quality is crucial for accurate diagnosis and monitoring of fetal health. However, producing high-quality standard planes is difficult, influenced by the sonographer's expertise and factors like the maternal BMI or the fetus dynamics. In this work, we propose using diffusion-based counterfactual explainable AI to generate realistic high-quality standard planes from low-quality non-standard ones. Through quantitative and qualitative evaluation, we demonstrate the effectiveness of our method in producing plausible counterfactuals of increased quality. This shows future promise both for enhancing training of clinicians by providing visual feedback, as well as for improving image quality and, consequently, downstream diagnosis and monitoring.

Non-discrimination Criteria for Generative Language Models

Mar 13, 2024

Abstract:Within recent years, generative AI, such as large language models, has undergone rapid development. As these models become increasingly available to the public, concerns arise about perpetuating and amplifying harmful biases in applications. Gender stereotypes can be harmful and limiting for the individuals they target, whether they consist of misrepresentation or discrimination. Recognizing gender bias as a pervasive societal construct, this paper studies how to uncover and quantify the presence of gender biases in generative language models. In particular, we derive generative AI analogues of three well-known non-discrimination criteria from classification, namely independence, separation and sufficiency. To demonstrate these criteria in action, we design prompts for each of the criteria with a focus on occupational gender stereotype, specifically utilizing the medical test to introduce the ground truth in the generative AI context. Our results address the presence of occupational gender bias within such conversational language models.
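
For reference, the three criteria named in this abstract are usually stated for classification as (conditional) independence statements. The block below gives those standard classification forms only, not the generative analogues derived in the paper; the symbols are assumptions introduced here, with $\hat{Y}$ the model prediction, $Y$ the ground-truth label, and $A$ the sensitive attribute.

```latex
\begin{align*}
\text{Independence:} \quad & \hat{Y} \perp A \\
\text{Separation:}   \quad & \hat{Y} \perp A \mid Y \\
\text{Sufficiency:}  \quad & Y \perp A \mid \hat{Y}
\end{align*}
```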

Shortcut Learning in Medical Image Segmentation

Mar 11, 2024

Abstract:Shortcut learning is a phenomenon where machine learning models prioritize learning simple, potentially misleading cues from data that do not generalize well beyond the training set. While existing research primarily investigates this in the realm of image classification, this study extends the exploration of shortcut learning into medical image segmentation. We demonstrate that clinical annotations such as calipers, and the combination of zero-padded convolutions and center-cropped training sets in the dataset can inadvertently serve as shortcuts, impacting segmentation accuracy. We identify and evaluate the shortcut learning on two different but common medical image segmentation tasks. In addition, we suggest strategies to mitigate the influence of shortcut learning and improve the generalizability of the segmentation models. By uncovering the presence and implications of shortcuts in medical image segmentation, we provide insights and methodologies for evaluating and overcoming this pervasive challenge and call for attention in the community for shortcuts in segmentation.

Fast Diffusion-Based Counterfactuals for Shortcut Removal and Generation

Dec 21, 2023

Abstract:Shortcut learning is when a model -- e.g. a cardiac disease classifier -- exploits correlations between the target label and a spurious shortcut feature, e.g. a pacemaker, to predict the target label based on the shortcut rather than real discriminative features. This is common in medical imaging, where treatment and clinical annotations correlate with disease labels, making them easy shortcuts to predict disease. We propose a novel detection and quantification of the impact of potential shortcut features via a fast diffusion-based counterfactual image generation that can synthetically remove or add shortcuts. Via a novel inpainting-based modification we spatially limit the changes made with no extra inference step, encouraging the removal of spatially constrained shortcut features while ensuring that the shortcut-free counterfactuals preserve their remaining image features to a high degree. Using these, we assess how shortcut features influence model predictions. This is enabled by our second contribution: An efficient diffusion-based counterfactual explanation method with significant inference speed-up at comparable image quality as state-of-the-art. We confirm this on two large chest X-ray datasets, a skin lesion dataset, and CelebA.

An Interpretable and Attention-based Method for Gaze Estimation Using Electroencephalography

Aug 09, 2023

Abstract:Eye movements can reveal valuable insights into various aspects of human mental processes, physical well-being, and actions. Recently, several datasets have been made available that simultaneously record EEG activity and eye movements. This has triggered the development of various methods to predict gaze direction based on brain activity. However, most of these methods lack interpretability, which limits their technology acceptance. In this paper, we leverage a large data set of simultaneously measured Electroencephalography (EEG) and Eye tracking, proposing an interpretable model for gaze estimation from EEG data. More specifically, we present a novel attention-based deep learning framework for EEG signal analysis, which allows the network to focus on the most relevant information in the signal and discard problematic channels. Additionally, we provide a comprehensive evaluation of the presented framework, demonstrating its superiority over current methods in terms of accuracy and robustness. Finally, the study presents visualizations that explain the results of the analysis and highlights the potential of attention mechanism for improving the efficiency and effectiveness of EEG data analysis in a variety of applications.

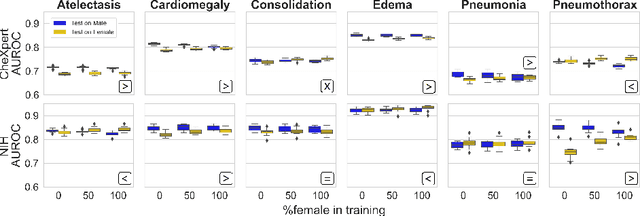

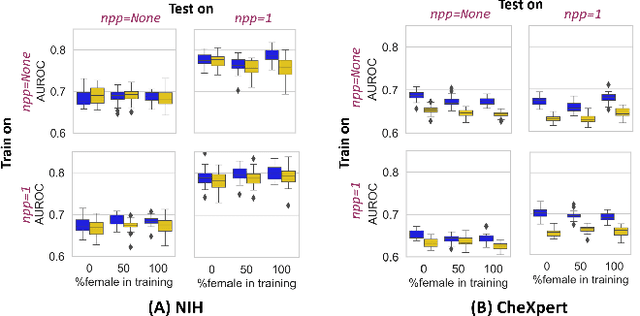

Are Sex-based Physiological Differences the Cause of Gender Bias for Chest X-ray Diagnosis?

Aug 09, 2023

Abstract:While many studies have assessed the fairness of AI algorithms in the medical field, the causes of differences in prediction performance are often unknown. This lack of knowledge about the causes of bias hampers the efficacy of bias mitigation, as evidenced by the fact that simple dataset balancing still often performs best in reducing performance gaps but is unable to resolve all performance differences. In this work, we investigate the causes of gender bias in machine learning-based chest X-ray diagnosis. In particular, we explore the hypothesis that breast tissue leads to underexposure of the lungs and causes lower model performance. Methodologically, we propose a new sampling method which addresses the highly skewed distribution of recordings per patient in two widely used public datasets, while at the same time reducing the impact of label errors. Our comprehensive analysis of gender differences across diseases, datasets, and gender representations in the training set shows that dataset imbalance is not the sole cause of performance differences. Moreover, relative group performance differs strongly between datasets, indicating important dataset-specific factors influencing male/female group performance. Finally, we investigate the effect of breast tissue more specifically, by cropping out the breasts from recordings, finding that this does not resolve the observed performance gaps. In conclusion, our results indicate that dataset-specific factors, not fundamental physiological differences, are the main drivers of male--female performance gaps in chest X-ray analyses on widely used NIH and CheXpert Dataset.
