Annan Li

QianfanHuijin Technical Report: A Novel Multi-Stage Training Paradigm for Finance Industrial LLMs

Dec 30, 2025

Abstract:Domain-specific enhancement of Large Language Models (LLMs) within the financial context has long been a focal point of industrial application. While previous models such as BloombergGPT and Baichuan-Finance primarily focused on knowledge enhancement, the deepening complexity of financial services has driven a growing demand for models that possess not only domain knowledge but also robust financial reasoning and agentic capabilities. In this paper, we present QianfanHuijin, a financial domain LLM, and propose a generalizable multi-stage training paradigm for industrial model enhancement. Our approach begins with Continual Pre-training (CPT) on financial corpora to consolidate the knowledge base. This is followed by a fine-grained Post-training pipeline designed with increasing specificity: starting with Financial SFT, progressing to Finance Reasoning RL and Finance Agentic RL, and culminating in General RL aligned with real-world business scenarios. Empirical results demonstrate that QianfanHuijin achieves superior performance across various authoritative financial benchmarks. Furthermore, ablation studies confirm that the targeted Reasoning RL and Agentic RL stages yield significant gains in their respective capabilities. These findings validate our motivation and suggest that this fine-grained, progressive post-training methodology is poised to become a mainstream paradigm for various industrial-enhanced LLMs.

The FM Agent

Oct 30, 2025

Abstract:Large language models (LLMs) are catalyzing the development of autonomous AI research agents for scientific and engineering discovery. We present FM Agent, a novel and general-purpose multi-agent framework that leverages a synergistic combination of LLM-based reasoning and large-scale evolutionary search to address complex real-world challenges. The core of FM Agent integrates several key innovations: 1) a cold-start initialization phase incorporating expert guidance, 2) a novel evolutionary sampling strategy for iterative optimization, 3) domain-specific evaluators that combine correctness, effectiveness, and LLM-supervised feedback, and 4) a distributed, asynchronous execution infrastructure built on Ray. Demonstrating broad applicability, our system has been evaluated across diverse domains, including operations research, machine learning, GPU kernel optimization, and classical mathematical problems. FM Agent reaches state-of-the-art results autonomously, without human interpretation or tuning: 1976.3 on ALE-Bench (+5.2%), 43.56% on MLE-Bench (+4.0pp), and up to 20x speedups on KernelBench. It also establishes new state-of-the-art (SOTA) results on several classical mathematical problems. Beyond academic benchmarks, FM Agent shows considerable promise for both large-scale enterprise R&D workflows and fundamental scientific research, where it can accelerate innovation, automate complex discovery processes, and deliver substantial engineering and scientific advances with broader societal impact.

OctoNav: Towards Generalist Embodied Navigation

Jun 11, 2025

Abstract:Embodied navigation stands as a foundational pillar within the broader pursuit of embodied AI. However, previous navigation research is divided into different tasks/capabilities, e.g., ObjNav, ImgNav and VLN, which differ in task objectives and modalities, so datasets and methods are designed individually. In this work, we take steps toward generalist navigation agents, which can follow free-form instructions that include arbitrary compounds of multi-modal and multi-capability. To achieve this, we propose a large-scale benchmark and corresponding method, termed OctoNav-Bench and OctoNav-R1. Specifically, OctoNav-Bench features continuous environments and is constructed via a designed annotation pipeline. We thoroughly craft instruction-trajectory pairs, where instructions are diverse in free-form with arbitrary modality and capability. Also, we construct a Think-Before-Action (TBA-CoT) dataset within OctoNav-Bench to provide the thinking process behind actions. For OctoNav-R1, we build it upon MLLMs and adapt it to a VLA-type model, which can produce low-level actions solely based on 2D visual observations. Moreover, we design a Hybrid Training Paradigm (HTP) that consists of three stages, i.e., Action-/TBA-SFT, Nav-GRPO, and Online RL stages. Each stage contains specifically designed learning policies and rewards. Importantly, for TBA-SFT and Nav-GRPO designs, we are inspired by OpenAI-o1 and DeepSeek-R1, which show impressive reasoning ability via thinking-before-answer. Thus, we aim to investigate how to achieve thinking-before-action in the embodied navigation field, to improve the model's reasoning ability toward generalists. Specifically, we propose TBA-SFT to utilize the TBA-CoT dataset to fine-tune the model as a cold-start phase and then leverage Nav-GRPO to improve its thinking ability. Finally, OctoNav-R1 shows superior performance compared with previous methods.

CodeJudge-Eval: Can Large Language Models be Good Judges in Code Understanding?

Aug 20, 2024

Abstract:Recent advancements in large language models (LLMs) have showcased impressive code generation capabilities, primarily evaluated through language-to-code benchmarks. However, these benchmarks may not fully capture a model's code understanding abilities. We introduce CodeJudge-Eval (CJ-Eval), a novel benchmark designed to assess LLMs' code understanding abilities from the perspective of code judging rather than code generation. CJ-Eval challenges models to determine the correctness of provided code solutions, encompassing various error types and compilation issues. By leveraging a diverse set of problems and a fine-grained judging system, CJ-Eval addresses the limitations of traditional benchmarks, including the potential memorization of solutions. Evaluation of 12 well-known LLMs on CJ-Eval reveals that even state-of-the-art models struggle, highlighting the benchmark's ability to probe deeper into models' code understanding abilities. Our benchmark will be available at https://github.com/CodeLLM-Research/CodeJudge-Eval.

GLGait: A Global-Local Temporal Receptive Field Network for Gait Recognition in the Wild

Aug 13, 2024

Abstract:Gait recognition has attracted increasing attention from academia and industry as a technology for recognizing humans at a distance in a non-intrusive way, without requiring cooperation. Although advanced methods have achieved impressive success in lab scenarios, most of them perform poorly in the wild. Recently, some methods based on Convolutional Neural Networks (ConvNets) have been proposed to address the issue of gait recognition in the wild. However, the temporal receptive field obtained by convolution operations is limited for long gait sequences. If convolution blocks are directly replaced with visual transformer blocks, the model may not enhance the local temporal receptive field, which is important for covering a complete gait cycle. To address this issue, we design a Global-Local Temporal Receptive Field Network (GLGait). GLGait employs a Global-Local Temporal Module (GLTM) to establish a global-local temporal receptive field, which mainly consists of a Pseudo Global Temporal Self-Attention (PGTA) and a temporal convolution operation. Specifically, PGTA is used to obtain a pseudo global temporal receptive field with less memory and computational complexity compared with multi-head self-attention (MHSA). The temporal convolution operation is used to enhance the local temporal receptive field. Besides, it can also aggregate the pseudo global temporal receptive field into a true holistic temporal receptive field. Furthermore, we also propose a Center-Augmented Triplet Loss (CTL) in GLGait to reduce the intra-class distance and expand the positive samples in the training stage. Extensive experiments show that our method obtains state-of-the-art results on in-the-wild datasets, i.e., Gait3D and GREW. The code is available at https://github.com/bgdpgz/GLGait.

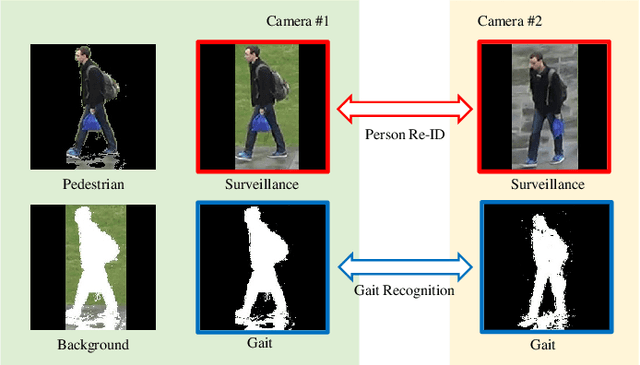

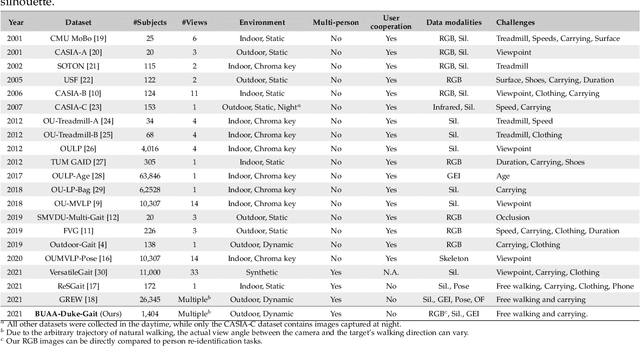

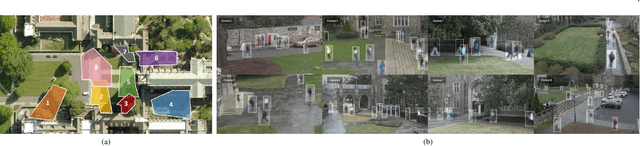

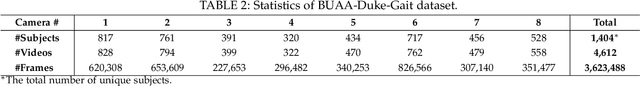

RealGait: Gait Recognition for Person Re-Identification

Jan 13, 2022

Abstract:Human gait is considered a unique biometric identifier which can be acquired in a covert manner at a distance. However, models trained on existing public domain gait datasets, which are captured in controlled scenarios, suffer a drastic performance decline when applied to real-world unconstrained gait data. On the other hand, video person re-identification techniques have achieved promising performance on large-scale publicly available datasets. Given the diversity of clothing characteristics, clothing cues are not reliable for person recognition in general. So, it is actually not clear why the state-of-the-art person re-identification methods work as well as they do. In this paper, we construct a new gait dataset by extracting silhouettes from an existing video person re-identification challenge which consists of 1,404 persons walking in an unconstrained manner. Based on this dataset, a consistent and comparative study between gait recognition and person re-identification can be carried out. Given that our experimental results show that current gait recognition approaches designed on data collected in controlled scenarios are inappropriate for real surveillance scenarios, we propose a novel gait recognition method, called RealGait. Our results suggest that recognizing people by their gait in real surveillance scenarios is feasible and the underlying gait pattern is probably the true reason why video person re-identification works in practice.

Will You Ever Become Popular? Learning to Predict Virality of Dance Clips

Nov 06, 2021

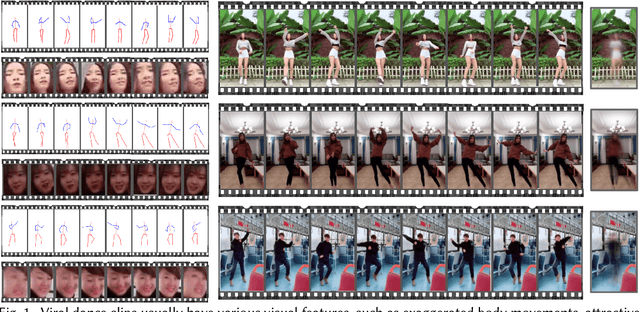

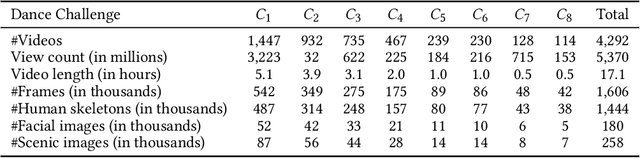

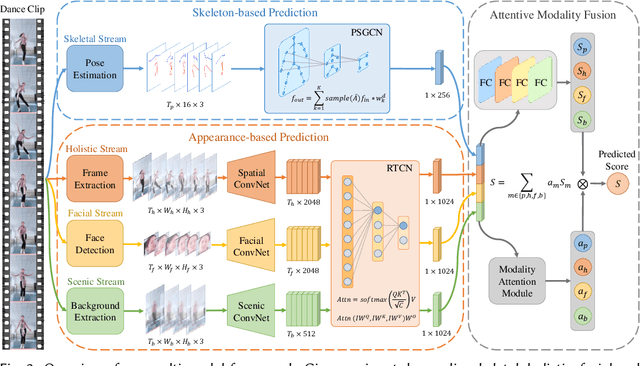

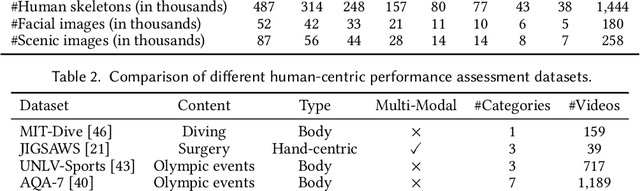

Abstract:Dance challenges are going viral in video communities like TikTok nowadays. Once a challenge becomes popular, thousands of short-form videos will be uploaded in merely a couple of days. Therefore, virality prediction from dance challenges is of great commercial value and has a wide range of applications, such as smart recommendation and popularity promotion. In this paper, a novel multi-modal framework which integrates skeletal, holistic appearance, facial and scenic cues is proposed for comprehensive dance virality prediction. To model body movements, we propose a pyramidal skeleton graph convolutional network (PSGCN) which hierarchically refines spatio-temporal skeleton graphs. Meanwhile, we introduce a relational temporal convolutional network (RTCN) to exploit appearance dynamics with non-local temporal relations. An attentive fusion approach is finally proposed to adaptively aggregate predictions from different modalities. To validate our method, we introduce a large-scale viral dance video (VDV) dataset, which contains over 4,000 dance clips of eight viral dance challenges. Extensive experiments on the VDV dataset demonstrate the efficacy of our model. Furthermore, we show that short video applications like multi-dimensional recommendation and action feedback can be derived from our model.

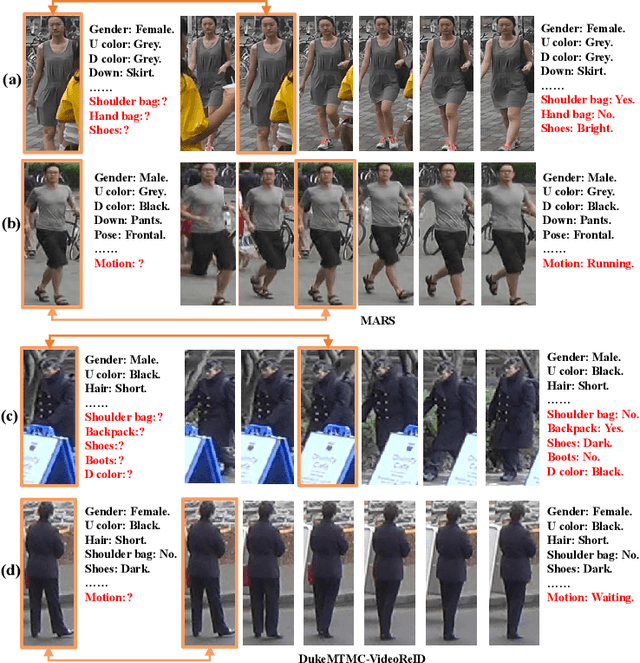

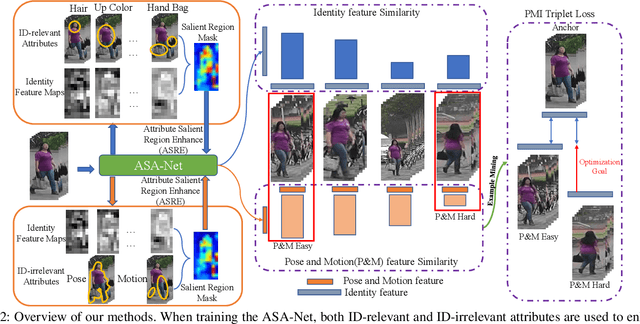

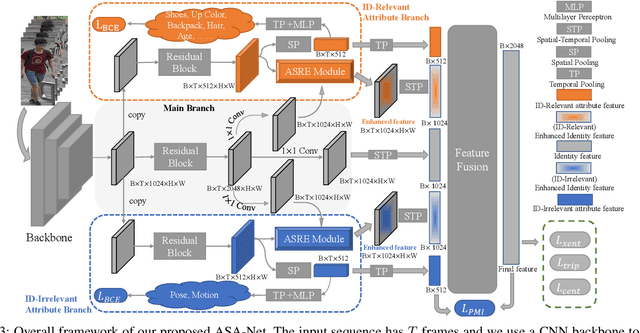

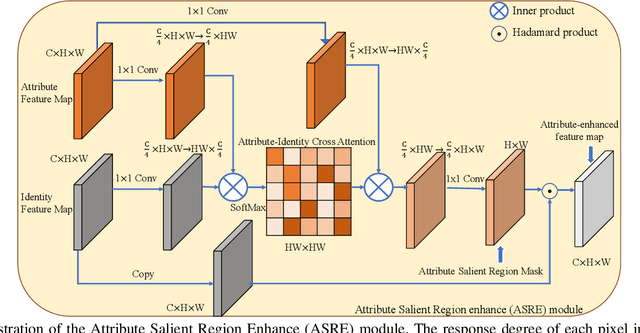

Video Person Re-identification using Attribute-enhanced Features

Aug 16, 2021

Abstract:Video-based person re-identification (Re-ID), which aims to associate people across non-overlapping cameras using surveillance video, is a challenging task. Pedestrian attributes, such as gender, age and clothing characteristics, contain rich and supplementary information but are less explored in video person Re-ID. In this work, we propose a novel network architecture named Attribute Salience Assisted Network (ASA-Net) for attribute-assisted video person Re-ID, which achieves considerable improvement over existing works by two methods. First, to learn a better separation of the target from the background, we propose to learn the visual attention from middle-level attributes instead of high-level identities. The proposed Attribute Salient Region Enhance (ASRE) module can attend more accurately to the body of the pedestrian. Second, we found that many identity-irrelevant but object- or subject-relevant factors, like the view angle and movement of the target pedestrian, can greatly influence the two-dimensional appearance of a pedestrian. This problem can be mitigated by investigating both identity-relevant and identity-irrelevant attributes via a novel triplet loss which is referred to as the Pose & Motion-Invariant (PMI) triplet loss.

Few-Shot Fine-Grained Action Recognition via Bidirectional Attention and Contrastive Meta-Learning

Aug 15, 2021

Abstract:Fine-grained action recognition is attracting increasing attention due to the emerging demand for specific action understanding in real-world applications, whereas the data of rare fine-grained categories is very limited. Therefore, we propose the few-shot fine-grained action recognition problem, aiming to recognize novel fine-grained actions with only a few samples given for each class. Although progress has been made in coarse-grained actions, existing few-shot recognition methods encounter two issues in handling fine-grained actions: the inability to capture subtle action details and the inadequacy in learning from data with low inter-class variance. To tackle the first issue, a human vision inspired bidirectional attention module (BAM) is proposed. Combining top-down task-driven signals with bottom-up salient stimuli, BAM captures subtle action details by accurately highlighting informative spatio-temporal regions. To address the second issue, we introduce contrastive meta-learning (CML). Compared with the widely adopted ProtoNet-based method, CML generates more discriminative video representations for low inter-class variance data, since it makes full use of potential contrastive pairs in each training episode. Furthermore, to fairly compare different models, we establish specific benchmark protocols on two large-scale fine-grained action recognition datasets. Extensive experiments show that our method consistently achieves state-of-the-art performance across evaluated tasks.

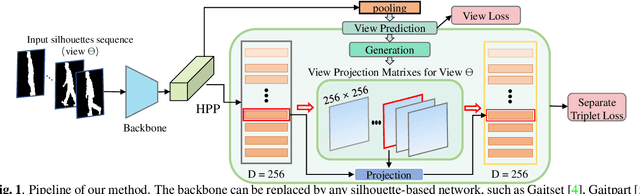

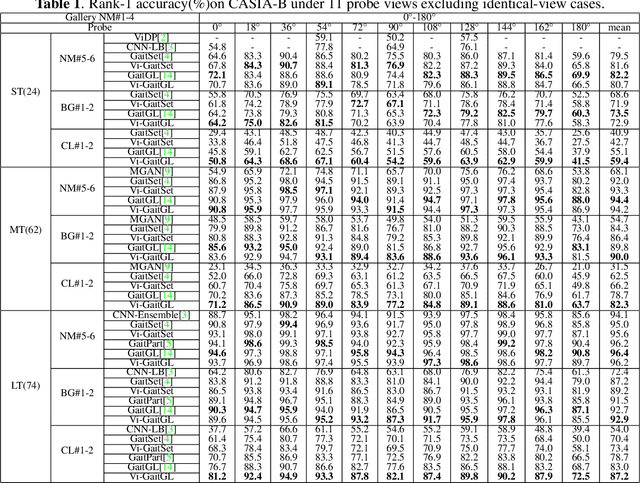

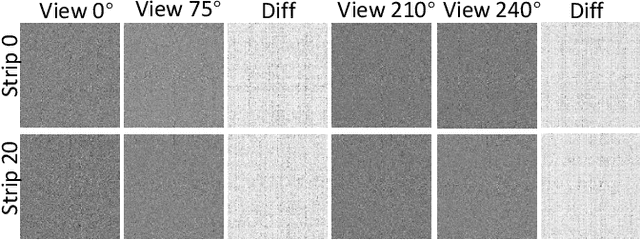

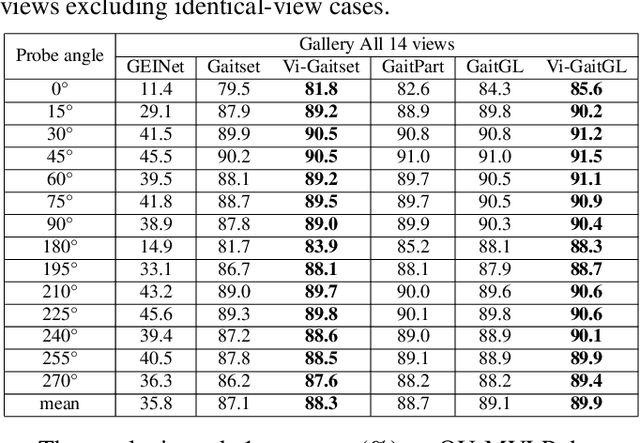

Silhouette based View embeddings for Gait Recognition under Multiple Views

Aug 12, 2021

Abstract:Gait recognition under multiple views is an important computer vision and pattern recognition task. In the emerging convolutional neural network based approaches, the information of view angle is ignored to some extent. Instead of direct view estimation and training view-specific recognition models, we propose a compatible framework that can embed view information into existing architectures of gait recognition. The embedding is simply achieved by a selective projection layer. Experimental results on two large public datasets show that the proposed framework is very effective.
