Martyna Plomecka

Estimating Pasture Biomass from Top-View Images: A Dataset for Precision Agriculture

Oct 27, 2025

Abstract: Accurate estimation of pasture biomass is important for decision-making in livestock production systems. Estimates of pasture biomass can be used to manage stocking rates to maximise pasture utilisation, while minimising the risk of overgrazing and promoting overall system health. We present a comprehensive dataset of 1,162 annotated top-view images of pastures collected across 19 locations in Australia. The images were taken across multiple seasons and include a range of temperate pasture species. Each image captures a 70 cm × 30 cm quadrat and is paired with on-ground measurements including biomass sorted by component (green, dead, and legume fraction), vegetation height, and Normalized Difference Vegetation Index (NDVI) from Active Optical Sensors (AOS). The multidimensional nature of the data, which combines visual, spectral, and structural information, opens up new possibilities for advancing the use of precision grazing management. The dataset is released and hosted in a Kaggle competition that challenges the international Machine Learning community with the task of pasture biomass estimation. The dataset is available on the official Kaggle webpage: https://www.kaggle.com/competitions/csiro-biomass

An Interpretable and Attention-based Method for Gaze Estimation Using Electroencephalography

Aug 09, 2023

Abstract: Eye movements can reveal valuable insights into various aspects of human mental processes, physical well-being, and actions. Recently, several datasets have been made available that simultaneously record EEG activity and eye movements. This has triggered the development of various methods to predict gaze direction based on brain activity. However, most of these methods lack interpretability, which limits their technology acceptance. In this paper, we leverage a large dataset of simultaneously measured electroencephalography (EEG) and eye-tracking recordings, proposing an interpretable model for gaze estimation from EEG data. More specifically, we present a novel attention-based deep learning framework for EEG signal analysis, which allows the network to focus on the most relevant information in the signal and discard problematic channels. Additionally, we provide a comprehensive evaluation of the presented framework, demonstrating its superiority over current methods in terms of accuracy and robustness. Finally, the study presents visualizations that explain the results of the analysis and highlights the potential of attention mechanisms for improving the efficiency and effectiveness of EEG data analysis in a variety of applications.

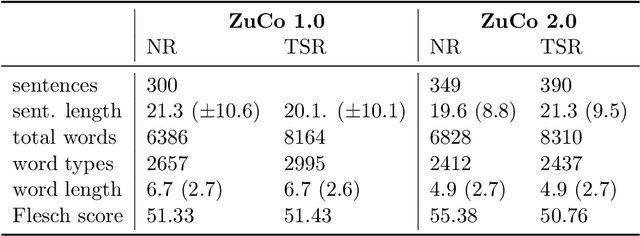

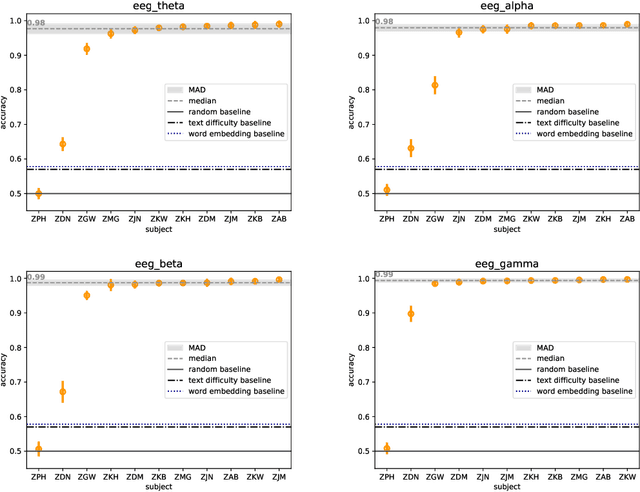

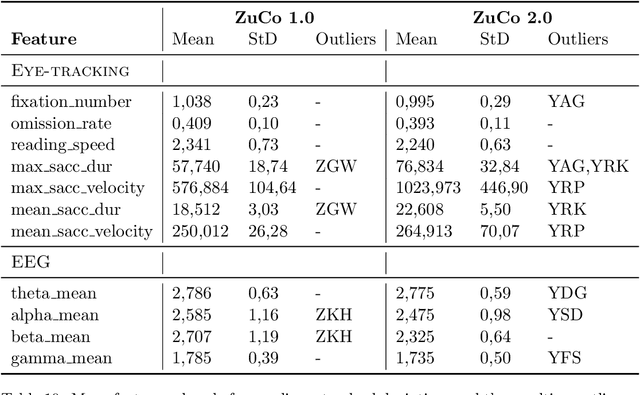

Reading Task Classification Using EEG and Eye-Tracking Data

Dec 12, 2021

Abstract: The Zurich Cognitive Language Processing Corpus (ZuCo) provides eye-tracking and EEG signals from two reading paradigms, normal reading and task-specific reading. We analyze whether machine learning methods are able to classify these two tasks using eye-tracking and EEG features. We implement models with aggregated sentence-level features as well as fine-grained word-level features. We test the models in within-subject and cross-subject evaluation scenarios. All models are tested on the ZuCo 1.0 and ZuCo 2.0 data subsets, which are characterized by differing recording procedures and thus allow for different levels of generalizability. Finally, we provide a series of control experiments to analyze the results in more detail.
