Lena A. Jäger

Language models emulate certain cognitive profiles: An investigation of how predictability measures interact with individual differences

Jun 07, 2024

Abstract:To date, most investigations on surprisal and entropy effects in reading have been conducted on the group level, disregarding individual differences. In this work, we revisit the predictive power of surprisal and entropy measures estimated from a range of language models (LMs) on data of human reading times as a measure of processing effort by incorporating information of language users' cognitive capacities. To do so, we assess the predictive power of surprisal and entropy estimated from generative LMs on reading data obtained from individuals who also completed a wide range of psychometric tests. Specifically, we investigate if modulating surprisal and entropy relative to cognitive scores increases prediction accuracy of reading times, and we examine whether LMs exhibit systematic biases in the prediction of reading times for cognitively high- or low-performing groups, revealing what type of psycholinguistic subject a given LM emulates. Our study finds that in most cases, incorporating cognitive capacities increases predictive power of surprisal and entropy on reading times, and that generally, high performance in the psychometric tests is associated with lower sensitivity to predictability effects. Finally, our results suggest that the analyzed LMs emulate readers with lower verbal intelligence, suggesting that for a given target group (i.e., individuals with high verbal intelligence), these LMs provide less accurate predictability estimates.

Reporting Eye-Tracking Data Quality: Towards a New Standard

Mar 31, 2024Abstract:Eye-tracking datasets are often shared in the format used by their creators for their original analyses, usually resulting in the exclusion of data considered irrelevant to the primary purpose. In order to increase re-usability of existing eye-tracking datasets for more diverse and initially not considered use cases, this work advocates a new approach of sharing eye-tracking data. Instead of publishing filtered and pre-processed datasets, the eye-tracking data at all pre-processing stages should be published together with data quality reports. In order to transparently report data quality and enable cross-dataset comparisons, we develop data quality reporting standards and metrics that can be automatically applied to a dataset, and integrate them into the open-source Python package pymovements (https://github.com/aeye-lab/pymovements).

PoTeC: A German Naturalistic Eye-tracking-while-reading Corpus

Mar 01, 2024Abstract:The Potsdam Textbook Corpus (PoTeC) is a naturalistic eye-tracking-while-reading corpus containing data from 75 participants reading 12 scientific texts. PoTeC is the first naturalistic eye-tracking-while-reading corpus that contains eye-movements from domain-experts as well as novices in a within-participant manipulation: It is based on a 2x2x2 fully-crossed factorial design which includes the participants' level of study and the participants' discipline of study as between-subject factors and the text domain as a within-subject factor. The participants' reading comprehension was assessed by a series of text comprehension questions and their domain knowledge was tested by text-independent background questions for each of the texts. The materials are annotated for a variety of linguistic features at different levels. We envision PoTeC to be used for a wide range of studies including but not limited to analyses of expert and non-expert reading strategies. The corpus and all the accompanying data at all stages of the preprocessing pipeline and all code used to preprocess the data are made available via GitHub: https://github.com/DiLi-Lab/PoTeC.

ScanDL: A Diffusion Model for Generating Synthetic Scanpaths on Texts

Oct 24, 2023

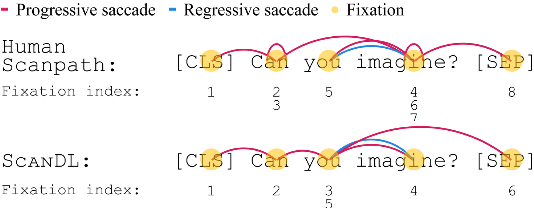

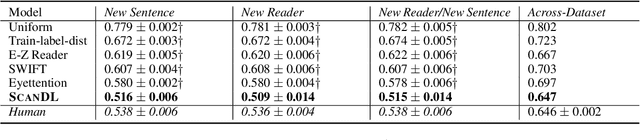

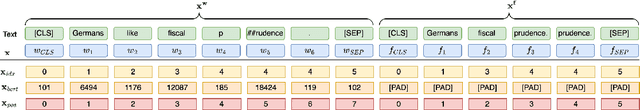

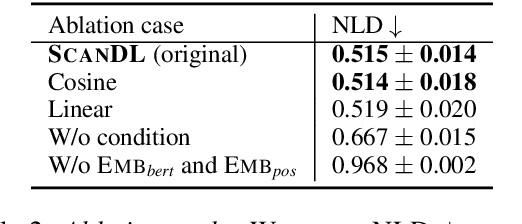

Abstract:Eye movements in reading play a crucial role in psycholinguistic research studying the cognitive mechanisms underlying human language processing. More recently, the tight coupling between eye movements and cognition has also been leveraged for language-related machine learning tasks such as the interpretability, enhancement, and pre-training of language models, as well as the inference of reader- and text-specific properties. However, scarcity of eye movement data and its unavailability at application time poses a major challenge for this line of research. Initially, this problem was tackled by resorting to cognitive models for synthesizing eye movement data. However, for the sole purpose of generating human-like scanpaths, purely data-driven machine-learning-based methods have proven to be more suitable. Following recent advances in adapting diffusion processes to discrete data, we propose ScanDL, a novel discrete sequence-to-sequence diffusion model that generates synthetic scanpaths on texts. By leveraging pre-trained word representations and jointly embedding both the stimulus text and the fixation sequence, our model captures multi-modal interactions between the two inputs. We evaluate ScanDL within- and across-dataset and demonstrate that it significantly outperforms state-of-the-art scanpath generation methods. Finally, we provide an extensive psycholinguistic analysis that underlines the model's ability to exhibit human-like reading behavior. Our implementation is made available at https://github.com/DiLi-Lab/ScanDL.

Pre-Trained Language Models Augmented with Synthetic Scanpaths for Natural Language Understanding

Oct 23, 2023

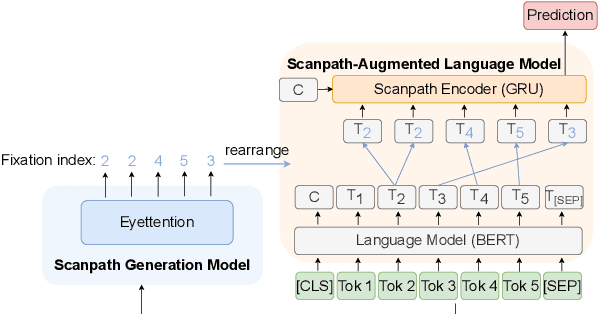

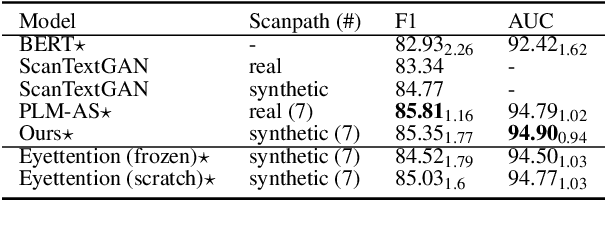

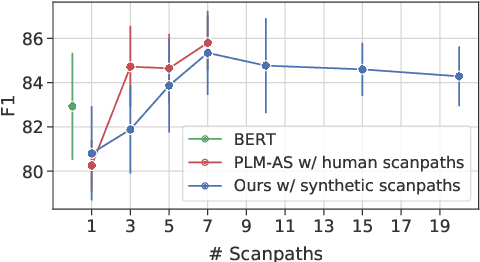

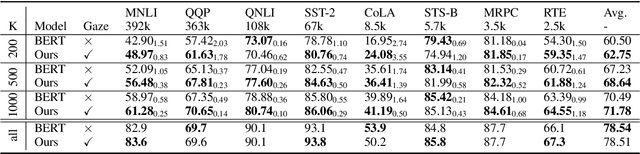

Abstract:Human gaze data offer cognitive information that reflects natural language comprehension. Indeed, augmenting language models with human scanpaths has proven beneficial for a range of NLP tasks, including language understanding. However, the applicability of this approach is hampered because the abundance of text corpora is contrasted by a scarcity of gaze data. Although models for the generation of human-like scanpaths during reading have been developed, the potential of synthetic gaze data across NLP tasks remains largely unexplored. We develop a model that integrates synthetic scanpath generation with a scanpath-augmented language model, eliminating the need for human gaze data. Since the model's error gradient can be propagated throughout all parts of the model, the scanpath generator can be fine-tuned to downstream tasks. We find that the proposed model not only outperforms the underlying language model, but achieves a performance that is comparable to a language model augmented with real human gaze data. Our code is publicly available.

Eyettention: An Attention-based Dual-Sequence Model for Predicting Human Scanpaths during Reading

Apr 21, 2023Abstract:Eye movements during reading offer insights into both the reader's cognitive processes and the characteristics of the text that is being read. Hence, the analysis of scanpaths in reading have attracted increasing attention across fields, ranging from cognitive science over linguistics to computer science. In particular, eye-tracking-while-reading data has been argued to bear the potential to make machine-learning-based language models exhibit a more human-like linguistic behavior. However, one of the main challenges in modeling human scanpaths in reading is their dual-sequence nature: the words are ordered following the grammatical rules of the language, whereas the fixations are chronologically ordered. As humans do not strictly read from left-to-right, but rather skip or refixate words and regress to previous words, the alignment of the linguistic and the temporal sequence is non-trivial. In this paper, we develop Eyettention, the first dual-sequence model that simultaneously processes the sequence of words and the chronological sequence of fixations. The alignment of the two sequences is achieved by a cross-sequence attention mechanism. We show that Eyettention outperforms state-of-the-art models in predicting scanpaths. We provide an extensive within- and across-data set evaluation on different languages. An ablation study and qualitative analysis support an in-depth understanding of the model's behavior.

Bridging the Gap: Gaze Events as Interpretable Concepts to Explain Deep Neural Sequence Models

Apr 12, 2023Abstract:Recent work in XAI for eye tracking data has evaluated the suitability of feature attribution methods to explain the output of deep neural sequence models for the task of oculomotric biometric identification. These methods provide saliency maps to highlight important input features of a specific eye gaze sequence. However, to date, its localization analysis has been lacking a quantitative approach across entire datasets. In this work, we employ established gaze event detection algorithms for fixations and saccades and quantitatively evaluate the impact of these events by determining their concept influence. Input features that belong to saccades are shown to be substantially more important than features that belong to fixations. By dissecting saccade events into sub-events, we are able to show that gaze samples that are close to the saccadic peak velocity are most influential. We further investigate the effect of event properties like saccadic amplitude or fixational dispersion on the resulting concept influence.

Detection of ADHD based on Eye Movements during Natural Viewing

Jul 14, 2022

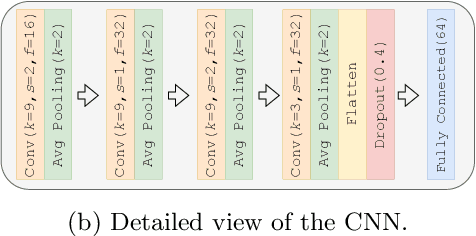

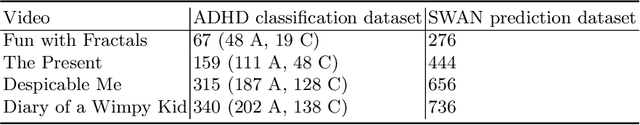

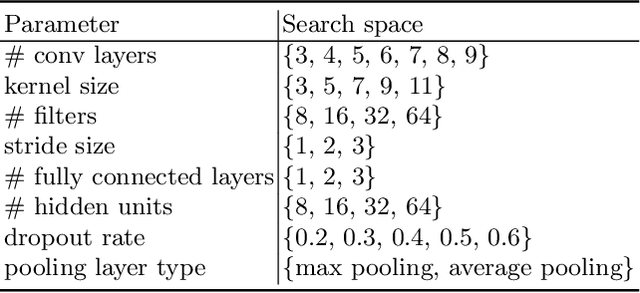

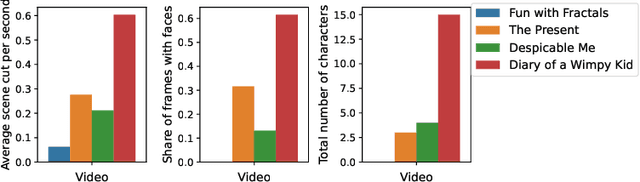

Abstract:Attention-deficit/hyperactivity disorder (ADHD) is a neurodevelopmental disorder that is highly prevalent and requires clinical specialists to diagnose. It is known that an individual's viewing behavior, reflected in their eye movements, is directly related to attentional mechanisms and higher-order cognitive processes. We therefore explore whether ADHD can be detected based on recorded eye movements together with information about the video stimulus in a free-viewing task. To this end, we develop an end-to-end deep learning-based sequence model which we pre-train on a related task for which more data are available. We find that the method is in fact able to detect ADHD and outperforms relevant baselines. We investigate the relevance of the input features in an ablation study. Interestingly, we find that the model's performance is closely related to the content of the video, which provides insights for future experimental designs.

Reading Task Classification Using EEG and Eye-Tracking Data

Dec 12, 2021

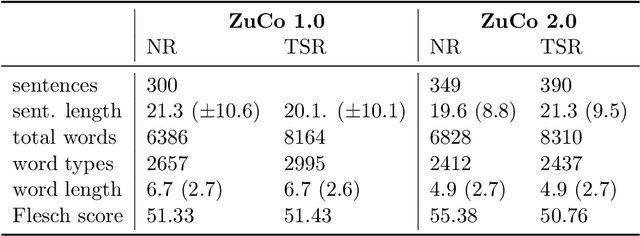

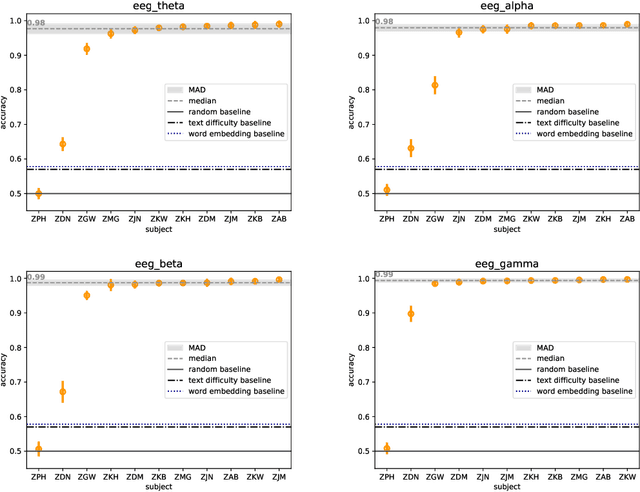

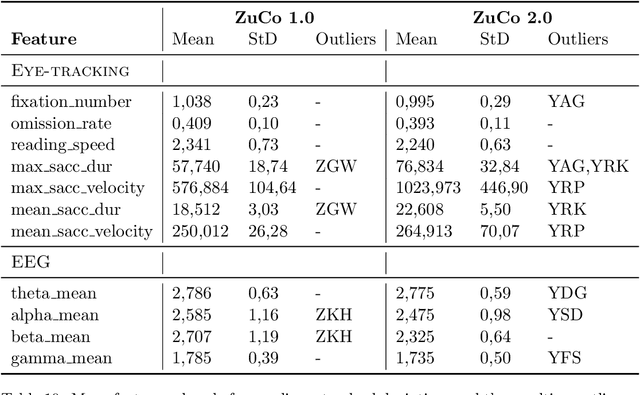

Abstract:The Zurich Cognitive Language Processing Corpus (ZuCo) provides eye-tracking and EEG signals from two reading paradigms, normal reading and task-specific reading. We analyze whether machine learning methods are able to classify these two tasks using eye-tracking and EEG features. We implement models with aggregated sentence-level features as well as fine-grained word-level features. We test the models in within-subject and cross-subject evaluation scenarios. All models are tested on the ZuCo 1.0 and ZuCo 2.0 data subsets, which are characterized by differing recording procedures and thus allow for different levels of generalizability. Finally, we provide a series of control experiments to analyze the results in more detail.

Discriminative Viewer Identification using Generative Models of Eye Gaze

Mar 25, 2020

Abstract:We study the problem of identifying viewers of arbitrary images based on their eye gaze. Psychological research has derived generative stochastic models of eye movements. In order to exploit this background knowledge within a discriminatively trained classification model, we derive Fisher kernels from different generative models of eye gaze. Experimentally, we find that the performance of the classifier strongly depends on the underlying generative model. Using an SVM with Fisher kernel improves the classification performance over the underlying generative model.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge