Tobias Scheffer

University of Potsdam

Pre-Trained Language Models Augmented with Synthetic Scanpaths for Natural Language Understanding

Oct 23, 2023

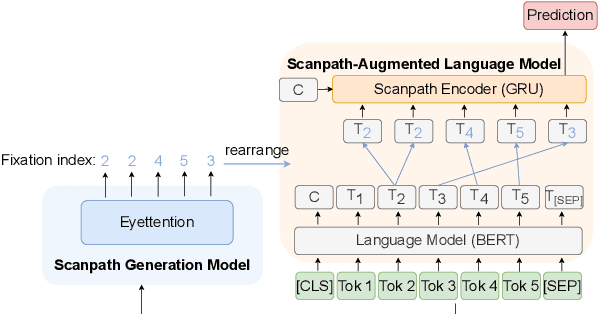

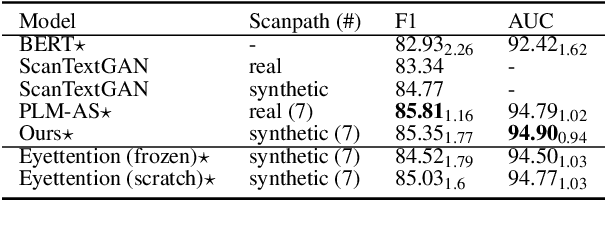

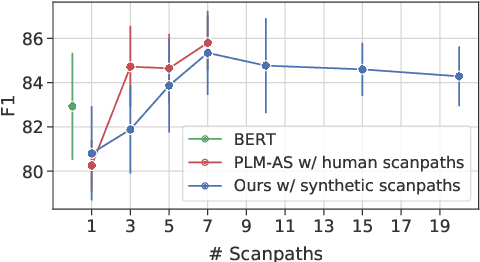

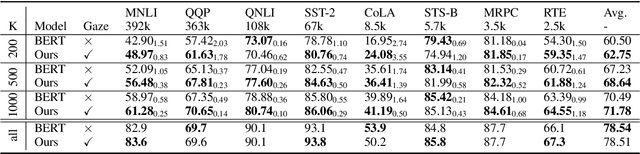

Abstract: Human gaze data offer cognitive information that reflects natural language comprehension. Indeed, augmenting language models with human scanpaths has proven beneficial for a range of NLP tasks, including language understanding. However, the applicability of this approach is limited: text corpora are abundant, but gaze data are scarce. Although models for the generation of human-like scanpaths during reading have been developed, the potential of synthetic gaze data across NLP tasks remains largely unexplored. We develop a model that integrates synthetic scanpath generation with a scanpath-augmented language model, eliminating the need for human gaze data. Since the model's error gradient can be propagated throughout all parts of the model, the scanpath generator can be fine-tuned to downstream tasks. We find that the proposed model not only outperforms the underlying language model, but also performs comparably to a language model augmented with real human gaze data. Our code is publicly available.

Eyettention: An Attention-based Dual-Sequence Model for Predicting Human Scanpaths during Reading

Apr 21, 2023

Abstract: Eye movements during reading offer insights into both the reader's cognitive processes and the characteristics of the text that is being read. Hence, the analysis of scanpaths in reading has attracted increasing attention across fields, ranging from cognitive science to linguistics and computer science. In particular, eye-tracking-while-reading data have been argued to have the potential to make machine-learning-based language models exhibit more human-like linguistic behavior. However, one of the main challenges in modeling human scanpaths in reading is their dual-sequence nature: the words are ordered following the grammatical rules of the language, whereas the fixations are ordered chronologically. As humans do not read strictly from left to right, but rather skip or refixate words and regress to previous words, the alignment of the linguistic and the temporal sequence is non-trivial. In this paper, we develop Eyettention, the first dual-sequence model that simultaneously processes the sequence of words and the chronological sequence of fixations. The alignment of the two sequences is achieved by a cross-sequence attention mechanism. We show that Eyettention outperforms state-of-the-art models in predicting scanpaths. We provide an extensive within- and across-dataset evaluation on different languages. An ablation study and qualitative analysis support an in-depth understanding of the model's behavior.
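Eyettention aligns the word sequence with the fixation sequence through cross-sequence attention. As a rough illustration of the general idea only, the sketch below shows generic scaled dot-product cross-attention in which each fixation embedding (query) attends over all word embeddings (keys and values). The function names, the plain-list vectors, and the absence of learned projections are simplifying assumptions, not the published architecture.

```python
import math

def softmax(scores):
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_sequence_attention(fixation_queries, word_keys, word_values):
    """Each fixation (query) attends over all words (keys/values).

    Returns one context vector per fixation plus the attention weights;
    each weight row is a soft alignment of one fixation to the words.
    """
    d = len(word_keys[0])
    contexts, weights = [], []
    for q in fixation_queries:
        # Scaled dot-product scores between this fixation and every word.
        scores = [dot(q, k) / math.sqrt(d) for k in word_keys]
        w = softmax(scores)
        # Context vector: attention-weighted sum of the word values.
        ctx = [sum(wi * v[j] for wi, v in zip(w, word_values))
               for j in range(len(word_values[0]))]
        contexts.append(ctx)
        weights.append(w)
    return contexts, weights
```

Because the queries come from the chronological fixation sequence and the keys from the linguistic word sequence, the two sequences may differ in length and order, which is what makes attention a natural fit for the alignment problem described above.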

Bridging the Gap: Gaze Events as Interpretable Concepts to Explain Deep Neural Sequence Models

Apr 12, 2023

Abstract: Recent work in XAI for eye-tracking data has evaluated the suitability of feature attribution methods to explain the output of deep neural sequence models for the task of oculomotric biometric identification. These methods provide saliency maps that highlight important input features of a specific eye gaze sequence. However, to date, the localization of these saliency maps has not been analyzed quantitatively across entire datasets. In this work, we employ established gaze event detection algorithms for fixations and saccades and quantitatively evaluate the impact of these events by determining their concept influence. Input features that belong to saccades are shown to be substantially more important than features that belong to fixations. By dissecting saccade events into sub-events, we are able to show that gaze samples that are close to the saccadic peak velocity are most influential. We further investigate the effect of event properties such as saccadic amplitude or fixational dispersion on the resulting concept influence.
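The abstract relies on established gaze event detection algorithms for fixations and saccades without naming them here. One widely used example is velocity-threshold identification (I-VT); the sketch below assumes I-VT purely for illustration, with coordinates in degrees of visual angle and a threshold of 30 deg/s chosen as an illustrative value, not as the paper's setting. The helper names `ivt_events` and `group_events` are hypothetical.

```python
import math

def ivt_events(xs, ys, sampling_rate_hz, velocity_threshold_deg_s=30.0):
    """Velocity-threshold identification (I-VT).

    Labels each inter-sample step as 'saccade' if its angular velocity
    exceeds the threshold, otherwise 'fixation'. Coordinates are assumed
    to be in degrees of visual angle.
    """
    dt = 1.0 / sampling_rate_hz
    labels = []
    for i in range(1, len(xs)):
        # Point-to-point velocity between consecutive samples (deg/s).
        velocity = math.hypot(xs[i] - xs[i - 1], ys[i] - ys[i - 1]) / dt
        labels.append("saccade" if velocity > velocity_threshold_deg_s
                      else "fixation")
    return labels

def group_events(labels):
    """Merge runs of identical labels into (label, start, end) events,
    where start and end are inclusive step indices."""
    events = []
    start = 0
    for i in range(1, len(labels) + 1):
        if i == len(labels) or labels[i] != labels[start]:
            events.append((labels[start], start, i - 1))
            start = i
    return events
```

Grouping the per-sample labels into events is what allows attribution scores to be aggregated per fixation or per saccade, the level at which the abstract compares concept influence.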

Detection of ADHD based on Eye Movements during Natural Viewing

Jul 14, 2022

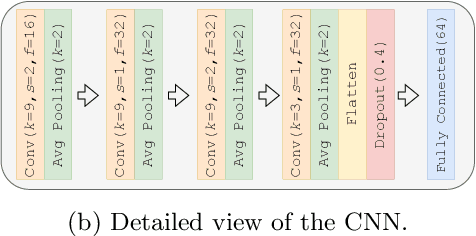

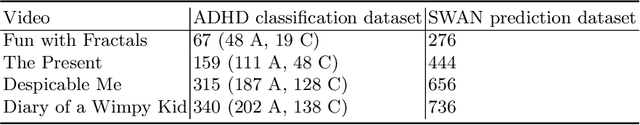

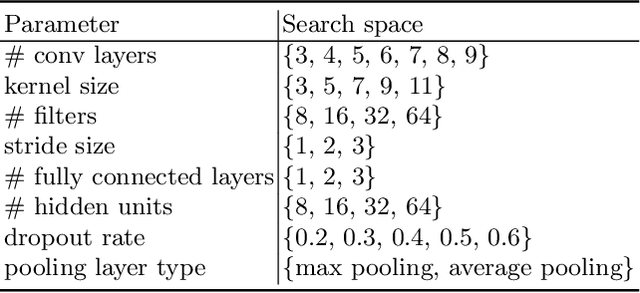

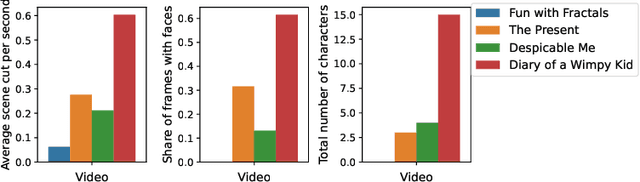

Abstract: Attention-deficit/hyperactivity disorder (ADHD) is a neurodevelopmental disorder that is highly prevalent and requires clinical specialists to diagnose. It is known that an individual's viewing behavior, reflected in their eye movements, is directly related to attentional mechanisms and higher-order cognitive processes. We therefore explore whether ADHD can be detected based on recorded eye movements together with information about the video stimulus in a free-viewing task. To this end, we develop an end-to-end deep learning-based sequence model which we pre-train on a related task for which more data are available. We find that the method is in fact able to detect ADHD and outperforms relevant baselines. We investigate the relevance of the input features in an ablation study. Interestingly, we find that the model's performance is closely related to the content of the video, which provides insights for future experimental designs.

Learning Explainable Representations of Malware Behavior

Jun 23, 2021

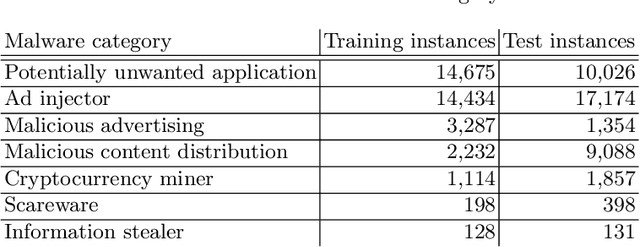

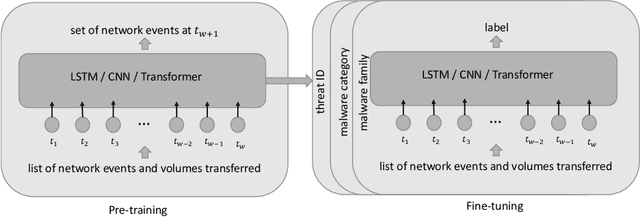

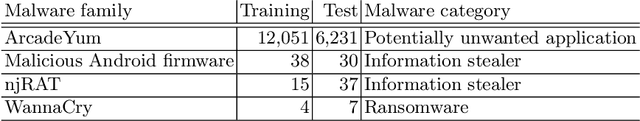

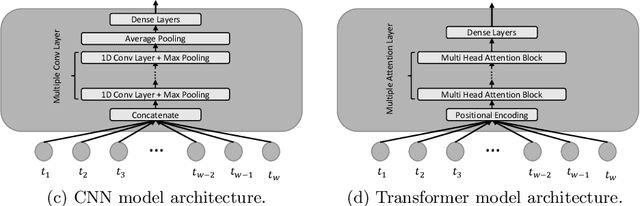

Abstract: We address the problems of identifying malware in network telemetry logs and providing indicators of compromise: comprehensible explanations of behavioral patterns that identify the threat. In our system, an array of specialized detectors abstracts network-flow data into comprehensible network events in a first step. We develop a neural network that processes this sequence of events and identifies specific threats, malware families and broad categories of malware. We then use the integrated-gradients method to highlight events that jointly constitute the characteristic behavioral pattern of the threat. We compare network architectures based on CNNs, LSTMs, and transformers, and explore the efficacy of unsupervised pre-training experimentally on large-scale telemetry data. We demonstrate how this system detects njRAT and other malware based on behavioral patterns.
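Integrated gradients attributes a model's output to its inputs by integrating the gradient along a straight path from a baseline x' to the input x: IG_i(x) = (x_i - x'_i) * integral from 0 to 1 of dF(x' + a (x - x'))/dx_i da. The sketch below shows the generic technique on a toy logistic model with an analytic gradient, using a midpoint Riemann sum. The model, its weights, the zero baseline, and the step count are illustrative assumptions; in the system described above, the gradient would instead come from the trained network over event sequences.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def model(x, w):
    # Toy differentiable detector: logistic score over input features.
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)))

def model_grad(x, w):
    # Analytic gradient of sigmoid(w . x) with respect to x.
    s = model(x, w)
    return [s * (1.0 - s) * wi for wi in w]

def integrated_gradients(x, baseline, w, steps=200):
    """Midpoint Riemann approximation of integrated gradients."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        # Point on the straight path from the baseline to the input.
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        g = model_grad(point, w)
        total = [t + gi for t, gi in zip(total, g)]
    # Scale the averaged gradient by the input-baseline difference.
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]
```

A useful sanity check is completeness: the attributions sum (up to discretization error) to F(x) - F(x'), and a feature equal to its baseline value receives zero attribution.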

Discriminative Viewer Identification using Generative Models of Eye Gaze

Mar 25, 2020

Abstract: We study the problem of identifying viewers of arbitrary images based on their eye gaze. Psychological research has derived generative stochastic models of eye movements. In order to exploit this background knowledge within a discriminatively trained classification model, we derive Fisher kernels from different generative models of eye gaze. Experimentally, we find that the performance of the classifier strongly depends on the underlying generative model. Using an SVM with Fisher kernel improves the classification performance over the underlying generative model.
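A Fisher kernel maps an observation x to its Fisher score U_x = ∇_θ log p(x | θ) under a generative model and compares two observations via K(x, y) = U_x^T I^{-1} U_y, where I is the Fisher information. The sketch below uses a deliberately simple i.i.d. Gaussian model over scalar observations (for instance, fixation durations) as a stand-in; the generative models of eye gaze in the paper are richer, and the normalization of scores by sequence length is an assumption made so that sequences of different lengths are comparable.

```python
def gaussian_fisher_score(seq, mu, sigma):
    """Length-normalized gradient of log p(seq | mu, sigma) for an i.i.d.
    Gaussian model, taken with respect to (mu, sigma)."""
    n = len(seq)
    d_mu = sum((x - mu) / sigma ** 2 for x in seq) / n
    d_sigma = sum(-1.0 / sigma + (x - mu) ** 2 / sigma ** 3 for x in seq) / n
    return [d_mu, d_sigma]

def fisher_kernel(seq_a, seq_b, mu, sigma):
    """K(a, b) = U_a^T I^{-1} U_b, using the per-observation Fisher
    information of a Gaussian in (mu, sigma): I = diag(1/sigma^2, 2/sigma^2)."""
    ua = gaussian_fisher_score(seq_a, mu, sigma)
    ub = gaussian_fisher_score(seq_b, mu, sigma)
    inv_info = [sigma ** 2, sigma ** 2 / 2.0]  # diagonal of I^{-1}
    return sum(a * i * b for a, i, b in zip(ua, inv_info, ub))
```

The Gram matrix of such a kernel over training sequences could then be passed to any SVM implementation that accepts precomputed kernels, which is how background knowledge encoded in the generative model enters a discriminatively trained classifier.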

Deep Eyedentification: Biometric Identification using Micro-Movements of the Eye

Jul 04, 2019

Abstract: We study involuntary micro-movements of the eye for biometric identification. While prior studies extract lower-frequency macro-movements from the output of video-based eye-tracking systems and engineer explicit features of these macro-movements, we develop a deep convolutional architecture that processes the raw eye-tracking signal. Compared to prior work, the network attains an error rate that is lower by one order of magnitude and is faster by two orders of magnitude: it identifies users accurately within seconds.

Joint Detection of Malicious Domains and Infected Clients

Jun 21, 2019

Abstract: Detection of malware-infected computers and detection of malicious web domains based on their encrypted HTTPS traffic are challenging problems, because only addresses, timestamps, and data volumes are observable. The detection problems are coupled, because infected clients tend to interact with malicious domains. Traffic data can be collected at a large scale, and antivirus tools can be used to identify infected clients in retrospect. Domains, by contrast, have to be labeled individually after forensic analysis. We explore transfer learning based on sluice networks; this allows the detection models to bootstrap each other. In a large-scale experimental study, we find that the model outperforms known reference models and detects previously unknown malware, previously unknown malware families, and previously unknown malicious domains.

A Discriminative Model for Identifying Readers and Assessing Text Comprehension from Eye Movements

Sep 21, 2018

Abstract: We study the problem of inferring readers' identities and estimating their level of text comprehension from observations of their eye movements during reading. We develop a generative model of individual gaze patterns (scanpaths) that makes use of lexical features of the fixated words. Using this generative model, we derive a Fisher-score representation of eye-movement sequences. We study whether a Fisher-SVM with this Fisher kernel and several reference methods are able to identify readers and estimate their level of text comprehension based on eye-tracking data. While none of the methods is able to estimate text comprehension accurately, we find that the SVM with Fisher kernel excels at identifying readers.

Varying-coefficient models with isotropic Gaussian process priors

Oct 14, 2015

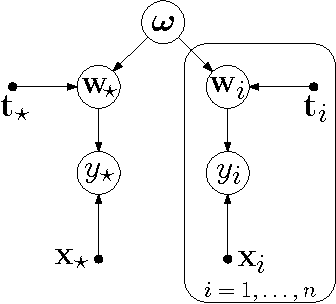

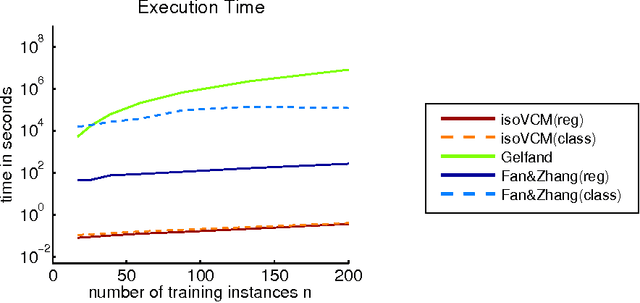

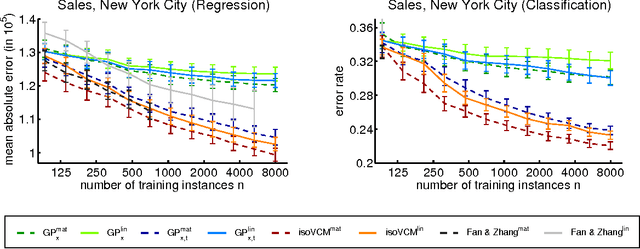

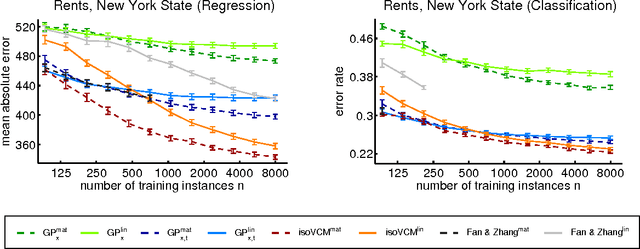

Abstract: We study learning problems in which the conditional distribution of the output given the input varies as a function of additional task variables. In varying-coefficient models with Gaussian process priors, a Gaussian process generates the functional relationship between the task variables and the parameters of this conditional. Varying-coefficient models subsume hierarchical Bayesian multitask models, but also generalizations in which the conditional varies continuously, for instance, in time or space. However, Bayesian inference in varying-coefficient models is generally intractable. We show that inference for varying-coefficient models with isotropic Gaussian process priors resolves to standard inference for a Gaussian process that can be solved efficiently. MAP inference in this model resolves to multitask learning using task and instance kernels, and inference for hierarchical Bayesian multitask models can be carried out efficiently using graph-Laplacian kernels. We report on experiments for geospatial prediction.
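The abstract states that MAP inference reduces to multitask learning with task and instance kernels. A minimal sketch of that kind of setup, assuming a product of two squared-exponential kernels (one over task variables such as a location, one over instance features) and standard GP regression, is shown below. The kernel choice, length scales, noise level, and all function names are illustrative assumptions, not the paper's derivation; for discrete tasks, a graph-Laplacian kernel could take the place of the task kernel.

```python
import math

def rbf(a, b, length_scale):
    # Squared-exponential kernel on vectors given as lists.
    sq = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-sq / (2.0 * length_scale ** 2))

def joint_kernel(p, q, task_ls=1.0, inst_ls=1.0):
    """Product of a task kernel and an instance kernel; p and q are
    (x, t) pairs of instance features and task variables."""
    (x1, t1), (x2, t2) = p, q
    return rbf(t1, t2, task_ls) * rbf(x1, x2, inst_ls)

def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    # Back substitution on the upper-triangular system.
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][c] * z[c] for c in range(r + 1, n))) / M[r][r]
    return z

def gp_posterior_mean(train, y, test, noise=0.1):
    """GP regression mean k_*^T (K + noise * I)^{-1} y over joint
    (instance, task) inputs."""
    K = [[joint_kernel(p, q) + (noise if i == j else 0.0)
          for j, q in enumerate(train)] for i, p in enumerate(train)]
    alpha = solve(K, y)
    return [sum(a * joint_kernel(p, q) for a, q in zip(alpha, train))
            for p in test]
```

With this construction, observations from tasks whose variables are close (for instance, nearby locations) share statistical strength, while distant tasks barely influence each other.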
