Mingshen Wang

NTIRE 2025 Image Shadow Removal Challenge Report

Jun 18, 2025

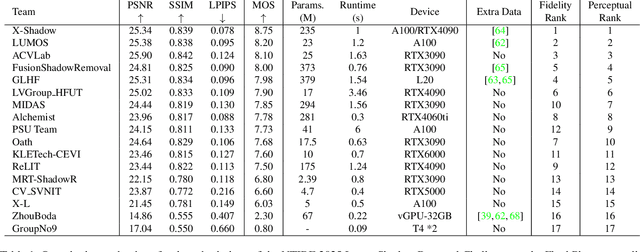

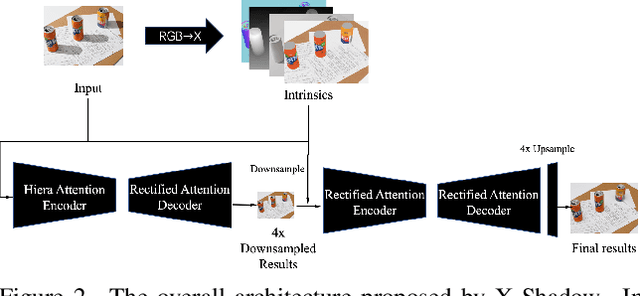

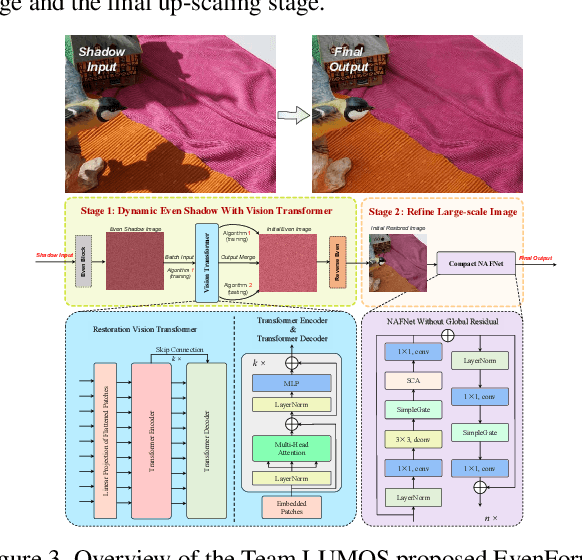

Abstract: This work examines the findings of the NTIRE 2025 Shadow Removal Challenge. A total of 306 participants registered, with 17 teams successfully submitting their solutions during the final evaluation phase. Following the last two editions, this challenge had two evaluation tracks: one focusing on reconstruction fidelity and the other on visual perception through a user study. Both tracks were evaluated with images from the WSRD+ dataset, simulating interactions between self- and cast-shadows with a large number of diverse objects, textures, and materials.

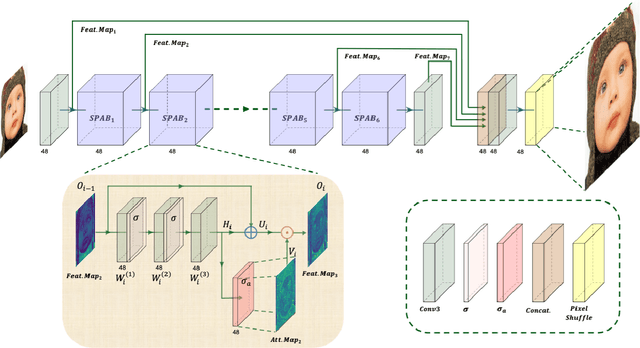

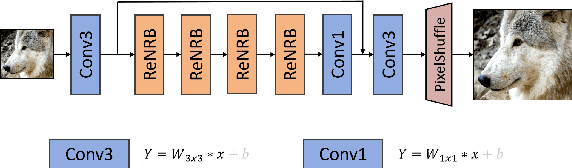

Thinking in Granularity: Dynamic Quantization for Image Super-Resolution by Intriguing Multi-Granularity Clues

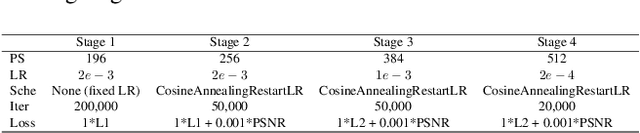

Sep 22, 2024

Abstract: Dynamic quantization has attracted rising attention in image super-resolution (SR) as it expands the potential of heavy SR models onto mobile devices while preserving competitive performance. Existing methods explore layer-to-bit configuration upon varying local regions, adaptively allocating the bit to each layer and patch. Despite the benefits, they still fall short in the trade-off of SR accuracy and quantization efficiency. Apart from this, adapting the quantization level for each layer individually can disturb the original inter-layer relationships, thus diminishing the representation capability of quantized models. In this work, we propose Granular-DQ, which capitalizes on the intrinsic characteristics of images while dispensing with the previous consideration for layer sensitivity in quantization. Granular-DQ conducts a multi-granularity analysis of local patches with further exploration of their information densities, achieving a distinctive patch-wise and layer-invariant dynamic quantization paradigm. Specifically, Granular-DQ initiates by developing a granularity-bit controller (GBC) to apprehend the coarse-to-fine granular representations of different patches, matching their proportional contribution to the entire image to determine the proper bit-width allocation. On this premise, we investigate the relation between bit-width and information density, devising an entropy-to-bit (E2B) mechanism that enables further fine-grained dynamic bit adaption of high-bit patches. Extensive experiments validate the superiority and generalization ability of Granular-DQ over recent state-of-the-art methods on various SR models. Code will be available at https://github.com/MmmingS/Granular-DQ.git.
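To make the idea of tying bit-width to information density more concrete, the sketch below computes the Shannon entropy of a patch's intensity histogram and maps it to a bit-width. It is only an illustration and not the Granular-DQ method: the function names `patch_entropy` and `entropy_to_bits`, the thresholds, and the candidate bit-widths are all assumptions made for this example and do not come from the abstract.

```python
import math
from collections import Counter


def patch_entropy(pixels):
    """Shannon entropy (in bits) of the intensity histogram of one patch.

    `pixels` is a flat sequence of integer intensities.
    """
    if not pixels:
        raise ValueError("patch must contain at least one pixel")
    total = len(pixels)
    counts = Counter(pixels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def entropy_to_bits(entropy, thresholds=(2.0, 5.0), bit_widths=(4, 6, 8)):
    """Map an entropy value to a bit-width.

    The thresholds and bit-widths are hypothetical placeholders chosen for
    this sketch; they are not values reported for Granular-DQ.
    """
    for threshold, bits in zip(thresholds, bit_widths):
        if entropy < threshold:
            return bits
    # Entropy at or above every threshold gets the widest setting.
    return bit_widths[-1]


flat_patch = [7] * 16          # constant patch, carries no information
busy_patch = list(range(64))   # 64 distinct, equally likely intensities
print(entropy_to_bits(patch_entropy(flat_patch)))
print(entropy_to_bits(patch_entropy(busy_patch)))
```

Under these assumed thresholds, a flat patch is assigned the narrowest bit-width and a texture-rich patch the widest, which is the general intuition behind allocating precision by information density.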

NTIRE 2024 Challenge on Low Light Image Enhancement: Methods and Results

Apr 22, 2024

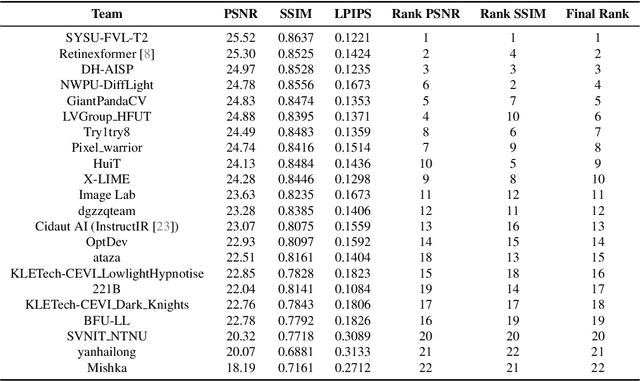

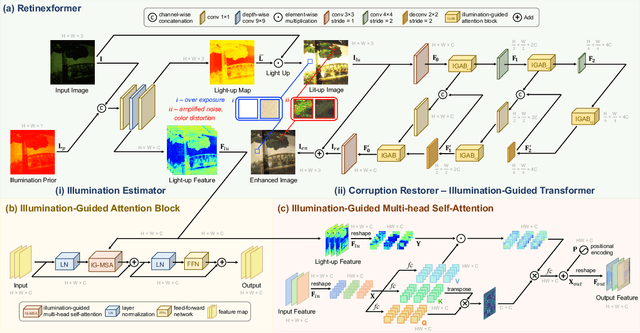

Abstract: This paper reviews the NTIRE 2024 low light image enhancement challenge, highlighting the proposed solutions and results. The aim of this challenge is to discover an effective network design or solution capable of generating brighter, clearer, and visually appealing results when dealing with a variety of conditions, including ultra-high resolution (4K and beyond), non-uniform illumination, backlighting, extreme darkness, and night scenes. A notable total of 428 participants registered for the challenge, with 22 teams ultimately making valid submissions. This paper meticulously evaluates the state-of-the-art advancements in enhancing low-light images, reflecting the significant progress and creativity in this field.

The Ninth NTIRE 2024 Efficient Super-Resolution Challenge Report

Apr 16, 2024

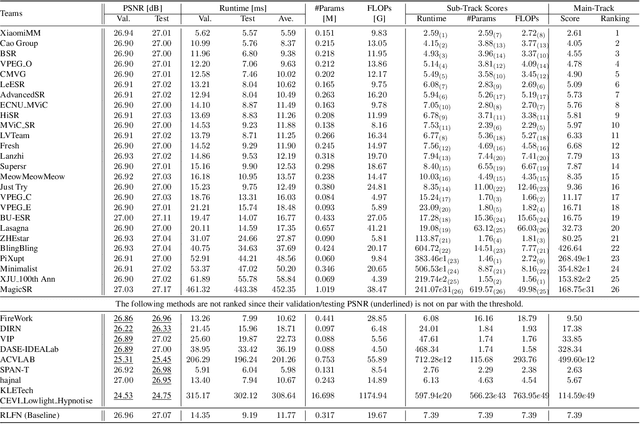

Abstract: This paper provides a comprehensive review of the NTIRE 2024 challenge, focusing on efficient single-image super-resolution (ESR) solutions and their outcomes. The task of this challenge is to super-resolve an input image with a magnification factor of ×4 based on pairs of low and corresponding high-resolution images. The primary objective is to develop networks that optimize various aspects such as runtime, parameters, and FLOPs, while still maintaining a peak signal-to-noise ratio (PSNR) of approximately 26.90 dB on the DIV2K_LSDIR_valid dataset and 26.99 dB on the DIV2K_LSDIR_test dataset. In addition, this challenge has 4 tracks including the main track (overall performance), sub-track 1 (runtime), sub-track 2 (FLOPs), and sub-track 3 (parameters). In the main track, all three metrics (i.e., runtime, FLOPs, and parameter count) were considered. The ranking of the main track is calculated based on a weighted sum of the scores of all other sub-tracks. In sub-track 1, the practical runtime performance of the submissions was evaluated, and the corresponding score was used to determine the ranking. In sub-track 2, the number of FLOPs was considered. The score calculated based on the corresponding FLOPs was used to determine the ranking. In sub-track 3, the number of parameters was considered. The score calculated based on the corresponding parameters was used to determine the ranking. RLFN is set as the baseline for efficiency measurement. The challenge had 262 registered participants, and 34 teams made valid submissions. They gauge the state-of-the-art in efficient single-image super-resolution. To facilitate the reproducibility of the challenge and enable other researchers to build upon these findings, the code and the pre-trained model of validated solutions are made publicly available at https://github.com/Amazingren/NTIRE2024_ESR/.
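For readers unfamiliar with the fidelity metric named above, the following is a minimal sketch of the standard PSNR definition, 10 · log10(MAX² / MSE), over flat pixel sequences. The function name `psnr` and the default peak value of 255 (8-bit images) are assumptions for this example; the challenge's own evaluation script may differ in details such as color space or border cropping, which the abstract does not specify.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized
    flat sequences of pixel values.

    `max_value` is the peak intensity; 255 assumes 8-bit images.
    """
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


ground_truth = [100, 100, 100, 100]
prediction = [110, 90, 110, 90]
print(f"{psnr(ground_truth, prediction):.2f} dB")
```

A submission's mean PSNR over a dataset, computed along these lines, could then be compared against a threshold such as the 26.90 dB figure quoted for the validation set.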
