Mikhail Martynov

MorphoNavi: Aerial-Ground Robot Navigation with Object Oriented Mapping in Digital Twin

Apr 23, 2025

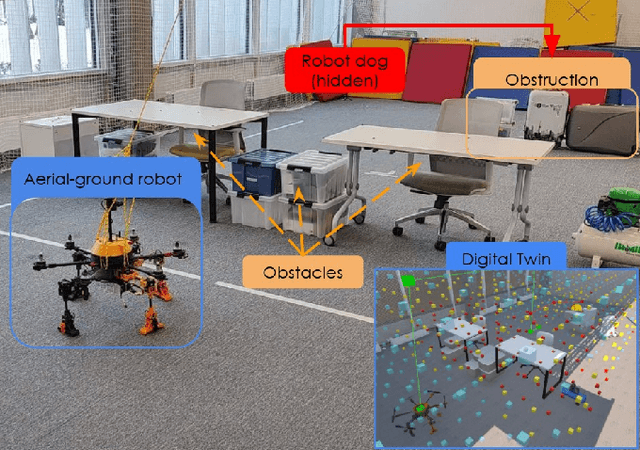

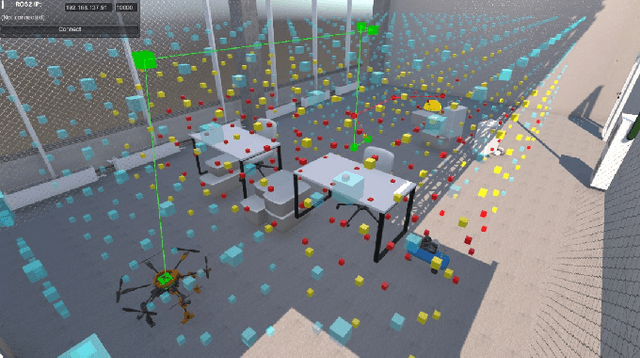

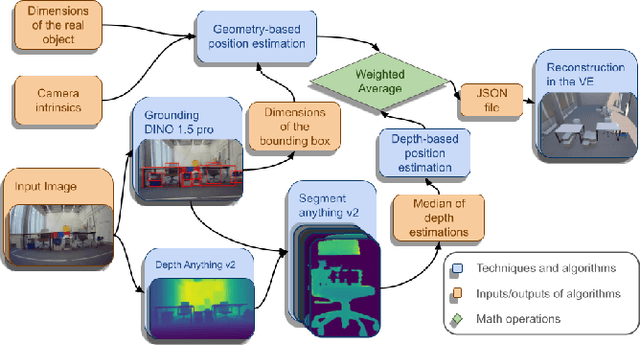

Abstract: This paper presents a novel mapping approach for a universal aerial-ground robotic system that uses a single monocular camera. The proposed system can detect a diverse range of objects and estimate their positions without requiring fine-tuning for specific environments. The system's performance was evaluated in a simulated search-and-rescue scenario, where the MorphoGear robot successfully located a robotic dog while an operator monitored the process. This work contributes to the development of intelligent, multimodal robotic systems capable of operating in unstructured environments.
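The abstract does not say how object positions are recovered from a single camera. One common way to get metric positions from a monocular image is to back-project the bottom-centre pixel of a detection onto a known ground plane. The sketch below assumes a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`, a known camera pose (`r_wc`, `t_wc`), and flat ground at `z = 0`. All of these names and assumptions are hypothetical and are not taken from the paper.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def mat_vec(m: Sequence[Sequence[float]], v: Vec3) -> Vec3:
    """Multiply a 3x3 matrix (given as rows) by a 3-vector."""
    return (
        m[0][0] * v[0] + m[0][1] * v[1] + m[0][2] * v[2],
        m[1][0] * v[0] + m[1][1] * v[1] + m[1][2] * v[2],
        m[2][0] * v[0] + m[2][1] * v[1] + m[2][2] * v[2],
    )


def pixel_to_ground(u: float, v: float,
                    fx: float, fy: float, cx: float, cy: float,
                    r_wc: Sequence[Sequence[float]], t_wc: Vec3) -> Optional[Vec3]:
    """Back-project pixel (u, v) onto the flat ground plane z = 0 (hypothetical model).

    r_wc rotates camera-frame vectors into the world frame; t_wc is the camera
    position in the world frame. Returns None if the viewing ray never reaches
    the ground in front of the camera.
    """
    # Viewing ray in the camera frame (x right, y down, z along the optical axis).
    d_cam = ((u - cx) / fx, (v - cy) / fy, 1.0)
    d_world = mat_vec(r_wc, d_cam)
    if abs(d_world[2]) < 1e-9:
        return None  # ray is parallel to the ground
    s = -t_wc[2] / d_world[2]  # ray parameter at which z reaches 0
    if s <= 0:
        return None  # ground intersection lies behind the camera
    return (t_wc[0] + s * d_world[0],
            t_wc[1] + s * d_world[1],
            t_wc[2] + s * d_world[2])


# Example: camera 2 m above the ground at (1, 2), looking straight down.
R_DOWN = [[1, 0, 0], [0, -1, 0], [0, 0, -1]]
print(pixel_to_ground(820, 240, 500, 500, 320, 240, R_DOWN, (1.0, 2.0, 2.0)))  # → (3.0, 2.0, 0.0)
```

A real aerial-ground robot would take `r_wc` and `t_wc` from its state estimator. The flat-ground assumption fails for objects on slopes or elevated surfaces.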

UAV-VLA: Vision-Language-Action System for Large Scale Aerial Mission Generation

Jan 09, 2025

Abstract: The UAV-VLA (Vision-Language-Action) system is a tool designed to facilitate communication with aerial robots. By integrating satellite imagery processing with a Visual Language Model (VLM) and the powerful capabilities of GPT, UAV-VLA enables users to generate general flight paths and action plans through simple text requests. The system leverages the rich contextual information in satellite images, allowing for enhanced decision-making and mission planning. The combination of visual analysis by the VLM and natural language processing by GPT provides the user with a set of paths and actions, making aerial operations more efficient and accessible. The trajectories created by the newly developed method differed in length by 22%, and the mean error in locating objects of interest on the map was 34.22 m in Euclidean distance, using a K-Nearest Neighbors (KNN) approach.
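The abstract does not define exactly how the two reported figures are computed. Below is a minimal sketch of one plausible reading. Each predicted object location is matched to its nearest ground-truth location (a 1-nearest-neighbour query), and the Euclidean distances are averaged. The trajectory figure is read as the relative difference between two polyline lengths. The function names, the use of planar map coordinates in metres, and the choice of reference path are assumptions.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def mean_nearest_neighbor_error(predicted: Sequence[Point],
                                ground_truth: Sequence[Point]) -> float:
    """Average Euclidean distance from each predicted object to its nearest true object (1-NN)."""
    if not predicted or not ground_truth:
        raise ValueError("both point sets must be non-empty")
    return sum(min(math.dist(p, g) for g in ground_truth) for p in predicted) / len(predicted)


def path_length(path: Sequence[Point]) -> float:
    """Total length of a polyline through the given waypoints."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def relative_length_difference(path: Sequence[Point], reference: Sequence[Point]) -> float:
    """|len(path) - len(reference)| / len(reference); 0.22 would mean a 22% difference."""
    ref = path_length(reference)
    return abs(path_length(path) - ref) / ref


print(mean_nearest_neighbor_error([(0, 0), (10, 0)], [(3, 4), (10, 1)]))  # → 3.0
print(relative_length_difference([(0, 0), (3, 4)], [(0, 0), (4, 0)]))     # → 0.25
```

A brute-force 1-NN search is enough for a handful of map objects. For large point sets, a spatial index such as a k-d tree would be the usual replacement.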

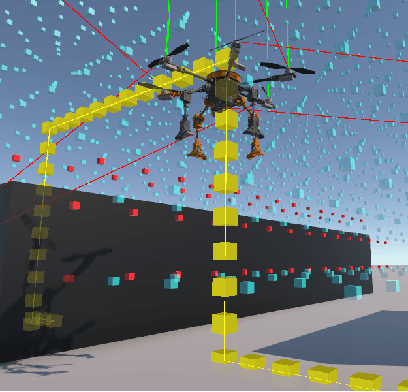

MorphoMove: Bi-Modal Path Planner with MPC-based Path Follower for Multi-Limb Morphogenetic UAV

Jul 12, 2024

Abstract: This paper discusses developments for MorphoGear, a multi-limb morphogenetic UAV capable of both aerial flight and ground locomotion. A hybrid path planning algorithm based on the A* strategy has been developed. It enables seamless transitions between aerial and ground navigation modes, thereby enhancing the robot's mobility in complex environments. Moreover, precise path following during ground locomotion is achieved with a Model Predictive Control (MPC) architecture for the robot's novel walking behaviour. Experimental validation was conducted in the Unity simulation environment, with Python scripts computing the control values. The results show a Root Mean Squared Error (RMSE) of 0.91 cm and a maximum error of 1.85 cm. These developments highlight MorphoGear's adaptability in navigating cluttered environments and establish it as a usable tool for autonomous exploration, both aerial and ground-based.
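The abstract names A* as the basis of the bi-modal planner but gives no cost model. The following is a minimal sketch, assuming a 4-connected occupancy grid where each search state is a (cell, mode) pair. Walking is allowed only on free cells, flying is allowed over any cell, and taking off or landing is a mode switch with its own cost. The cost values (`walk_cost`, `fly_cost`, `switch_cost`) and the grid encoding are hypothetical.

```python
import heapq
from typing import Dict, Iterator, List, Optional, Sequence, Tuple

GROUND, AIR = 0, 1
State = Tuple[int, int, int]  # (row, col, mode)


def bimodal_astar(grid: Sequence[Sequence[int]], start: Tuple[int, int], goal: Tuple[int, int],
                  walk_cost: float = 1.0, fly_cost: float = 3.0,
                  switch_cost: float = 5.0) -> Tuple[Optional[List[State]], float]:
    """A* over (cell, mode) states.

    grid[r][c] == 1 marks a cell the robot cannot walk on but can fly over.
    Start and goal are reached in ground mode.
    """
    rows, cols = len(grid), len(grid[0])
    start_s: State = (start[0], start[1], GROUND)
    goal_s: State = (goal[0], goal[1], GROUND)
    step_min = min(walk_cost, fly_cost)

    def h(s: State) -> float:
        # Manhattan distance times the cheapest per-cell cost: never overestimates.
        return (abs(s[0] - goal[0]) + abs(s[1] - goal[1])) * step_min

    def neighbors(s: State) -> Iterator[Tuple[State, float]]:
        r, c, mode = s
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            if mode == GROUND and grid[nr][nc] == 0:
                yield (nr, nc, GROUND), walk_cost
            elif mode == AIR:
                yield (nr, nc, AIR), fly_cost
        # Mode switch in place: take off anywhere, land only on walkable cells.
        if mode == GROUND:
            yield (r, c, AIR), switch_cost
        elif grid[r][c] == 0:
            yield (r, c, GROUND), switch_cost

    best: Dict[State, float] = {start_s: 0.0}
    parent: Dict[State, Optional[State]] = {start_s: None}
    frontier = [(h(start_s), 0.0, start_s)]
    while frontier:
        _, g, s = heapq.heappop(frontier)
        if g > best.get(s, float("inf")):
            continue  # stale heap entry
        if s == goal_s:
            path: List[State] = []
            node: Optional[State] = s
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1], g
        for nxt, cost in neighbors(s):
            ng = g + cost
            if ng < best.get(nxt, float("inf")):
                best[nxt] = ng
                parent[nxt] = s
                heapq.heappush(frontier, (ng + h(nxt), ng, nxt))
    return None, float("inf")


path, cost = bimodal_astar([[0, 0, 1, 0, 0]], (0, 0), (0, 4))
print(cost)  # → 18.0: walk 1, take off 5, fly 3 + 3, land 5, walk 1
```

Because the mode is part of the state, A* itself decides where to take off and land. Raising `fly_cost` or `switch_cost` biases the plan toward walking.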

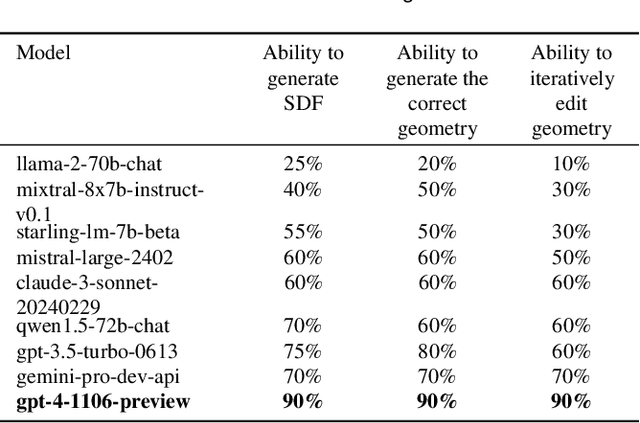

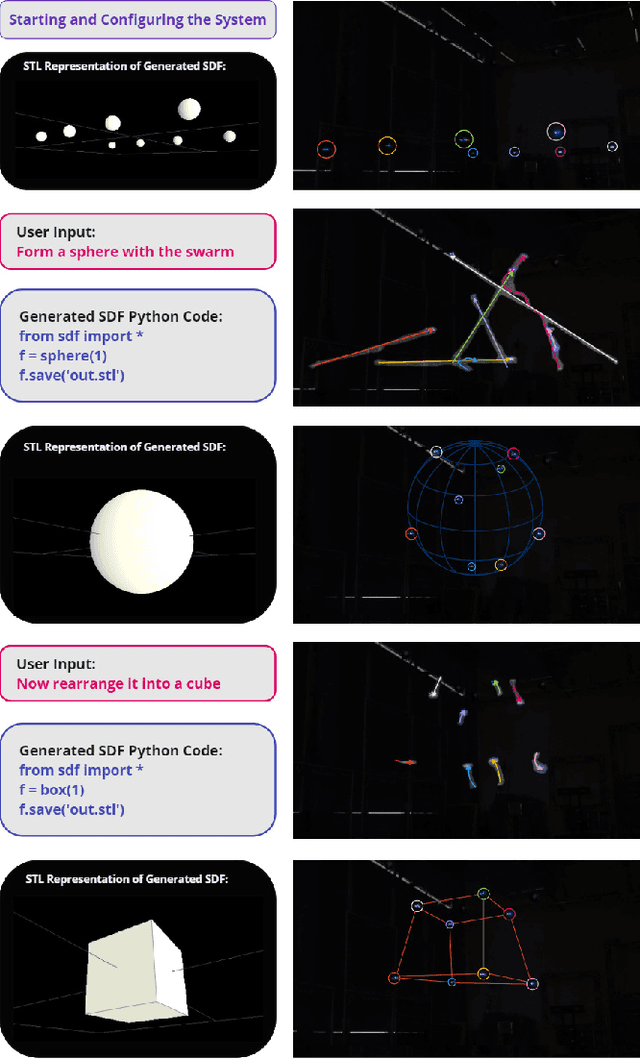

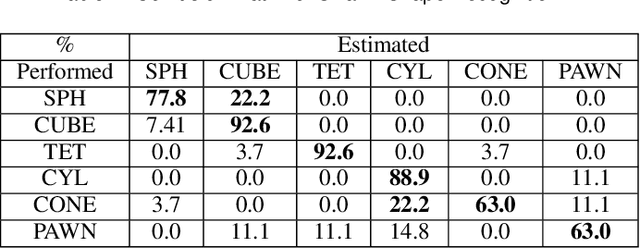

FlockGPT: Guiding UAV Flocking with Linguistic Orchestration

May 09, 2024

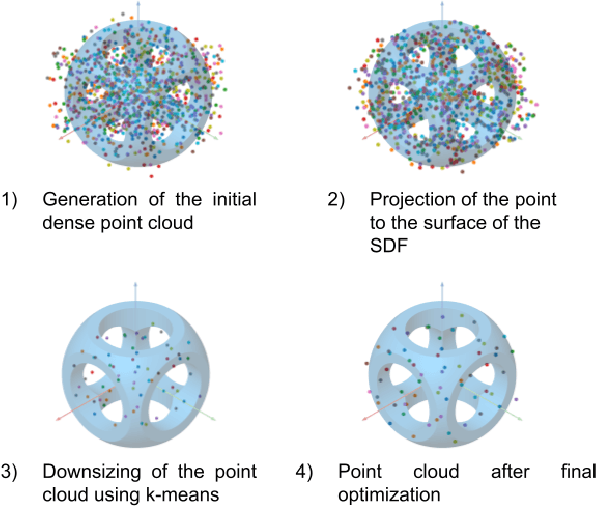

Abstract: This article presents the world's first rapid drone flocking control using natural language through generative AI. The described approach enables intuitive orchestration of a flock of any size to achieve the desired geometry. The key feature of the method is a new interface based on Large Language Models that communicates with the user and generates descriptions of the target geometry. Users can interactively modify or comment on the flock geometry model while it is being constructed. By combining flocking technology with a target surface defined by a signed distance function, the method achieves smooth and adaptive movement of the drone swarm between target states. Our user study on FlockGPT confirmed that users had a high level of intuitive control over drone flocking. Subjects who had never controlled a drone swarm before were able to construct complex figures in just a few iterations and to accurately distinguish the figures formed by the swarm. The results revealed a high recognition rate for six different geometric patterns generated through the LLM-based interface and performed by a simulated drone flock (mean of 80%, with a maximum of 93% for the cube and tetrahedron patterns). Users reported low temporal demand (NASA-TLX score of 19.2), high performance (NASA-TLX score of 26), attractiveness (UEQ score of 1.94), and hedonic quality (UEQ score of 1.81) for the developed system. The FlockGPT demo code repository is coming soon.
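The abstract states that the target surface is defined by a signed distance function (SDF). The sketch below is not FlockGPT's controller. It is a hypothetical illustration of how an SDF can drive a flock. Each drone moves against the SDF gradient, scaled by its signed distance, so it is pulled onto the zero level set. Neighbours closer than a separation radius repel one another. The helpers `sphere_sdf` and `box_sdf`, the gains, and the radius are assumptions.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
SDF = Callable[[Vec3], float]


def sphere_sdf(p: Vec3, radius: float = 1.0) -> float:
    """Signed distance to a sphere centred at the origin (negative inside)."""
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - radius


def box_sdf(p: Vec3, half: Vec3 = (0.5, 0.5, 0.5)) -> float:
    """Signed distance to an axis-aligned box (a cube by default) centred at the origin."""
    q = [abs(p[i]) - half[i] for i in range(3)]
    outside = math.sqrt(sum(max(qi, 0.0) ** 2 for qi in q))
    inside = min(max(q), 0.0)
    return outside + inside


def sdf_gradient(sdf: SDF, p: Vec3, h: float = 1e-4) -> Vec3:
    """Central-difference estimate of the SDF gradient at p."""
    grad = []
    for i in range(3):
        plus = [p[0], p[1], p[2]]
        minus = [p[0], p[1], p[2]]
        plus[i] += h
        minus[i] -= h
        grad.append((sdf(tuple(plus)) - sdf(tuple(minus))) / (2 * h))
    return (grad[0], grad[1], grad[2])


def flock_step(positions: Sequence[Vec3], sdf: SDF, dt: float = 0.1,
               k_att: float = 1.0, k_sep: float = 0.05,
               sep_radius: float = 0.5) -> List[Vec3]:
    """One synchronous update: attraction to the SDF zero level set plus separation."""
    new_positions = []
    for i, p in enumerate(positions):
        d = sdf(p)
        g = sdf_gradient(sdf, p)
        # Attraction: step against the gradient, proportional to the signed distance.
        vel = [-k_att * d * g[k] for k in range(3)]
        # Separation: repel from neighbours closer than sep_radius (strength ~ 1/distance).
        for j, q in enumerate(positions):
            if i == j:
                continue
            diff = [p[k] - q[k] for k in range(3)]
            dist = math.sqrt(sum(x * x for x in diff))
            if 1e-9 < dist < sep_radius:
                for k in range(3):
                    vel[k] += k_sep * diff[k] / dist ** 2
        new_positions.append((p[0] + dt * vel[0], p[1] + dt * vel[1], p[2] + dt * vel[2]))
    return new_positions


drones = [(3.0, 0, 0), (-3.0, 0, 0), (0, 3.0, 0), (0, -3.0, 0), (0, 0, 3.0), (0, 0, -3.0)]
for _ in range(200):
    drones = flock_step(drones, sphere_sdf)
```

In this reading, the LLM's job would be to produce the SDF (for example, choosing `box_sdf` for a cube request). Switching the SDF between steps moves the swarm smoothly to the new target shape.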

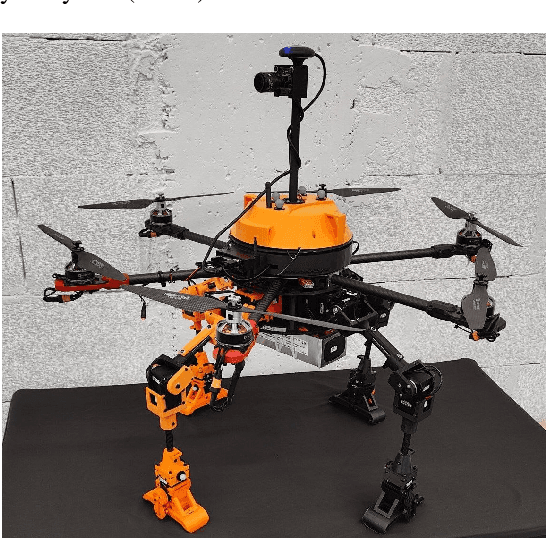

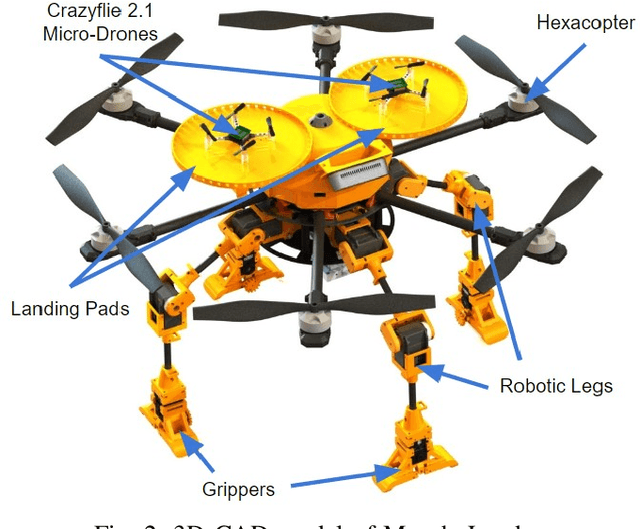

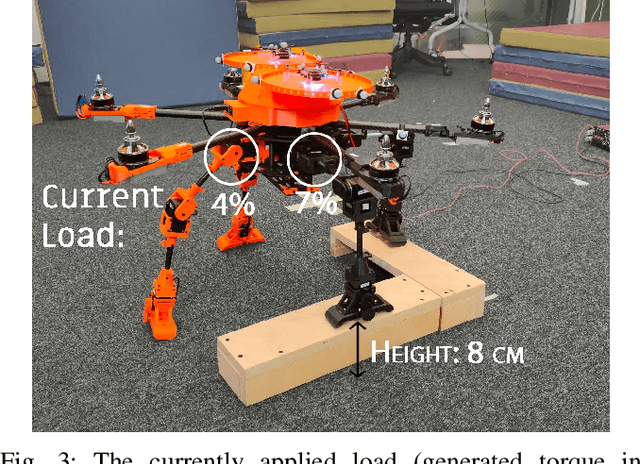

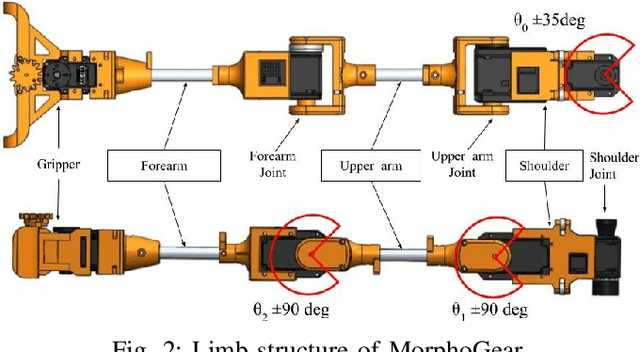

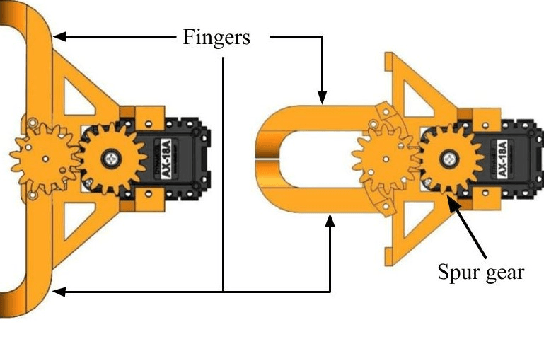

MorphoGear: An UAV with Multi-Limb Morphogenetic Gear for Rough-Terrain Locomotion

Mar 13, 2024

Abstract: Robots able to run, fly, and grasp have high potential to solve a wide range of tasks and navigate complex environments. Several mechatronic designs of such robots with adaptive morphologies are emerging. However, landing on an uneven surface, traversing rough terrain, and manipulating objects still present significant challenges. This paper introduces the design of MorphoGear, a novel rotor UAV with morphogenetic gear. It describes the robot's mechanics, electronics, and control architecture, as well as its walking behavior, and analyzes the experimental results. MorphoGear is able to fly, walk on surfaces with several gaits, and grasp objects with four compatible robotic limbs. The UAV uses robotic limbs with three degrees of freedom (DoFs) as pedipulators when walking or flying and as manipulators when performing actions in the environment. We performed a locomotion analysis of the robot's landing gear and developed three types of robot gaits. The experimental results revealed a low cross-track error for the most accurate gait (mean of 1.9 cm and max of 5.5 cm) and the ability of the drone to move with a 210 mm step length. Another gait also showed a low cross-track error (mean of 2.3 cm and max of 6.9 cm). The proposed MorphoGear system could potentially perform a wide range of tasks in environmental surveying, delivery, and high-altitude operations.
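Cross-track error is the perpendicular distance between the robot's measured position and the reference path. Below is a minimal sketch of how the mean and maximum values could be computed from logged 2D positions and a polyline path. The function names and the planar, metre-based coordinates are assumptions; the paper's exact evaluation procedure is not given here.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from p to the segment ab."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        return math.hypot(p[0] - a[0], p[1] - a[1])
    # Project p onto the infinite line, then clamp the projection to the segment.
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / seg_len2
    t = max(0.0, min(1.0, t))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def cross_track_stats(path: Sequence[Point], positions: Sequence[Point]) -> Tuple[float, float]:
    """Mean and max distance from each measured position to the reference polyline."""
    errors = [min(point_segment_distance(p, a, b) for a, b in zip(path, path[1:]))
              for p in positions]
    return sum(errors) / len(errors), max(errors)


mean_err, max_err = cross_track_stats([(0.0, 0.0), (1.0, 0.0)],
                                      [(0.2, 0.01), (0.5, -0.03), (0.9, 0.02)])
print(round(mean_err, 4), round(max_err, 4))  # → 0.02 0.03 (metres)
```

Taking the minimum over all segments handles paths with corners. Multiplying by 100 converts the results to centimetres, the unit used in the abstract.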

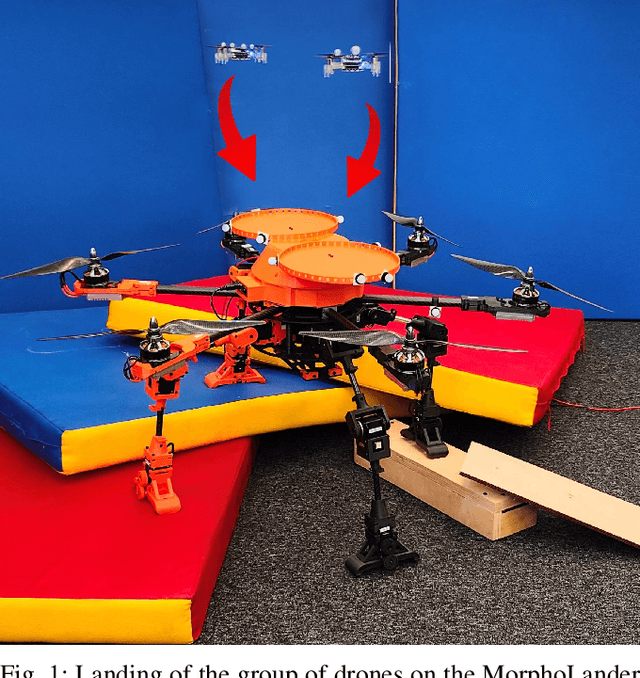

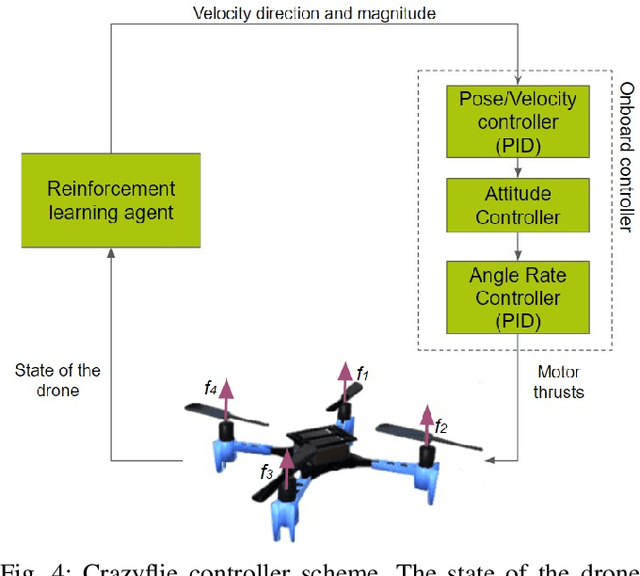

MorphoLander: Reinforcement Learning Based Landing of a Group of Drones on the Adaptive Morphogenetic UAV

Jul 28, 2023

Abstract: This paper focuses on MorphoLander, a novel robotic system representing a heterogeneous swarm of drones for exploring rough terrain. The morphogenetic leader drone is capable of landing on uneven terrain, traversing it, and maintaining a horizontal position to deploy smaller drones for extensive area exploration. After completing their tasks, these drones return and land back on MorphoGear's landing pads. A reinforcement learning algorithm was developed for precise landing of the drones on the leader robot, which either remains static during their mission or relocates to a new position. Several experiments were conducted to evaluate the performance of the developed landing algorithm on both even and uneven terrain. The experiments revealed high landing accuracy on the leader drone: 0.5 cm under even terrain conditions and 2.35 cm under uneven terrain conditions. MorphoLander has the potential to significantly enhance the efficiency of industrial inspections, seismic surveys, and rescue missions in highly cluttered and unstructured environments.
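The abstract says landing is learned with reinforcement learning but does not describe the state, action, or reward design. As a toy illustration only, the following tabular Q-learning sketch uses the follower's `(dx, dy)` grid offset from the leader's pad as the state. The actions are four horizontal moves plus a "land" action. The pad occasionally relocates, which shifts the offset. The grid size, reward values, relocation probability, and learning-rate schedule are all hypothetical. They bear no relation to the paper's continuous-control setup.

```python
import random

# Hypothetical discrete landing task: state = follower's (dx, dy) offset from the pad.
ACTIONS = [(1, 0), (-1, 0), (0, 1), (0, -1), None]  # None = descend and land
MAX_OFF = 3


def clip(v):
    return max(-MAX_OFF, min(MAX_OFF, v))


def env_step(state, action, rng, p_relocate):
    if action is None:
        # Landing ends the episode: positive reward only when directly above the pad.
        return state, (10.0 if state == (0, 0) else -10.0), True
    dx, dy = state[0] + action[0], state[1] + action[1]
    if rng.random() < p_relocate:
        # The leader relocates by one cell, shifting the relative offset.
        mx, my = rng.choice(ACTIONS[:4])
        dx, dy = dx - mx, dy - my
    return (clip(dx), clip(dy)), -1.0, False  # -1 per move rewards short approaches


def train(episodes=5000, gamma=0.9, eps=0.2, p_relocate=0.05, seed=0):
    rng = random.Random(seed)
    states = [(x, y) for x in range(-MAX_OFF, MAX_OFF + 1) for y in range(-MAX_OFF, MAX_OFF + 1)]
    q = {s: [0.0] * len(ACTIONS) for s in states}
    for ep in range(episodes):
        alpha = 0.5 - 0.45 * ep / episodes  # learning rate decays from 0.5 toward 0.05
        s = rng.choice(states)
        for _ in range(30):
            if rng.random() < eps:  # epsilon-greedy exploration
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[s][i])
            s2, r, done = env_step(s, ACTIONS[a], rng, p_relocate)
            target = r if done else r + gamma * max(q[s2])  # Q-learning target
            q[s][a] += alpha * (target - q[s][a])
            if done:
                break
            s = s2
    return q


def greedy_landing(q, start, max_steps=20):
    """Follow the greedy policy with a static pad; return the offset at touchdown."""
    s = start
    for _ in range(max_steps):
        a = max(range(len(ACTIONS)), key=lambda i: q[s][i])
        if ACTIONS[a] is None:
            return s
        s = (clip(s[0] + ACTIONS[a][0]), clip(s[1] + ACTIONS[a][1]))
    return None
```

After `q = train()`, calling `greedy_landing(q, start)` from any start offset should return `(0, 0)`, meaning the drone touches down on the pad. A physical system would instead use continuous states and a function approximator.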

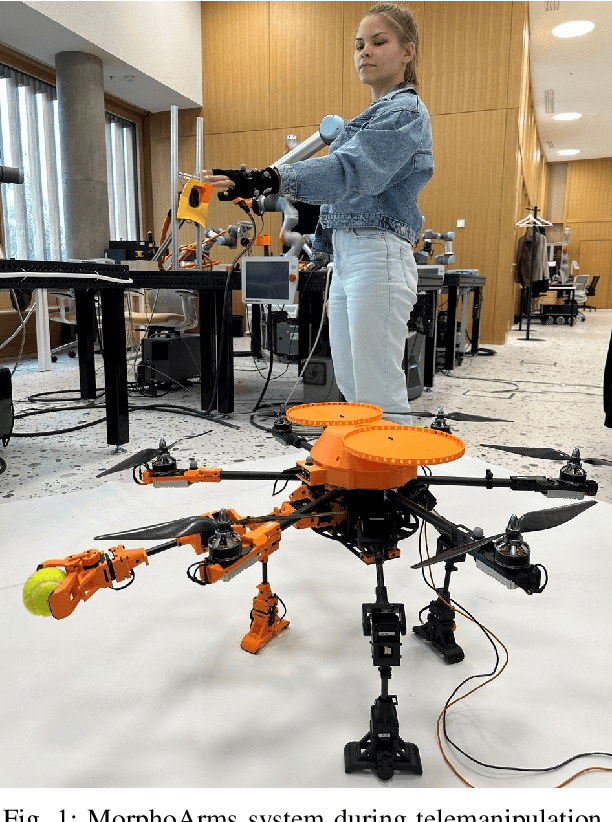

MorphoArms: Morphogenetic Teleoperation of Multimanual Robot

Jul 06, 2023

Abstract: Few unmanned aerial vehicles (UAVs) today can fly, walk, and grasp. A drone with all these functionalities could significantly improve performance in complex tasks such as monitoring and exploring different types of terrain, or rescue operations. This paper presents MorphoArms, a novel system that consists of a morphogenetic chassis and a hand gesture recognition teleoperation system. The mechanics, electronics, control architecture, and walking behavior of the morphogenetic chassis are described. The robot can walk and grasp objects using four robotic limbs. Robotic limbs with four degrees of freedom are used as pedipulators when walking and as manipulators when performing actions in the environment. The robot is teleoperated, with commands given by hand gestures. A motion capture system tracks the user's hands and recognizes their gestures. This control method was experimentally tested in a study involving 10 users. The evaluation included three questionnaires (NASA-TLX, SUS, and UEQ). The results showed that the proposed system was more user-friendly than 56% of the systems, and it was rated above average in terms of attractiveness, stimulation, and novelty.
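One of the three questionnaires, the System Usability Scale (SUS), has a fixed scoring rule. There are ten items answered on a 1 to 5 scale. Odd-numbered items contribute `response - 1`, even-numbered items contribute `5 - response`, and the sum is multiplied by 2.5 to give a 0 to 100 score. The sketch below implements that standard rule. How the paper aggregated scores across its 10 participants is not stated here, and the function name is an assumption.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """System Usability Scale score (0-100) from ten 1-5 Likert responses, item 1 first."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    total = 0
    for index, r in enumerate(responses):
        # Odd-numbered items (index 0, 2, ...) are positively worded: contribute r - 1.
        # Even-numbered items (index 1, 3, ...) are negatively worded: contribute 5 - r.
        total += (r - 1) if index % 2 == 0 else (5 - r)
    return total * 2.5


print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # → 75.0
```

A study-level score would typically be the mean of the per-participant scores.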

SwarmGear: Heterogeneous Swarm of Drones with Reconfigurable Leader Drone and Virtual Impedance Links for Multi-Robot Inspection

Apr 06, 2023

Abstract: Continuous monitoring by drone swarms remains a challenging problem due to the lack of power supply and the inability of drones to land on uneven surfaces. Heterogeneous swarms, including ground and aerial vehicles, can support longer inspections and carry more sensors on board. However, their capabilities are limited by the mobility of wheeled and legged robots in cluttered environments. In this paper, we propose a novel concept for autonomous inspection that we call SwarmGear. SwarmGear utilizes a heterogeneous swarm that investigates the environment in a leader-follower formation. The leader drone can land on rough terrain and traverse it using four compliant robotic legs, combining the functionalities of an aerial and a mobile robot. To preserve the formation of the swarm during motion, virtual impedance links were developed between the leader and the follower drones. We experimentally evaluated the accuracy of the hybrid leader drone's ground locomotion. By varying the step parameters, we found the optimal step configuration. Two types of gaits were evaluated. The experiments revealed low cross-track error (mean of 2 cm and max of 4.8 cm) and the ability of the leader drone to move with a 190 mm step length and a standard yaw deviation of 3 degrees. Four types of drone formations were considered. The best formation was used for experiments with SwarmGear, and it showed low overall cross-track error for the swarm (mean of 7.9 cm for the type 1 gait and 5.1 cm for the type 2 gait). The proposed system can potentially improve the performance of autonomous swarms in cluttered and unstructured environments. It allows all agents of the swarm to switch between aerial and ground formations, so they can overcome various obstacles and perform missions over a large area.
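A virtual impedance link is commonly modelled as a mass-spring-damper, m·ẍ + d·ẋ + k·x = F. Here x is the follower's deviation from its nominal formation slot, and F is a virtual force. Below is a minimal 1D sketch of that model, integrated with semi-implicit Euler. The parameter values, the class and function names, and the idea of adding the deviation to a leader-relative offset are assumptions, not SwarmGear's published parameters.

```python
from dataclasses import dataclass


@dataclass
class ImpedanceLink:
    """Hypothetical 1D virtual mass-spring-damper link between leader and follower."""
    mass: float = 1.0
    damping: float = 4.0
    stiffness: float = 10.0
    x: float = 0.0  # deviation of the follower from its nominal formation slot
    v: float = 0.0  # rate of change of that deviation

    def step(self, force: float, dt: float) -> float:
        # m*x'' + d*x' + k*x = F  ->  x'' = (F - d*x' - k*x) / m
        acc = (force - self.damping * self.v - self.stiffness * self.x) / self.mass
        self.v += acc * dt     # semi-implicit Euler: update velocity first,
        self.x += self.v * dt  # then position with the new velocity
        return self.x


def follower_setpoint(leader_pos: float, offset: float, link: ImpedanceLink) -> float:
    """Desired follower position: leader position + formation offset + impedance deviation."""
    return leader_pos + offset + link.x


link = ImpedanceLink()
for _ in range(2000):  # 20 s of simulated time with a constant 1 N virtual force
    link.step(1.0, 0.01)
print(round(link.x, 6))  # → 0.1, the static deflection F / k
```

The spring keeps the follower near its slot, and the damper smooths out sudden leader motions. That matches the abstract's goal of preserving formation during movement.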

Many Heads but One Brain: an Overview of Fusion Brain Challenge on AI Journey 2021

Nov 22, 2021

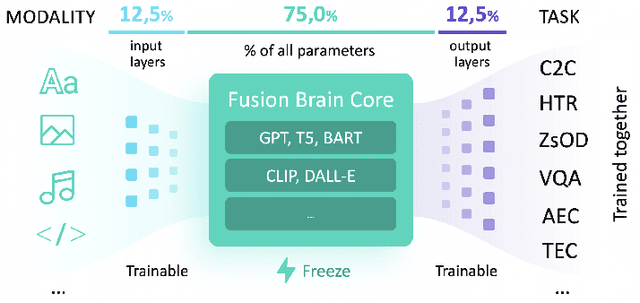

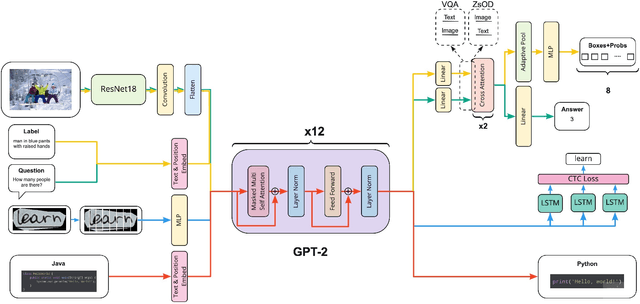

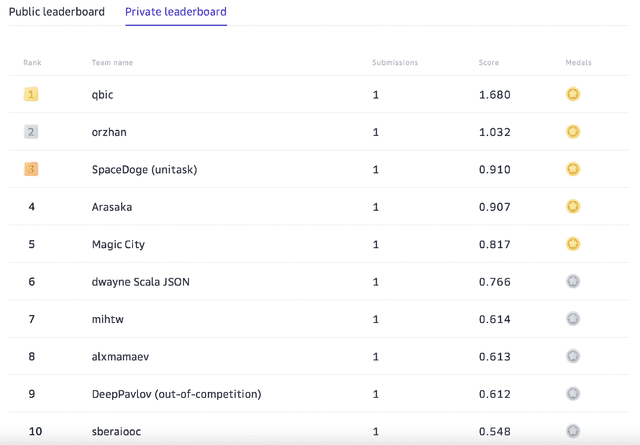

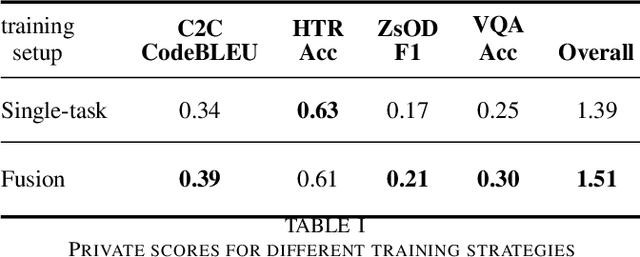

Abstract: Supporting the current trend in the AI community, we propose the AI Journey 2021 Challenge called Fusion Brain. It aims to build a universal architecture that processes different modalities (namely, images, texts, and code) and solves multiple vision and language tasks. The Fusion Brain Challenge (https://github.com/sberbank-ai/fusion_brain_aij2021) combines the following specific tasks: Code2code Translation, Handwritten Text Recognition, Zero-shot Object Detection, and Visual Question Answering. We have created a dataset for each task to test the participants' submissions. Moreover, we have released a new handwritten dataset in both Russian and English, which consists of 94,130 pairs of images and texts. The Russian part of the dataset is the largest Russian handwritten dataset in the world. We also propose a baseline solution and corresponding task-specific solutions, as well as overall metrics.
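The abstract does not name the metric used for Handwritten Text Recognition. A widely used choice for this task is the character error rate (CER): the Levenshtein edit distance between the reference and predicted strings, divided by the reference length. The sketch below implements CER as an illustration only. Whether the challenge scored HTR this way is an assumption, and the function names are hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution (free if equal)
        prev = cur
    return prev[-1]


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate of a recognized string against its reference."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return levenshtein(reference, hypothesis) / len(reference)


print(cer("рукопись", "рукапись"))  # → 0.125 (one substitution in eight characters)
```

Because Python strings are Unicode, the same code handles both the Russian and English parts of a bilingual handwriting dataset.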
