Alex Shonenkov

Sber AI, MIPT

RuCLIP -- new models and experiments: a technical report

Feb 22, 2022

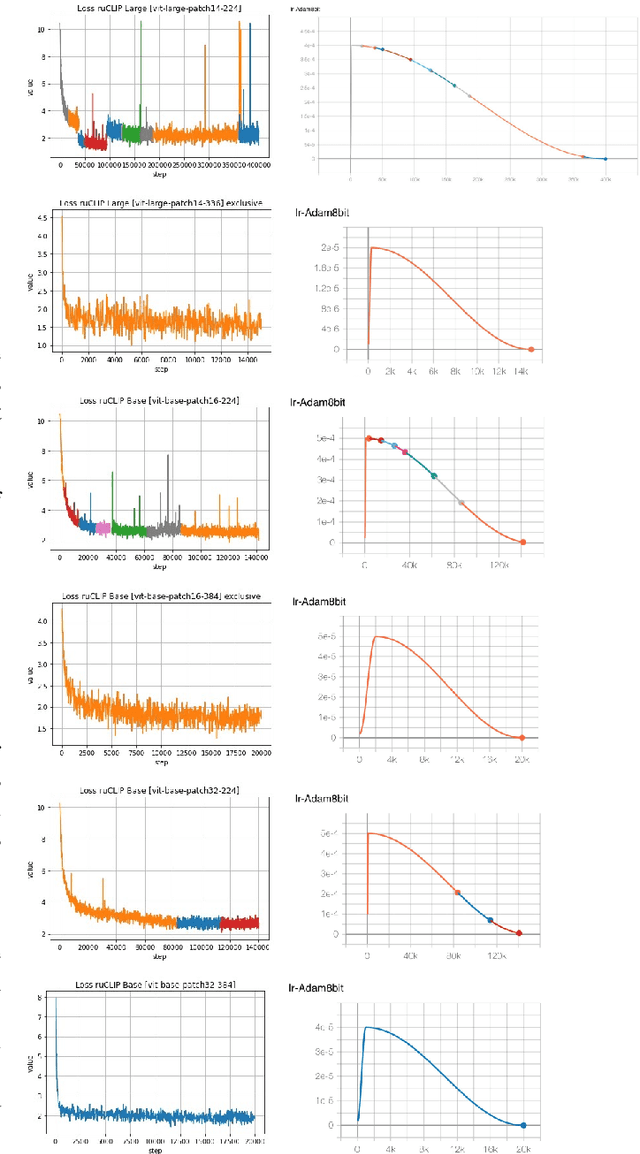

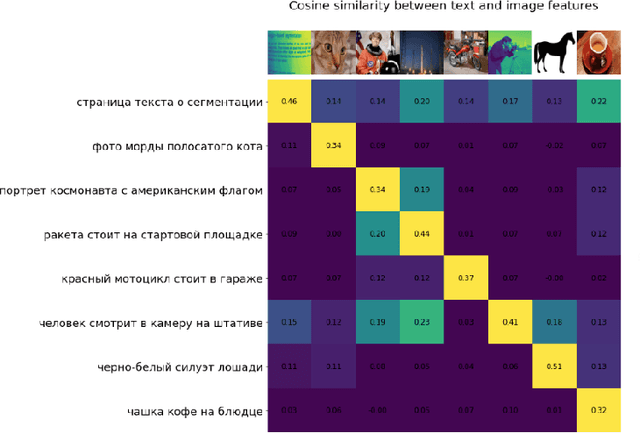

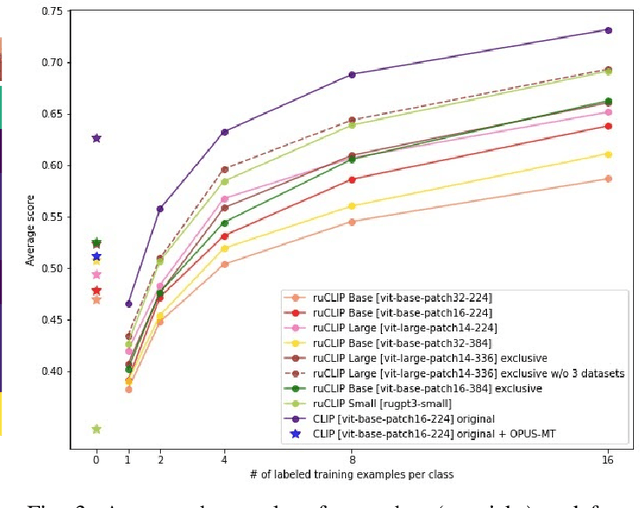

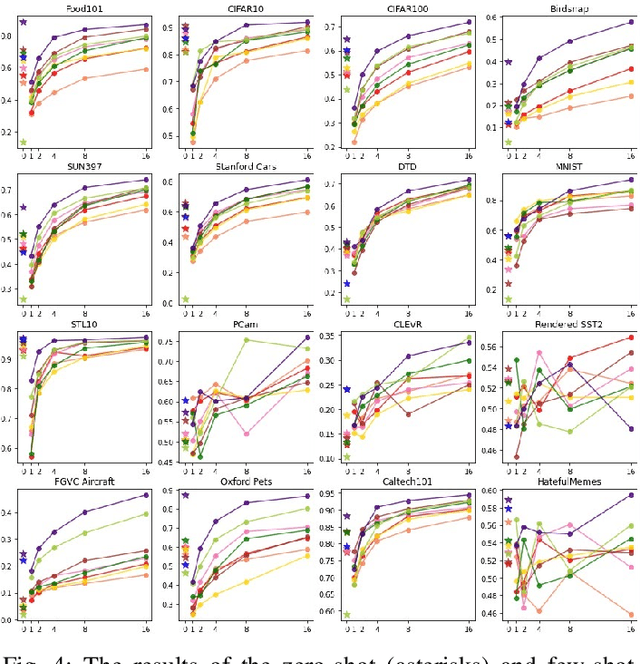

Abstract: In this report we propose six new implementations of the ruCLIP model trained on our 240M pairs. The accuracy results are compared with the original CLIP model with Ru-En translation (OPUS-MT) on 16 datasets from different domains. Our best implementations outperform the CLIP + OPUS-MT solution on most of the datasets in few-shot and zero-shot tasks. We briefly describe the implementations and concentrate on the conducted experiments. An inference execution time comparison is also presented.

Few-Bit Backward: Quantized Gradients of Activation Functions for Memory Footprint Reduction

Feb 02, 2022

Abstract: Memory footprint is one of the main limiting factors for large neural network training. In backpropagation, one needs to store the input to each operation in the computational graph. Every modern neural network model has quite a few pointwise nonlinearities in its architecture, and such operations induce additional memory costs which, as we show, can be significantly reduced by quantization of the gradients. We propose a systematic approach to compute optimal quantization of the retained gradients of the pointwise nonlinear functions with only a few bits per element. We show that such an approximation can be achieved by computing the optimal piecewise-constant approximation of the derivative of the activation function, which can be done by dynamic programming. The drop-in replacements are implemented for all popular nonlinearities and can be used in any existing pipeline. We confirm the memory reduction and the same convergence on several open benchmarks.
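The dynamic-programming step mentioned in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the derivative is sampled on a uniform grid with equal weights and that the error is measured in least squares, and the function name `piecewise_constant_fit` is hypothetical. Given `num_levels` allowed values (for example 4 levels for 2 bits), it finds the contiguous segments and per-segment constants that minimize the total squared error.

```python
import numpy as np


def piecewise_constant_fit(y, num_levels):
    """Optimal least-squares fit of y by num_levels contiguous constant pieces.

    Hypothetical helper: assumes uniform weighting of the samples.
    Returns (bounds, levels, error), where piece k covers y[bounds[k]:bounds[k+1]].
    """
    y = np.asarray(y, dtype=np.float64)
    n = len(y)
    if not 1 <= num_levels <= n:
        raise ValueError("num_levels must be between 1 and len(y)")

    # Prefix sums give the cost of any segment in O(1).
    s1 = np.concatenate(([0.0], np.cumsum(y)))
    s2 = np.concatenate(([0.0], np.cumsum(y * y)))

    def cost(i, j):
        # Squared error of replacing y[i:j] by its mean.
        seg_sum = s1[j] - s1[i]
        return (s2[j] - s2[i]) - seg_sum * seg_sum / (j - i)

    # best[m, j]: minimal error of covering y[:j] with exactly m pieces.
    best = np.full((num_levels + 1, n + 1), np.inf)
    prev = np.zeros((num_levels + 1, n + 1), dtype=int)
    best[0, 0] = 0.0
    for m in range(1, num_levels + 1):
        for j in range(m, n + 1):
            for i in range(m - 1, j):
                c = best[m - 1, i] + cost(i, j)
                if c < best[m, j]:
                    best[m, j] = c
                    prev[m, j] = i

    # Backtrack the segment boundaries from the last piece to the first.
    bounds = [n]
    j = n
    for m in range(num_levels, 0, -1):
        j = int(prev[m, j])
        bounds.append(j)
    bounds.reverse()

    levels = [float((s1[b] - s1[a]) / (b - a))
              for a, b in zip(bounds[:-1], bounds[1:])]
    return bounds, levels, float(best[num_levels, n])


# Example: 2-bit (4-level) approximation of the SiLU derivative on [-6, 6].
x = np.linspace(-6.0, 6.0, 200)
sig = 1.0 / (1.0 + np.exp(-x))
silu_grad = sig * (1.0 + x * (1.0 - sig))
bounds, levels, err = piecewise_constant_fit(silu_grad, num_levels=4)
print("segment edges:", [round(float(x[b]), 2) for b in bounds[:-1]])
print("levels:", [round(v, 3) for v in levels])
```

In such a scheme, the forward pass would only need to store the index of the segment each input falls into (2 bits per element here), and the backward pass would multiply the incoming gradient by the corresponding level. The triple loop costs O(num_levels · n²), which is acceptable because the fit is computed once per activation function rather than during training.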

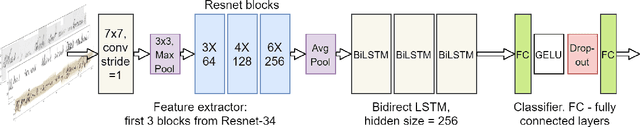

Handwritten text generation and strikethrough characters augmentation

Dec 14, 2021

Abstract: We introduce two data augmentation techniques which, used with a Resnet-BiLSTM-CTC network, significantly reduce Word Error Rate (WER) and Character Error Rate (CER) beyond the best-reported results on handwritten text recognition (HTR) tasks. We apply a novel augmentation that simulates strikethrough text (HandWritten Blots) and a handwritten text generation method based on printed text (StackMix), which proved to be very effective in HTR tasks. StackMix uses a weakly supervised framework to obtain character boundaries. Because these data augmentation techniques are independent of the network used, they could also be applied to enhance the performance of other networks and approaches to HTR. Extensive experiments on ten handwritten text datasets show that HandWritten Blots augmentation and StackMix significantly improve the quality of HTR models.

Emojich -- zero-shot emoji generation using Russian language: a technical report

Dec 04, 2021

Abstract: This technical report presents a text-to-image neural network, "Emojich", that generates emojis using captions in the Russian language as a condition. We aim to keep the generalization ability of the large pretrained model ruDALL-E Malevich (XL), with 1.3B parameters, at the fine-tuning stage while giving a special style to the generated images. We present some engineering methods, the code implementation, all hyperparameters needed to reproduce the results, and a Telegram bot where everyone can create their own customized sets of stickers. Some newly generated emojis obtained by the "Emojich" model are also demonstrated.

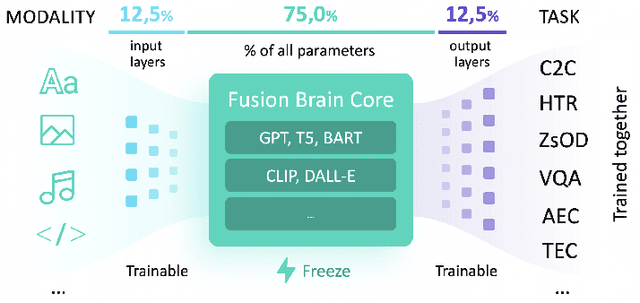

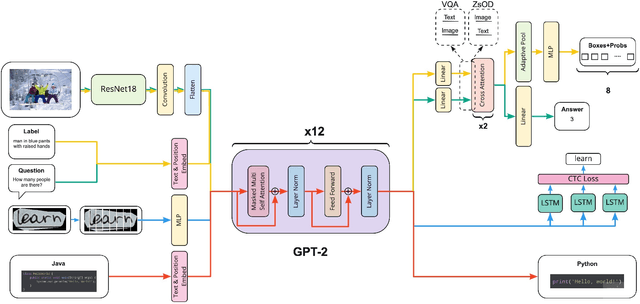

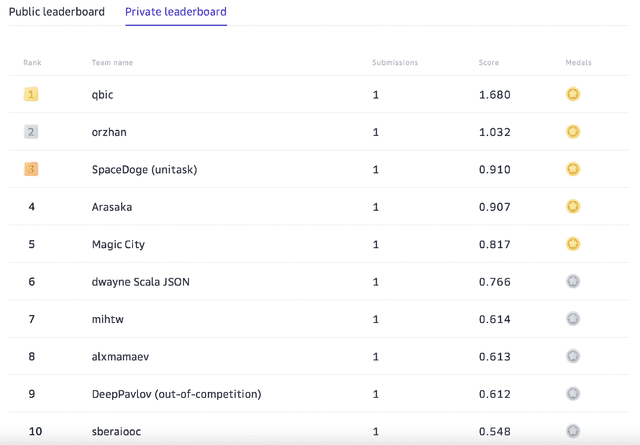

Many Heads but One Brain: an Overview of Fusion Brain Challenge on AI Journey 2021

Nov 22, 2021

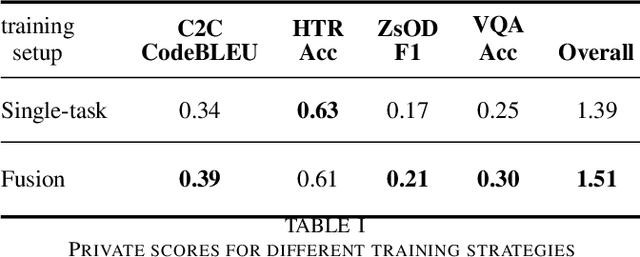

Abstract: Supporting the current trend in the AI community, we propose the AI Journey 2021 Challenge called Fusion Brain, which aims to make a universal architecture process different modalities (namely images, text, and code) and solve multiple tasks for vision and language. The Fusion Brain Challenge (https://github.com/sberbank-ai/fusion_brain_aij2021) combines the following specific tasks: Code2code Translation, Handwritten Text Recognition, Zero-shot Object Detection, and Visual Question Answering. We have created datasets for each task to test the participants' submissions. Moreover, we have released a new handwritten dataset in both Russian and English, which consists of 94,130 pairs of images and texts. The Russian part of the dataset is the largest Russian handwritten dataset in the world. We also propose a baseline solution and corresponding task-specific solutions, as well as overall metrics.

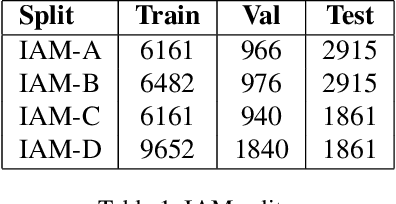

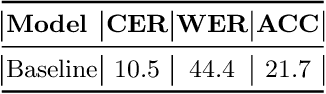

StackMix and Blot Augmentations for Handwritten Text Recognition

Aug 26, 2021

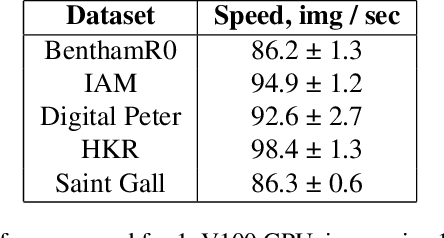

Abstract: This paper proposes a handwritten text recognition (HTR) system that outperforms current state-of-the-art methods. The comparison was carried out on three of the datasets most frequently used in HTR tasks, namely Bentham, IAM, and Saint Gall. In addition, results on two recently presented datasets, Peter the Great's manuscripts and the HKR Dataset, are provided. The paper describes the architecture of the neural network and two ways of increasing the volume of training data: an augmentation that simulates strikethrough text (HandWritten Blots) and a new text generation method (StackMix), which proved to be very effective in HTR tasks. StackMix can also be applied to the standalone task of generating handwritten text based on printed text.

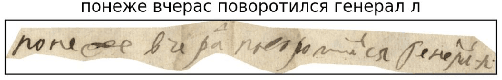

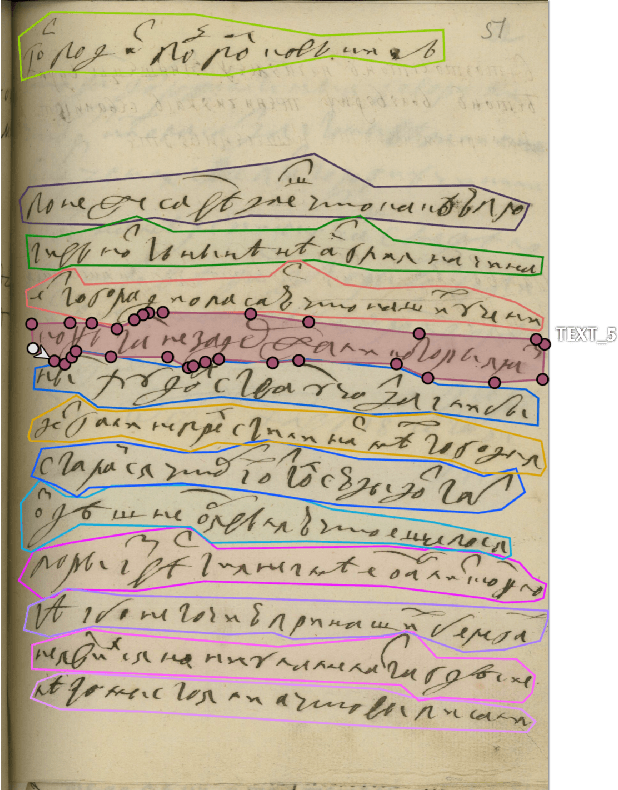

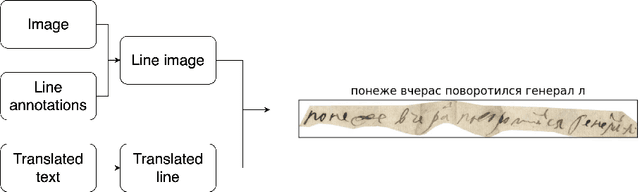

Digital Peter: Dataset, Competition and Handwriting Recognition Methods

Mar 16, 2021

Abstract: This paper presents a new dataset of Peter the Great's manuscripts and describes a segmentation procedure that converts the initial document images into lines. The new dataset may be useful to researchers for training handwritten text recognition models and as a benchmark for comparing different models. It consists of 9,694 images and text files corresponding to lines in historical documents. The open machine learning competition Digital Peter was held based on this dataset. The baseline solution for this competition, as well as more advanced methods for handwritten text recognition, are described in the article. The full dataset and all code are publicly available.
