Aleksey Fedoseev

DiffusionCinema: Text-to-Aerial Cinematography

Jan 24, 2026

Abstract: We propose a novel unmanned aerial vehicle (UAV)-assisted creative capture system that leverages diffusion models to interpret high-level natural language prompts and automatically generate optimal flight trajectories for cinematic video recording. Instead of manually piloting the drone, the user simply describes the desired shot (e.g., "orbit around me slowly from the right and reveal the background waterfall"). Our system encodes the prompt along with an initial visual snapshot from the onboard camera, and a diffusion model samples plausible spatio-temporal motion plans that satisfy both the scene geometry and the shot semantics. The generated flight trajectory is then executed autonomously by the UAV to record smooth, repeatable video clips that match the prompt. A user evaluation using NASA-TLX showed a significantly lower overall workload with our interface (M = 21.6) than with a traditional remote controller (M = 58.1), demonstrating a substantial reduction in perceived effort. Mental demand (M = 11.5 vs. 60.5) and frustration (M = 14.0 vs. 54.5) were also markedly lower for our system, confirming clear usability advantages in autonomous text-driven flight control. This project demonstrates a new interaction paradigm: text-to-cinema flight, where diffusion models act as the "creative operator" converting story intentions directly into aerial motion.

SwarmVLM: VLM-Guided Impedance Control for Autonomous Navigation of Heterogeneous Robots in Dynamic Warehousing

Aug 11, 2025

Abstract: With the growing demand for efficient logistics, unmanned aerial vehicles (UAVs) are increasingly being paired with automated guided vehicles (AGVs). While UAVs can navigate through dense environments and at varying altitudes, they are limited by battery life, payload capacity, and flight duration, necessitating coordinated ground support. Focusing on heterogeneous navigation, SwarmVLM addresses these limitations by enabling semantic collaboration between UAVs and ground robots through impedance control. The system leverages a Vision-Language Model (VLM) and Retrieval-Augmented Generation (RAG) to adjust impedance control parameters in response to environmental changes. In this framework, the UAV acts as a leader using Artificial Potential Field (APF) planning for real-time navigation, while the ground robot follows via virtual impedance links with adaptive link topology to avoid collisions with short obstacles. The system demonstrated a 92% success rate across 12 real-world trials. Under optimal lighting conditions, the VLM-RAG framework achieved 8% accuracy in object detection and selection of impedance parameters. The mobile robot prioritized short obstacle avoidance, occasionally resulting in a lateral deviation of up to 50 cm from the UAV path, which showcases safe navigation in a cluttered setting.
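As a rough illustration of what "impedance control parameters" means here, a virtual impedance link is commonly modeled as a mass-spring-damper that pulls the follower back toward its desired position relative to the leader. The sketch below is a one-dimensional toy model and not the authors' implementation; the class name `ImpedanceLink`, the helper `settle`, and all numeric values are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ImpedanceLink:
    """Hypothetical 1-D virtual link: m*x'' + d*x' + k*x = F_ext."""
    mass: float        # virtual mass m
    damping: float     # virtual damping d
    stiffness: float   # virtual stiffness k
    offset: float = 0.0    # follower displacement from its desired spot
    velocity: float = 0.0  # rate of change of that displacement

    def step(self, external_force: float, dt: float) -> float:
        # Semi-implicit Euler: update velocity first, then position.
        accel = (external_force
                 - self.damping * self.velocity
                 - self.stiffness * self.offset) / self.mass
        self.velocity += accel * dt
        self.offset += self.velocity * dt
        return self.offset


def settle(link: ImpedanceLink, seconds: float, dt: float = 0.01) -> float:
    """Let the link relax with no external force and return the final offset."""
    for _ in range(int(round(seconds / dt))):
        link.step(0.0, dt)
    return link.offset


# A soft and a stiff link, both critically damped (d = 2*sqrt(k*m)).
soft = ImpedanceLink(mass=1.0, damping=2.0, stiffness=1.0, offset=1.0)
stiff = ImpedanceLink(mass=1.0, damping=4.0, stiffness=4.0, offset=1.0)
soft_after_2s = settle(soft, 2.0)
stiff_after_2s = settle(stiff, 2.0)
```

In this toy model, a higher stiffness makes the follower return to the leader's path faster, while a softer link tolerates larger deviations; a parameter-selection module would choose between such settings depending on the scene.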

NMPC-Lander: Nonlinear MPC with Barrier Function for UAV Landing on a Mobile Platform

May 06, 2025

Abstract: Quadcopters are versatile aerial robots gaining popularity in numerous critical applications. However, their operational effectiveness is constrained by limited battery life and restricted flight range. To address these challenges, autonomous drone landing on stationary or mobile charging and battery-swapping stations has become an essential capability. In this study, we present NMPC-Lander, a novel control architecture that integrates Nonlinear Model Predictive Control (NMPC) with Control Barrier Functions (CBF) to achieve precise and safe autonomous landing on both static and dynamic platforms. Our approach employs NMPC for accurate trajectory tracking and landing, while simultaneously incorporating CBF to ensure collision avoidance with static obstacles. Experimental evaluations on real hardware demonstrate high precision in landing scenarios, with an average final position error of 9.0 cm and 11 cm for stationary and mobile platforms, respectively. Notably, NMPC-Lander outperforms the B-spline method combined with A* planning by nearly threefold in terms of position tracking, underscoring its superior robustness and practical effectiveness.
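To make the role of a Control Barrier Function concrete, the sketch below applies a CBF as a minimal-change safety filter for a single circular obstacle. It is a simplification and not the NMPC-Lander formulation: it assumes a 2-D single-integrator model (velocity is commanded directly) and uses the closed-form solution for one constraint instead of embedding the constraint in an NMPC problem. The function name `cbf_filter` and the gain `alpha` are illustrative assumptions.

```python
def cbf_filter(p, u_nom, center, radius, alpha=1.0):
    """Return the velocity closest to u_nom that satisfies one CBF constraint.

    Barrier: h(p) = ||p - center||^2 - radius^2 (h >= 0 means safe).
    Constraint for p' = u:  grad_h . u + alpha * h >= 0.
    """
    dx, dy = p[0] - center[0], p[1] - center[1]
    h = dx * dx + dy * dy - radius ** 2
    grad = (2.0 * dx, 2.0 * dy)
    slack = grad[0] * u_nom[0] + grad[1] * u_nom[1] + alpha * h
    if slack >= 0.0:
        return u_nom  # nominal command is already safe
    norm2 = grad[0] ** 2 + grad[1] ** 2
    if norm2 == 0.0:
        return u_nom  # degenerate case: exactly at the obstacle center
    # Project u_nom onto the constraint boundary (single-constraint QP).
    k = slack / norm2
    return (u_nom[0] - k * grad[0], u_nom[1] - k * grad[1])


# Head-on approach toward an obstacle of radius 1 at the origin.
p = (-2.0, 0.0)
min_h = float("inf")
for _ in range(2000):
    u = cbf_filter(p, (1.0, 0.0), (0.0, 0.0), 1.0)
    p = (p[0] + 0.01 * u[0], p[1] + 0.01 * u[1])
    min_h = min(min_h, p[0] ** 2 + p[1] ** 2 - 1.0)
```

In this example the filter slows the approach so that the barrier value never becomes negative; the nominal command passes through unchanged whenever it already satisfies the constraint.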

AttentionSwarm: Reinforcement Learning with Attention Control Barrier Function for Crazyflie Drones in Dynamic Environments

Mar 10, 2025

Abstract: We introduce AttentionSwarm, a novel benchmark designed to evaluate safe and efficient swarm control across three challenging environments: a landing environment with obstacles, a competitive drone game setting, and a dynamic drone racing scenario. Central to our approach is the Attention Model Based Control Barrier Function (CBF) framework, which integrates attention mechanisms with safety-critical control theory to enable real-time collision avoidance and trajectory optimization. This framework dynamically prioritizes critical obstacles and agents in the swarm's vicinity using attention weights, while CBFs formally guarantee safety by enforcing collision-free constraints. The safe attention net algorithm was developed and evaluated using a swarm of Crazyflie 2.1 micro quadrotors, which were tested indoors with the Vicon motion capture system to ensure precise localization and control. Experimental results show that our system achieves landing accuracy of 3.02 cm with a mean time of 23 s and collision-free landings in a dynamic landing environment, 100% and collision-free navigation in a drone game environment, and 95% and collision-free navigation for a dynamic multiagent drone racing environment, underscoring its effectiveness and robustness in real-world scenarios. This work offers a promising foundation for applications in dynamic environments where safety and speed are paramount.

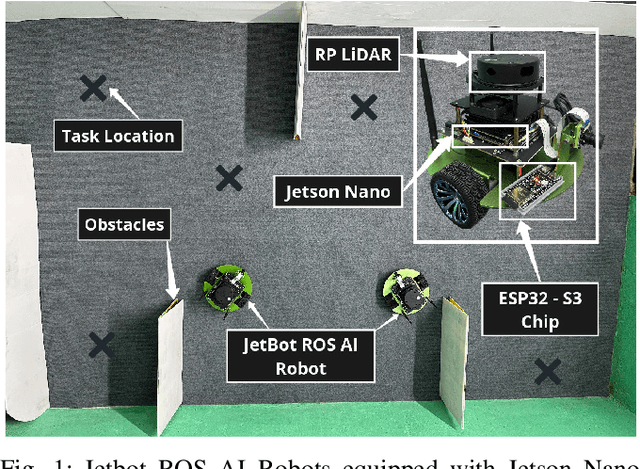

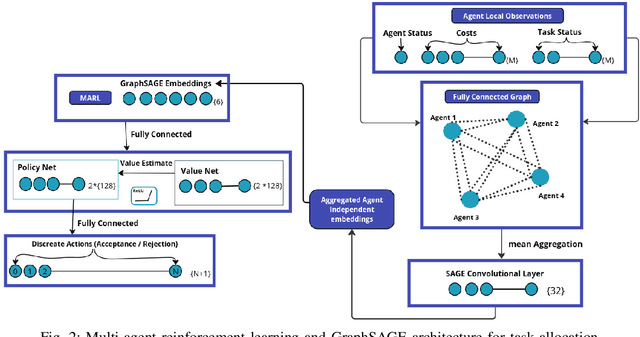

HIPPO-MAT: Decentralized Task Allocation Using GraphSAGE and Multi-Agent Deep Reinforcement Learning

Mar 08, 2025

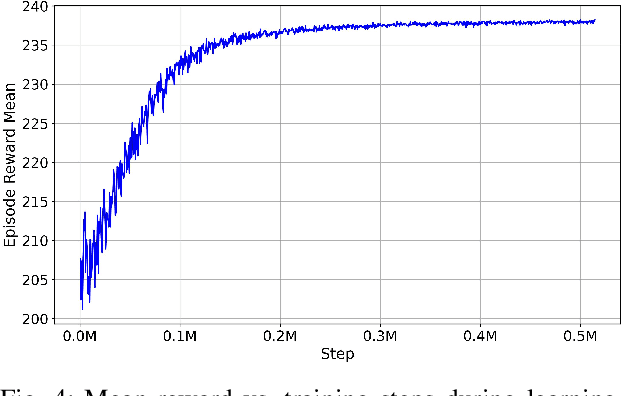

Abstract: This paper tackles decentralized continuous task allocation in heterogeneous multi-agent systems. We present HIPPO-MAT, a novel framework that integrates graph neural networks (GNNs) employing a GraphSAGE architecture to compute independent embeddings on each agent with an Independent Proximal Policy Optimization (IPPO) approach for multi-agent deep reinforcement learning. In our system, unmanned aerial vehicles (UAVs) and unmanned ground vehicles (UGVs) share aggregated observation data via communication channels while independently processing these inputs to generate enriched state embeddings. This design enables dynamic, cost-optimal, conflict-aware task allocation in a 3D grid environment without the need for centralized coordination. A modified A* path planner is incorporated for efficient routing and collision avoidance. Simulation experiments demonstrate scalability with up to 30 agents, and preliminary real-world validation on JetBot ROS AI Robots, each running its model on a Jetson Nano and communicating through the ESP-NOW protocol using an ESP32-S3, confirms the practical viability of the approach, which incorporates simultaneous localization and mapping (SLAM). Experimental results revealed that our method achieves a high 92.5% conflict-free success rate, with only a 16.49% performance gap compared to the centralized Hungarian method, while outperforming the heuristic decentralized baseline based on a greedy approach. Additionally, the framework exhibits scalability with up to 30 agents with an allocation processing time of 0.32 simulation steps and robustness in responding to dynamically generated tasks.

ImpedanceGPT: VLM-driven Impedance Control of Swarm of Mini-drones for Intelligent Navigation in Dynamic Environment

Mar 04, 2025

Abstract: Swarm robotics plays a crucial role in enabling autonomous operations in dynamic and unpredictable environments. However, a major challenge remains: ensuring safe and efficient navigation in environments filled with both living dynamic obstacles (e.g., humans) and inanimate dynamic obstacles (e.g., non-living objects). In this paper, we propose ImpedanceGPT, a novel system that combines a Vision-Language Model (VLM) with retrieval-augmented generation (RAG) to enable real-time reasoning for adaptive navigation of mini-drone swarms in complex environments. The key innovation of ImpedanceGPT lies in the integration of VLM and RAG, which provides the drones with enhanced semantic understanding of their surroundings. This enables the system to dynamically adjust impedance control parameters in response to obstacle types and environmental conditions. Our approach not only ensures safe and precise navigation but also improves coordination between drones in the swarm. Experimental evaluations demonstrate the effectiveness of the system. The VLM-RAG framework achieved an obstacle detection and retrieval accuracy of 80% under optimal lighting. In static environments, drones navigated dynamic inanimate obstacles at 1.4 m/s but slowed to 0.7 m/s with increased separation around humans. In dynamic environments, speed adjusted to 1.0 m/s near hard obstacles, while reducing to 0.6 m/s with higher deflection to safely avoid moving humans.

AgilePilot: DRL-Based Drone Agent for Real-Time Motion Planning in Dynamic Environments by Leveraging Object Detection

Feb 10, 2025

Abstract: Autonomous drone navigation in dynamic environments remains a critical challenge, especially when dealing with unpredictable scenarios including fast-moving objects with rapidly changing goal positions. While traditional planners and classical optimisation methods have been extensively used to address this dynamic problem, they often face real-time, unpredictable changes that ultimately lead to sub-optimal performance in terms of adaptiveness and real-time decision making. In this work, we propose a novel motion planner, AgilePilot, based on Deep Reinforcement Learning (DRL) that is trained in dynamic conditions, coupled with real-time Computer Vision (CV) for object detection during flight. The training-to-deployment framework bridges the Sim2Real gap, leveraging sophisticated reward structures that promote both safety and agility depending on environment conditions. The system can rapidly adapt to changing environments while achieving a maximum speed of 3.0 m/s in real-world scenarios. In comparison, our approach outperforms classical algorithms such as an Artificial Potential Field (APF) based motion planner by a factor of three, both in performance and in tracking accuracy of dynamic targets, by using velocity predictions, while exhibiting a 90% success rate in 75 conducted experiments. This work highlights the effectiveness of DRL in tackling real-time dynamic navigation challenges, offering intelligent safety and agility.

HetSwarm: Cooperative Navigation of Heterogeneous Swarm in Dynamic and Dense Environments through Impedance-based Guidance

Feb 10, 2025

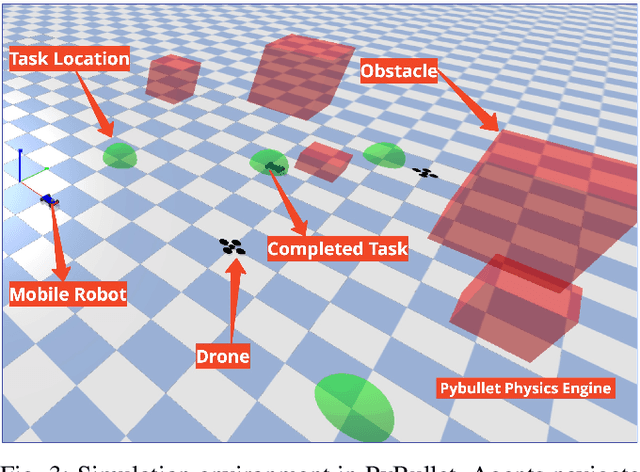

Abstract: With the growing demand for efficient logistics and warehouse management, unmanned aerial vehicles (UAVs) are emerging as a valuable complement to automated guided vehicles (AGVs). UAVs enhance efficiency by navigating dense environments and operating at varying altitudes. However, their limited flight time, battery life, and payload capacity necessitate a supporting ground station. To address these challenges, we propose HetSwarm, a heterogeneous multi-robot system that combines a UAV and a mobile ground robot for collaborative navigation in cluttered and dynamic conditions. Our approach employs an artificial potential field (APF)-based path planner for the UAV, allowing it to dynamically adjust its trajectory in real time. The ground robot follows this path while maintaining connectivity through impedance links, ensuring stable coordination. Additionally, the ground robot establishes temporal impedance links with low-height ground obstacles to avoid local collisions, as these obstacles do not interfere with the UAV's flight. Experimental validation of HetSwarm in diverse environmental conditions demonstrated a 90% success rate across 30 test cases. The ground robot exhibited an average deviation of 45 cm near obstacles, confirming effective collision avoidance. Extensive simulations in the Gym PyBullet environment further validated the robustness of our system for real-world applications, demonstrating its potential for dynamic, real-time task execution in cluttered environments.
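For readers unfamiliar with artificial potential field planning, the following is a minimal 2-D sketch of the classic attractive/repulsive formulation. It is not the HetSwarm planner; the function names `apf_force` and `apf_step`, the gains `k_att` and `k_rep`, the influence radius, and the speed cap are all illustrative assumptions.

```python
import math


def apf_force(pos, goal, obstacles, k_att=1.0, k_rep=0.1, influence=1.0):
    """Sum of an attractive pull toward the goal and repulsion from obstacles."""
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        dist = math.hypot(dx, dy)
        # Obstacles repel only inside their influence radius.
        if 0.0 < dist < influence:
            mag = k_rep * (1.0 / dist - 1.0 / influence) / dist ** 2
            fx += mag * dx / dist
            fy += mag * dy / dist
    return fx, fy


def apf_step(pos, goal, obstacles, dt=0.05, max_speed=1.0):
    """Move one time step along the force direction, with a speed cap."""
    fx, fy = apf_force(pos, goal, obstacles)
    speed = math.hypot(fx, fy)
    if speed > max_speed:
        fx, fy = fx * max_speed / speed, fy * max_speed / speed
    return (pos[0] + fx * dt, pos[1] + fy * dt)


# With an obstacle directly ahead, the net pull toward the goal is reduced.
free_force = apf_force((0.0, 0.0), (1.0, 0.0), [])
blocked_force = apf_force((0.0, 0.0), (1.0, 0.0), [(0.5, 0.0)])
```

Because the force is recomputed from the current positions at every step, the trajectory adapts when obstacles move. A known limitation of this basic formulation is that it can stall in local minima, which practical planners have to handle separately.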

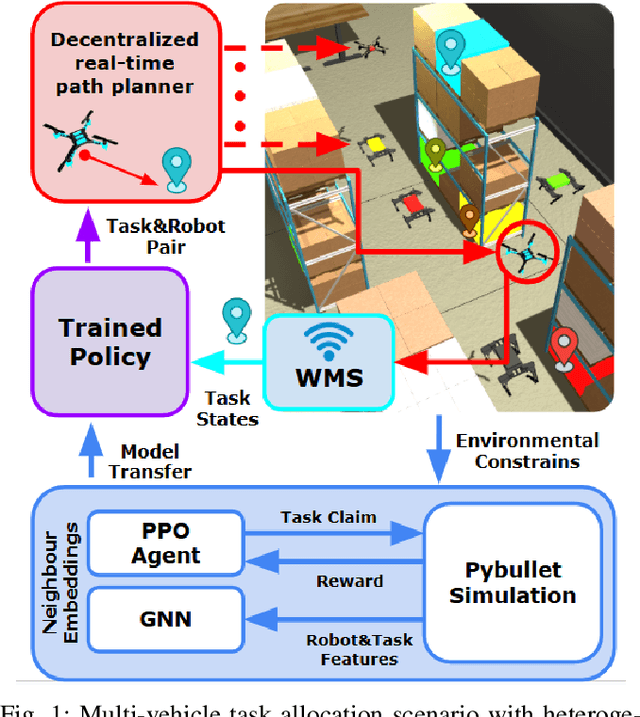

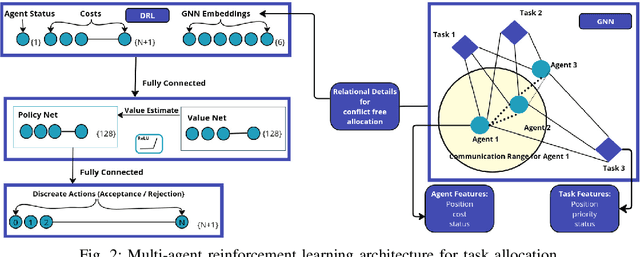

MAGNNET: Multi-Agent Graph Neural Network-based Efficient Task Allocation for Autonomous Vehicles with Deep Reinforcement Learning

Feb 04, 2025

Abstract: This paper addresses the challenge of decentralized task allocation within heterogeneous multi-agent systems operating under communication constraints. We introduce a novel framework that integrates graph neural networks (GNNs) with a centralized training and decentralized execution (CTDE) paradigm, further enhanced by a tailored Proximal Policy Optimization (PPO) algorithm for multi-agent deep reinforcement learning (MARL). Our approach enables unmanned aerial vehicles (UAVs) and unmanned ground vehicles (UGVs) to dynamically allocate tasks efficiently without necessitating central coordination in a 3D grid environment. The framework minimizes total travel time while simultaneously avoiding conflicts in task assignments. For the cost calculation and routing, we employ reservation-based A* and R* path planners. Experimental results revealed that our method achieves a high 92.5% conflict-free success rate, with only a 7.49% performance gap compared to the centralized Hungarian method, while outperforming the heuristic decentralized baseline based on a greedy approach. Additionally, the framework exhibits scalability with up to 20 agents with an allocation processing time of 2.8 s and robustness in responding to dynamically generated tasks, underscoring its potential for real-world applications in complex multi-agent scenarios.
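The comparison against a centralized optimum can be illustrated with a small assignment problem. The sketch below is not taken from the paper: it finds the optimal assignment by brute force over permutations (which yields the same minimum-cost result the Hungarian method would, but only scales to tiny instances), runs a simple sequential greedy baseline, and reports the gap as a percentage of the optimal cost. The abstract does not define how its performance gap is computed, so that formula, the function names, and the cost matrix are assumptions.

```python
from itertools import permutations


def optimal_cost(cost):
    """Minimum total cost over all one-to-one agent/task assignments."""
    n = len(cost)
    return min(
        sum(cost[agent][task] for agent, task in enumerate(perm))
        for perm in permutations(range(n))
    )


def greedy_cost(cost):
    """Each agent, in order, takes its cheapest still-unassigned task."""
    free = set(range(len(cost)))
    total = 0
    for row in cost:
        task = min(sorted(free), key=lambda t: row[t])
        free.remove(task)
        total += row[task]
    return total


def gap_percent(method_cost, best_cost):
    """Relative excess cost of a method over the optimum, in percent."""
    return 100.0 * (method_cost - best_cost) / best_cost


# Rows are agents, columns are tasks, entries are illustrative travel costs.
costs = [
    [4, 5, 8],
    [4, 9, 7],
    [6, 3, 2],
]
best = optimal_cost(costs)
greedy = greedy_cost(costs)
gap = gap_percent(greedy, best)
```

Here the greedy baseline pays more than the optimum because the first agent claims a task that a later agent needed more; a learned decentralized policy is evaluated by how much of that gap it closes without central coordination.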

SafeSwarm: Decentralized Safe RL for the Swarm of Drones Landing in Dense Crowds

Jan 13, 2025

Abstract: This paper introduces a safe swarm of drones capable of performing landings in crowded environments robustly by relying on Reinforcement Learning techniques combined with Safe Learning. The developed system allows us to teach the swarm of drones with different dynamics to land on moving landing pads in an environment while avoiding collisions with obstacles and between agents. The safe barrier net algorithm was developed and evaluated using a swarm of Crazyflie 2.1 micro quadrotors, which were tested indoors with the Vicon motion capture system to ensure precise localization and control. Experimental results show that our system achieves landing accuracy of 2.25 cm with a mean time of 17 s and collision-free landings, underscoring its effectiveness and robustness in real-world scenarios. This work offers a promising foundation for applications in environments where safety and precision are paramount.
