Demetros Aschu

AttentionSwarm: Reinforcement Learning with Attention Control Barrier Function for Crazyflie Drones in Dynamic Environments

Mar 10, 2025

Abstract:We introduce AttentionSwarm, a novel benchmark designed to evaluate safe and efficient swarm control across three challenging environments: a landing environment with obstacles, a competitive drone game setting, and a dynamic drone racing scenario. Central to our approach is the Attention Model Based Control Barrier Function (CBF) framework, which integrates attention mechanisms with safety-critical control theory to enable real-time collision avoidance and trajectory optimization. This framework dynamically prioritizes critical obstacles and agents in the swarm's vicinity using attention weights, while CBFs formally guarantee safety by enforcing collision-free constraints. The safe attention net algorithm was developed and evaluated using a swarm of Crazyflie 2.1 micro quadrotors, which were tested indoors with the Vicon motion capture system to ensure precise localization and control. Experimental results show that our system achieves landing accuracy of 3.02 cm with a mean time of 23 s and collision-free landings in a dynamic landing environment, 100% and collision-free navigation in a drone game environment, and 95% and collision-free navigation in a dynamic multi-agent drone racing environment, underscoring its effectiveness and robustness in real-world scenarios. This work offers a promising foundation for applications in dynamic environments where safety and speed are paramount.
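As a rough illustration of the idea described in this abstract (attention weights picking out the most critical obstacle, and a CBF constraint filtering the control command), here is a minimal sketch. It is not the authors' implementation: the softmax-over-distance attention, the single-integrator dynamics, the barrier `h(x) = ||x - o||^2 - r^2`, and all names and parameter values (`attention_weights`, `cbf_filter`, `radius`, `alpha`, `temperature`) are assumptions chosen for illustration. The paper's attention model is learned, and a filter over only the top-weighted obstacle does not by itself guarantee safety with respect to the others.

```python
import numpy as np

def attention_weights(pos, obstacles, temperature=1.0):
    """Hypothetical attention: softmax over negative distances,
    so nearer obstacles receive larger weights."""
    d = np.linalg.norm(obstacles - pos, axis=1)
    logits = -d / temperature
    logits = logits - logits.max()  # numerical stability
    w = np.exp(logits)
    return w / w.sum()

def cbf_filter(pos, u_nom, obstacle, radius=0.3, alpha=1.0):
    """Minimally modify u_nom so that dh/dt + alpha * h >= 0, where
    h(x) = ||x - o||^2 - r^2 and the dynamics are x_dot = u (assumed).
    Assumes pos != obstacle, otherwise the gradient is zero."""
    diff = pos - obstacle
    h = diff @ diff - radius**2
    grad = 2.0 * diff                 # gradient of h with respect to x
    slack = grad @ u_nom + alpha * h  # CBF condition evaluated at u_nom
    if slack >= 0.0:
        return u_nom                  # nominal command already satisfies the constraint
    # Closed-form solution of the one-constraint QP: project onto the half-space
    return u_nom - slack * grad / (grad @ grad)

pos = np.array([0.0, 0.0])
obstacles = np.array([[0.5, 0.0], [2.0, 2.0]])
u_nom = np.array([1.0, 0.0])          # nominal command heads straight at obstacle 0

w = attention_weights(pos, obstacles)
critical = obstacles[np.argmax(w)]    # obstacle with the highest attention weight
u_safe = cbf_filter(pos, u_nom, critical)
print(w, u_safe)
```

In this toy setup the nearer obstacle receives the larger weight, and the filter slows the approach toward it until the barrier condition holds with equality.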

SafeSwarm: Decentralized Safe RL for the Swarm of Drones Landing in Dense Crowds

Jan 13, 2025

Abstract:This paper introduces a safe swarm of drones capable of robustly landing in crowded environments by combining Reinforcement Learning techniques with Safe Learning. The developed system allows us to teach a swarm of drones with different dynamics to land on moving landing pads while avoiding collisions with obstacles and between agents. The safe barrier net algorithm was developed and evaluated using a swarm of Crazyflie 2.1 micro quadrotors, which were tested indoors with the Vicon motion capture system to ensure precise localization and control. Experimental results show that our system achieves landing accuracy of 2.25 cm with a mean time of 17 s and collision-free landings, underscoring its effectiveness and robustness in real-world scenarios. This work offers a promising foundation for applications in environments where safety and precision are paramount.

TornadoDrone: Bio-inspired DRL-based Drone Landing on 6D Platform with Wind Force Disturbances

Jun 25, 2024

Abstract:Autonomous drone navigation faces a critical challenge in achieving accurate landings on dynamic platforms, especially under unpredictable conditions such as wind turbulence. Our research introduces TornadoDrone, a novel Deep Reinforcement Learning (DRL) model that adopts bio-inspired mechanisms to adapt to wind forces, mirroring the natural adaptability seen in birds. Unlike traditional approaches, this model derives its adaptability from indirect cues such as changes in position and velocity rather than from direct wind force measurements. TornadoDrone was trained in the gym-pybullet-drone simulator, which closely replicates the complexities of wind dynamics in the real world. In extensive testing with Crazyflie 2.1 drones in both simulated and real windy conditions, TornadoDrone maintained high-precision landing accuracy on moving platforms, surpassing conventional control methods such as PID controllers with Extended Kalman Filters. The study not only highlights the potential of DRL to tackle complex aerodynamic challenges but also paves the way for advanced autonomous systems that can adapt to environmental changes in real time. The success of TornadoDrone signifies a leap forward in drone technology, particularly for critical applications such as surveillance and emergency response, where reliability and precision are paramount.

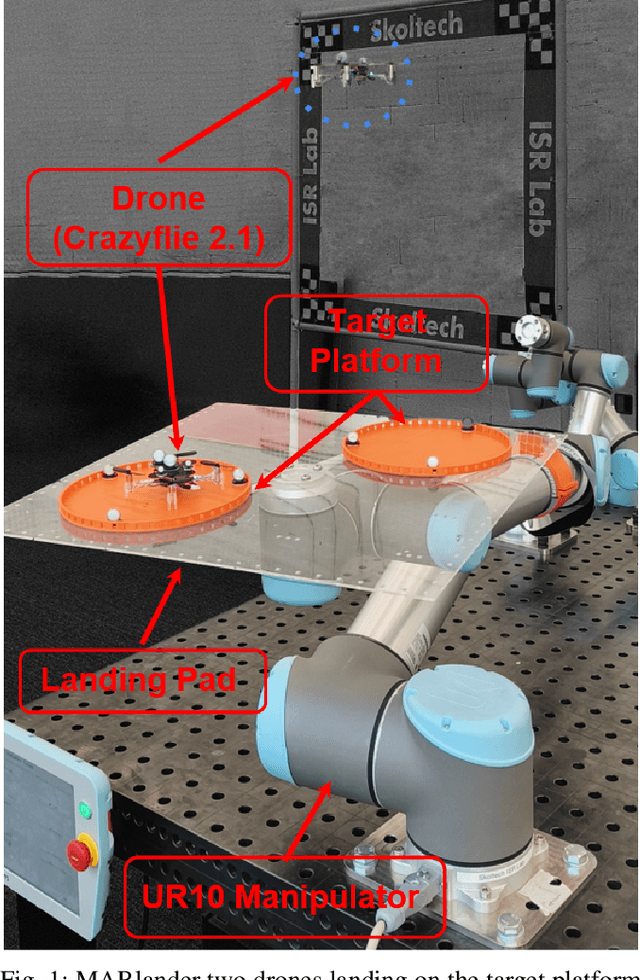

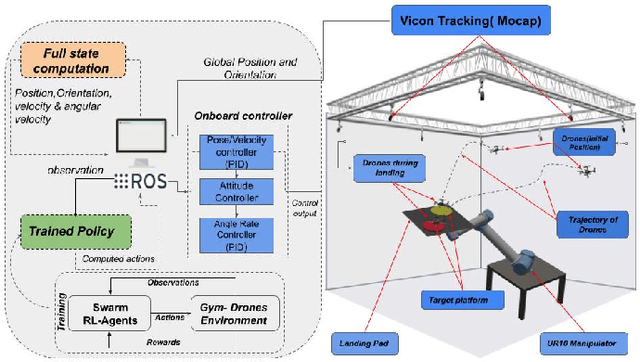

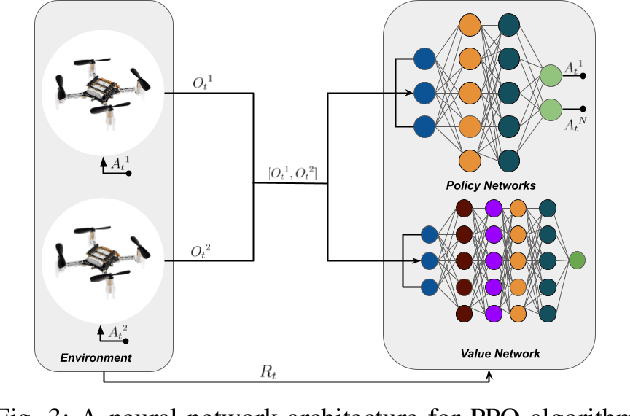

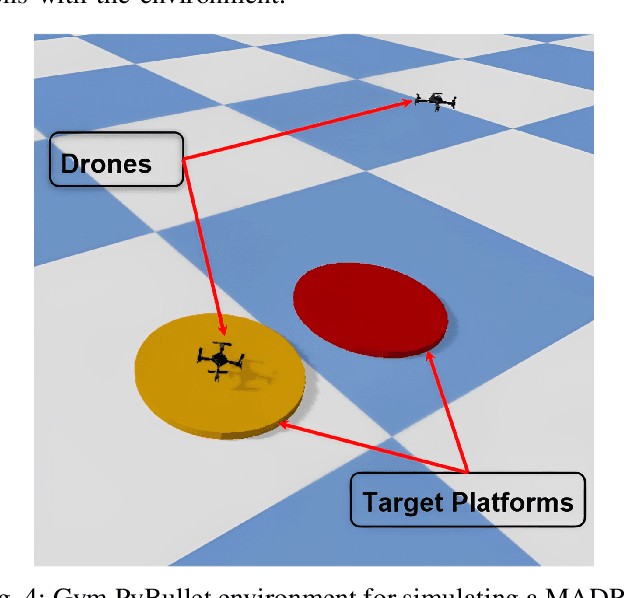

MARLander: A Local Path Planning for Drone Swarms using Multiagent Deep Reinforcement Learning

Jun 06, 2024

Abstract:Achieving safe and precise landings for a swarm of drones poses a significant challenge, primarily because of the limitations of conventional control and planning methods. This paper presents the implementation of multi-agent deep reinforcement learning (MADRL) techniques for the precise landing of a drone swarm at relocated target locations. The system is trained in a realistic simulated environment with a maximum velocity of 3 m/s in a training space of 4 x 4 x 4 m and deployed on Crazyflie drones with a Vicon indoor localization system. The experimental results show that the proposed approach achieved a landing accuracy of 2.26 cm on stationary platforms and 3.93 cm on moving platforms, surpassing a baseline method that combines a proportional-integral-derivative (PID) controller with an Artificial Potential Field (APF). This research highlights drone landing technologies that eliminate the need for analytical centralized systems, potentially offering scalability and revolutionizing applications in logistics, safety, and rescue missions.

Lander.AI: Adaptive Landing Behavior Agent for Expertise in 3D Dynamic Platform Landings

Mar 12, 2024

Abstract:Mastering autonomous drone landing on dynamic platforms presents formidable challenges due to unpredictable velocities and external disturbances caused by the wind, ground effect, and turbines or propellers of the docking platform. This study introduces an advanced Deep Reinforcement Learning (DRL) agent, Lander:AI, designed to navigate and land on platforms in windy conditions, thereby enhancing drone autonomy and safety. Lander:AI is trained within the gym-pybullet-drone simulation, an environment that mirrors real-world complexities, including wind turbulence, to ensure the agent's robustness and adaptability. The agent's capabilities were empirically validated with Crazyflie 2.1 drones across various test scenarios, encompassing both simulated environments and real-world conditions. The experimental results showcased Lander:AI's high-precision landing and its ability to adapt to moving platforms, even under wind-induced disturbances. Furthermore, the system performance was benchmarked against a baseline PID controller augmented with an Extended Kalman Filter, illustrating significant improvements in landing precision and error recovery. Lander:AI leverages bio-inspired learning to adapt to external forces as birds do, enhancing drone adaptability without knowing force magnitudes. This research not only advances drone landing technologies, essential for inspection and emergency applications, but also highlights the potential of DRL in addressing intricate aerodynamic challenges.
