Robinroy Peter

HIPPO-MAT: Decentralized Task Allocation Using GraphSAGE and Multi-Agent Deep Reinforcement Learning

Mar 08, 2025

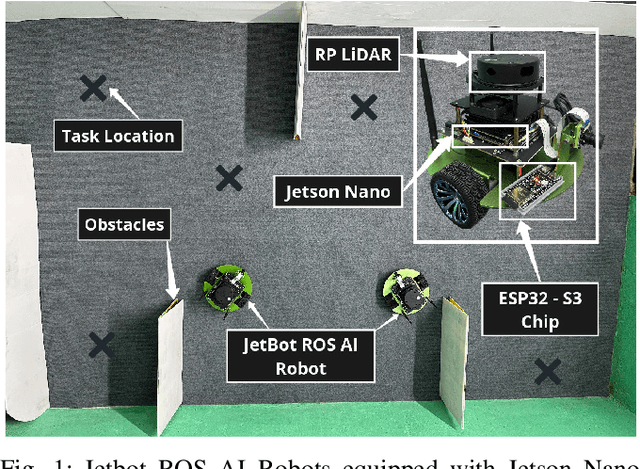

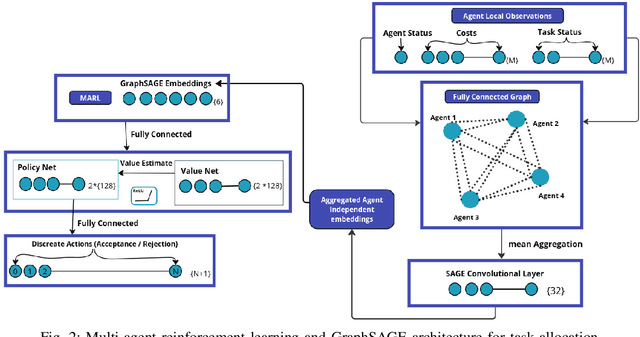

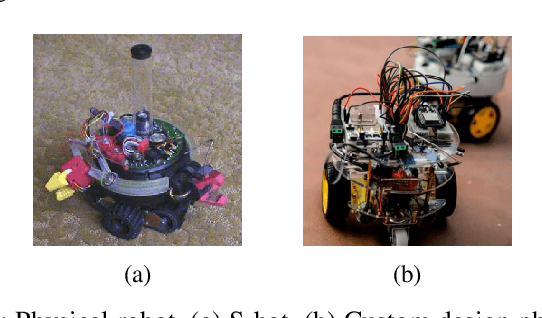

Abstract: This paper tackles decentralized continuous task allocation in heterogeneous multi-agent systems. We present a novel framework, HIPPO-MAT, that combines a GraphSAGE-based graph neural network (GNN), which computes independent embeddings on each agent, with Independent Proximal Policy Optimization (IPPO) for multi-agent deep reinforcement learning. In our system, unmanned aerial vehicles (UAVs) and unmanned ground vehicles (UGVs) share aggregated observation data via communication channels while independently processing these inputs to generate enriched state embeddings. This design enables dynamic, cost-optimal, conflict-aware task allocation in a 3D grid environment without the need for centralized coordination. A modified A* path planner is incorporated for efficient routing and collision avoidance. Simulation experiments demonstrate scalability with up to 30 agents. Preliminary real-world validation on JetBot ROS AI Robots, each running its model on a Jetson Nano and communicating through the ESP-NOW protocol using an ESP32-S3, confirms the practical viability of the approach, which incorporates simultaneous localization and mapping (SLAM). Experimental results revealed that our method achieves a 92.5% conflict-free success rate, with only a 16.49% performance gap compared to the centralized Hungarian method, while outperforming a heuristic decentralized baseline based on a greedy approach. Additionally, the framework exhibits scalability with up to 30 agents, with an allocation processing time of 0.32 simulation steps, and robustness in responding to dynamically generated tasks.
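One way to read the "performance gap" quoted against the centralized Hungarian method is as the relative excess cost of an allocation over the optimal one-to-one assignment. The sketch below illustrates that reading only and is not the paper's implementation. The cost matrix is made up, the optimum is found by exhaustive search (which yields the same optimum as the Hungarian method on small instances) rather than by the Hungarian algorithm itself, and the greedy rule shown is just one possible greedy baseline. All function names are hypothetical.

```python
from itertools import permutations


def optimal_assignment(cost):
    """Exhaustive search for the minimum-cost one-to-one assignment.

    cost[i][j] is the cost of agent i taking task j (square matrix).
    Only practical for small n; stands in for the Hungarian method here.
    """
    n = len(cost)
    best_perm, best_total = None, float("inf")
    for perm in permutations(range(n)):
        total = sum(cost[i][perm[i]] for i in range(n))
        if total < best_total:
            best_perm, best_total = perm, total
    return list(best_perm), best_total


def greedy_assignment(cost):
    """Agents, in index order, each take their cheapest still-free task."""
    free = set(range(len(cost)))
    assignment, total = [], 0
    for i in range(len(cost)):
        j = min(free, key=lambda t: (cost[i][t], t))
        free.remove(j)
        assignment.append(j)
        total += cost[i][j]
    return assignment, total


def is_conflict_free(assignment):
    """True when no two agents selected the same task."""
    return len(set(assignment)) == len(assignment)


def performance_gap(candidate_total, optimal_total):
    """Relative excess cost over the optimum, in percent."""
    return 100.0 * (candidate_total - optimal_total) / optimal_total


# Made-up travel costs: rows are agents, columns are tasks.
COST = [
    [2, 3, 9],
    [2, 6, 9],
    [7, 8, 3],
]

if __name__ == "__main__":
    _, best = optimal_assignment(COST)      # best == 8
    _, greedy = greedy_assignment(COST)     # greedy == 11
    print(f"{performance_gap(greedy, best):.1f}%")  # prints 37.5%
```

In this toy instance the greedy baseline is conflict-free but costs 37.5% more than the optimum, because the first agent claims a task that a later agent needed more.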

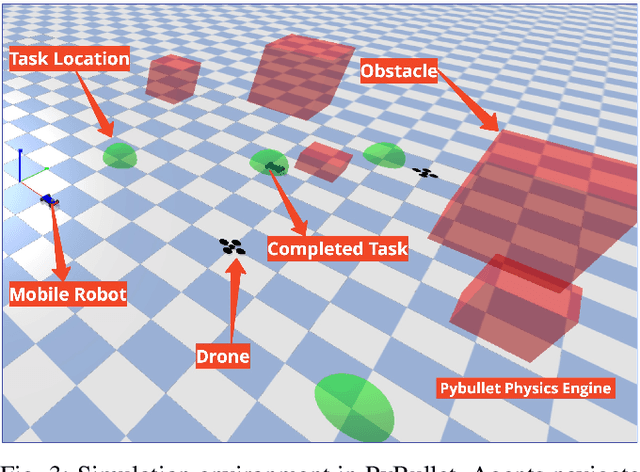

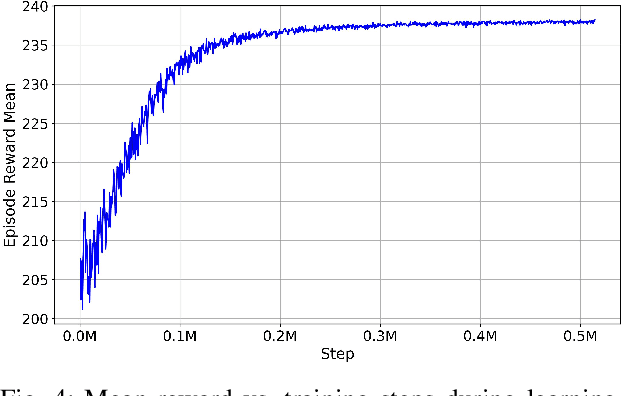

MAGNNET: Multi-Agent Graph Neural Network-based Efficient Task Allocation for Autonomous Vehicles with Deep Reinforcement Learning

Feb 04, 2025

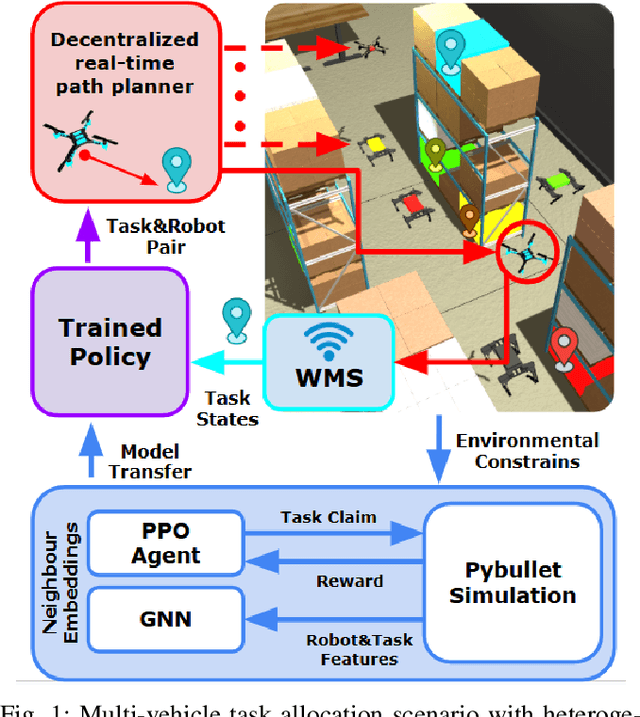

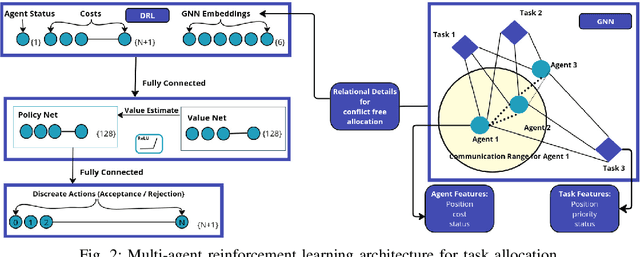

Abstract: This paper addresses the challenge of decentralized task allocation within heterogeneous multi-agent systems operating under communication constraints. We introduce a novel framework that integrates graph neural networks (GNNs) with a centralized training and decentralized execution (CTDE) paradigm, further enhanced by a tailored Proximal Policy Optimization (PPO) algorithm for multi-agent deep reinforcement learning (MARL). Our approach enables unmanned aerial vehicles (UAVs) and unmanned ground vehicles (UGVs) to allocate tasks dynamically and efficiently in a 3D grid environment without central coordination. The framework minimizes total travel time while avoiding conflicts in task assignments. For cost calculation and routing, we employ reservation-based A* and R* path planners. Experimental results revealed that our method achieves a 92.5% conflict-free success rate, with only a 7.49% performance gap compared to the centralized Hungarian method, while outperforming a heuristic decentralized baseline based on a greedy approach. Additionally, the framework exhibits scalability with up to 20 agents, with an allocation processing time of 2.8 s, and robustness in responding to dynamically generated tasks, underscoring its potential for real-world applications in complex multi-agent scenarios.
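To give a feel for how a path planner can supply travel costs in a 3D grid, here is a minimal sketch of plain A* on a 6-connected grid with unit step cost. It is not the reservation-based A* or R* planner named in the abstract: there is no reservation table, so other agents' planned paths are ignored. The grid size, the blocked cells, and the function name `astar_3d` are assumptions for illustration.

```python
import heapq


def astar_3d(start, goal, size, blocked=frozenset()):
    """Plain A* on a 6-connected 3D grid with unit step cost.

    start, goal: (x, y, z) cells; size: grid extent per axis;
    blocked: cells that cannot be entered.
    Returns the list of cells from start to goal, or None if unreachable.
    """
    def heuristic(cell):
        # Manhattan distance never overestimates on a 6-connected grid.
        return sum(abs(a - b) for a, b in zip(cell, goal))

    moves = [(1, 0, 0), (-1, 0, 0), (0, 1, 0),
             (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    open_heap = [(heuristic(start), 0, start)]
    came_from = {start: None}
    g_cost = {start: 0}

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was found already
        for dx, dy, dz in moves:
            nxt = (cell[0] + dx, cell[1] + dy, cell[2] + dz)
            if not all(0 <= v < s for v, s in zip(nxt, size)):
                continue  # outside the grid
            if nxt in blocked:
                continue
            new_g = g + 1
            if new_g < g_cost.get(nxt, float("inf")):
                g_cost[nxt] = new_g
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_g + heuristic(nxt), new_g, nxt))
    return None


if __name__ == "__main__":
    path = astar_3d((0, 0, 0), (2, 0, 0), (3, 3, 3), blocked={(1, 0, 0)})
    print(len(path) - 1)  # number of steps in the detour around the blocked cell
```

The step count of the returned path (`len(path) - 1`) is the kind of quantity that could feed a travel-cost matrix for allocation. A reservation-based variant would additionally treat cells claimed by other agents at given time steps as blocked.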

Dynamic Subgoal based Path Formation and Task Allocation: A NeuroFleets Approach to Scalable Swarm Robotics

Sep 01, 2024

Abstract: This paper addresses the challenges of exploration and navigation in unknown environments from the perspective of evolutionary swarm robotics. A key focus is on path formation, which is essential for enabling cooperative swarm robots to navigate effectively. We designed the task allocation and path formation process based on a finite state machine, ensuring systematic decision-making and efficient state transitions. The approach is decentralized, allowing each robot to make decisions independently based on local information, which enhances scalability and robustness. We present a novel subgoal-based path formation method that establishes paths between locations by leveraging visually connected subgoals. Simulation experiments conducted in the Argos simulator show that this method successfully forms paths in the majority of trials. However, inter-collision (traffic) among numerous robots during path formation can negatively impact performance. To address this issue, we propose a task allocation strategy that uses local communication protocols and light signal-based communication to manage robot deployment. This strategy assesses the distance between points and determines the optimal number of robots needed for the path formation task, thereby reducing unnecessary exploration and traffic congestion. The performance of both the subgoal-based path formation method and the task allocation strategy is evaluated by comparing the path length, time, and resource usage against the A* algorithm. Simulation results demonstrate the effectiveness of our approach, highlighting its scalability, robustness, and fault tolerance.

TornadoDrone: Bio-inspired DRL-based Drone Landing on 6D Platform with Wind Force Disturbances

Jun 25, 2024

Abstract: Autonomous drone navigation faces a critical challenge in achieving accurate landings on dynamic platforms, especially under unpredictable conditions such as wind turbulence. Our research introduces TornadoDrone, a novel Deep Reinforcement Learning (DRL) model that adopts bio-inspired mechanisms to adapt to wind forces, mirroring the natural adaptability seen in birds. Unlike traditional approaches, this model derives its adaptability from indirect cues such as changes in position and velocity, rather than from direct wind force measurements. TornadoDrone was trained in the gym-pybullet-drone simulator, which closely replicates the complexities of wind dynamics in the real world. In extensive testing with Crazyflie 2.1 drones in both simulated and real windy conditions, TornadoDrone maintained high-precision landing accuracy on moving platforms, surpassing conventional control methods such as PID controllers with Extended Kalman Filters. The study not only highlights the potential of DRL to tackle complex aerodynamic challenges but also paves the way for advanced autonomous systems that can adapt to environmental changes in real time. The success of TornadoDrone signifies a leap forward in drone technology, particularly for critical applications such as surveillance and emergency response, where reliability and precision are paramount.

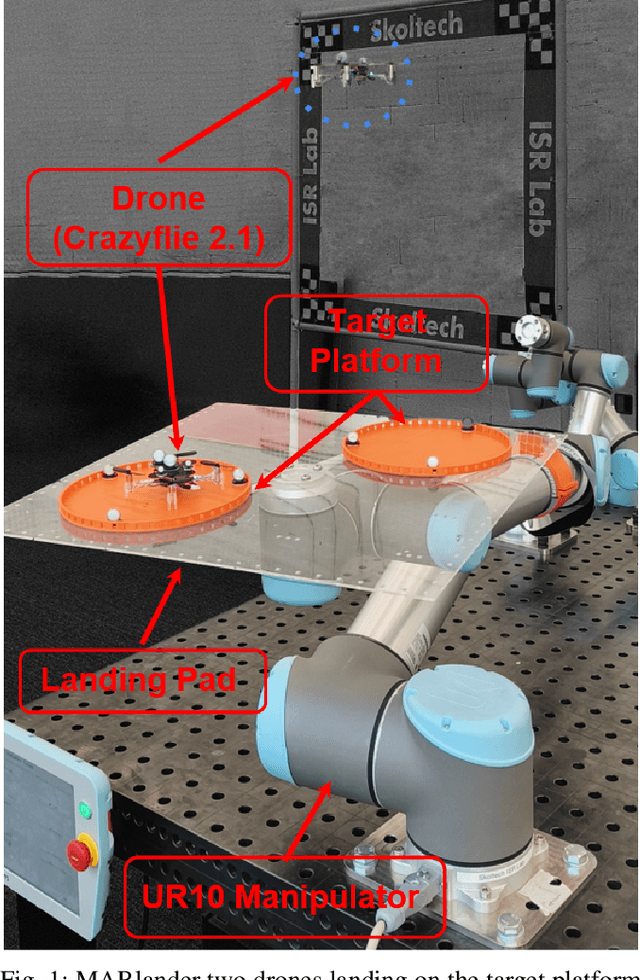

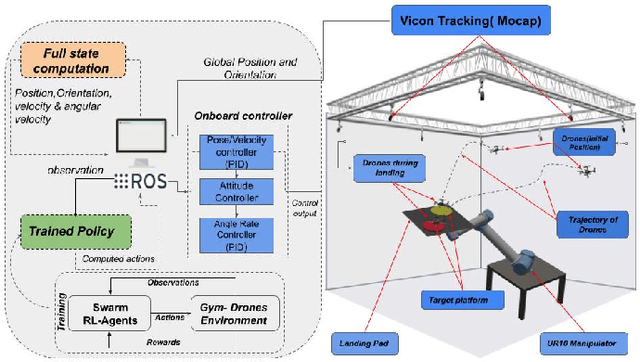

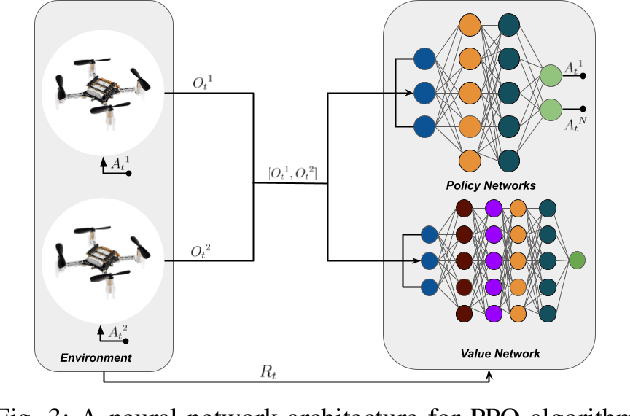

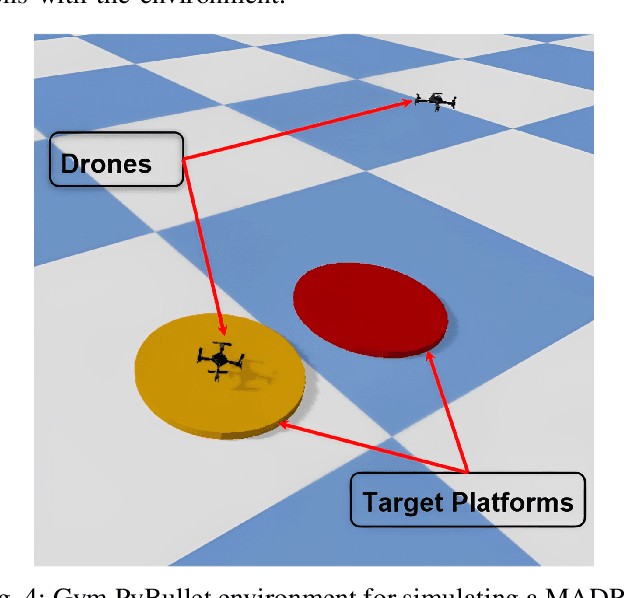

MARLander: A Local Path Planning for Drone Swarms using Multiagent Deep Reinforcement Learning

Jun 06, 2024

Abstract: Achieving safe and precise landings for a swarm of drones poses a significant challenge, primarily due to the limitations of conventional control and planning methods. This paper presents the implementation of multi-agent deep reinforcement learning (MADRL) techniques for the precise landing of a drone swarm at relocated target locations. The system is trained in a realistic simulated environment with a maximum velocity of 3 m/s in training spaces of 4 x 4 x 4 m and deployed on Crazyflie drones with a Vicon indoor localization system. The experimental results revealed that the proposed approach achieved a landing accuracy of 2.26 cm on stationary platforms and 3.93 cm on moving platforms, surpassing a baseline method that combines a proportional-integral-derivative (PID) controller with an Artificial Potential Field (APF). This research highlights drone landing technologies that eliminate the need for analytical centralized systems, potentially offering scalability and revolutionizing applications in logistics, safety, and rescue missions.
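For readers unfamiliar with the PID half of the baseline, the following is a minimal sketch of a discrete PID controller driving one position axis toward a landing target, with the commanded velocity clamped to the 3 m/s limit quoted in the abstract. Everything else is an assumption for illustration: the gains, the time step, the idealized model in which the drone follows the commanded velocity exactly, and the names `PID` and `simulate_axis`. The Artificial Potential Field component of the baseline is not modeled.

```python
MAX_SPEED = 3.0  # m/s, the velocity limit quoted in the abstract


class PID:
    """Textbook discrete PID controller (hypothetical gains supplied by caller)."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        """Return the control output for the current error sample."""
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0  # no previous sample on the first call
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_axis(pid, start, target, dt=0.01, steps=500):
    """Drive one position axis toward target under an idealized velocity model."""
    x = start
    for _ in range(steps):
        command = pid.update(target - x, dt)
        velocity = max(-MAX_SPEED, min(MAX_SPEED, command))  # saturate command
        x += velocity * dt
    return x


if __name__ == "__main__":
    final_x = simulate_axis(PID(kp=2.0, ki=0.0, kd=0.0), start=0.0, target=1.0)
    print(f"final position error: {abs(1.0 - final_x):.6f} m")
```

A fixed-gain controller like this reacts only to the tracking error on each axis, which is one reason learned policies are compared against it when platforms move or several drones must avoid each other.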

Lander.AI: Adaptive Landing Behavior Agent for Expertise in 3D Dynamic Platform Landings

Mar 12, 2024

Abstract: Mastering autonomous drone landing on dynamic platforms presents formidable challenges due to unpredictable velocities and external disturbances caused by the wind, ground effect, turbines or propellers of the docking platform. This study introduces an advanced Deep Reinforcement Learning (DRL) agent, Lander:AI, designed to navigate and land on platforms in windy conditions, thereby enhancing drone autonomy and safety. Lander:AI is trained within the gym-pybullet-drone simulation, an environment that mirrors real-world complexities, including wind turbulence, to ensure the agent's robustness and adaptability. The agent's capabilities were empirically validated with Crazyflie 2.1 drones across various test scenarios, encompassing both simulated environments and real-world conditions. The experimental results showcased Lander:AI's high-precision landing and its ability to adapt to moving platforms, even under wind-induced disturbances. Furthermore, the system performance was benchmarked against a baseline PID controller augmented with an Extended Kalman Filter, illustrating significant improvements in landing precision and error recovery. Lander:AI leverages bio-inspired learning to adapt to external forces as birds do, enhancing drone adaptability without knowing force magnitudes. This research not only advances drone landing technologies, essential for inspection and emergency applications, but also highlights the potential of DRL in addressing intricate aerodynamic challenges.

CognitiveOS: Large Multimodal Model based System to Endow Any Type of Robot with Generative AI

Jan 29, 2024

Abstract: This paper introduces CognitiveOS, a disruptive system based on multiple transformer-based models, endowing robots of various types with cognitive abilities not only for communication with humans but also for task resolution through physical interaction with the environment. The system operates smoothly on different robotic platforms without extra tuning. It autonomously makes decisions for task execution by analyzing the environment and using information from its long-term memory. The system was tested on various platforms, including quadruped robots and manipulator robots, showcasing its capability to formulate behavioral plans even for robots whose behavioral examples were absent from the training dataset. Experimental results revealed the system's high performance in advanced task comprehension and adaptability, emphasizing its potential for real-world applications. The chapters of this paper describe the key components of the system and the dataset structure. The dataset for fine-tuning the step generation model is provided at the following link: link coming soon

Evolutionary Swarm Robotics: Dynamic Subgoal-Based Path Formation and Task Allocation for Exploration and Navigation in Unknown Environments

Dec 27, 2023

Abstract: This research paper addresses the challenges of exploration and navigation in unknown environments from an evolutionary swarm robotics perspective. Path formation plays a crucial role in enabling cooperative swarm robots to accomplish these tasks. The paper presents a method called the sub-goal-based path formation, which establishes a path between two different locations by exploiting visually connected sub-goals. Simulation experiments conducted in the Argos simulator demonstrate the successful formation of paths in the majority of trials. Furthermore, the paper tackles the problem of inter-collision (traffic) among a large number of robots engaged in path formation, which negatively impacts the performance of the sub-goal-based method. To mitigate this issue, a task allocation strategy is proposed, leveraging local communication protocols and light signal-based communication. The strategy evaluates the distance between points and determines the required number of robots for the path formation task, reducing unwanted exploration and traffic congestion. The performance of the sub-goal-based path formation and task allocation strategy is evaluated by comparing path length, time, and resource reduction against the A* algorithm. The simulation experiments demonstrate promising results, showcasing the scalability, robustness, and fault tolerance characteristics of the proposed approach.

NeuroSwarm: Multi-Agent Neural 3D Scene Reconstruction and Segmentation with UAV for Optimal Navigation of Quadruped Robot

Aug 03, 2023

Abstract: Quadruped robots have the distinct ability to adapt their body and step height to navigate through cluttered environments. Nonetheless, for these robots to utilize their full potential in real-world scenarios, they require awareness of their environment and obstacle geometry. We propose a novel multi-agent robotic system that incorporates cutting-edge technologies. The proposed solution features a 3D neural reconstruction algorithm that enables navigation of a quadruped robot in both static and semi-static environments. The prior areas of the environment are also segmented according to the quadruped robots' abilities to pass them. Moreover, we have developed an adaptive neural field optimal motion planner (ANFOMP) that considers both collision probability and obstacle height in 2D space. Our new navigation and mapping approach enables quadruped robots to adjust their height and behavior to navigate under arches and push through obstacles with smaller dimensions. The multi-agent mapping operation has proven to be highly accurate, with an obstacle reconstruction precision of 82%. Moreover, the quadruped robot can navigate with 3D obstacle information and the ANFOMP system, resulting in a 33.3% reduction in path length and a 70% reduction in navigation time.
