Sausar Karaf

MorphoNavi: Aerial-Ground Robot Navigation with Object Oriented Mapping in Digital Twin

Apr 23, 2025

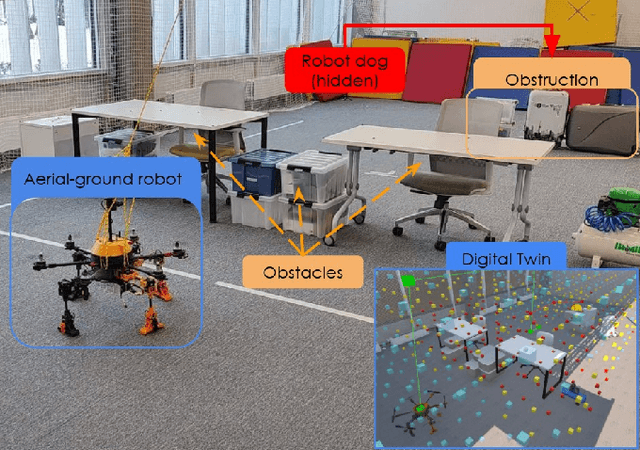

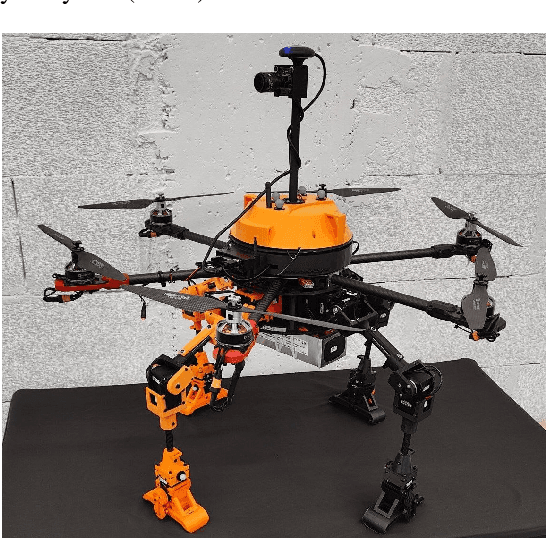

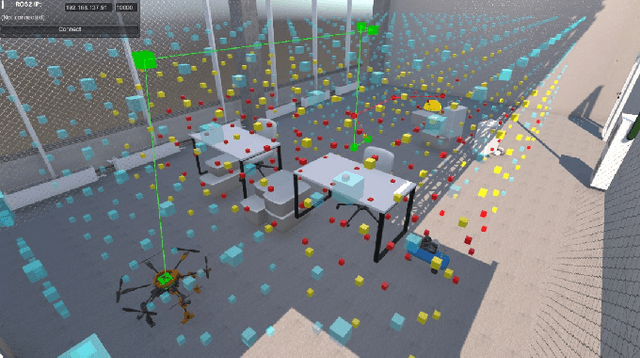

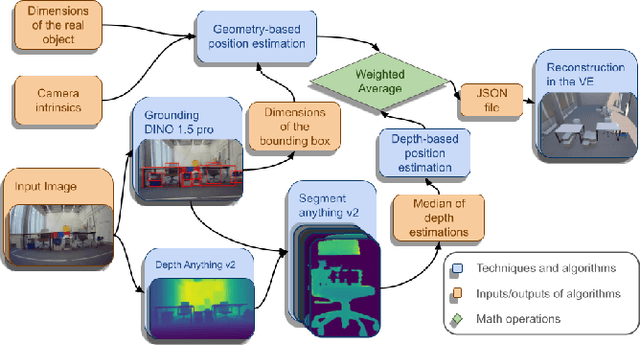

Abstract: This paper presents a novel mapping approach for a universal aerial-ground robotic system utilizing a single monocular camera. The proposed system is capable of detecting a diverse range of objects and estimating their positions without requiring fine-tuning for specific environments. The system's performance was evaluated through a simulated search-and-rescue scenario, where the MorphoGear robot successfully located a robotic dog while an operator monitored the process. This work contributes to the development of intelligent, multimodal robotic systems capable of operating in unstructured environments.

SafeSwarm: Decentralized Safe RL for the Swarm of Drones Landing in Dense Crowds

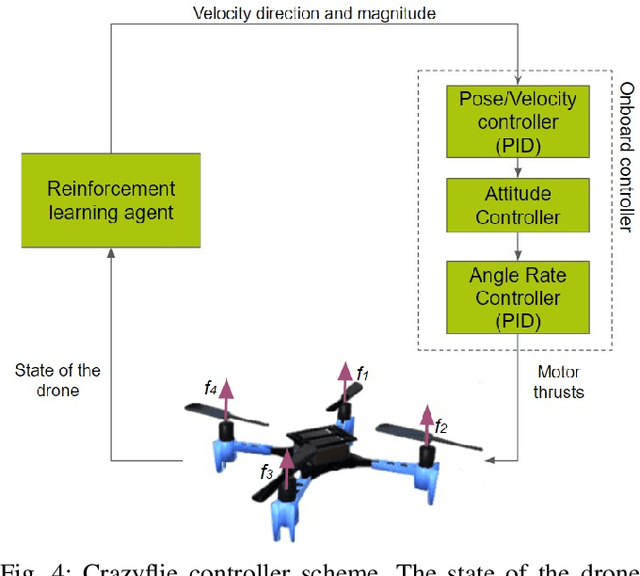

Jan 13, 2025

Abstract: This paper introduces a safe swarm of drones capable of performing landings in crowded environments robustly by relying on Reinforcement Learning techniques combined with Safe Learning. The developed system allows us to teach the swarm of drones with different dynamics to land on moving landing pads in an environment while avoiding collisions with obstacles and between agents. The safe barrier net algorithm was developed and evaluated using a swarm of Crazyflie 2.1 micro quadrotors, which were tested indoors with the Vicon motion capture system to ensure precise localization and control. Experimental results show that our system achieves landing accuracy of 2.25 cm with a mean time of 17 s and collision-free landings, underscoring its effectiveness and robustness in real-world scenarios. This work offers a promising foundation for applications in environments where safety and precision are paramount.

UAV-VLA: Vision-Language-Action System for Large Scale Aerial Mission Generation

Jan 09, 2025

Abstract: The UAV-VLA (Vision-Language-Action) system is a tool designed to facilitate communication with aerial robots. By integrating satellite imagery processing with the Visual Language Model (VLM) and the powerful capabilities of GPT, UAV-VLA enables users to generate general flight path-and-action plans through simple text requests. This system leverages the rich contextual information provided by satellite images, allowing for enhanced decision-making and mission planning. The combination of visual analysis by VLM and natural language processing by GPT can provide the user with the path-and-action set, making aerial operations more efficient and accessible. The newly developed method showed a 22% difference in the length of the generated trajectory and a mean error of 34.22 m (Euclidean distance) in locating objects of interest on a map using the K-Nearest Neighbors (KNN) approach.
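
A mean localization error of this kind can be illustrated with a nearest-neighbor match between predicted and reference points. The sketch below is only an assumption about how such a metric might be computed: the function name `mean_nearest_neighbor_error` and the sample coordinates are hypothetical, and the abstract does not specify the exact evaluation protocol.

```python
import math


def mean_nearest_neighbor_error(predicted, ground_truth):
    """Mean Euclidean distance from each predicted point to its closest
    ground-truth point (a 1-nearest-neighbor match).

    Hypothetical helper for illustration; coordinates are assumed to be
    in metres in a common map frame.
    """
    if not predicted or not ground_truth:
        raise ValueError("both point lists must be non-empty")
    total = 0.0
    for p in predicted:
        # Distance to the nearest reference point for this prediction.
        total += min(math.dist(p, g) for g in ground_truth)
    return total / len(predicted)


if __name__ == "__main__":
    # Made-up sample points, not data from the paper.
    predicted = [(0.0, 0.0), (10.0, 0.0)]
    ground_truth = [(3.0, 4.0), (10.0, 1.0)]
    print(mean_nearest_neighbor_error(predicted, ground_truth))
```

With these made-up points the nearest-neighbor distances are 5 m and 1 m, so the mean error is 3 m.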

OmniRace: 6D Hand Pose Estimation for Intuitive Guidance of Racing Drone

Jul 16, 2024

Abstract: This paper presents the OmniRace approach to controlling a racing drone with 6-degree-of-freedom (DoF) hand pose estimation and gesture recognition. To our knowledge, it is the first-ever technology that allows for low-level control of high-speed drones using gestures. OmniRace employs a gesture interface based on computer vision and a deep neural network to estimate a 6-DoF hand pose. The advanced machine learning algorithm robustly interprets human gestures, allowing users to control drone motion intuitively. Real-time control of a racing drone demonstrates the effectiveness of the system, validating its potential to revolutionize drone racing and other applications. Experimental results obtained in the Gazebo simulation environment revealed that OmniRace allows users to complete the UAV race track significantly faster (by 25.1%) and to decrease the length of the test drone path (from 102.9 to 83.7 m). Users preferred the gesture interface for attractiveness (1.57 UEQ score), hedonic quality (1.56 UEQ score), and lower perceived temporal demand (32.0 score in NASA-TLX), while noting the high efficiency (0.75 UEQ score) and low physical demand (19.0 score in NASA-TLX) of the baseline remote controller. The deep neural network attains an average accuracy of 99.75% when applied to both normalized datasets and raw datasets. OmniRace can potentially change the way humans interact with and navigate racing drones in dynamic and complex environments. The source code is available at https://github.com/SerValera/OmniRace.git.

AirNeRF: 3D Reconstruction of Human with Drone and NeRF for Future Communication Systems

Jul 15, 2024

Abstract: In the rapidly evolving landscape of digital content creation, the demand for fast, convenient, and autonomous methods of crafting detailed 3D reconstructions of humans has grown significantly. Addressing this pressing need, our AirNeRF system presents an innovative pathway to the creation of a realistic 3D human avatar. Our approach leverages Neural Radiance Fields (NeRF) with an automated drone-based video capturing method. The acquired data provides a swift and precise way to create high-quality human body reconstructions following several stages of our system. The rigged mesh derived from our system proves to be an excellent foundation for free-view synthesis of dynamic humans, particularly well-suited for the immersive experiences within gaming and virtual reality.

MorphoMove: Bi-Modal Path Planner with MPC-based Path Follower for Multi-Limb Morphogenetic UAV

Jul 12, 2024

Abstract: This paper discusses developments for a multi-limb morphogenetic UAV, MorphoGear, that is capable of both aerial flight and ground locomotion. A hybrid path planning algorithm based on the A* strategy has been developed, enabling seamless transitions between aerial and ground navigation modes and thereby enhancing the robot's mobility in complex environments. Moreover, precise path following is achieved during ground locomotion with a Model Predictive Control (MPC) architecture for its novel walking behaviour. Experimental validation was conducted in the Unity simulation environment utilizing Python scripts to compute control values. The results validate the algorithms' performance, with a Root Mean Squared Error (RMSE) of 0.91 cm and a maximum error of 1.85 cm. These developments highlight the adaptability of MorphoGear in navigation through cluttered environments, establishing it as a usable tool in autonomous exploration, both aerial and ground-based.
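
For readers unfamiliar with the two path-following metrics quoted in this abstract, here is a minimal sketch of how RMSE and maximum error are computed from per-sample deviations. The function name `path_error_stats` and the sample values are hypothetical; the abstract does not describe how the deviations themselves were measured.

```python
import math


def path_error_stats(errors_cm):
    """Return (rmse, max_abs_error) for signed path deviations in cm.

    Hypothetical helper: each entry is assumed to be the distance between
    the robot and the reference path at one sample instant.
    """
    if not errors_cm:
        raise ValueError("errors_cm must not be empty")
    # RMSE: square root of the mean of the squared deviations.
    rmse = math.sqrt(sum(e * e for e in errors_cm) / len(errors_cm))
    # Maximum error: the largest deviation regardless of sign.
    max_err = max(abs(e) for e in errors_cm)
    return rmse, max_err


if __name__ == "__main__":
    # Made-up deviations, not data from the paper.
    rmse, max_err = path_error_stats([0.5, -1.2, 0.8, -0.3])
    print(f"RMSE = {rmse:.2f} cm, max error = {max_err:.2f} cm")
```

RMSE penalizes large deviations more than a plain mean would, which is why it is commonly reported alongside the maximum error.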

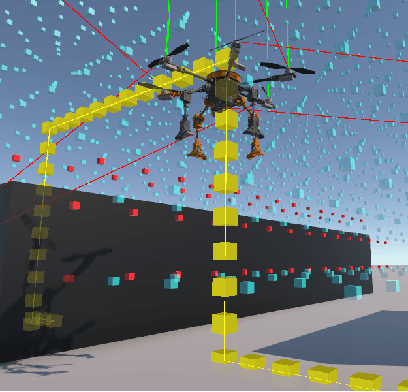

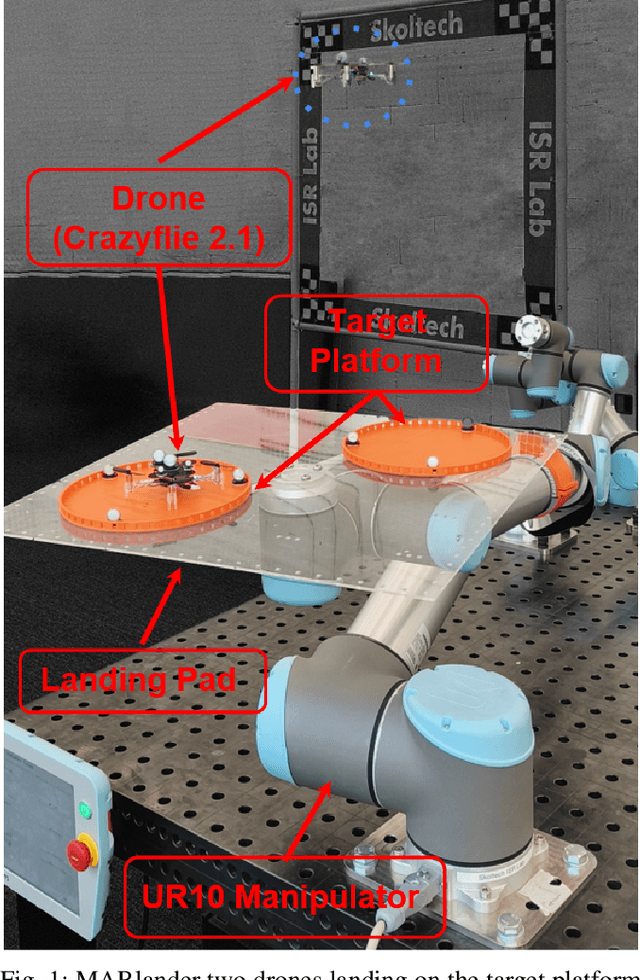

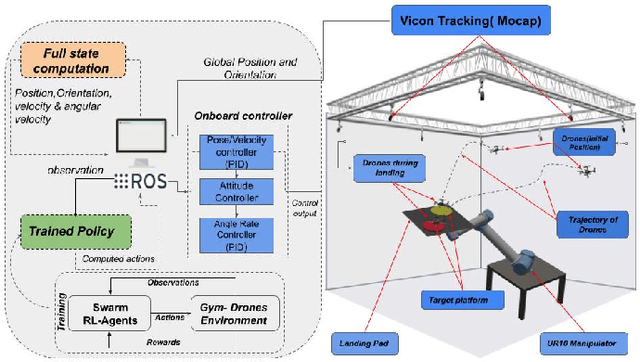

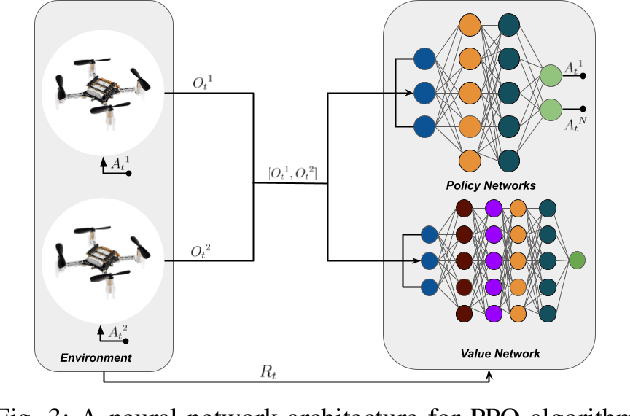

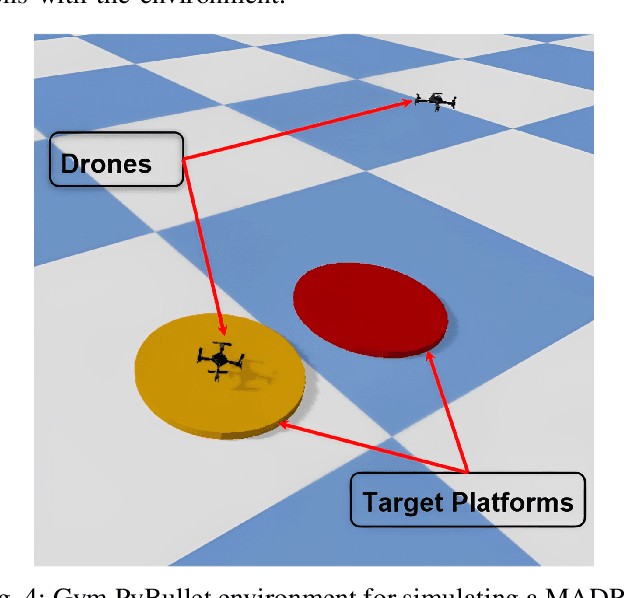

MARLander: A Local Path Planning for Drone Swarms using Multiagent Deep Reinforcement Learning

Jun 06, 2024

Abstract: Achieving safe and precise landings for a swarm of drones poses a significant challenge, primarily due to the limitations of conventional control and planning methods. This paper presents the implementation of multi-agent deep reinforcement learning (MADRL) techniques for the precise landing of a drone swarm at relocated target locations. The system is trained in a realistic simulated environment with a maximum velocity of 3 m/s in training spaces of 4 x 4 x 4 m and deployed utilizing Crazyflie drones with a Vicon indoor localization system. The experimental results revealed that the proposed approach achieved a landing accuracy of 2.26 cm on stationary and 3.93 cm on moving platforms, surpassing a baseline method that uses a proportional-integral-derivative (PID) controller with an Artificial Potential Field (APF). This research highlights drone landing technologies that eliminate the need for analytical centralized systems, potentially offering scalability and revolutionizing applications in logistics, safety, and rescue missions.

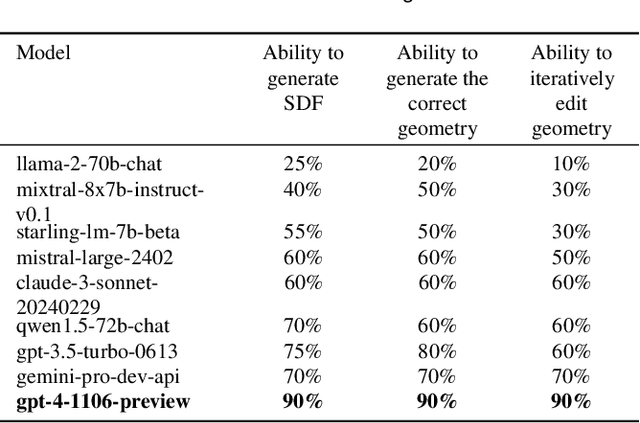

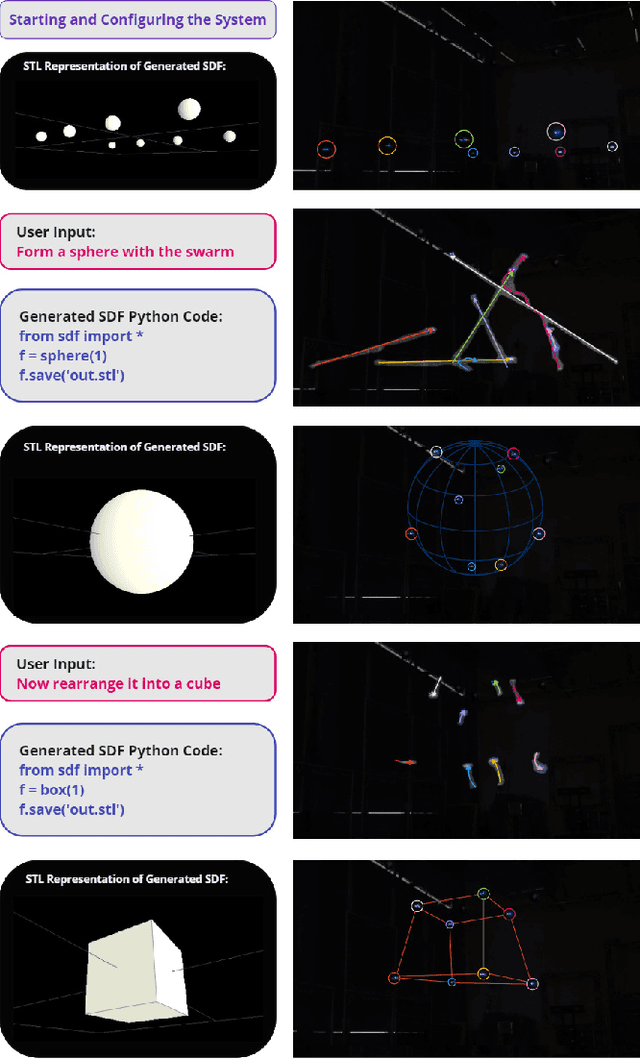

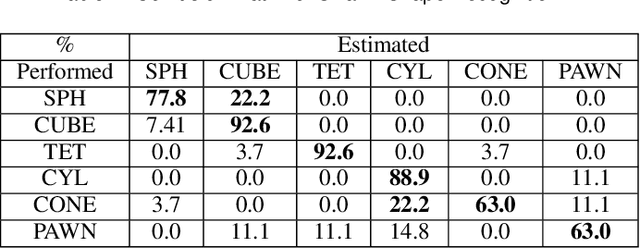

FlockGPT: Guiding UAV Flocking with Linguistic Orchestration

May 09, 2024

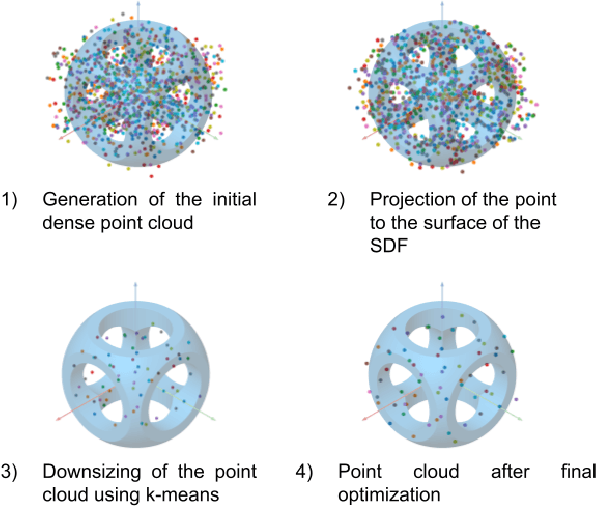

Abstract: This article presents the world's first rapid drone flocking control using natural language through generative AI. The described approach enables the intuitive orchestration of a flock of any size to achieve the desired geometry. The key feature of the method is the development of a new interface based on Large Language Models to communicate with the user and to generate the target geometry descriptions. Users can interactively modify or provide comments during the construction of the flock geometry model. By combining flocking technology and defining the target surface using a signed distance function, smooth and adaptive movement of the drone swarm between target states is achieved. Our user study on FlockGPT confirmed a high level of intuitive control over drone flocking by users. Subjects who had never previously controlled a swarm of drones were able to construct complex figures in just a few iterations and were able to accurately distinguish the formed swarm drone figures. The results revealed a high recognition rate for six different geometric patterns generated through the LLM-based interface and performed by a simulated drone flock (mean of 80% with a maximum of 93% for cube and tetrahedron patterns). Users commented on low temporal demand (19.2 score in NASA-TLX), high performance (26 score in NASA-TLX), attractiveness (1.94 UEQ score), and hedonic quality (1.81 UEQ score) of the developed system. The FlockGPT demo code repository is coming soon.
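
To make the signed distance function (SDF) idea concrete: an SDF returns a negative value inside a shape, zero on its surface, and a positive value outside, so a drone can be steered toward the zero level set. The sketch below uses the standard analytic SDFs for a sphere and an axis-aligned box centred at the origin. The function names are hypothetical and this is not the FlockGPT implementation, whose details the abstract does not give.

```python
import math


def sdf_sphere(p, radius):
    """Signed distance from point p to a sphere centred at the origin."""
    return math.sqrt(sum(c * c for c in p)) - radius


def sdf_box(p, half_extents):
    """Signed distance from point p to an axis-aligned box centred at
    the origin, given its half-extents along x, y, and z."""
    # Per-axis offset from the box faces (negative when inside on that axis).
    q = [abs(c) - h for c, h in zip(p, half_extents)]
    # Distance to the box for points outside it.
    outside = math.sqrt(sum(max(v, 0.0) ** 2 for v in q))
    # Negative depth to the nearest face for points inside it.
    inside = min(max(q), 0.0)
    return outside + inside


if __name__ == "__main__":
    cube = (1.0, 1.0, 1.0)  # a cube with 2 m edges
    for point in [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]:
        print(point, sdf_box(point, cube))
```

For the three sample points the box SDF evaluates to -1.0 (centre), 0.0 (on a face), and 1.0 (one metre outside), which is the sign pattern a flocking controller could use to pull agents onto the target surface.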

DNFOMP: Dynamic Neural Field Optimal Motion Planner for Navigation of Autonomous Robots in Cluttered Environment

Aug 07, 2023

Abstract: Motion planning in dynamically changing environments is one of the most complex challenges in autonomous driving. Safety is a crucial requirement, along with driving comfort and speed limits. While classical sampling-based, lattice-based, and optimization-based planning methods can generate smooth and short paths, they often do not consider the dynamics of the environment. Some techniques do consider it, but they rely on updating the environment on-the-go rather than explicitly accounting for the dynamics, which is not suitable for self-driving. To address this, we propose a novel method based on the Neural Field Optimal Motion Planner (NFOMP), which outperforms state-of-the-art approaches in terms of normalized curvature and the number of cusps. Our approach embeds previously known moving obstacles into the neural field collision model to account for the dynamics of the environment. We also introduce time profiling of the trajectory and non-linear velocity constraints by adding Lagrange multipliers to the trajectory loss function. We applied our method to solve the optimal motion planning problem in an urban environment using the BeamNG.tech driving simulator. An autonomous car drove the generated trajectories in three city scenarios while sharing the road with the obstacle vehicle. Our evaluation shows that the maximum acceleration the passenger can experience instantly is -7.5 m/s^2 and that 89.6% of the driving time is devoted to normal driving with accelerations below 3.5 m/s^2. The driving style is characterized by 46.0% and 31.4% of the driving time being devoted to the light rail transit style and the moderate driving style, respectively.

MorphoLander: Reinforcement Learning Based Landing of a Group of Drones on the Adaptive Morphogenetic UAV

Jul 28, 2023

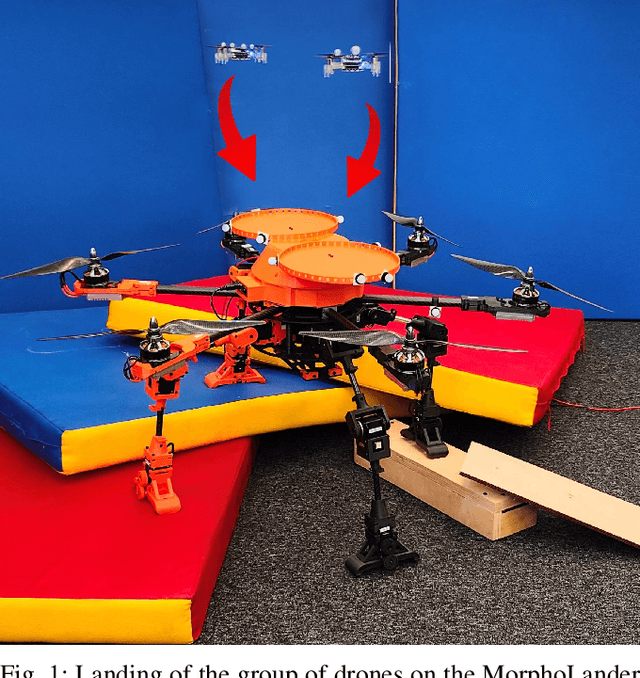

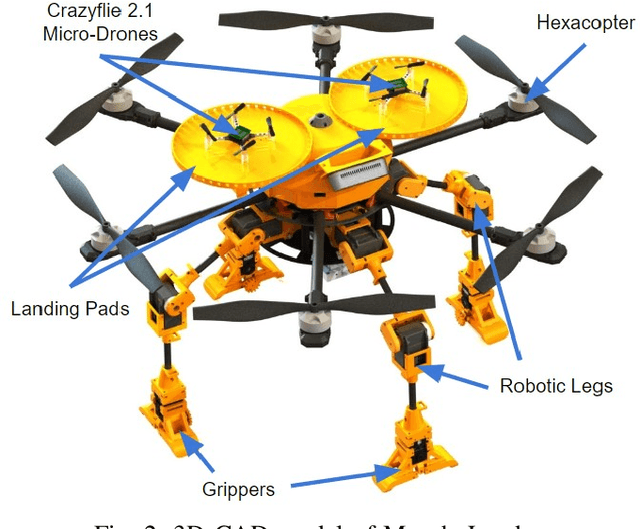

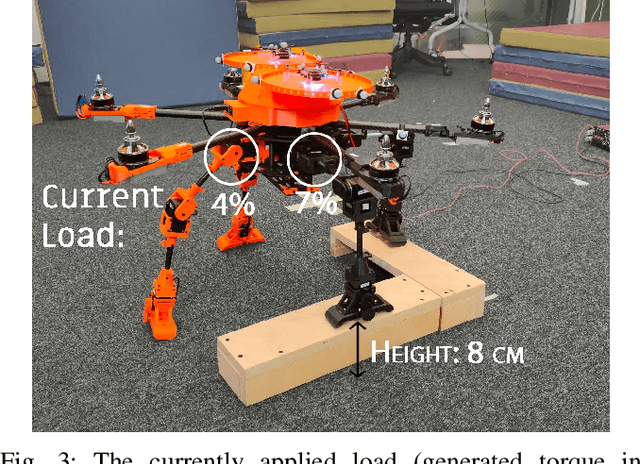

Abstract: This paper focuses on a novel robotic system, MorphoLander, representing a heterogeneous swarm of drones for exploring rough terrain environments. The morphogenetic leader drone is capable of landing on uneven terrain, traversing it, and maintaining horizontal position to deploy smaller drones for extensive area exploration. After completing their tasks, these drones return and land back on the landing pads of MorphoGear. The reinforcement learning algorithm was developed for a precise landing of drones on the leader robot that either remains static during their mission or relocates to the new position. Several experiments were conducted to evaluate the performance of the developed landing algorithm under both even and uneven terrain conditions. The experiments revealed that the proposed system achieves a high landing accuracy of 0.5 cm when landing on the leader drone under even terrain conditions and 2.35 cm under uneven terrain conditions. MorphoLander has the potential to significantly enhance the efficiency of industrial inspections, seismic surveys, and rescue missions in highly cluttered and unstructured environments.
