Daria Trinitatova

FiDTouch: A 3D Wearable Haptic Display for the Finger Pad

Jul 10, 2025

Abstract: Fingertip haptic devices are now used in fields ranging from virtual reality and medical training simulation to remote robotic operation. They show great potential for enhancing user experience, improving training outcomes, and enabling new forms of interaction. In this work, we present FiDTouch, a 3D wearable haptic device that delivers cutaneous stimuli to the finger pad, such as contact, pressure, encounter, skin stretch, and vibrotactile feedback. Using a tiny inverted Delta robot in the mechanism design enables accurate contact and rapidly changing dynamic stimuli on the finger pad surface. The performance of the display was evaluated in a two-stage user study on the perception of static spatial contact stimuli and skin stretch stimuli generated on the finger pad. By providing users with precise touch and force stimuli, the proposed display can enhance user immersion and efficiency in human-computer and human-robot interaction.
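The abstract does not describe the Delta mechanism's kinematics. As a hedged illustration only, the sketch below implements the textbook inverse kinematics of a rotary-actuated Delta robot with three arms spaced 120° apart. Several things here are assumptions, not details from the paper: the rotary actuation, the geometry constants `F`, `E`, `RF`, `RE`, and the function names. For an inverted mounting, the z axis would be mirrored before the call.

```python
import math

# Hypothetical geometry in millimetres (NOT the FiDTouch dimensions).
F = 30.0   # side length of the fixed base triangle
E = 15.0   # side length of the moving effector triangle
RF = 12.0  # length of each actuated upper arm
RE = 25.0  # length of each parallelogram forearm
TAN30 = 1.0 / math.sqrt(3.0)


def _arm_angle_yz(x0, y0, z0):
    """Angle in degrees of the arm lying in the YZ plane, or None if unreachable."""
    y1 = -0.5 * TAN30 * F  # base joint of this arm, on the -Y axis
    y0 -= 0.5 * TAN30 * E  # shift from effector centre to its edge joint
    # In the arm's plane the elbow lies on a circle of radius RF around the base
    # joint and at distance RE from the effector joint; substituting z = a + b*y
    # reduces the intersection to a quadratic in y.
    a = (x0 * x0 + y0 * y0 + z0 * z0 + RF * RF - RE * RE - y1 * y1) / (2.0 * z0)
    b = (y1 - y0) / z0
    disc = -(a + b * y1) ** 2 + RF * RF * (b * b + 1.0)
    if disc < 0:
        return None  # target lies outside the workspace
    yj = (y1 - a * b - math.sqrt(disc)) / (b * b + 1.0)  # outer elbow solution
    zj = a + b * yj
    theta = math.degrees(math.atan(-zj / (y1 - yj)))
    return theta + 180.0 if yj > y1 else theta


def delta_inverse_kinematics(x, y, z):
    """Return the three actuator angles (degrees) for effector position (x, y, z).

    Assumes the conventional mounting with the effector below the base (z < 0);
    for an inverted mounting, mirror z before calling. Returns None if unreachable.
    """
    if z >= 0:
        return None
    cos120, sin120 = -0.5, math.sqrt(3.0) / 2.0
    angles = []
    # The arms are 120 degrees apart, so rotate the target into each arm's frame.
    for c, s in ((1.0, 0.0), (cos120, sin120), (cos120, -sin120)):
        theta = _arm_angle_yz(x * c + y * s, y * c - x * s, z)
        if theta is None:
            return None
        angles.append(theta)
    return tuple(angles)


print(delta_inverse_kinematics(0.0, 0.0, -20.0))  # three equal angles, about 4.93 each
```

The effector directly below the base centre yields three equal angles by symmetry. A target that is too far away returns `None` instead of raising.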

HapticVLM: VLM-Driven Texture Recognition Aimed at Intelligent Haptic Interaction

May 05, 2025

Abstract: This paper introduces HapticVLM, a novel multimodal system that integrates vision-language reasoning with deep convolutional networks to enable real-time haptic feedback. HapticVLM uses a ConvNeXt-based material recognition module to generate robust visual embeddings for accurate identification of object materials. A state-of-the-art Vision-Language Model (Qwen2-VL-2B-Instruct) infers ambient temperature from environmental cues. The system synthesizes tactile sensations by delivering vibrotactile feedback through speakers and thermal cues through a Peltier module, thereby bridging the gap between visual perception and tactile experience. Experimental evaluations demonstrate an average recognition accuracy of 84.67% across five distinct auditory-tactile patterns. They also show a temperature estimation accuracy of 86.7% across 15 scenarios, using a tolerance-based evaluation with an 8°C margin of error. Although promising, the current study is limited to a small set of prominent patterns and a modest participant pool. Future work will focus on expanding the range of tactile patterns and conducting larger user studies to further refine and validate the system's performance. Overall, HapticVLM is a significant step toward context-aware, multimodal haptic interaction, with potential applications in virtual reality and assistive technologies.
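The tolerance-based temperature metric counts an estimate as correct when it falls within ±8°C of the reference. Over 15 scenarios, 86.7% corresponds to 13 correct estimates (13/15 ≈ 0.867). The sketch below is a minimal version of such a metric. Two things are assumptions: the inclusive boundary (`<=`) and the function name. The temperatures in the usage lines are hypothetical, not study data.

```python
def tolerance_accuracy(predicted, reference, margin=8.0):
    """Share of estimates within +/- margin (deg C) of the reference temperature.

    Whether the boundary is inclusive is an assumption (<= is used here).
    """
    if not predicted or len(predicted) != len(reference):
        raise ValueError("expected two non-empty sequences of equal length")
    hits = sum(1 for p, r in zip(predicted, reference) if abs(p - r) <= margin)
    return hits / len(predicted)


# Hypothetical data: 13 of 15 estimates fall within the 8 deg C margin.
estimates = [20.0] * 13 + [40.0, 0.0]
ground_truth = [25.0] * 15
print(f"{tolerance_accuracy(estimates, ground_truth):.1%}")  # 86.7%
```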

Towards Intuitive Drone Operation Using a Handheld Motion Controller

Apr 13, 2025

Abstract: We present an intuitive human-drone interaction system that uses a gesture-based motion controller to enhance the drone operation experience in real and simulated environments. The handheld motion controller enables natural control of the drone through movements of the operator's hand, thumb, and index finger:

- the trigger press manages the throttle;
- the tilt of the hand adjusts pitch and roll;
- the thumbstick controls yaw rotation.

Communication with the drone uses the ExpressLRS radio protocol, ensuring robust connectivity across various frequencies. A user evaluation of the flight experience with the designed controller used the UEQ-S survey. It showed high scores for both Pragmatic (mean = 2.2, SD = 0.8) and Hedonic (mean = 2.3, SD = 0.9) Quality. This versatile control interface supports applications such as research, drone racing, and training programs in real and simulated environments, contributing to advances in human-drone interaction.
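The control mapping described above (trigger to throttle, hand tilt to roll and pitch, thumbstick to yaw) can be sketched as a conversion from controller readings to RC channel values. This is a minimal illustration under stated assumptions, not the authors' implementation:

- `MAX_TILT_DEG` is a hypothetical tilt limit.
- The 1000 to 2000 µs pulse range is a common RC convention.
- The (roll, pitch, throttle, yaw) order is an assumed AETR-style layout.
- `ControllerState` and `to_channels` are hypothetical names.
- Encoding the values for the ExpressLRS link is not shown.

```python
from dataclasses import dataclass

# Hypothetical parameters; the abstract does not specify them.
MAX_TILT_DEG = 45.0                           # hand tilt that maps to full roll/pitch
PWM_MIN, PWM_MID, PWM_MAX = 1000, 1500, 2000  # conventional RC pulse widths (us)


@dataclass
class ControllerState:
    trigger: float          # 0.0 (released) .. 1.0 (fully pressed)
    hand_roll_deg: float    # tilt of the hand to the left/right
    hand_pitch_deg: float   # tilt of the hand forward/backward
    thumbstick_x: float     # -1.0 .. 1.0


def _clamp(value, lo, hi):
    return max(lo, min(hi, value))


def _centred(normalized):
    """Map [-1, 1] to a pulse width centred on PWM_MID."""
    return round(PWM_MID + _clamp(normalized, -1.0, 1.0) * (PWM_MAX - PWM_MID))


def to_channels(state):
    """Return (roll, pitch, throttle, yaw) channel values in an AETR-style order."""
    throttle = round(PWM_MIN + _clamp(state.trigger, 0.0, 1.0) * (PWM_MAX - PWM_MIN))
    roll = _centred(state.hand_roll_deg / MAX_TILT_DEG)
    pitch = _centred(state.hand_pitch_deg / MAX_TILT_DEG)
    yaw = _centred(state.thumbstick_x)
    return roll, pitch, throttle, yaw


print(to_channels(ControllerState(0.5, -45.0, 22.5, 0.0)))  # (1000, 1750, 1500, 1500)
```

Clamping keeps an over-tilted hand from producing out-of-range commands; any tilt beyond `MAX_TILT_DEG` simply saturates the channel.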

FlightAR: AR Flight Assistance Interface with Multiple Video Streams and Object Detection Aimed at Immersive Drone Control

Oct 22, 2024

Abstract: The swift advancement of unmanned aerial vehicle (UAV) technologies calls for new standards in developing human-drone interaction (HDI) interfaces. Most HDI interfaces, especially first-person view (FPV) goggles, limit the operator's ability to obtain information from the surrounding environment. This paper presents FlightAR, a novel interface that integrates augmented reality (AR) overlays of the UAV's FPV and bottom camera feeds into a head-mounted display (HMD) to enhance the pilot's situational awareness. FlightAR provides pilots not only with video streams from several UAV cameras simultaneously, but also with the ability to observe their surroundings in real time. User evaluation with the NASA-TLX and UEQ surveys showed low physical demand (μ = 1.8, SD = 0.8) and good performance (μ = 3.4, SD = 0.8), with better user assessments than baseline FPV goggles. Participants also rated the system highly for stimulation (μ = 2.35, SD = 0.9), novelty (μ = 2.1, SD = 0.9), and attractiveness (μ = 1.97, SD = 1), indicating positive user experiences. These results demonstrate the potential of the system to improve the UAV piloting experience through enhanced situational awareness and intuitive control. The code is available here: https://github.com/Sautenich/FlightAR

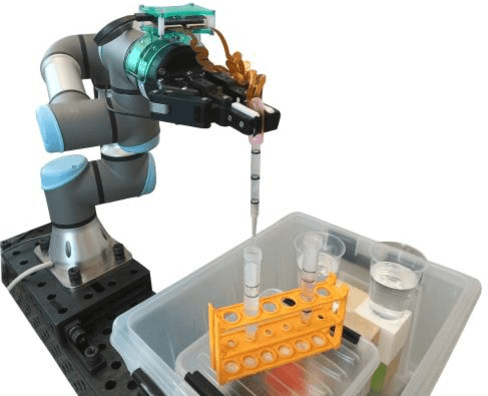

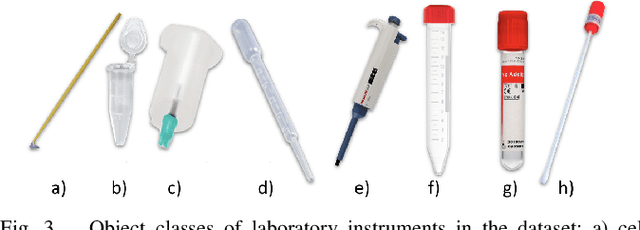

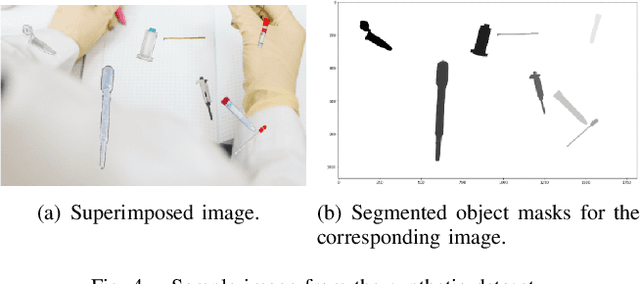

Robotic framework for autonomous manipulation of laboratory equipment with different degrees of transparency via 6D pose estimation

Oct 10, 2024

Abstract: Many modern robotic systems operate autonomously; however, they often lack the ability to accurately analyze the environment and adapt to changing external conditions, while teleoperation systems often require special operator skills. In laboratory automation, the number of automated processes is growing, but such systems are usually developed to perform specific tasks. In addition, many of the objects used in this field are transparent, which makes them difficult to analyze through visual channels. The contributions of this work include a robotic framework with an autonomous mode for manipulating liquid-filled objects with different degrees of transparency in complex pose combinations. The experiments demonstrated that the designed visual perception system robustly and accurately estimates object poses for autonomous manipulation. They also confirmed the performance of the algorithms in dexterous operations such as liquid dispensing. The proposed framework can be applied to laboratory automation. It solves non-trivial manipulation tasks that require analyzing the poses and liquid levels of objects with varying degrees of transparency, with high accuracy and repeatability.
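Once the 6D pose (3D position plus 3D orientation) of a vessel has been estimated in the camera frame, it has to be expressed in the robot base frame before grasping. The sketch below shows this standard step by chaining 4×4 homogeneous transforms. Several things are hypothetical: the calibration values, the helper names, and the use of rotation about z only (chosen for brevity). The framework's actual pose-estimation pipeline is not reproduced here.

```python
import math


def rot_z(deg):
    """3x3 rotation about the z axis (a real extrinsic generally rotates about all axes)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def homogeneous(rotation, translation):
    """Build a 4x4 transform from a 3x3 rotation and a 3-vector translation."""
    rows = [list(rotation[i]) + [translation[i]] for i in range(3)]
    return rows + [[0.0, 0.0, 0.0, 1.0]]


def compose(a, b):
    """Matrix product a @ b of two 4x4 transforms."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


# Hypothetical calibration: camera offset (0.5, 0, 1.0) m from the base,
# rotated 90 degrees about z. T_cam_obj stands in for the pose estimator output.
T_base_cam = homogeneous(rot_z(90.0), [0.5, 0.0, 1.0])
T_cam_obj = homogeneous(rot_z(0.0), [0.2, 0.0, 0.0])
T_base_obj = compose(T_base_cam, T_cam_obj)
position = [round(T_base_obj[i][3], 6) for i in range(3)]
print(position)  # [0.5, 0.2, 1.0]
```

The order matters: base-from-camera is applied to camera-from-object, so the object's 0.2 m offset along the camera x axis appears along the base y axis after the 90° rotation.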

VR-GPT: Visual Language Model for Intelligent Virtual Reality Applications

May 19, 2024

Abstract: The advent of immersive Virtual Reality (VR) applications has transformed various domains. Yet their integration with advanced artificial intelligence technologies such as Visual Language Models (VLMs) remains underexplored. This study introduces a pioneering approach that uses VLMs within VR environments to enhance user interaction and task efficiency. Built on the Unity engine and a custom-developed VLM, our system facilitates real-time, intuitive user interaction through natural language processing, without relying on visual text instructions. Speech-to-text and text-to-speech technologies enable seamless communication between the user and the VLM, allowing the system to guide users through complex tasks effectively. Preliminary experimental results indicate that using VLMs not only reduces task completion times but also improves user comfort and task engagement compared with traditional VR interaction methods.

AirTouch: Towards Safe Human-Robot Interaction Using Air Pressure Feedback and IR Mocap System

Jul 31, 2023

Abstract: The growing use of robots in urban environments has raised concerns about potential safety hazards, especially in public spaces where humans and robots may interact. In this paper, we present a system for safe human-robot interaction that combines an infrared (IR) camera and a wearable marker with an airflow potential field. IR cameras enable real-time detection and tracking of humans in challenging environments. Controlled airflow creates a physical barrier that guides humans away from dangerous proximity to robots without the need for wearable devices. A preliminary experiment measured how accurately participants perceived safety barriers rendered by controlled air pressure. In a second experiment, we evaluated our approach in a scenario imitating an interaction between an inattentive person and an autonomous robotic system. Experimental results show that the proposed system significantly improves a participant's ability to maintain a safe distance from the operating robot compared with trials without the system.
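The abstract refers to an "airflow potential field" without giving its form. One classical way to shape such a field is the repulsive term from artificial potential field methods. Its magnitude is η(1/d − 1/d₀)/d² inside an influence radius d₀ and zero outside it, so repulsion grows as the tracked person approaches. This form is an assumption, not necessarily the one used in the paper. The values of `d0` and `d_min`, the normalization to a fan command, and the function names are all hypothetical.

```python
def repulsive_magnitude(d, d0, eta=1.0):
    """Magnitude of the classical repulsive potential-field force at distance d.

    Zero outside the influence radius d0; grows without bound as d -> 0.
    """
    if d >= d0:
        return 0.0
    return eta * (1.0 / d - 1.0 / d0) / (d * d)


def airflow_command(d, d0=1.5, d_min=0.3):
    """Normalized fan command in [0, 1] for a person at distance d (metres).

    d0 (influence radius) and d_min (distance of full airflow) are hypothetical.
    """
    if not 0.0 < d_min < d0:
        raise ValueError("require 0 < d_min < d0")
    if d <= d_min:
        return 1.0
    # The magnitude decreases monotonically for d < d0, so this ratio stays <= 1.
    return repulsive_magnitude(d, d0) / repulsive_magnitude(d_min, d0)


for distance in (2.0, 1.0, 0.5, 0.2):
    print(distance, round(airflow_command(distance), 3))
```

The steep 1/d³-like growth near the robot makes the barrier feel soft at the edge of the zone and strong close in; a linear ramp would be a simpler alternative.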

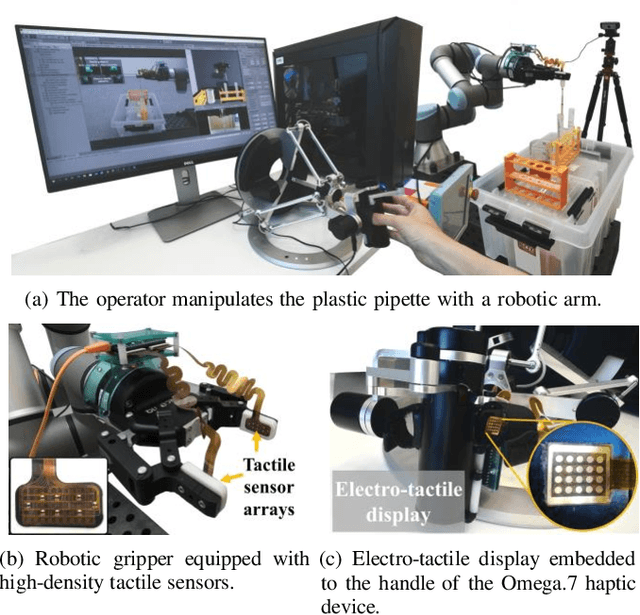

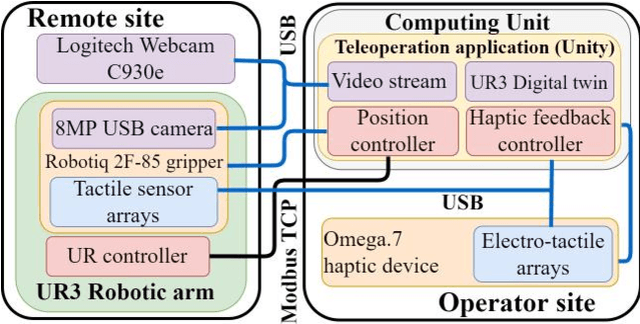

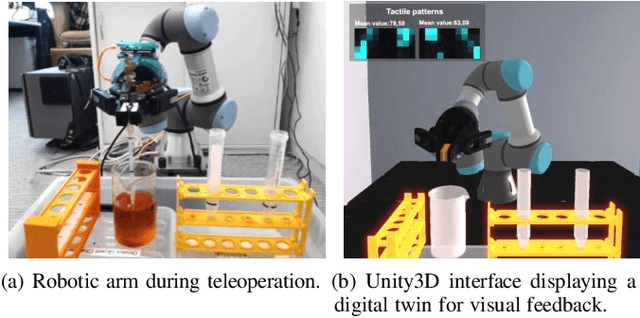

Exploring the Role of Electro-Tactile and Kinesthetic Feedback in Telemanipulation Task

Aug 30, 2022

Abstract: Teleoperation of robotic systems for precise and delicate object grasping requires high-fidelity haptic feedback to obtain comprehensive real-time information about the grasp. In such cases, the most common approach is to use kinesthetic feedback. However, information from a single contact point is insufficient to detect the dynamically changing shape of soft objects. This paper proposes a novel telemanipulation system that provides kinesthetic and cutaneous stimuli to the user's hand to achieve accurate liquid dispensing by dexterously manipulating a deformable object (a pipette). The experimental results revealed that providing the user with multimodal haptic feedback considerably improves the quality of dosing with a remote pipette. Compared with visual feedback alone, relative dosing error decreased by 66% and task execution time by 18% when users combined the multimodal haptic interface with visual feedback to manipulate the deformable pipette. The proposed technology could potentially be used in delicate dosing procedures such as antibody tests for COVID-19, chemical experiments, work with organic materials, and telesurgery.
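The 66% figure is a relative reduction in relative dosing error. The abstract does not spell out the definitions, so the sketch below assumes the usual ones:

- relative error = |dispensed − target| / target;
- relative reduction = (baseline − improved) / baseline.

The function names are hypothetical, and the volumes are invented for illustration, not study data.

```python
def relative_dosing_error(dispensed_ul, target_ul):
    """Absolute deviation from the target volume, as a fraction of the target."""
    if target_ul <= 0:
        raise ValueError("target volume must be positive")
    return abs(dispensed_ul - target_ul) / target_ul


def relative_reduction(baseline, improved):
    """Fractional decrease from a baseline value (0.66 means 66% lower)."""
    return (baseline - improved) / baseline


# Hypothetical trials (not study data): 100 uL target volume.
visual_only = relative_dosing_error(125.0, 100.0)   # 0.25
multimodal = relative_dosing_error(108.5, 100.0)    # 0.085
print(f"{relative_reduction(visual_only, multimodal):.0%}")  # 66%
```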

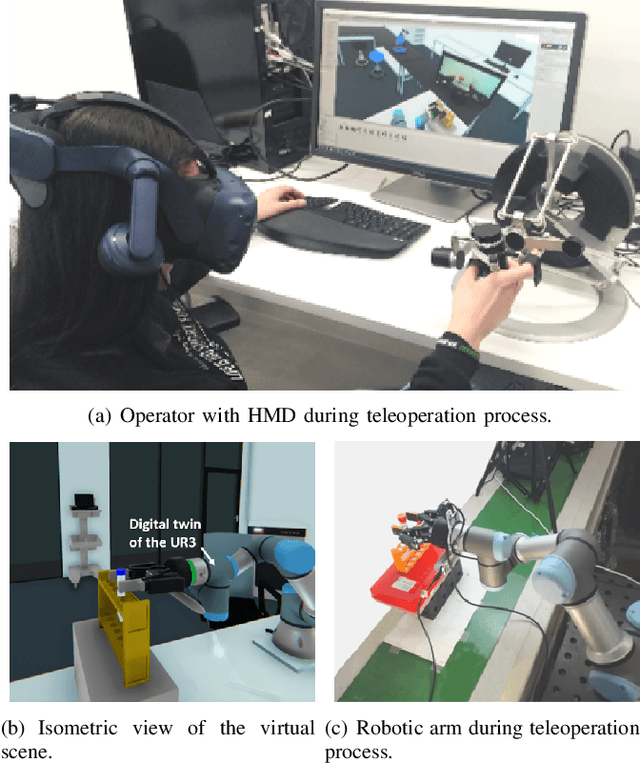

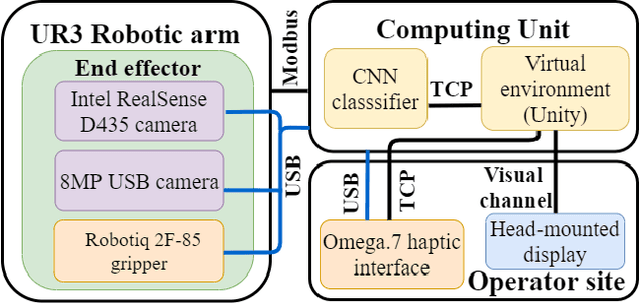

GraspLook: a VR-based Telemanipulation System with R-CNN-driven Augmentation of Virtual Environment

Oct 24, 2021

Abstract: The teleoperation of robotic systems in medical applications requires stable and convenient visual feedback for the operator. The most accessible way to deliver visual information from a remote area is to use cameras that transmit a video stream of the environment. However, such systems are sensitive to camera resolution, limited viewpoints, and cluttered environments, which place additional mental demands on the human operator. This paper proposes a novel teleoperation system based on an augmented virtual environment (VE). A region-based convolutional neural network (R-CNN) detects the laboratory instrument and estimates its position in the remote environment. Its digital twin can then be displayed in the VE, which is necessary for dexterous telemanipulation. The experimental results revealed that the developed system allows users to operate the robot more smoothly, which decreases task execution time when manipulating test tubes. In addition, participants rated the developed system as less mentally demanding (by 11%) and requiring less effort (by 16%) to accomplish the task than the camera-based teleoperation approach. They also rated their own performance in the augmented VE highly. The proposed technology could potentially be applied to conducting laboratory tests in remote areas when working with infectious and poisonous reagents.
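An R-CNN returns a 2D bounding box, so placing a digital twin requires lifting the detection into 3D. One common approach back-projects the box centre through a pinhole camera model at a known depth. This is a hedged sketch, not the paper's method: the depth source (e.g. a depth sensor), the camera intrinsics `fx`, `fy`, `cx`, `cy`, the box coordinates, and the function names are all assumptions.

```python
def bbox_centre(box):
    """Centre (u, v) in pixels of an (x1, y1, x2, y2) bounding box."""
    x1, y1, x2, y2 = box
    return (x1 + x2) / 2.0, (y1 + y2) / 2.0


def back_project(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) at a known depth to camera XYZ."""
    return (u - cx) * depth / fx, (v - cy) * depth / fy, depth


# Hypothetical intrinsics and detection; depth is assumed to come from a depth sensor.
u, v = bbox_centre((300, 200, 380, 280))
print(back_project(u, v, 0.5, fx=600.0, fy=600.0, cx=320.0, cy=240.0))  # x is about 0.0167 m
```

The resulting camera-frame point would still need the camera-to-world transform before the twin can be placed in the VE.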

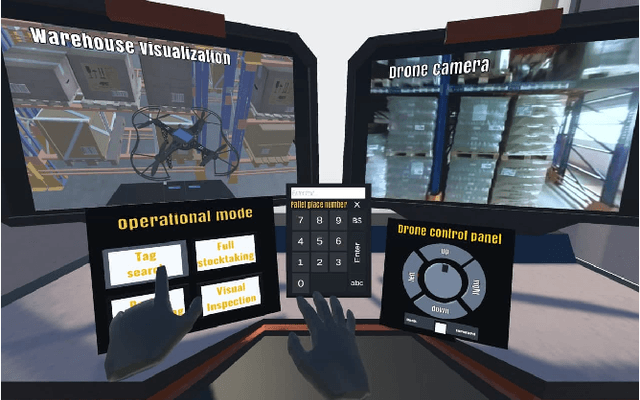

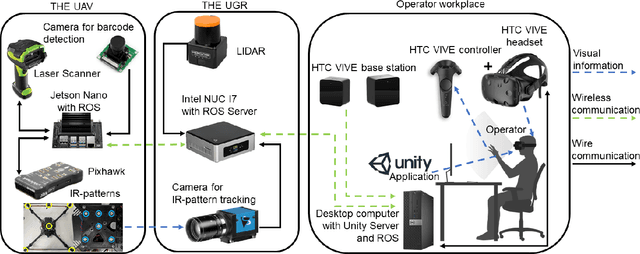

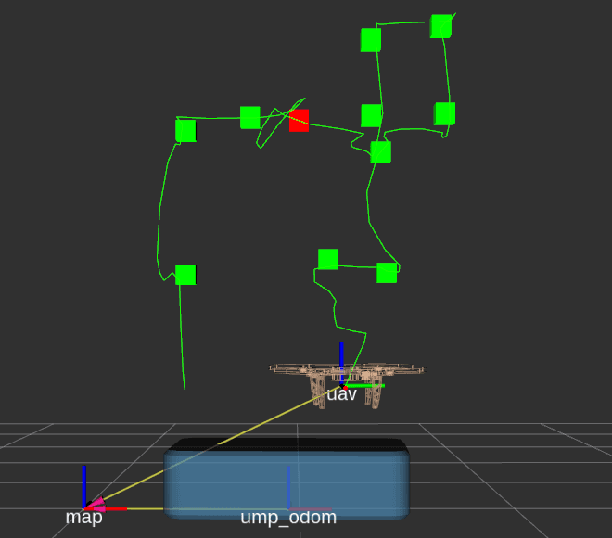

WareVR: Virtual Reality Interface for Supervision of Autonomous Robotic System Aimed at Warehouse Stocktaking

Oct 21, 2021

Abstract: WareVR is a novel human-robot interface based on a virtual reality (VR) application for interacting with a heterogeneous robotic system for automated inventory management. We created an interface for remotely supervising an autonomous robot from a secluded workstation in a warehouse. Such an interface could be beneficial during the current COVID-19 pandemic, since stocktaking is a necessary, regular warehouse process that involves a group of people. The proposed interface allows regular warehouse workers without experience in robotics to control the heterogeneous robotic system, which consists of an unmanned ground vehicle (UGV) and an unmanned aerial vehicle (UAV). WareVR visualizes the robotic system in a digital twin of the warehouse, accompanied by a real-time video stream of the real environment from an on-board UAV camera. Using the WareVR interface, the operator can conduct different levels of stocktaking, monitor the inventory process remotely, and teleoperate the drone for more detailed inspection. In addition, the interface includes remote control of the UAV for intuitive and straightforward human interaction with the autonomous robot during stocktaking. The effectiveness of the VR-based interface was evaluated through a user study in a "visual inspection" scenario.
