Ivan Kalinov

GHACPP: Genetic-based Human-Aware Coverage Path Planning Algorithm for Autonomous Disinfection Robot

Jul 17, 2023

Abstract:Numerous mobile robots with mounted Ultraviolet-C (UV-C) lamps have been developed recently, yet they cannot work in the same space as humans without irradiating them with UV-C. This paper proposes a novel modular and scalable Human-Aware Genetic-based Coverage Path Planning algorithm (GHACPP) that aims to disinfect unknown environments by UV-C irradiation while preventing harm to human eyes and skin. The proposed genetic-based algorithm alternates between the stages of exploring a new area, generating parts of the resulting disinfection trajectory, called mini-trajectories, and updating the current state around the robot. The system's effectiveness and human safety are validated and compared with one of the latest state-of-the-art online coverage path planning algorithms, SimExCoverage-STC. The experimental results confirmed both a high level of safety for humans and the efficiency of the developed algorithm: compared with the state-of-the-art approach, it reduces path length (by 37.1%), the number (39.5%) and size (35.2%) of turns, and the time (7.6%) to complete the disinfection task, with a small loss in the percentage of area covered (0.6%).

POA: Passable Obstacles Aware Path-planning Algorithm for Navigation of a Two-wheeled Robot in Highly Cluttered Environments

Jul 16, 2023

Abstract:This paper focuses on the Passable Obstacles Aware (POA) planner, a novel navigation method for two-wheeled robots in highly cluttered environments. The navigation algorithm detects and classifies objects to distinguish two types of obstacles: passable and unpassable. Our algorithm allows two-wheeled robots to find a path through passable obstacles. Such a solution helps the robot work in areas inaccessible to standard path planners and find optimal trajectories in scenarios with a high number of objects in the robot's vicinity. The POA planner can be embedded into other planning algorithms and enables them to build a path through obstacles. Our method decreases path length and the total travel time to the final destination by up to 43% and 39%, respectively, compared with standard path planners such as GVD, A*, and RRT*.
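The idea of planning through passable obstacles can be illustrated with a toy grid search. The sketch below is not the POA planner itself: it is a plain Dijkstra search on a 4-connected grid, and the cell labels, the `passable_cost` penalty, and the `allow_passable` switch are all assumptions introduced for illustration. Setting `allow_passable=False` mimics a standard planner that treats every obstacle as blocking.

```python
import heapq

# Hypothetical cell labels: free space, passable obstacle, unpassable obstacle.
FREE, PASSABLE, BLOCKED = ".", "p", "#"


def plan(grid, start, goal, passable_cost=3.0, allow_passable=True):
    """Dijkstra on a 4-connected grid. Returns (cost, path) or (inf, [])."""
    rows, cols = len(grid), len(grid[0])
    best = {start: 0.0}
    parent = {}
    queue = [(0.0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            # Walk the parent links back to the start to rebuild the path.
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return cost, path[::-1]
        if cost > best.get(cell, float("inf")):
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            kind = grid[nr][nc]
            if kind == BLOCKED or (kind == PASSABLE and not allow_passable):
                continue
            # Entering a passable obstacle is allowed but penalised.
            step = passable_cost if kind == PASSABLE else 1.0
            new_cost = cost + step
            if new_cost < best.get((nr, nc), float("inf")):
                best[(nr, nc)] = new_cost
                parent[(nr, nc)] = cell
                heapq.heappush(queue, (new_cost, (nr, nc)))
    return float("inf"), []


GRID = [
    "..#..",
    "..p..",
    "..#..",
    ".....",
]
START, GOAL = (1, 0), (1, 4)

cost_through, path_through = plan(GRID, START, GOAL, allow_passable=True)
cost_around, path_around = plan(GRID, START, GOAL, allow_passable=False)
print(len(path_through), len(path_around))
```

In this toy map the obstacle-aware search goes straight through the `p` cell, while the conventional search has to detour along the bottom row. The penalty value controls how readily the planner pushes through an obstacle instead of going around it.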

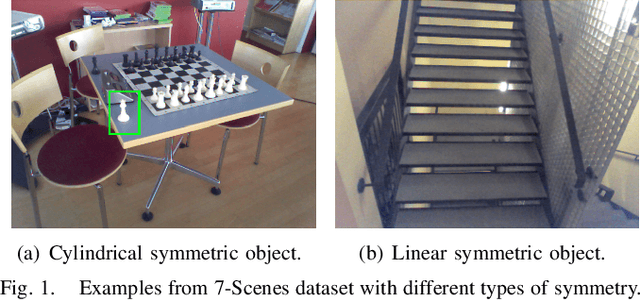

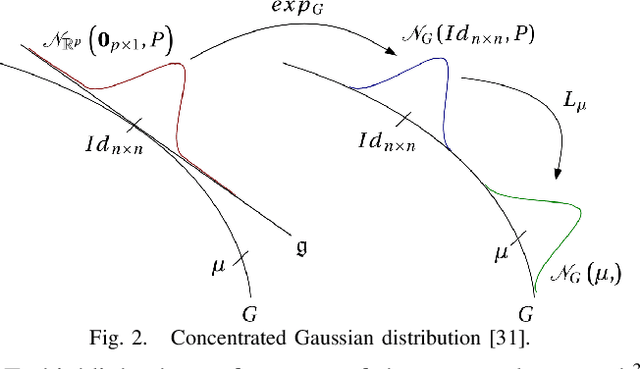

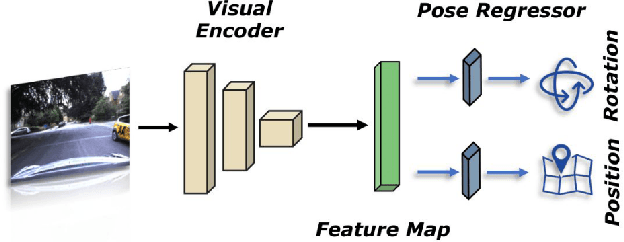

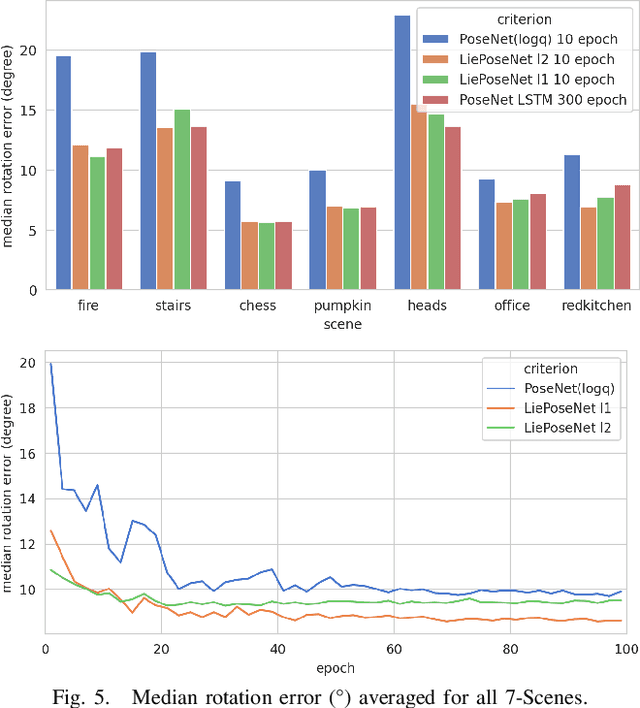

LiePoseNet: Heterogeneous Loss Function Based on Lie Group for Significant Speed-up of PoseNet Training Process

Nov 15, 2022

Abstract:Visual localization is an essential modern technology for robotics and computer vision. Popular approaches for solving this task are image-based methods. Nowadays, these methods have low accuracy and a long training time. The reasons are the lack of rigid-body and projective geometry awareness, landmark symmetry, and homogeneous error assumption. We propose a heterogeneous loss function based on concentrated Gaussian distribution with the Lie group to overcome these difficulties. Following our experiment, the proposed method allows us to speed up the training process significantly (from 300 to 10 epochs) with acceptable error values.

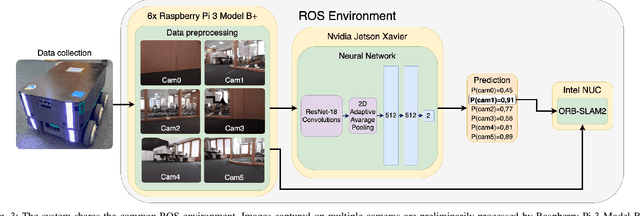

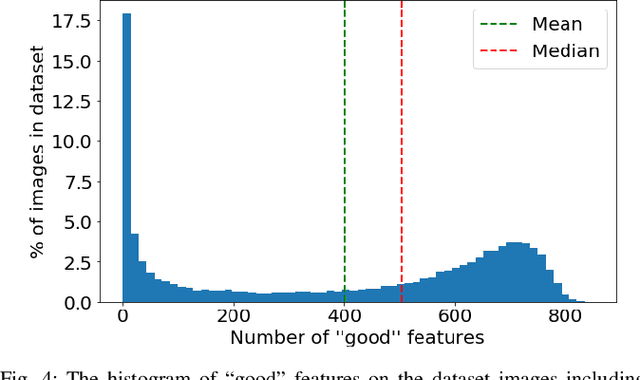

MuCaSLAM: CNN-Based Frame Quality Assessment for Mobile Robot with Omnidirectional Visual SLAM

Sep 05, 2022

Abstract:In the proposed study, we describe an approach to improving the computational efficiency and robustness of visual SLAM algorithms on mobile robots with multiple cameras and limited computational power by implementing an intermediate layer between the cameras and the SLAM pipeline. In this layer, the images are classified using a ResNet18-based neural network according to their applicability to robot localization. The network is trained on a six-camera dataset collected on the campus of the Skolkovo Institute of Science and Technology (Skoltech). For training, we use the images and ORB features that were successfully matched with the subsequent frame of the same camera ("good" keypoints or features). The results have shown that the network is able to accurately determine the optimal images for ORB-SLAM2, and that implementing the proposed approach in the SLAM pipeline can significantly increase the number of images the SLAM algorithm can localize on and improve the overall robustness of visual SLAM. The operation-time experiments show that the proposed approach is at least 6 times faster than using the ORB extractor and feature matcher when run on a CPU, and more than 30 times faster when run on a GPU. The network evaluation has shown at least 90% accuracy in recognizing images with a large number of "good" ORB keypoints. The proposed approach made it possible to maintain a high number of features throughout the dataset by robustly switching away from cameras with feature-poor streams.

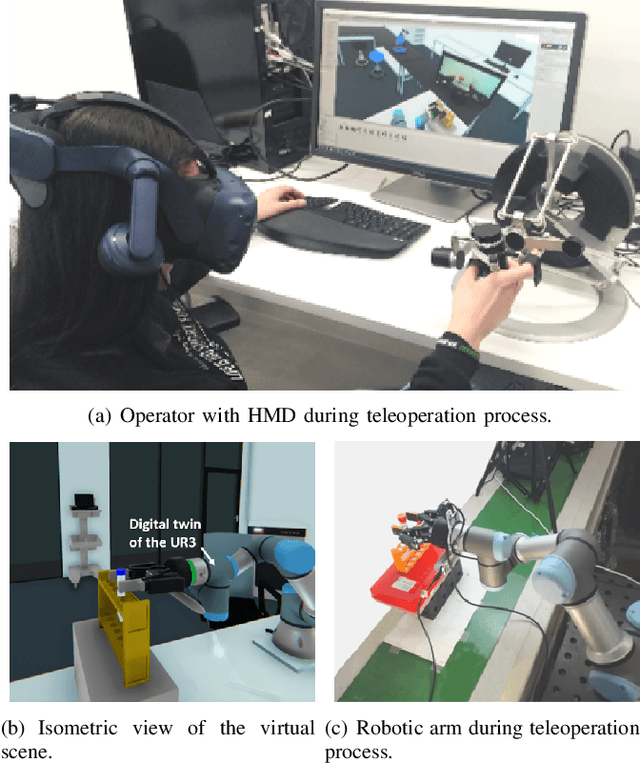

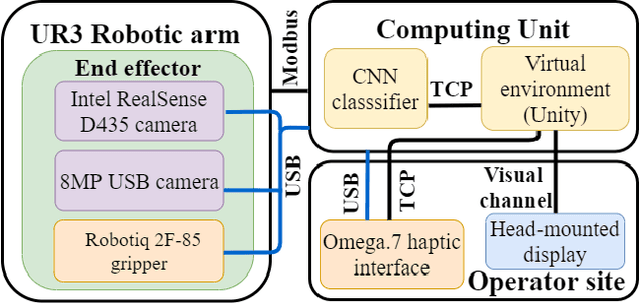

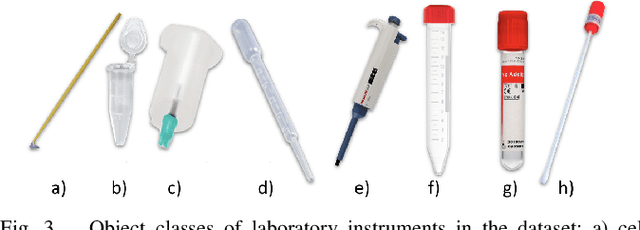

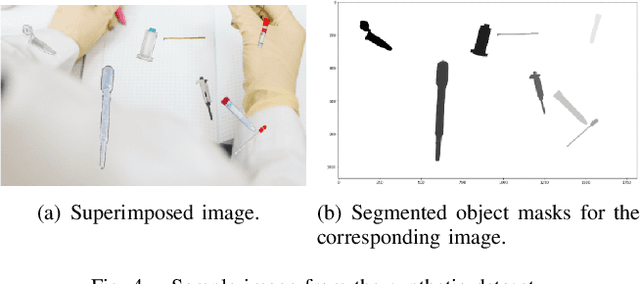

GraspLook: a VR-based Telemanipulation System with R-CNN-driven Augmentation of Virtual Environment

Oct 24, 2021

Abstract:The teleoperation of robotic systems in medical applications requires stable and convenient visual feedback for the operator. The most accessible approach to delivering visual information from the remote area is using cameras to transmit a video stream from the environment. However, such systems are sensitive to camera resolution, limited viewpoints, and cluttered environments, which place additional mental demands on the human operator. The paper proposes a novel teleoperation system based on an augmented virtual environment (VE). A region-based convolutional neural network (R-CNN) is applied to detect the laboratory instrument and estimate its position in the remote environment so that its digital twin can be displayed in the VE, which is necessary for dexterous telemanipulation. The experimental results revealed that the developed system allows users to operate the robot more smoothly, which leads to a decrease in task execution time when manipulating test tubes. In addition, the participants evaluated the developed system as less mentally demanding (by 11%) and requiring less effort (by 16%) to accomplish the task than the camera-based teleoperation approach, and rated their performance in the augmented VE highly. The proposed technology can potentially be applied to conducting laboratory tests in remote areas when working with infectious and poisonous reagents.

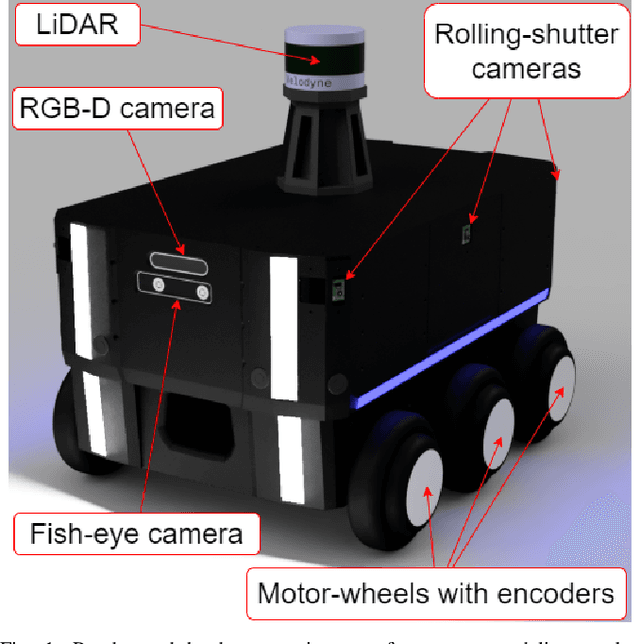

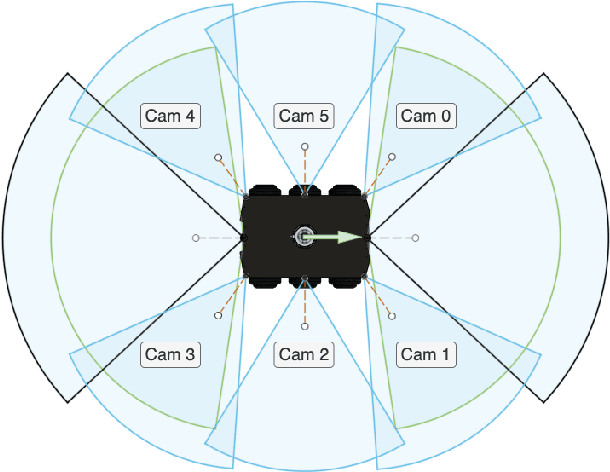

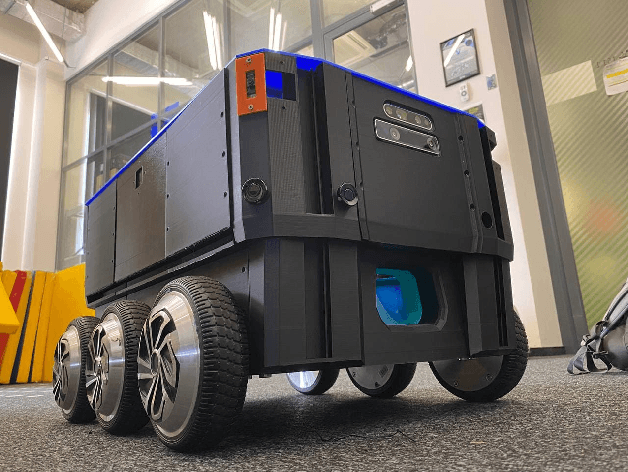

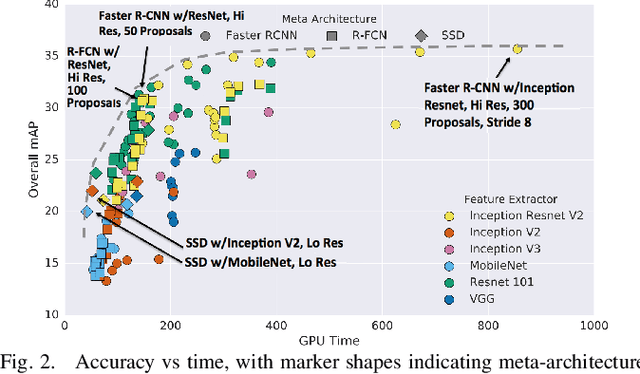

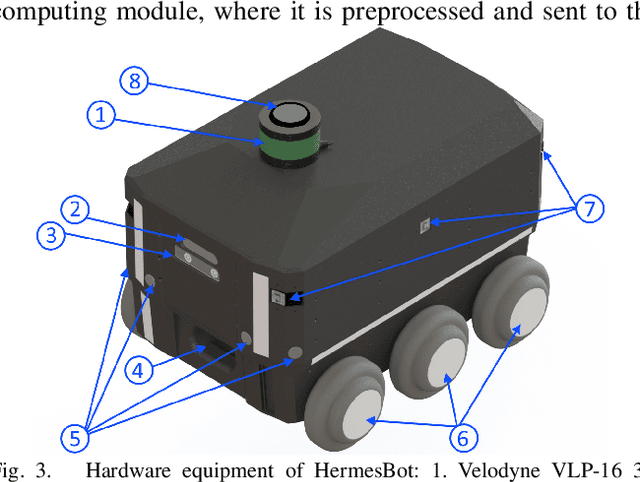

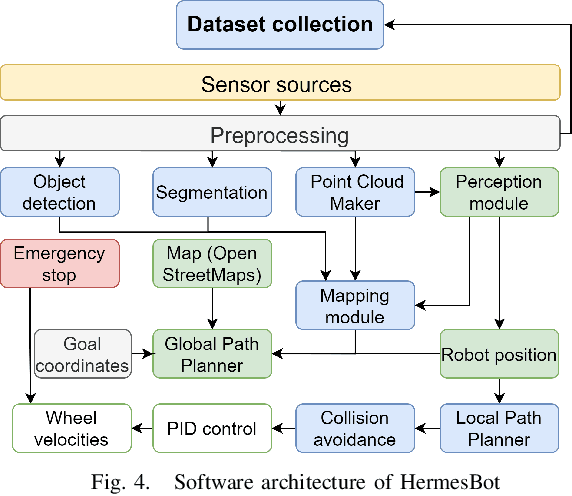

CNN-based Omnidirectional Object Detection for HermesBot Autonomous Delivery Robot with Preliminary Frame Classification

Oct 22, 2021

Abstract:Mobile autonomous robots include numerous sensors for environment perception. Cameras are an essential tool for robot localization, navigation, and obstacle avoidance. To process the large flow of data from the sensors, it is necessary either to optimize algorithms or to use substantial computational power. In our work, we propose an algorithm for optimizing a neural network for object detection using preliminary binary frame classification. An autonomous outdoor mobile robot with 6 rolling-shutter cameras on the perimeter providing a 360-degree field of view was used as the experimental setup. The experimental results revealed that the proposed optimization reduces the inference time of the neural network in cases where up to 5 out of 6 cameras contain target objects.
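Preliminary binary frame classification can be pictured as a cheap gate in front of an expensive detector. The sketch below is only a schematic of that control flow, not the authors' implementation: `detect_with_prefilter`, `toy_classifier`, and `toy_detector` are hypothetical names, and each "frame" is just a list of labels standing in for image content.

```python
def detect_with_prefilter(frames, has_target, detect):
    """Run the expensive `detect` only on frames the cheap classifier flags."""
    results = {}
    for camera_id, frame in enumerate(frames):
        if has_target(frame):
            results[camera_id] = detect(frame)
        else:
            results[camera_id] = []  # detector skipped for this camera
    return results


detector_calls = []  # records which frames reached the detector


def toy_classifier(frame):
    # Stand-in for a lightweight binary classifier (assumption).
    return bool(frame)


def toy_detector(frame):
    # Stand-in for a heavy object detector (assumption).
    detector_calls.append(frame)
    return list(frame)


# Six toy frames, one per camera; only two contain target objects.
frames = [["person"], [], [], ["car", "person"], [], []]
results = detect_with_prefilter(frames, toy_classifier, toy_detector)
print(len(detector_calls), "detector calls for", len(frames), "frames")
```

The saving depends on how many cameras see a target: when every frame passes the gate, the classifier only adds overhead, which is consistent with the abstract reporting a speed-up for up to 5 of 6 cameras containing objects.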

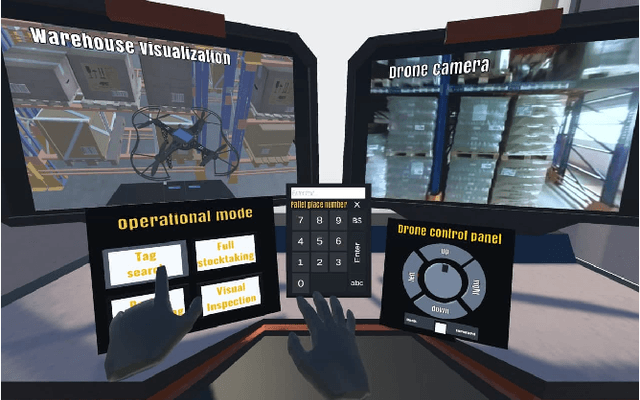

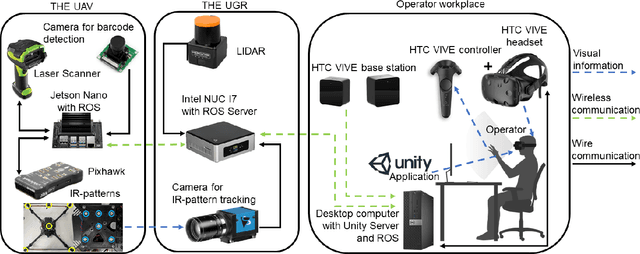

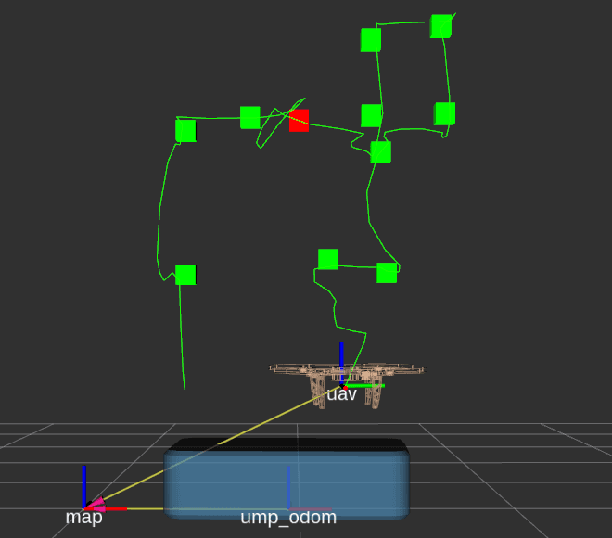

WareVR: Virtual Reality Interface for Supervision of Autonomous Robotic System Aimed at Warehouse Stocktaking

Oct 21, 2021

Abstract:WareVR is a novel human-robot interface based on a virtual reality (VR) application to interact with a heterogeneous robotic system for automated inventory management. We have created an interface to supervise an autonomous robot remotely from a secluded workstation in a warehouse, which could be beneficial during the current COVID-19 pandemic, since stocktaking is a necessary and regular process in warehouses that involves a group of people. The proposed interface allows regular warehouse workers without experience in robotics to control the heterogeneous robotic system consisting of an unmanned ground vehicle (UGV) and an unmanned aerial vehicle (UAV). WareVR provides visualization of the robotic system in a digital twin of the warehouse, accompanied by a real-time video stream from the real environment through an on-board UAV camera. Using the WareVR interface, the operator can conduct different levels of stocktaking, monitor the inventory process remotely, and teleoperate the drone for a more detailed inspection. In addition, the developed interface includes remote control of the UAV for intuitive and straightforward human interaction with the autonomous robot for stocktaking. The effectiveness of the VR-based interface was evaluated through a user study in a "visual inspection" scenario.

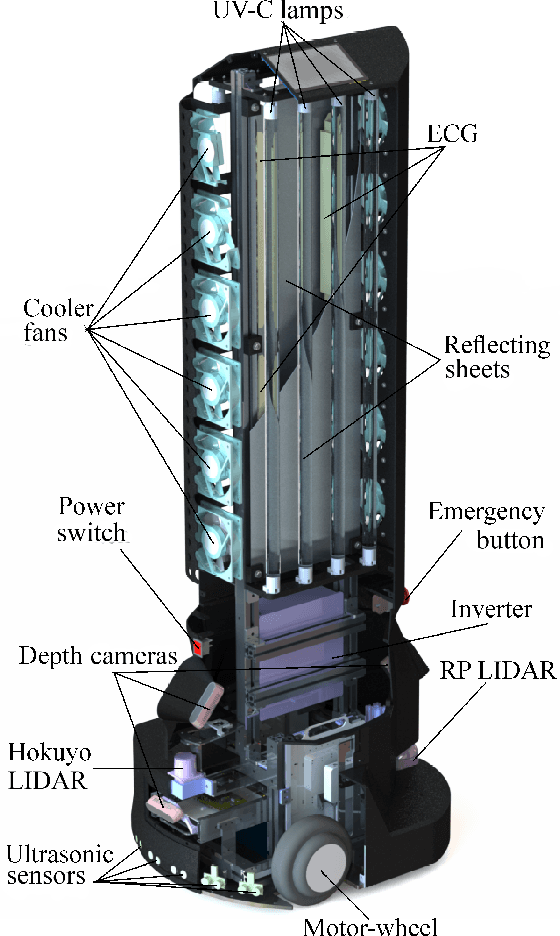

UltraBot: Autonomous Mobile Robot for Indoor UV-C Disinfection with Non-trivial Shape of Disinfection Zone

Aug 22, 2021

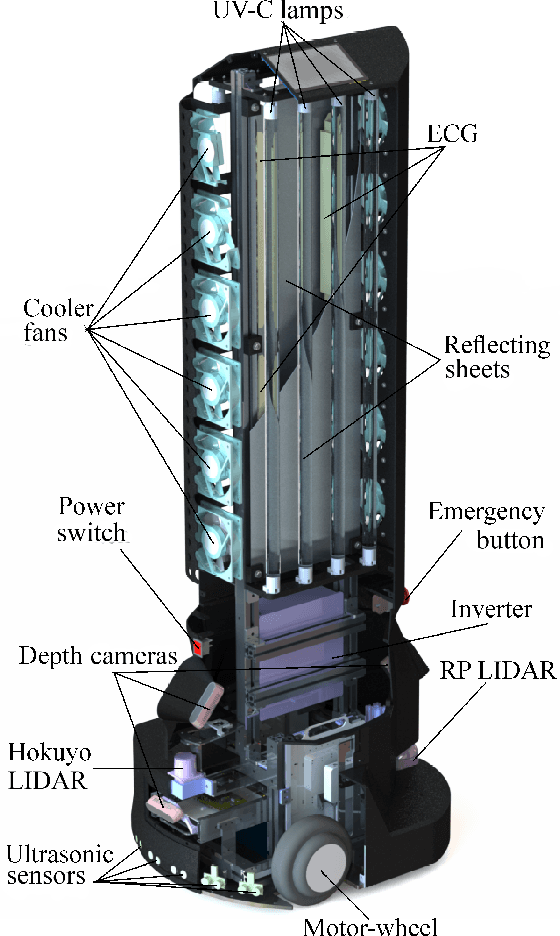

Abstract:The paper focuses on the development of an autonomous disinfection robot, UltraBot, to reduce the transmission of COVID-19 along with other harmful bacteria and viruses. The motivation behind the research is to develop a robot that is capable of performing disinfection tasks without the use of harmful sprays and chemicals that can leave residues and require airing the room afterward for a long time. UltraBot technology has the potential to offer optimal autonomous disinfection performance while taking care of people by keeping them out of the UV-C radiation. The paper highlights UltraBot's mechanical and electrical design as well as its disinfection performance. The conducted experiments demonstrate the effectiveness of the robot's disinfection ability and the actual disinfection area on each side with a UV-C lamp array. The disinfection effectiveness results show the actual performance of the multi-pass technique, which provides a 1-log reduction with combined direct UV-C exposure and ozone-based air purification after two robot passes at a speed of 0.14 m/s. This technique has the same performance as ten minutes of static disinfection. Finally, we have calculated the non-trivial shape of the robot's disinfection zone from two consecutive experiments in order to produce optimal path planning and to provide full disinfection in selected areas.

UltraBot: Autonomous Mobile Robot for Indoor UV-C Disinfection

Aug 22, 2021

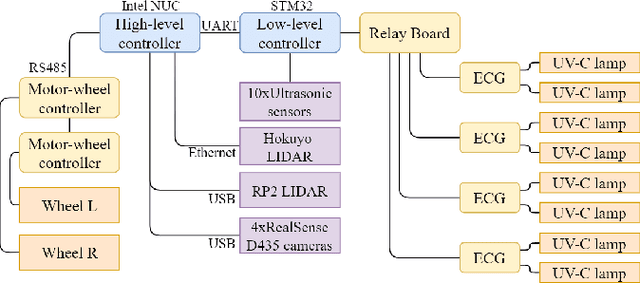

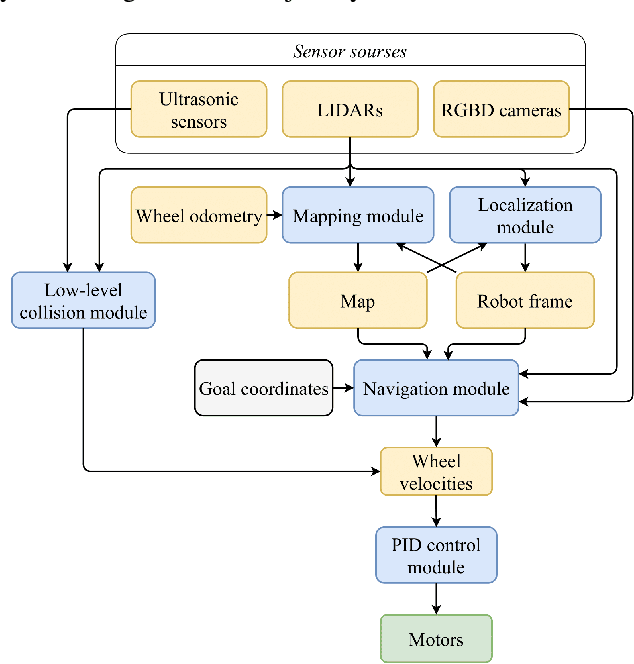

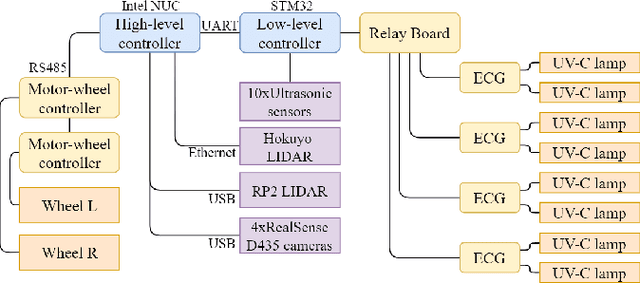

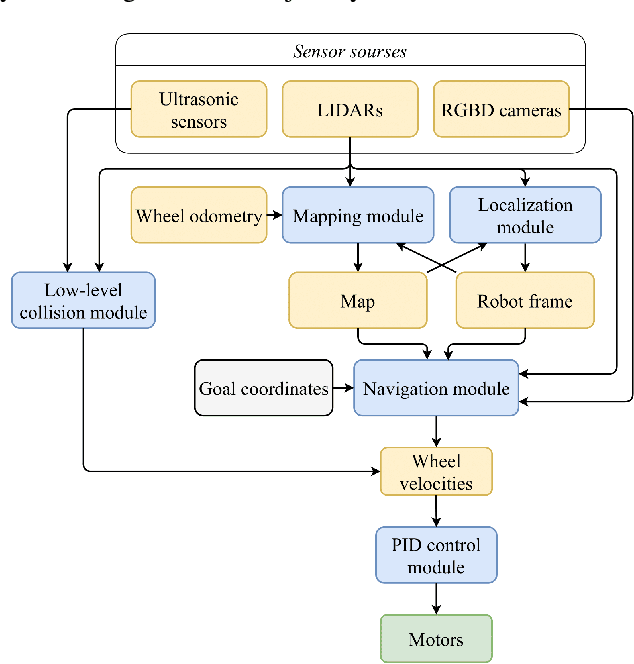

Abstract:The paper focuses on the development of the autonomous robot UltraBot to reduce the transmission of COVID-19 and other harmful bacteria and viruses. The motivation behind the research is to develop a robot that is capable of performing disinfection tasks without the use of harmful sprays and chemicals that can leave residues, require airing the room afterward for a long time, and cause corrosion of metal structures. UltraBot technology has the potential to offer optimal autonomous disinfection performance while taking care of people by keeping them out of the UV-C radiation. The paper highlights UltraBot's mechanical and electrical structures as well as its low-level and high-level control systems. The conducted experiments demonstrate the effectiveness of the robot localization module and optimal trajectories for UV-C disinfection. The results of UV-C disinfection performance revealed a decrease in the total bacterial count (TBC) of 94% at a distance of 2.8 meters from the robot after 10 minutes of UV-C irradiation.
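A percentage decrease in bacterial count can be restated on the log-reduction scale commonly used for disinfection results. The short sketch below assumes the usual definition, log reduction = -log10(surviving fraction); the function names are hypothetical and the only input taken from the abstract is the 94% figure.

```python
import math


def log_reduction(fraction_removed):
    """Convert a removed fraction (0..1) to a log10 reduction."""
    return -math.log10(1.0 - fraction_removed)


def fraction_removed(log_red):
    """Convert a log10 reduction back to the removed fraction."""
    return 1.0 - 10.0 ** (-log_red)


tbc_log = log_reduction(0.94)      # 94% decrease in TBC from the abstract
one_log = fraction_removed(1.0)    # what a 1-log reduction means in percent
print(f"94% decrease = {tbc_log:.2f}-log reduction")
print(f"1-log reduction = {one_log:.0%} decrease")
```

Under this definition a 94% decrease corresponds to roughly a 1.2-log reduction, and a 1-log reduction corresponds to a 90% decrease.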

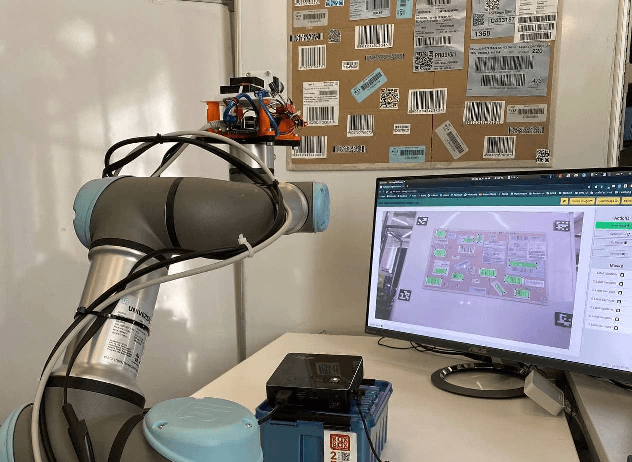

DeepScanner: a Robotic System for Automated 2D Object Dataset Collection with Annotations

Aug 05, 2021

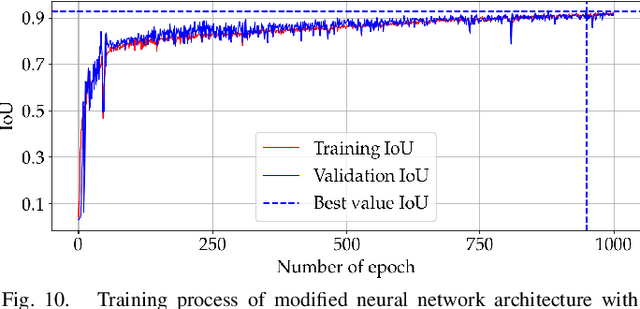

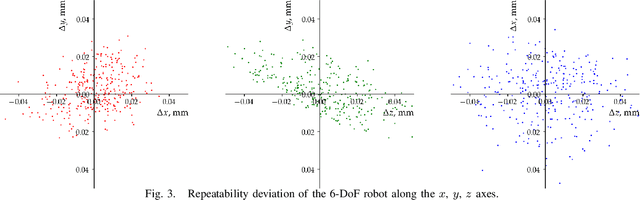

Abstract:In the proposed study, we describe the possibility of automated dataset collection using an articulated robot. The proposed technology reduces the number of pixel errors on a polygonal dataset and the time spent on manual labeling of 2D objects. The paper describes a novel automatic dataset collection and annotation system, and compares the results of automated and manual dataset labeling. Our approach increases the speed of data labeling 240-fold, and improves the accuracy compared to manual labeling 13-fold. We also present a comparison of metrics for training a neural network on a manually annotated and an automatically collected dataset.
