Michael Gastegger

Improved motif-scaffolding with SE(3) flow matching

Jan 08, 2024

Abstract:Protein design often begins with knowledge of a desired function from a motif which motif-scaffolding aims to construct a functional protein around. Recently, generative models have achieved breakthrough success in designing scaffolds for a diverse range of motifs. However, the generated scaffolds tend to lack structural diversity, which can hinder success in wet-lab validation. In this work, we extend FrameFlow, an SE(3) flow matching model for protein backbone generation, to perform motif-scaffolding with two complementary approaches. The first is motif amortization, in which FrameFlow is trained with the motif as input using a data augmentation strategy. The second is motif guidance, which performs scaffolding using an estimate of the conditional score from FrameFlow, and requires no additional training. Both approaches achieve an equivalent or higher success rate than previous state-of-the-art methods, with 2.5 times more structurally diverse scaffolds. Code: https://github.com/microsoft/frame-flow.

Scaling machine learning-based chemical plant simulation: A method for fine-tuning a model to induce stable fixed points

Jul 25, 2023

Abstract:Idealized first-principles models of chemical plants can be inaccurate. An alternative is to fit a Machine Learning (ML) model directly to plant sensor data. We use a structured approach: Each unit within the plant gets represented by one ML model. After fitting the models to the data, the models are connected into a flowsheet-like directed graph. We find that for smaller plants, this approach works well, but for larger plants, the complex dynamics arising from large and nested cycles in the flowsheet lead to instabilities in the cycle solver. We analyze this problem in depth and show that it is not merely a specialized concern but rather a more pervasive challenge that will likely occur whenever ML is applied to larger plants. To address this problem, we present a way to fine-tune ML models such that solving cycles with the usual methods becomes robust again.

SchNetPack 2.0: A neural network toolbox for atomistic machine learning

Dec 11, 2022

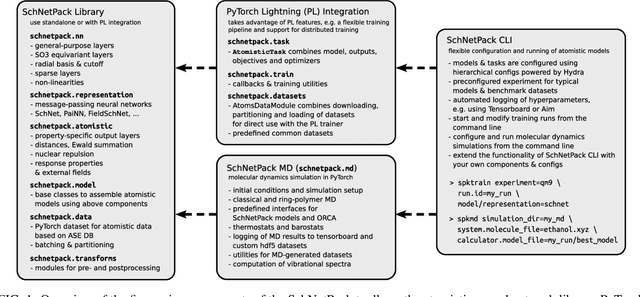

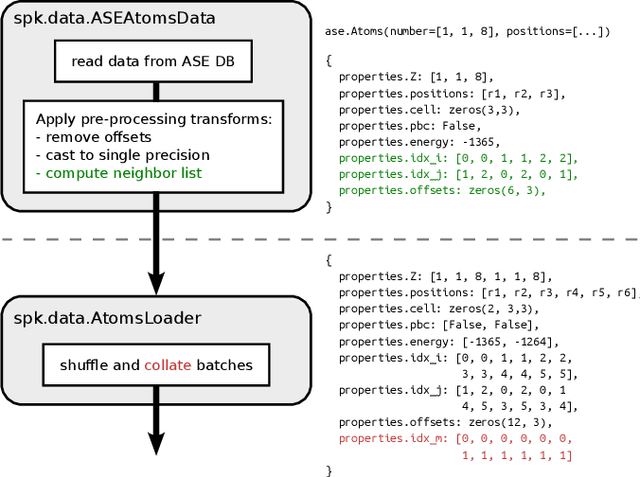

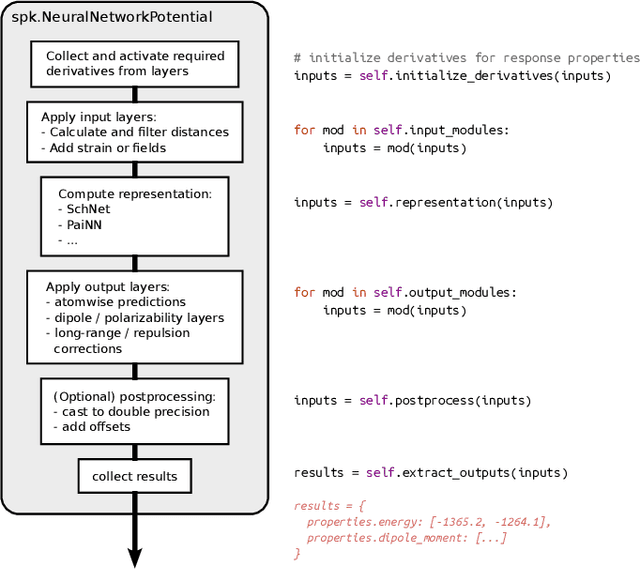

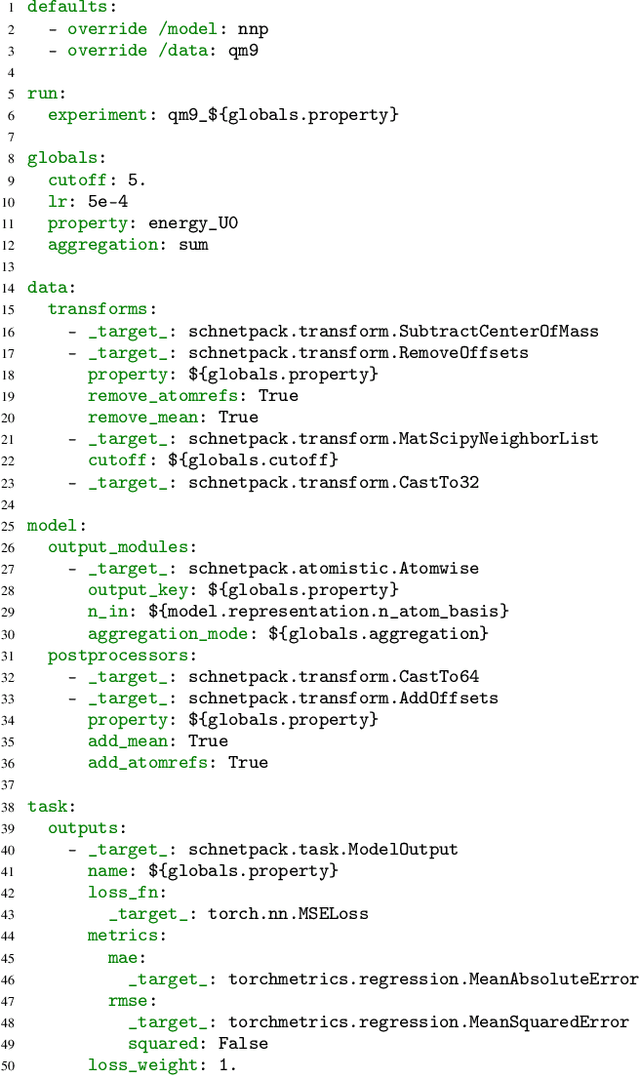

Abstract:SchNetPack is a versatile neural network toolbox that addresses the requirements of both method development and application of atomistic machine learning. Version 2.0 comes with an improved data pipeline, modules for equivariant neural networks, and a PyTorch implementation of molecular dynamics. An optional integration with PyTorch Lightning and the Hydra configuration framework powers a flexible command-line interface. This makes SchNetPack 2.0 easily extendable with custom code and ready for complex training tasks such as the generation of 3d molecular structures.

Accurate Machine Learned Quantum-Mechanical Force Fields for Biomolecular Simulations

May 17, 2022

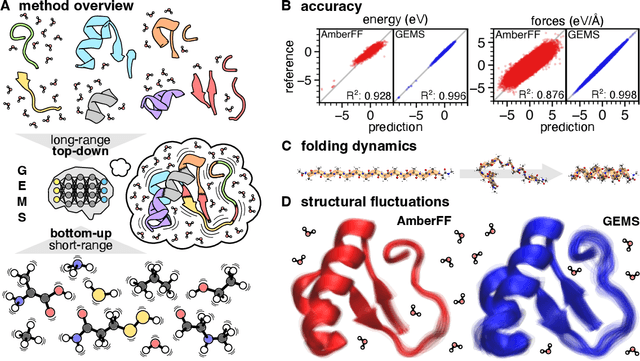

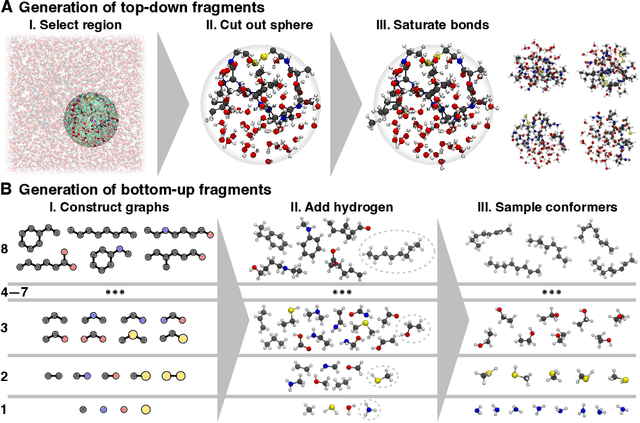

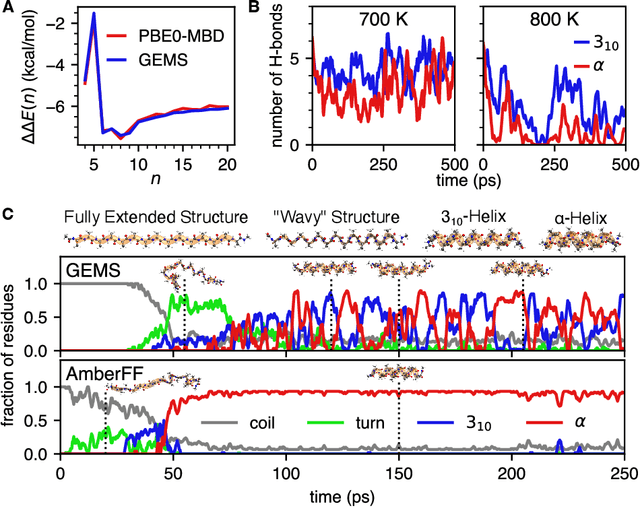

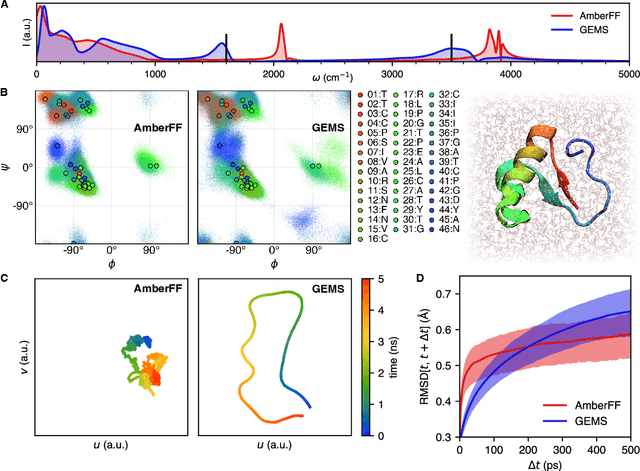

Abstract:Molecular dynamics (MD) simulations allow atomistic insights into chemical and biological processes. Accurate MD simulations require computationally demanding quantum-mechanical calculations, being practically limited to short timescales and few atoms. For larger systems, efficient, but much less reliable empirical force fields are used. Recently, machine learned force fields (MLFFs) emerged as an alternative means to execute MD simulations, offering similar accuracy as ab initio methods at orders-of-magnitude speedup. Until now, MLFFs mainly capture short-range interactions in small molecules or periodic materials, due to the increased complexity of constructing models and obtaining reliable reference data for large molecules, where long-ranged many-body effects become important. This work proposes a general approach to constructing accurate MLFFs for large-scale molecular simulations (GEMS) by training on "bottom-up" and "top-down" molecular fragments of varying size, from which the relevant physicochemical interactions can be learned. GEMS is applied to study the dynamics of alanine-based peptides and the 46-residue protein crambin in aqueous solution, allowing nanosecond-scale MD simulations of >25k atoms at essentially ab initio quality. Our findings suggest that structural motifs in peptides and proteins are more flexible than previously thought, indicating that simulations at ab initio accuracy might be necessary to understand dynamic biomolecular processes such as protein (mis)folding, drug-protein binding, or allosteric regulation.

Automatic Identification of Chemical Moieties

Mar 30, 2022

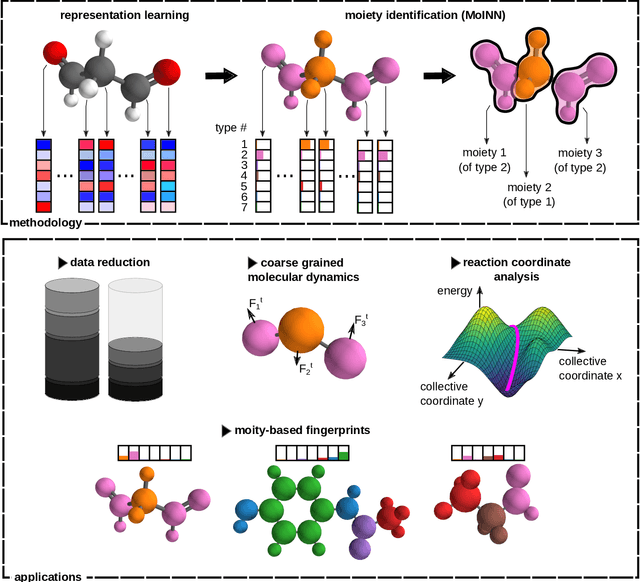

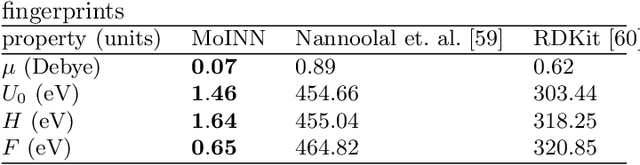

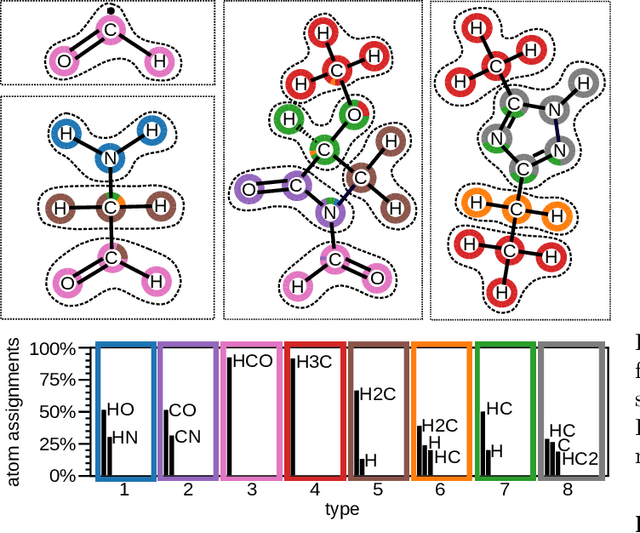

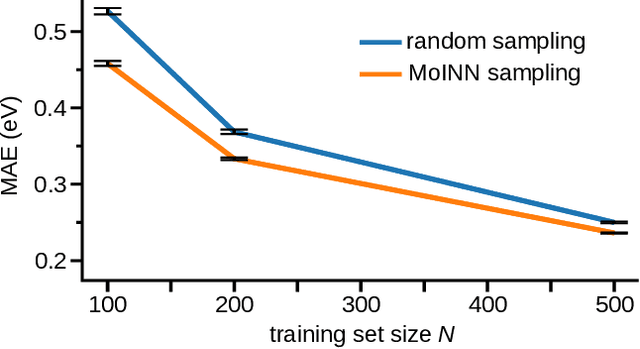

Abstract:In recent years, the prediction of quantum mechanical observables with machine learning methods has become increasingly popular. Message-passing neural networks (MPNNs) solve this task by constructing atomic representations, from which the properties of interest are predicted. Here, we introduce a method to automatically identify chemical moieties (molecular building blocks) from such representations, enabling a variety of applications beyond property prediction, which otherwise rely on expert knowledge. The required representation can either be provided by a pretrained MPNN, or learned from scratch using only structural information. Beyond the data-driven design of molecular fingerprints, the versatility of our approach is demonstrated by enabling the selection of representative entries in chemical databases, the automatic construction of coarse-grained force fields, as well as the identification of reaction coordinates.

Inverse design of 3d molecular structures with conditional generative neural networks

Sep 10, 2021

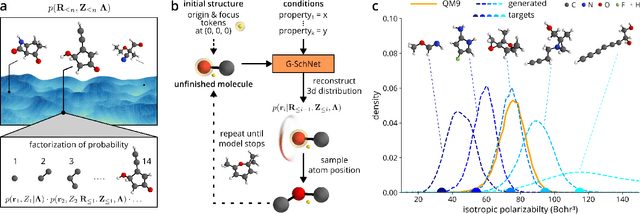

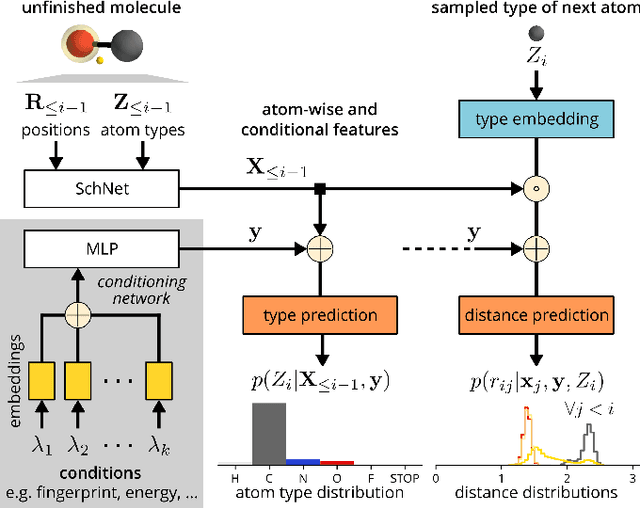

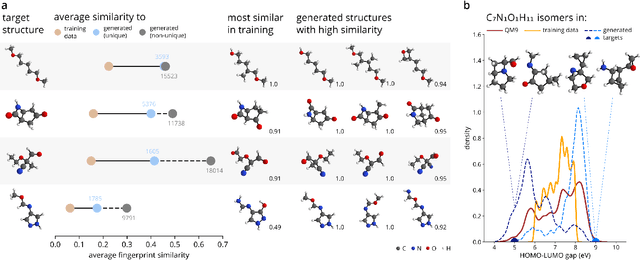

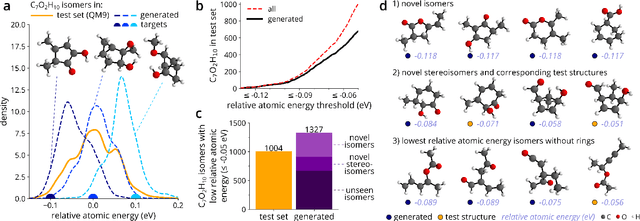

Abstract:The rational design of molecules with desired properties is a long-standing challenge in chemistry. Generative neural networks have emerged as a powerful approach to sample novel molecules from a learned distribution. Here, we propose a conditional generative neural network for 3d molecular structures with specified structural and chemical properties. This approach is agnostic to chemical bonding and enables targeted sampling of novel molecules from conditional distributions, even in domains where reference calculations are sparse. We demonstrate the utility of our method for inverse design by generating molecules with specified composition or motifs, discovering particularly stable molecules, and jointly targeting multiple electronic properties beyond the training regime.

SE(3)-equivariant prediction of molecular wavefunctions and electronic densities

Jun 04, 2021

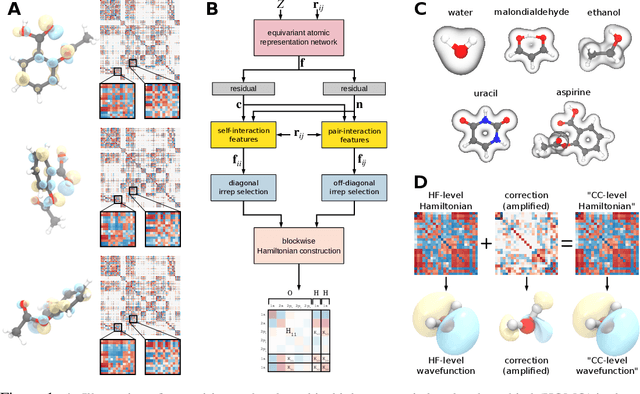

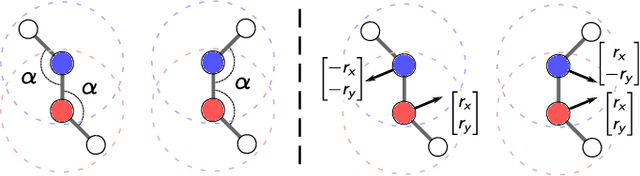

Abstract:Machine learning has enabled the prediction of quantum chemical properties with high accuracy and efficiency, making it possible to bypass computationally costly ab initio calculations. Instead of training on a fixed set of properties, more recent approaches attempt to learn the electronic wavefunction (or density) as a central quantity of atomistic systems, from which all other observables can be derived. This is complicated by the fact that wavefunctions transform non-trivially under molecular rotations, which makes them a challenging prediction target. To solve this issue, we introduce general SE(3)-equivariant operations and building blocks for constructing deep learning architectures for geometric point cloud data and apply them to reconstruct wavefunctions of atomistic systems with unprecedented accuracy. Our model reduces prediction errors by up to two orders of magnitude compared to the previous state-of-the-art and makes it possible to derive properties such as energies and forces directly from the wavefunction in an end-to-end manner. We demonstrate the potential of our approach in a transfer learning application, where a model trained on low accuracy reference wavefunctions implicitly learns to correct for electronic many-body interactions from observables computed at a higher level of theory. Such machine-learned wavefunction surrogates pave the way towards novel semi-empirical methods, offering resolution at an electronic level while drastically decreasing computational cost. While we focus on physics applications in this contribution, the proposed equivariant framework for deep learning on point clouds is also promising in other areas, such as computer vision or graphics.

SpookyNet: Learning Force Fields with Electronic Degrees of Freedom and Nonlocal Effects

May 01, 2021

Abstract:In recent years, machine-learned force fields (ML-FFs) have gained increasing popularity in the field of computational chemistry. Provided they are trained on appropriate reference data, ML-FFs combine the accuracy of ab initio methods with the efficiency of conventional force fields. However, current ML-FFs typically ignore electronic degrees of freedom, such as the total charge or spin, when forming their prediction. In addition, they often assume chemical locality, which can be problematic in cases where nonlocal effects play a significant role. This work introduces SpookyNet, a deep neural network for constructing ML-FFs with explicit treatment of electronic degrees of freedom and quantum nonlocality. Its predictions are further augmented with physically-motivated corrections to improve the description of long-ranged interactions and nuclear repulsion. SpookyNet improves upon the current state-of-the-art (or achieves similar performance) on popular quantum chemistry data sets. Notably, it can leverage the learned chemical insights, e.g. by predicting unknown spin states or by properly modeling physical limits. Moreover, it is able to generalize across chemical and conformational space and thus close an important remaining gap for today's machine learning models in quantum chemistry.

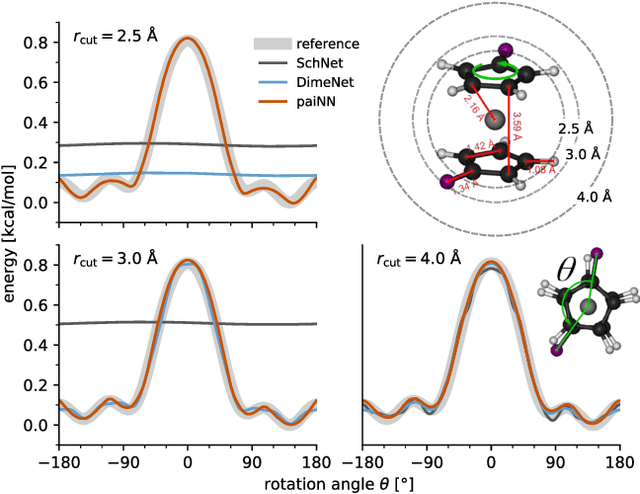

Equivariant message passing for the prediction of tensorial properties and molecular spectra

Feb 08, 2021

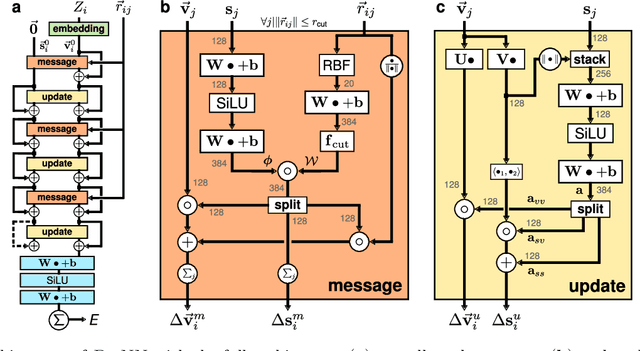

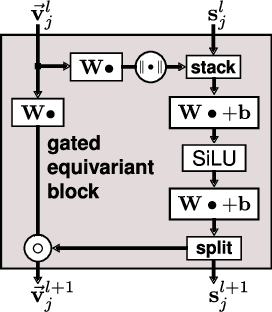

Abstract:Message passing neural networks have become a method of choice for learning on graphs, in particular the prediction of chemical properties and the acceleration of molecular dynamics studies. While they readily scale to large training data sets, previous approaches have proven to be less data efficient than kernel methods. We identify limitations of invariant representations as a major reason and extend the message passing formulation to rotationally equivariant representations. On this basis, we propose the polarizable atom interaction neural network (PaiNN) and improve on common molecule benchmarks over previous networks, while reducing model size and inference time. We leverage the equivariant atomwise representations obtained by PaiNN for the prediction of tensorial properties. Finally, we apply this to the simulation of molecular spectra, achieving speedups of 4-5 orders of magnitude compared to the electronic structure reference.

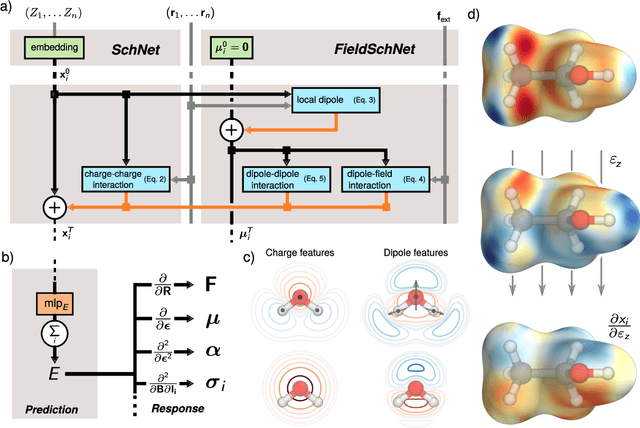

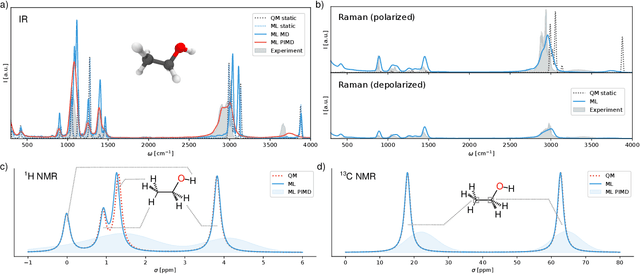

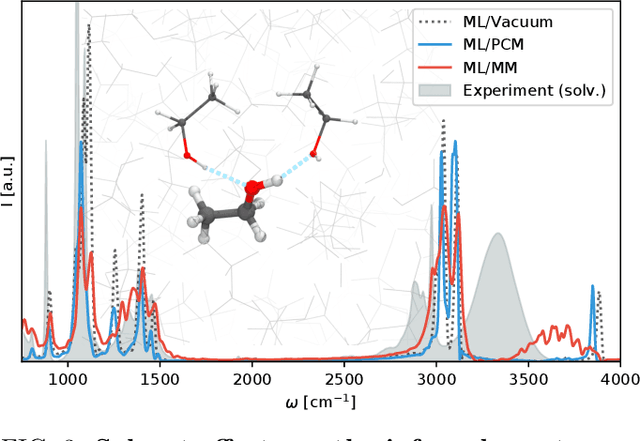

Machine learning of solvent effects on molecular spectra and reactions

Nov 04, 2020

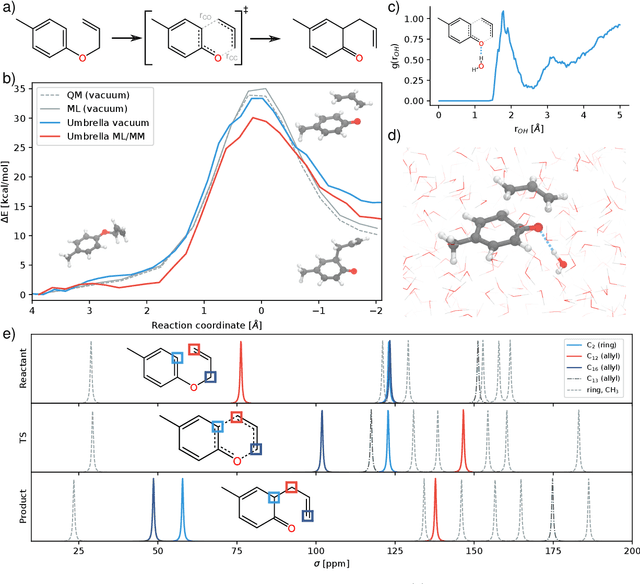

Abstract:Fast and accurate simulation of complex chemical systems in environments such as solutions is a long-standing challenge in theoretical chemistry. In recent years, machine learning has extended the boundaries of quantum chemistry by providing highly accurate and efficient surrogate models of electronic structure theory, which previously have been out of reach for conventional approaches. Those models have long been restricted to closed molecular systems without accounting for environmental influences, such as external electric and magnetic fields or solvent effects. Here, we introduce the deep neural network FieldSchNet for modeling the interaction of molecules with arbitrary external fields. FieldSchNet offers access to a wealth of molecular response properties, enabling it to simulate a wide range of molecular spectra, such as infrared, Raman and nuclear magnetic resonance. Beyond that, it is able to describe implicit and explicit molecular environments, operating as a polarizable continuum model for solvation or in a quantum mechanics / molecular mechanics setup. We employ FieldSchNet to study the influence of solvent effects on molecular spectra and a Claisen rearrangement reaction. Based on these results, we use FieldSchNet to design an external environment capable of lowering the activation barrier of the rearrangement reaction significantly, demonstrating promising avenues for inverse chemical design.
