Liwen Wu

Neural BRDF Importance Sampling by Reparameterization

May 13, 2025

Abstract:Neural bidirectional reflectance distribution functions (BRDFs) have emerged as popular material representations for enhancing realism in physically-based rendering. Yet their importance sampling remains a significant challenge. In this paper, we introduce a reparameterization-based formulation of neural BRDF importance sampling that seamlessly integrates into the standard rendering pipeline with precise generation of BRDF samples. The reparameterization-based formulation transfers the distribution learning task to a problem of identifying BRDF integral substitutions. In contrast to previous methods that rely on invertible networks and multi-step inference to reconstruct BRDF distributions, our model removes these constraints, which offers greater flexibility and efficiency. Our variance and performance analysis demonstrates that our reparameterization method achieves the best variance reduction in neural BRDF renderings while maintaining high inference speeds compared to existing baselines.
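The idea of treating importance sampling as an integral substitution can be illustrated with a toy 1D analogue. The sketch below is not the paper's model: the integrand `f`, the hand-derived warp `g`, and the helper `estimate` are all hypothetical stand-ins chosen so the substitution can be written in closed form, whereas the paper learns the substitution with a network. It shows that when a substitution x = g(u) makes f(g(u))·|g'(u)| nearly constant, uniform samples of u give a low-variance estimate.

```python
import random

def estimate(f, g, dg, n_samples, rng):
    """Monte Carlo estimate of the integral of f over [0, 1] using x = g(u).

    By change of variables, the integral equals E[f(g(u)) * |g'(u)|]
    for u drawn uniformly from (0, 1].
    """
    total = 0.0
    for _ in range(n_samples):
        u = 1.0 - rng.random()  # uniform in (0, 1], avoids u == 0
        total += f(g(u)) * abs(dg(u))
    return total / n_samples

# Hypothetical peaked integrand standing in for a glossy lobe.
N = 8
f = lambda x: x ** N  # exact integral over [0, 1] is 1 / (N + 1)

# No substitution: x = u, so this is plain uniform sampling.
identity = (lambda u: u, lambda u: 1.0)

# Hand-derived substitution x = u^(1/(N+1)); it flattens the integrand
# so that f(g(u)) * g'(u) is the constant 1 / (N + 1).
k = 1.0 / (N + 1)
warp = (lambda u: u ** k, lambda u: k * u ** (k - 1.0))

rng = random.Random(0)
plain = estimate(f, *identity, 1000, rng)
warped = estimate(f, *warp, 1000, rng)
```

Here `warped` matches the exact value up to floating-point error, while `plain` still carries sampling noise. In this toy case the ideal warp is known analytically; the abstract's point is that finding such a substitution replaces the task of learning the distribution directly.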

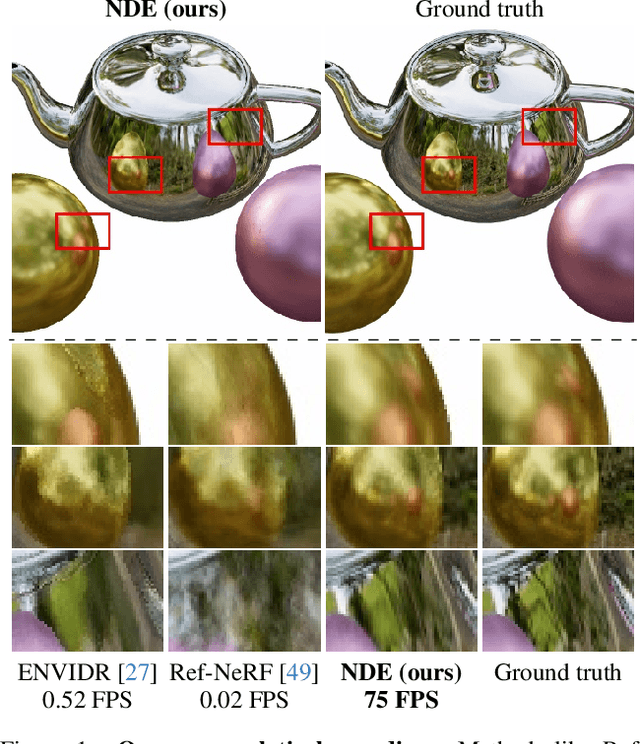

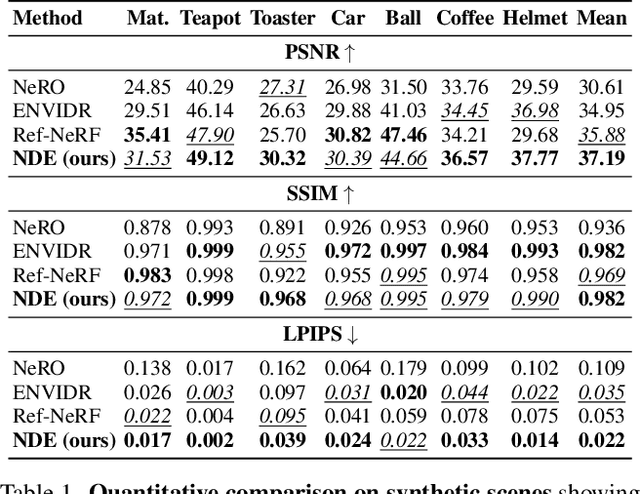

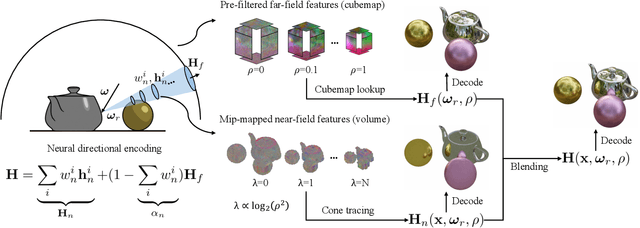

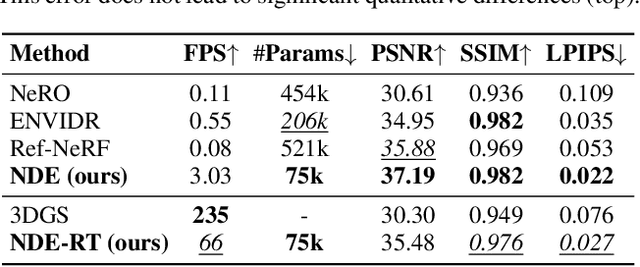

Neural Directional Encoding for Efficient and Accurate View-Dependent Appearance Modeling

May 23, 2024

Abstract:Novel-view synthesis of specular objects like shiny metals or glossy paints remains a significant challenge. Not only the glossy appearance but also global illumination effects, including reflections of other objects in the environment, are critical components to faithfully reproduce a scene. In this paper, we present Neural Directional Encoding (NDE), a view-dependent appearance encoding of neural radiance fields (NeRF) for rendering specular objects. NDE transfers the concept of feature-grid-based spatial encoding to the angular domain, significantly improving the ability to model high-frequency angular signals. In contrast to previous methods that use encoding functions with only angular input, we additionally cone-trace spatial features to obtain a spatially varying directional encoding, which addresses the challenging interreflection effects. Extensive experiments on both synthetic and real datasets show that a NeRF model with NDE (1) outperforms the state of the art on view synthesis of specular objects, and (2) works with small networks to allow fast (real-time) inference. The project webpage and source code are available at: https://lwwu2.github.io/nde/.

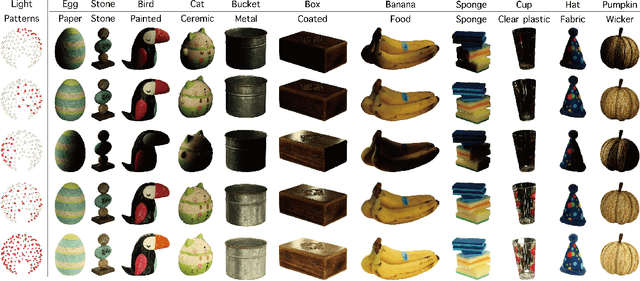

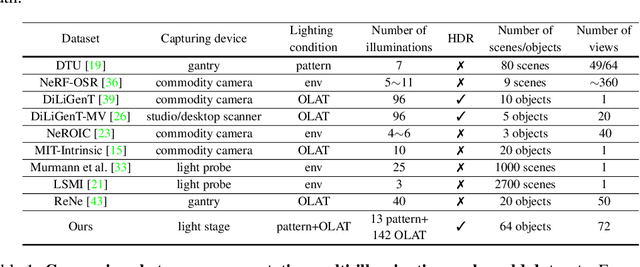

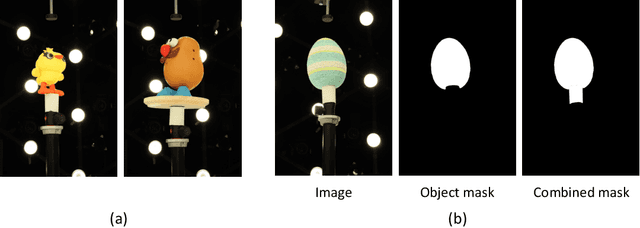

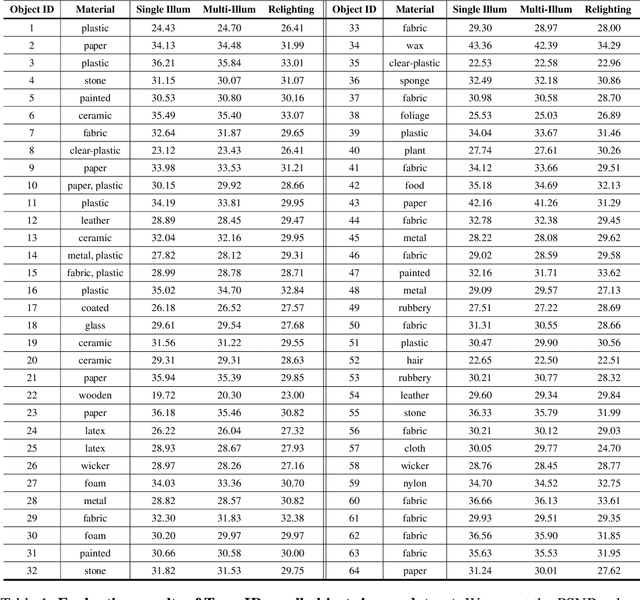

OpenIllumination: A Multi-Illumination Dataset for Inverse Rendering Evaluation on Real Objects

Sep 14, 2023

Abstract:We introduce OpenIllumination, a real-world dataset containing over 108K images of 64 objects with diverse materials, captured under 72 camera views and a large number of different illuminations. For each image in the dataset, we provide accurate camera parameters, illumination ground truth, and foreground segmentation masks. Our dataset enables the quantitative evaluation of most inverse rendering and material decomposition methods for real objects. We examine several state-of-the-art inverse rendering methods on our dataset and compare their performances. The dataset and code can be found on the project page: https://oppo-us-research.github.io/OpenIllumination.

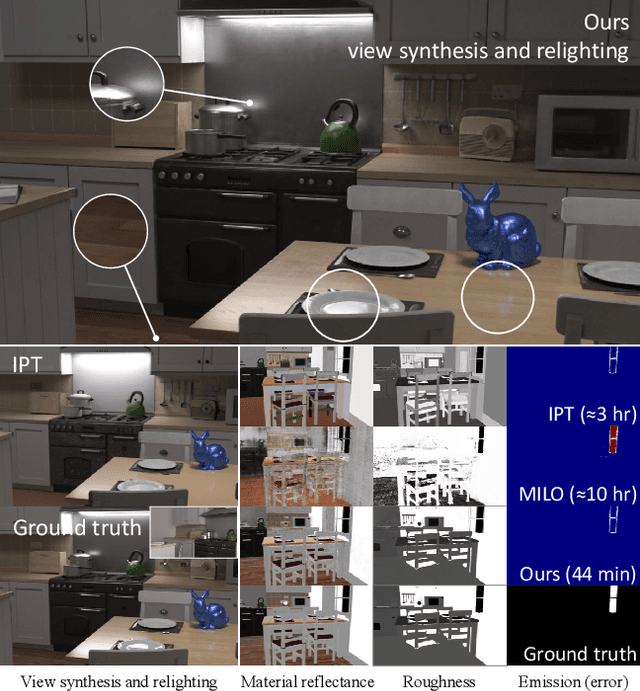

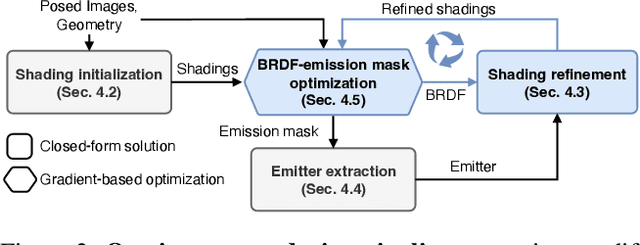

Factorized Inverse Path Tracing for Efficient and Accurate Material-Lighting Estimation

Apr 12, 2023

Abstract:Inverse path tracing has recently been applied to joint material and lighting estimation, given geometry and multi-view HDR observations of an indoor scene. However, it has two major limitations: path tracing is expensive to compute, and ambiguities exist between reflection and emission. We propose a novel Factorized Inverse Path Tracing (FIPT) method which utilizes a factored light transport formulation and finds emitters driven by rendering errors. Our algorithm enables accurate material and lighting optimization faster than previous work, and is more effective at resolving ambiguities. The exhaustive experiments on synthetic scenes show that our method (1) outperforms state-of-the-art indoor inverse rendering and relighting methods particularly in the presence of complex illumination effects; (2) speeds up inverse path tracing optimization to less than an hour. We further demonstrate robustness to noisy inputs through material and lighting estimates that allow plausible relighting in a real scene. The source code is available at: https://github.com/lwwu2/fipt

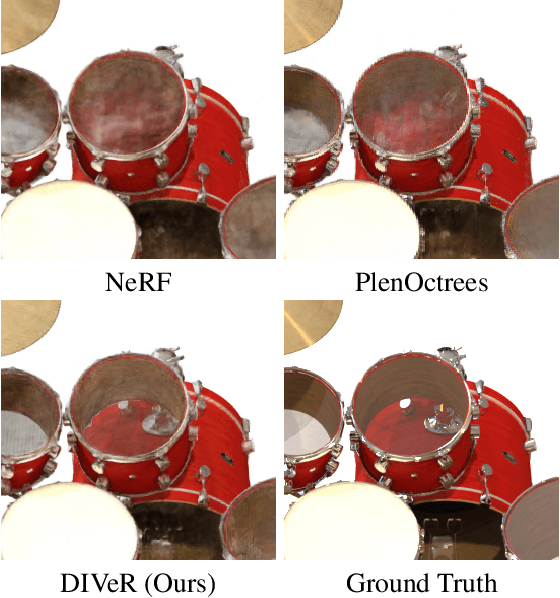

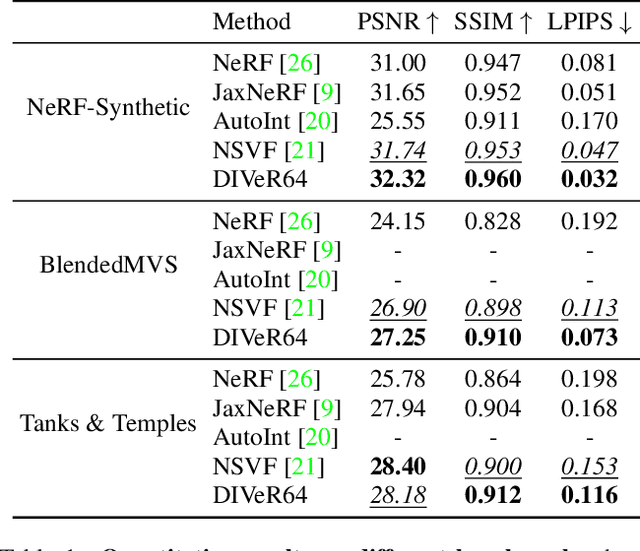

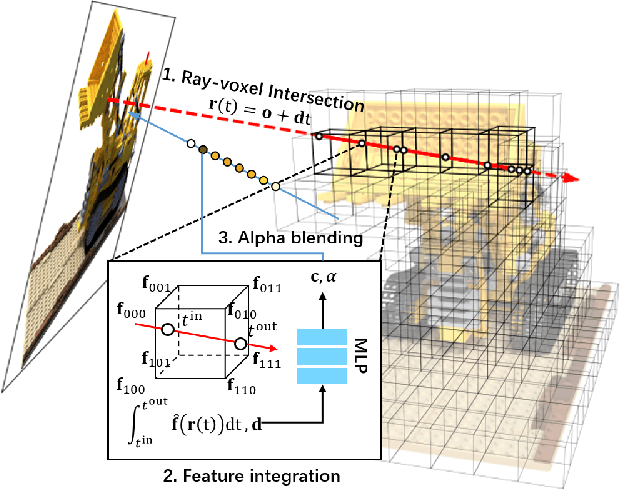

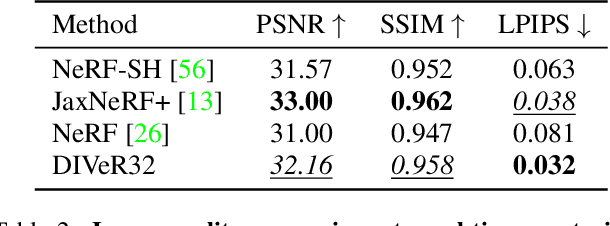

DIVeR: Real-time and Accurate Neural Radiance Fields with Deterministic Integration for Volume Rendering

Nov 19, 2021

Abstract:DIVeR builds on the key ideas of NeRF and its variants -- density models and volume rendering -- to learn 3D object models that can be rendered realistically from small numbers of images. In contrast to all previous NeRF methods, DIVeR uses deterministic rather than stochastic estimates of the volume rendering integral. DIVeR's representation is a voxel based field of features. To compute the volume rendering integral, a ray is broken into intervals, one per voxel; components of the volume rendering integral are estimated from the features for each interval using an MLP, and the components are aggregated. As a result, DIVeR can render thin translucent structures that are missed by other integrators. Furthermore, DIVeR's representation has semantics that is relatively exposed compared to other such methods -- moving feature vectors around in the voxel space results in natural edits. Extensive qualitative and quantitative comparisons to current state-of-the-art methods show that DIVeR produces models that (1) render at or above state-of-the-art quality, (2) are very small without being baked, (3) render very fast without being baked, and (4) can be edited in natural ways.
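The per-interval aggregation described in the abstract can be sketched as follows. This is a minimal illustration and not DIVeR's implementation: it assumes the aggregation is standard front-to-back alpha compositing, and the `(alpha, rgb)` pairs are hypothetical stand-ins for what the MLP would predict for each voxel interval along a ray.

```python
def composite(intervals):
    """Front-to-back compositing of per-interval (alpha, rgb) estimates.

    `intervals` is ordered from the camera outward, one entry per voxel
    interval the ray passes through. Returns the accumulated color and
    the transmittance remaining after the last interval.
    """
    transmittance = 1.0
    color = [0.0, 0.0, 0.0]
    for alpha, rgb in intervals:
        weight = transmittance * alpha  # contribution of this interval
        color = [c + weight * v for c, v in zip(color, rgb)]
        transmittance *= 1.0 - alpha    # light that passes through
    return color, transmittance

# Hypothetical ray: a half-transparent red interval in front of an
# opaque green one.
ray = [(0.5, (1.0, 0.0, 0.0)), (1.0, (0.0, 1.0, 0.0))]
color, remaining = composite(ray)
```

Because every interval the ray crosses contributes exactly once, nothing depends on where random samples happen to land, which is the property the abstract credits for capturing thin translucent structures.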
