Kun Cheng

Commercial Vehicle Braking Optimization: A Robust SIFT-Trajectory Approach

Dec 21, 2025

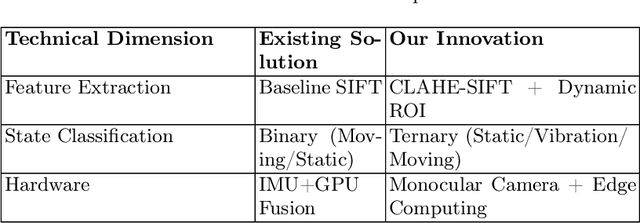

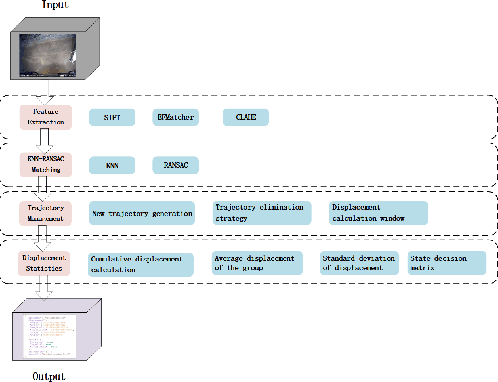

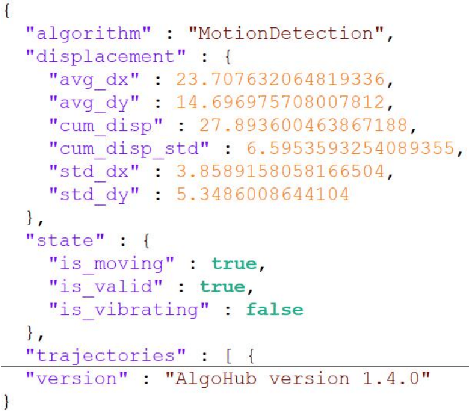

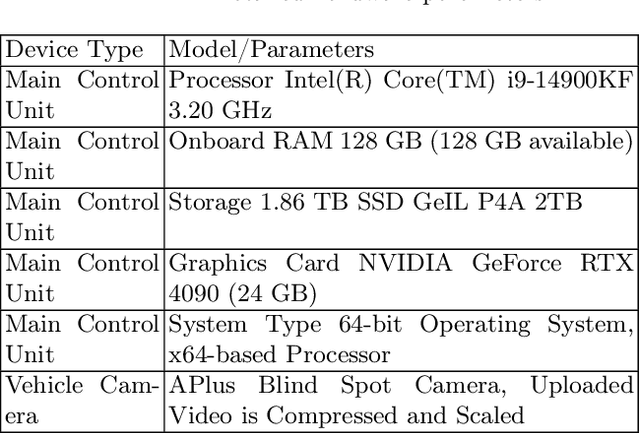

Abstract: A vision-based trajectory analysis solution is proposed to address the "zero-speed braking" issue caused by inaccurate Controller Area Network (CAN) signals in commercial vehicle Automatic Emergency Braking (AEB) systems during low-speed operation. The algorithm runs on the NVIDIA Jetson AGX Xavier platform and processes sequential video frames from a blind spot camera, employing self-adaptive Contrast Limited Adaptive Histogram Equalization (CLAHE)-enhanced Scale-Invariant Feature Transform (SIFT) feature extraction and K-Nearest Neighbors (KNN)-Random Sample Consensus (RANSAC) matching. This allows for precise classification of the vehicle's motion state (static, vibration, moving). Key innovations include 1) multi-frame trajectory displacement statistics (5-frame sliding window), 2) a dual-threshold state decision matrix, and 3) OBD-II driven dynamic Region of Interest (ROI) configuration. The system effectively suppresses environmental interference and false detection of dynamic objects, directly addressing the challenge of low-speed false activation in commercial vehicle safety systems. Evaluation on a real-world dataset (32,454 video segments from 1,852 vehicles) demonstrates an F1-score of 99.96% for static detection, 97.78% for moving state recognition, and a processing delay of 14.2 milliseconds (at a resolution of 704x576). On-site deployment shows an 89% reduction in false braking events, a 100% success rate in emergency braking, and a fault rate below 5%.
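As a rough illustration of how a 5-frame sliding window and two thresholds could separate the three motion states, here is a minimal Python sketch. It is not the authors' implementation: the threshold values, the use of a median, and the function names `classify` and `stream_states` are assumptions made for the example, and the input is assumed to be a per-frame feature displacement in pixels already obtained from feature matching.

```python
from collections import deque
from statistics import median

WINDOW = 5            # frames per sliding window (value taken from the abstract)
STATIC_MAX_PX = 0.5   # hypothetical threshold: below this, treat as static
MOVING_MIN_PX = 2.0   # hypothetical threshold: at or above this, treat as moving


def classify(displacements):
    """Map a window of per-frame displacements (pixels) to a motion state."""
    level = median(displacements)
    if level < STATIC_MAX_PX:
        return "static"
    if level >= MOVING_MIN_PX:
        return "moving"
    # Between the two thresholds: small but non-zero motion.
    return "vibration"


def stream_states(per_frame_displacement):
    """Yield one state per incoming frame once the window is full."""
    window = deque(maxlen=WINDOW)
    for d in per_frame_displacement:
        window.append(d)
        if len(window) == WINDOW:
            yield classify(window)
```

Taking a statistic over the window rather than reacting to a single frame means one noisy frame does not flip the state, which is one plausible reason to use multi-frame statistics here.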

Round Attention: A Novel Round-Level Attention Mechanism to Accelerate LLM Inference

Feb 21, 2025

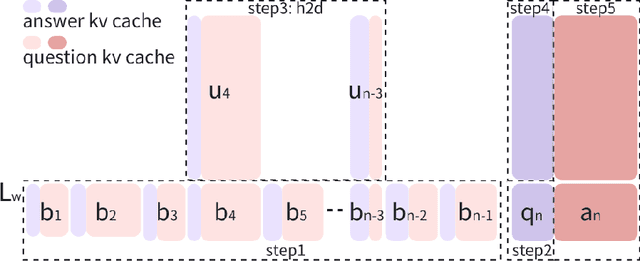

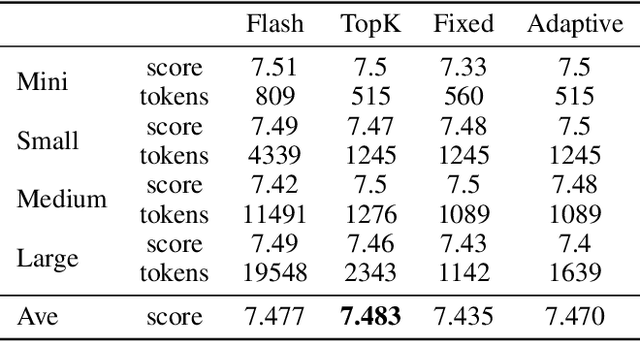

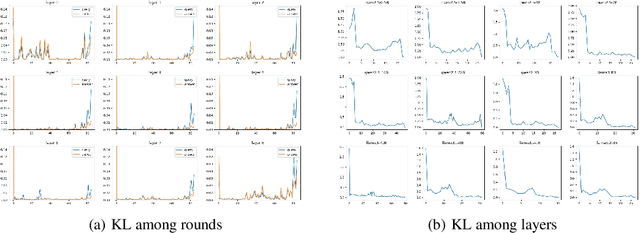

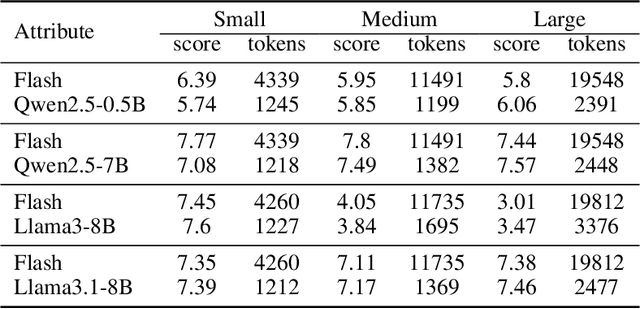

Abstract: The increasing context window size in large language models (LLMs) has improved their ability to handle complex, long-text tasks. However, as conversation rounds accumulate, a large amount of KV cache must be stored in GPU memory, which significantly affects the efficiency and even the availability of model serving systems. This paper analyzes dialogue data from real users and finds that LLM inference exhibits a watershed layer, after which the distribution of round-level attention shows notable similarity. We propose Round Attention, a novel round-level attention mechanism that only recalls and computes the KV cache of the most relevant rounds. The experiments show that our method reduces memory usage by 55% without compromising model performance.
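The idea of recalling only the most relevant rounds can be sketched as a top-k selection over per-round relevance scores. The Python below is an illustrative assumption, not the paper's method: scoring a round by the dot product between the query and the round's mean key, and the names `round_scores` and `select_rounds`, are hypothetical choices made for the example.

```python
def round_scores(query, rounds):
    """Score each round by the dot product of the query with the round's mean key.

    `rounds` is a list of rounds; each round is a list of key vectors.
    """
    dim = len(query)
    scores = []
    for keys in rounds:
        mean_key = [sum(k[i] for k in keys) / len(keys) for i in range(dim)]
        scores.append(sum(q * m for q, m in zip(query, mean_key)))
    return scores


def select_rounds(query, rounds, top_k):
    """Return the indices of the top_k highest-scoring rounds, in original order."""
    scores = round_scores(query, rounds)
    ranked = sorted(range(len(rounds)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:top_k])
```

Only the KV entries of the selected rounds would then need to be resident for attention computation; the remaining rounds could stay in cheaper storage.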

Effective Diffusion Transformer Architecture for Image Super-Resolution

Sep 29, 2024

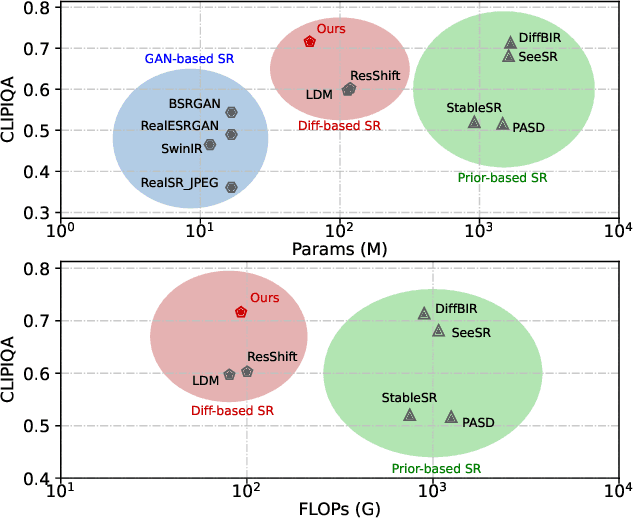

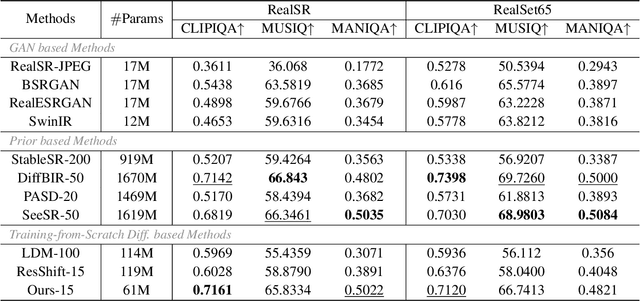

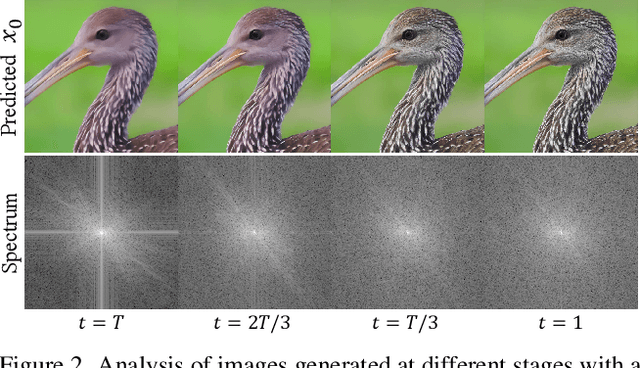

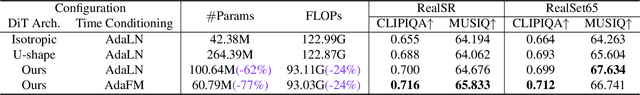

Abstract: Recent advances indicate that diffusion models hold great promise in image super-resolution. While the latest methods are primarily based on latent diffusion models with convolutional neural networks, there have been few attempts to explore transformers, which have demonstrated remarkable performance in image generation. In this work, we design an effective diffusion transformer for image super-resolution (DiT-SR) that achieves the visual quality of prior-based methods while being trained from scratch. In practice, DiT-SR leverages an overall U-shaped architecture, and adopts a uniform isotropic design for all the transformer blocks across different stages. The former facilitates multi-scale hierarchical feature extraction, while the latter reallocates the computational resources to critical layers to further enhance performance. Moreover, we thoroughly analyze the limitation of the widely used AdaLN, and present a frequency-adaptive time-step conditioning module, enhancing the model's capacity to process distinct frequency information at different time steps. Extensive experiments demonstrate that DiT-SR significantly outperforms existing training-from-scratch diffusion-based SR methods, and even beats some of the prior-based methods built on pretrained Stable Diffusion, demonstrating the strength of diffusion transformers in image super-resolution.
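For context on the AdaLN baseline that the abstract critiques, the sketch below shows adaptive layer normalization in the form commonly used in diffusion transformers: features are layer-normalized and then modulated by a scale and shift derived from the time step. This is a minimal Python sketch of that baseline only, not the paper's frequency-adaptive module. The `scale` and `shift` vectors are assumed to be given; in practice they are regressed from a time-step embedding by a small network, which is omitted here.

```python
import math


def layer_norm(x, eps=1e-6):
    """Normalize a feature vector to zero mean and (approximately) unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def adaln(x, scale, shift):
    """Adaptive layer norm: LN(x) * (1 + scale) + shift, applied per channel."""
    return [n * (1.0 + s) + b for n, s, b in zip(layer_norm(x), scale, shift)]
```

Because the same per-channel scale and shift are applied at every spatial position, this form of conditioning is uniform across the feature map.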

One Step Diffusion-based Super-Resolution with Time-Aware Distillation

Aug 14, 2024

Abstract: Diffusion-based image super-resolution (SR) methods have shown promise in reconstructing high-resolution images with fine details from low-resolution counterparts. However, these approaches typically require tens or even hundreds of iterative samplings, resulting in significant latency. Recently, techniques have been devised to enhance the sampling efficiency of diffusion-based SR models via knowledge distillation. Nonetheless, when aligning the knowledge of student and teacher models, these solutions either rely solely on pixel-level loss constraints or neglect the fact that diffusion models prioritize varying levels of information at different time steps. To accomplish effective and efficient image super-resolution, we propose a time-aware diffusion distillation method, named TAD-SR. Specifically, we introduce a novel score distillation strategy to align the data distribution between the outputs of the student and teacher models after minor noise perturbation. This distillation strategy enables the student network to concentrate more on the high-frequency details. Furthermore, to mitigate performance limitations stemming from distillation, we integrate a latent adversarial loss and devise a time-aware discriminator that leverages diffusion priors to effectively distinguish between real images and generated images. Extensive experiments conducted on synthetic and real-world datasets demonstrate that the proposed method achieves comparable or even superior performance compared to both previous state-of-the-art (SOTA) methods and the teacher model in just one sampling step. Code is available at https://github.com/LearningHx/TAD-SR.

Bridging Generative and Discriminative Models for Unified Visual Perception with Diffusion Priors

Jan 29, 2024

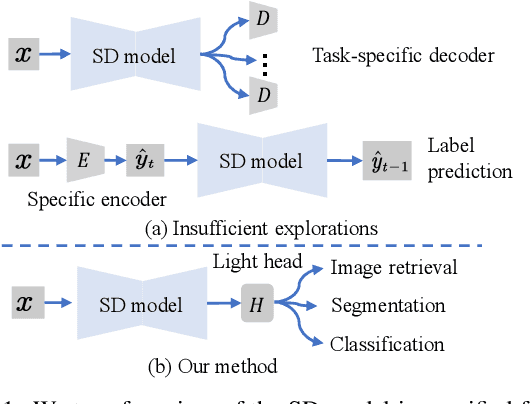

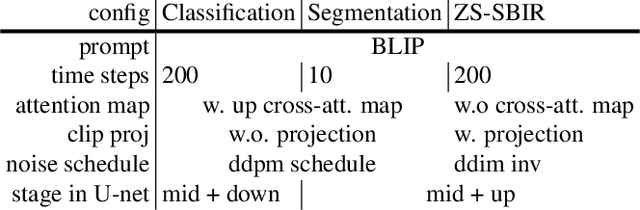

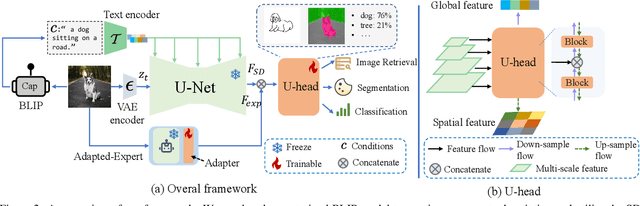

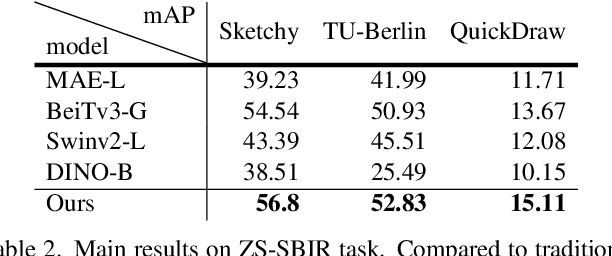

Abstract: The remarkable prowess of diffusion models in image generation has spurred efforts to extend their application beyond generative tasks. However, a persistent challenge is the lack of a unified approach for applying diffusion models to visual perception tasks with diverse semantic granularity requirements. Our purpose is to establish a unified visual perception framework, capitalizing on the potential synergies between generative and discriminative models. In this paper, we propose Vermouth, a simple yet effective framework comprising a pre-trained Stable Diffusion (SD) model containing rich generative priors, a unified head (U-head) capable of integrating hierarchical representations, and an adapted expert providing discriminative priors. Comprehensive investigations unveil potential characteristics of Vermouth, such as varying granularity of perception concealed in latent variables at distinct time steps and various U-net stages. We emphasize that there is no need to incorporate a heavyweight or intricate decoder to transform diffusion models into potent representation learners. Extensive comparative evaluations against tailored discriminative models showcase the efficacy of our approach on zero-shot sketch-based image retrieval (ZS-SBIR), few-shot classification, and open-vocabulary semantic segmentation tasks. The promising results demonstrate the potential of diffusion models as formidable learners, establishing their significance in furnishing informative and robust visual representations.

VideoReTalking: Audio-based Lip Synchronization for Talking Head Video Editing In the Wild

Nov 27, 2022

Abstract: We present VideoReTalking, a new system to edit the faces of a real-world talking head video according to input audio, producing a high-quality and lip-syncing output video even with a different emotion. Our system disentangles this objective into three sequential tasks: (1) face video generation with a canonical expression; (2) audio-driven lip-sync; and (3) face enhancement for improving photo-realism. Given a talking-head video, we first modify the expression of each frame according to the same expression template using the expression editing network, resulting in a video with the canonical expression. This video, together with the given audio, is then fed into the lip-sync network to generate a lip-syncing video. Finally, we improve the photo-realism of the synthesized faces through an identity-aware face enhancement network and post-processing. We use learning-based approaches for all three steps, and all our modules run in a sequential pipeline without any user intervention. Furthermore, our system is a generic approach that does not need to be retrained for a specific person. Evaluations on two widely-used datasets and in-the-wild examples demonstrate the superiority of our framework over other state-of-the-art methods in terms of lip-sync accuracy and visual quality.

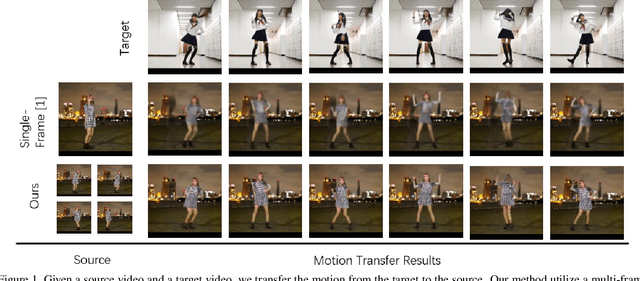

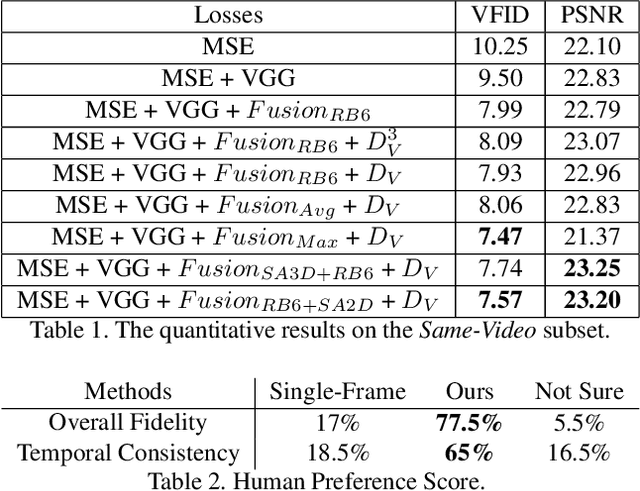

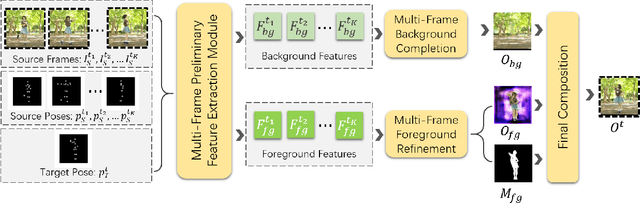

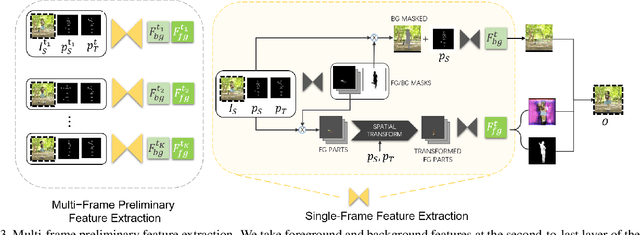

Multi-Frame Content Integration with a Spatio-Temporal Attention Mechanism for Person Video Motion Transfer

Aug 12, 2019

Abstract: Existing person video generation methods either lack flexibility in controlling both appearance and motion, or fail to preserve detailed appearance and temporal consistency. In this paper, we tackle the problem of motion transfer for generating person videos, which provides control over both the appearance and the motion. Specifically, we transfer the motion of one person in a target video to another person in a source video, while preserving the appearance of the source person. Rather than relying on only one source frame, as existing state-of-the-art methods do, our proposed method integrates information from multiple source frames based on a spatio-temporal attention mechanism to preserve rich appearance details. In addition to a spatial discriminator employed to encourage frame-level fidelity, a multi-range temporal discriminator is adopted to make the generated video resemble the temporal dynamics of a real video over various time ranges. A challenging real-world dataset, which contains about 500 dancing video clips with complex and unpredictable motions, is collected for training and testing. Extensive experiments show that the proposed method can produce more photo-realistic and temporally consistent person videos than previous methods. As our method decomposes the synthesis of the foreground and background into two branches, a flexible background substitution application can also be achieved.
