Ka-Hei Hui

PEGAsus: 3D Personalization of Geometry and Appearance

Feb 09, 2026

Abstract:We present PEGAsus, a new framework capable of generating Personalized 3D shapes by learning shape concepts at both Geometry and Appearance levels. First, we formulate 3D shape personalization as extracting reusable, category-agnostic geometric and appearance attributes from reference shapes, and composing these attributes with text to generate novel shapes. Second, we design a progressive optimization strategy to learn shape concepts at both the geometry and appearance levels, decoupling the shape concept learning process. Third, we extend our approach to region-wise concept learning, enabling flexible concept extraction, with context-aware and context-free losses. Extensive experimental results show that PEGAsus is able to effectively extract attributes from a wide range of reference shapes and then flexibly compose these concepts with text to synthesize new shapes. This enables fine-grained control over shape generation and supports the creation of diverse, personalized results, even in challenging cross-category scenarios. Both quantitative and qualitative experiments demonstrate that our approach outperforms existing state-of-the-art solutions.

VisPhyWorld: Probing Physical Reasoning via Code-Driven Video Reconstruction

Feb 09, 2026

Abstract:Evaluating whether Multimodal Large Language Models (MLLMs) genuinely reason about physical dynamics remains challenging. Most existing benchmarks rely on recognition-style protocols such as Visual Question Answering (VQA) and Violation of Expectation (VoE), which can often be answered without committing to an explicit, testable physical hypothesis. We propose VisPhyWorld, an execution-based framework that evaluates physical reasoning by requiring models to generate executable simulator code from visual observations. By producing runnable code, the inferred world representation is directly inspectable, editable, and falsifiable. This separates physical reasoning from rendering. Building on this framework, we introduce VisPhyBench, comprising 209 evaluation scenes derived from 108 physical templates and a systematic protocol that evaluates how well models reconstruct appearance and reproduce physically plausible motion. Our pipeline produces valid reconstructed videos in 97.7% of cases on the benchmark. Experiments show that while state-of-the-art MLLMs achieve strong semantic scene understanding, they struggle to accurately infer physical parameters and to simulate consistent physical dynamics.

Imagine a City: CityGenAgent for Procedural 3D City Generation

Feb 05, 2026

Abstract:The automated generation of interactive 3D cities is a critical challenge with broad applications in autonomous driving, virtual reality, and embodied intelligence. While recent advances in generative models and procedural techniques have improved the realism of city generation, existing methods often struggle with high-fidelity asset creation, controllability, and manipulation. In this work, we introduce CityGenAgent, a natural language-driven framework for hierarchical procedural generation of high-quality 3D cities. Our approach decomposes city generation into two interpretable components, Block Program and Building Program. To ensure structural correctness and semantic alignment, we adopt a two-stage learning strategy: (1) Supervised Fine-Tuning (SFT). We train BlockGen and BuildingGen to generate valid programs that adhere to schema constraints, including non-self-intersecting polygons and complete fields; (2) Reinforcement Learning (RL). We design Spatial Alignment Reward to enhance spatial reasoning ability and Visual Consistency Reward to bridge the gap between textual descriptions and the visual modality. Benefiting from the programs and the models' generalization, CityGenAgent supports natural language editing and manipulation. Comprehensive evaluations demonstrate superior semantic alignment, visual quality, and controllability compared to existing methods, establishing a robust foundation for scalable 3D city generation.

OPA-Pack: Object-Property-Aware Robotic Bin Packing

May 19, 2025

Abstract:Robotic bin packing aids in a wide range of real-world scenarios such as e-commerce and warehouses. Yet, existing works focus mainly on considering the shape of objects to optimize packing compactness and neglect object properties such as fragility, edibility, and chemistry that humans typically consider when packing objects. This paper presents OPA-Pack (Object-Property-Aware Packing framework), the first framework that equips the robot with object property considerations in planning the object packing. Technically, we develop a novel object property recognition scheme with retrieval-augmented generation and chain-of-thought reasoning, and build a dataset with object property annotations for 1,032 everyday objects. Also, we formulate OPA-Net, aiming to jointly separate incompatible object pairs and reduce pressure on fragile objects, while compacting the packing. Further, OPA-Net consists of a property embedding layer to encode the property of candidate objects to be packed, together with a fragility heightmap and an avoidance heightmap to keep track of the packed objects. Then, we design a reward function and adopt a deep Q-learning scheme to train OPA-Net. Experimental results show that OPA-Pack greatly improves the accuracy of separating incompatible object pairs (from 52% to 95%) and largely reduces pressure on fragile objects (by 29.4%), while maintaining good packing compactness. Besides, we demonstrate the effectiveness of OPA-Pack on a real packing platform, showcasing its practicality in real-world scenarios.

Rethinking End-to-End 2D to 3D Scene Segmentation in Gaussian Splatting

Mar 18, 2025

Abstract:Lifting multi-view 2D instance segmentation to a radiance field has proven to be effective to enhance 3D understanding. Existing methods rely on direct matching for end-to-end lifting, yielding inferior results; or employ a two-stage solution constrained by complex pre- or post-processing. In this work, we design a new end-to-end object-aware lifting approach, named Unified-Lift, that provides accurate 3D segmentation based on the 3D Gaussian representation. To start, we augment each Gaussian point with an additional Gaussian-level feature learned using a contrastive loss to encode instance information. Importantly, we introduce a learnable object-level codebook to account for individual objects in the scene for an explicit object-level understanding and associate the encoded object-level features with the Gaussian-level point features for segmentation predictions. While promising, achieving effective codebook learning is non-trivial and a naive solution leads to degraded performance. Therefore, we formulate the association learning module and the noisy label filtering module for effective and robust codebook learning. We conduct experiments on three benchmarks: LERF-Masked, Replica, and Messy Rooms datasets. Both qualitative and quantitative results show that our Unified-Lift clearly outperforms existing methods in terms of segmentation quality and time efficiency. The code is publicly available at https://github.com/Runsong123/Unified-Lift.

WonderVerse: Extendable 3D Scene Generation with Video Generative Models

Mar 13, 2025

Abstract:We introduce WonderVerse, a simple but effective framework for generating extendable 3D scenes. Unlike existing methods that rely on iterative depth estimation and image inpainting, often leading to geometric distortions and inconsistencies, WonderVerse leverages the powerful world-level priors embedded within video generative foundation models to create highly immersive and geometrically coherent 3D environments. Furthermore, we propose a new technique for controllable 3D scene extension to substantially increase the scale of the generated environments. Besides, we introduce a novel abnormal sequence detection module that utilizes camera trajectory to address geometric inconsistency in the generated videos. Finally, WonderVerse is compatible with various 3D reconstruction methods, allowing both efficient and high-quality generation. Extensive experiments on 3D scene generation demonstrate that our WonderVerse, with an elegant and simple pipeline, delivers extendable and highly-realistic 3D scenes, markedly outperforming existing works that rely on more complex architectures.

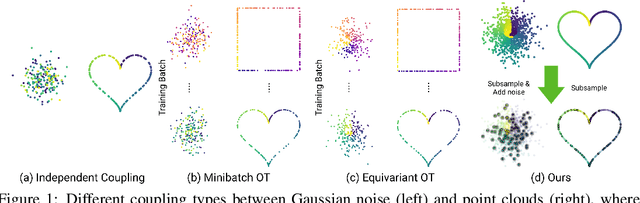

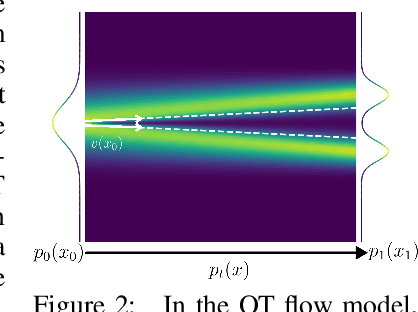

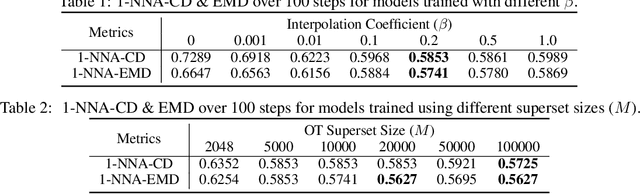

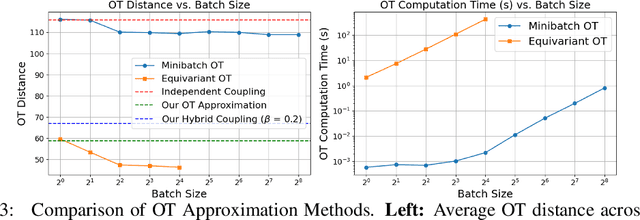

Not-So-Optimal Transport Flows for 3D Point Cloud Generation

Feb 18, 2025

Abstract:Learning generative models of 3D point clouds is one of the fundamental problems in 3D generative learning. One of the key properties of point clouds is their permutation invariance, i.e., changing the order of points in a point cloud does not change the shape they represent. In this paper, we analyze the recently proposed equivariant OT flows that learn permutation invariant generative models for point-based molecular data and we show that these models scale poorly on large point clouds. Also, we observe that learning (equivariant) OT flows is generally challenging since straightening flow trajectories makes the learned flow model complex at the beginning of the trajectory. To remedy these, we propose not-so-optimal transport flow models that obtain an approximate OT by an offline OT precomputation, enabling an efficient construction of OT pairs for training. During training, we can additionally construct a hybrid coupling by combining our approximate OT and independent coupling to make the target flow models easier to learn. In an extensive empirical study, we show that our proposed model outperforms prior diffusion- and flow-based approaches on a wide range of unconditional generation and shape completion tasks on the ShapeNet benchmark.
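As a rough illustration of the coupling idea in this abstract, the sketch below matches noise samples to data points with an exact OT assignment (brute force, so only feasible for a handful of points) and then blends each OT-matched noise sample with fresh Gaussian noise. The blending rule, the `beta` parameter, and all function names are assumptions made for illustration; the abstract does not specify how the approximate OT and independent couplings are combined, nor how the offline precomputation is done.

```python
import itertools
import math
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def exact_ot_assignment(noise, data):
    """Return (perm, cost) where noise[perm[i]] is matched to data[i].

    Brute force over all permutations: only feasible for tiny point sets.
    """
    best_perm, best_cost = None, float("inf")
    for perm in itertools.permutations(range(len(data))):
        cost = sum(squared_distance(noise[j], data[i]) for i, j in enumerate(perm))
        if cost < best_cost:
            best_perm, best_cost = list(perm), cost
    return best_perm, best_cost


def hybrid_coupling(noise, data, perm, beta, rng):
    """Blend OT-matched noise with fresh Gaussian noise (assumed rule).

    beta = 0 keeps the OT pairs; beta = 1 gives an independent coupling.
    """
    keep, fresh = math.sqrt(1.0 - beta), math.sqrt(beta)
    pairs = []
    for i, j in enumerate(perm):
        blended = tuple(keep * x + fresh * rng.gauss(0.0, 1.0) for x in noise[j])
        pairs.append((blended, data[i]))
    return pairs


rng = random.Random(0)
data = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
noise = [(2.1, 2.0), (0.1, 0.0), (1.0, 0.9)]
perm, cost = exact_ot_assignment(noise, data)
pairs = hybrid_coupling(noise, data, perm, beta=0.0, rng=rng)
```

With `beta=0.0` the pairs are exactly the OT matches; raising `beta` moves the coupling toward independent noise. A practical version would replace the brute-force search with an approximate solver.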

PCF-Lift: Panoptic Lifting by Probabilistic Contrastive Fusion

Oct 14, 2024

Abstract:Panoptic lifting is an effective technique to address the 3D panoptic segmentation task by unprojecting 2D panoptic segmentations from multi-views to a 3D scene. However, the quality of its results largely depends on the 2D segmentations, which could be noisy and error-prone, so its performance often drops significantly for complex scenes. In this work, we design a new pipeline coined PCF-Lift based on our Probabilistic Contrastive Fusion (PCF) to learn and embed probabilistic features throughout our pipeline to actively consider inaccurate segmentations and inconsistent instance IDs. Technically, we first model the probabilistic feature embeddings through multivariate Gaussian distributions. To fuse the probabilistic features, we incorporate the probability product kernel into the contrastive loss formulation and design a cross-view constraint to enhance the feature consistency across different views. For the inference, we introduce a new probabilistic clustering method to effectively associate prototype features with the underlying 3D object instances for the generation of consistent panoptic segmentation results. Further, we provide a theoretical analysis to justify the superiority of the proposed probabilistic solution. By conducting extensive experiments, our PCF-Lift not only significantly outperforms the state-of-the-art methods on widely used benchmarks including the ScanNet dataset and the challenging Messy Room dataset (4.4% improvement of scene-level PQ), but also demonstrates strong robustness when incorporating various 2D segmentation models or different levels of hand-crafted noise.
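To make the probability product kernel mentioned in this abstract concrete, here is a minimal sketch for two diagonal-covariance Gaussians with exponent 1 (often called the expected likelihood kernel), where the integral of p(x) q(x) has a closed form: a Gaussian density evaluated at the difference of the means, with the variances summed. The exponent, the diagonal covariance, and the function name are assumptions for illustration; the abstract does not state which form PCF-Lift uses.

```python
import math


def expected_likelihood_kernel(mean_p, var_p, mean_q, var_q):
    """Integral of p(x) * q(x) for two diagonal Gaussians.

    Closed form: a Gaussian density in (mean_p - mean_q) with summed variances,
    taken as a product over the independent dimensions.
    """
    value = 1.0
    for mp, vp, mq, vq in zip(mean_p, var_p, mean_q, var_q):
        var = vp + vq
        value *= math.exp(-0.5 * (mp - mq) ** 2 / var) / math.sqrt(2.0 * math.pi * var)
    return value


# Identical embeddings score higher than embeddings whose means are far apart.
same = expected_likelihood_kernel([0.0, 0.0], [1.0, 1.0], [0.0, 0.0], [1.0, 1.0])
far = expected_likelihood_kernel([0.0, 0.0], [1.0, 1.0], [3.0, 0.0], [1.0, 1.0])
```

A similarity of this kind accounts for uncertainty: inflating the variances flattens the kernel, so noisy embeddings contribute weaker attraction or repulsion when used inside a contrastive loss.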

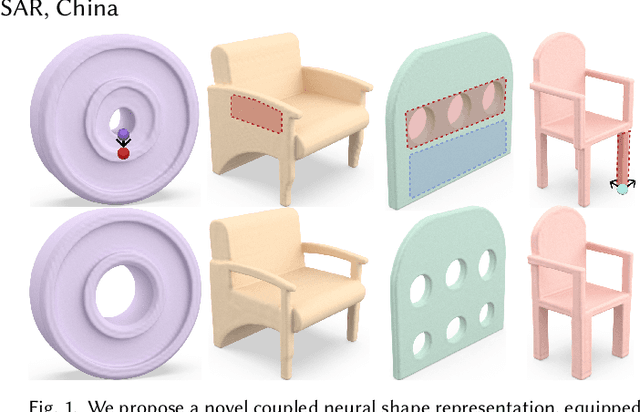

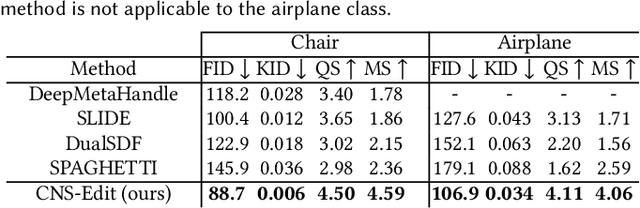

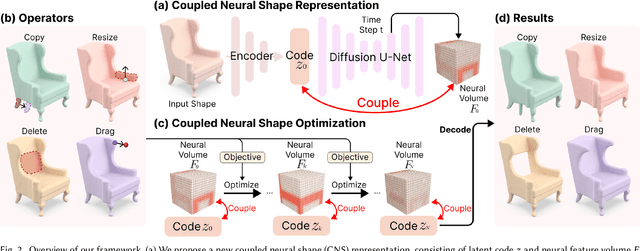

CNS-Edit: 3D Shape Editing via Coupled Neural Shape Optimization

Feb 04, 2024

Abstract:This paper introduces a new approach based on a coupled representation and a neural volume optimization to implicitly perform 3D shape editing in latent space. This work has three innovations. First, we design the coupled neural shape (CNS) representation for supporting 3D shape editing. This representation includes a latent code, which captures high-level global semantics of the shape, and a 3D neural feature volume, which provides a spatial context to associate with the local shape changes given by the editing. Second, we formulate the coupled neural shape optimization procedure to co-optimize the two coupled components in the representation subject to the editing operation. Last, we offer various 3D shape editing operators, i.e., copy, resize, delete, and drag, and derive each into an objective for guiding the CNS optimization, such that we can iteratively co-optimize the latent code and neural feature volume to match the editing target. With our approach, we can achieve a rich variety of editing results that are not only aware of the shape semantics but are also not easy to achieve by existing approaches. Both quantitative and qualitative evaluations demonstrate the strong capabilities of our approach over the state-of-the-art solutions.

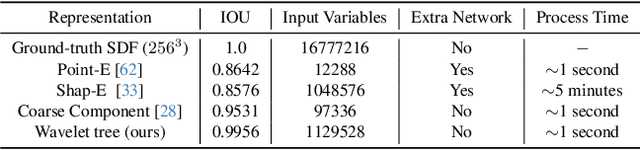

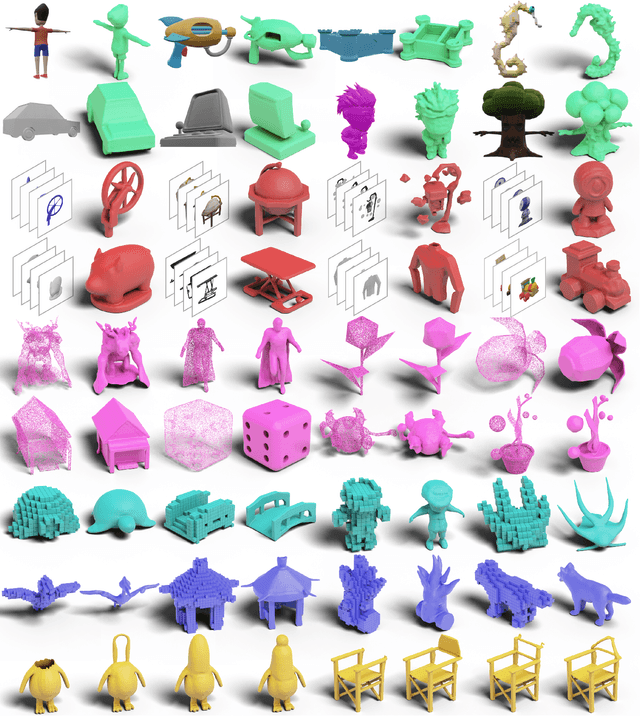

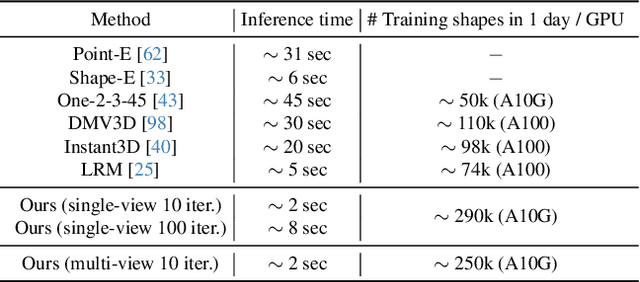

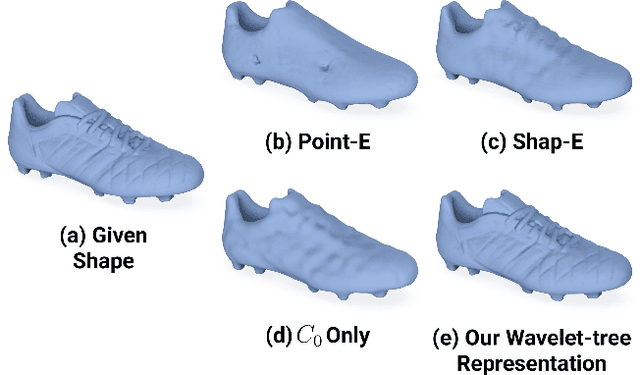

Make-A-Shape: a Ten-Million-scale 3D Shape Model

Jan 20, 2024

Abstract:Significant progress has been made in training large generative models for natural language and images. Yet, the advancement of 3D generative models is hindered by their substantial resource demands for training, along with inefficient, non-compact, and less expressive representations. This paper introduces Make-A-Shape, a new 3D generative model designed for efficient training on a vast scale, capable of utilizing 10 million publicly available shapes. Technically, we first innovate a wavelet-tree representation to compactly encode shapes by formulating the subband coefficient filtering scheme to efficiently exploit coefficient relations. We then make the representation generatable by a diffusion model by devising the subband coefficients packing scheme to lay out the representation in a low-resolution grid. Further, we derive the subband adaptive training strategy to train our model to effectively learn to generate coarse and detail wavelet coefficients. Last, we extend our framework to be controlled by additional input conditions to enable it to generate shapes from assorted modalities, e.g., single/multi-view images, point clouds, and low-resolution voxels. In our extensive set of experiments, we demonstrate various applications, such as unconditional generation, shape completion, and conditional generation on a wide range of modalities. Our approach not only surpasses the state of the art in delivering high-quality results but also efficiently generates shapes within a few seconds, often achieving this in just 2 seconds for most conditions.
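For readers unfamiliar with coarse and detail wavelet coefficients, the following is a deliberately simplified sketch: a one-level 1D Haar transform, its inverse, and a magnitude threshold on the detail coefficients. It is not the paper's wavelet-tree representation or its subband coefficient filtering scheme; the Haar basis, the 1D setting, the threshold rule, and every function name are assumptions chosen only to show the coarse/detail split.

```python
import math

SCALE = 1.0 / math.sqrt(2.0)


def haar_forward(signal):
    """Split an even-length signal into coarse (averages) and detail (differences)."""
    coarse = [(signal[i] + signal[i + 1]) * SCALE for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) * SCALE for i in range(0, len(signal), 2)]
    return coarse, detail


def haar_inverse(coarse, detail):
    """Rebuild the signal from its coarse and detail coefficients."""
    signal = []
    for c, d in zip(coarse, detail):
        signal.extend([(c + d) * SCALE, (c - d) * SCALE])
    return signal


def drop_small_details(detail, threshold):
    """Zero out detail coefficients with small magnitude (hypothetical filter)."""
    return [d if abs(d) >= threshold else 0.0 for d in detail]


signal = [4.0, 4.0, 2.0, 0.0]
coarse, detail = haar_forward(signal)
restored = haar_inverse(coarse, detail)
sparse_detail = drop_small_details(detail, threshold=0.5)
```

Flat regions produce zero detail coefficients, which is why a representation can often store the coarse band densely and keep only a sparse subset of the details.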
