John Grundy

Walking the Tightrope of LLMs for Software Development: A Practitioners' Perspective

Nov 09, 2025

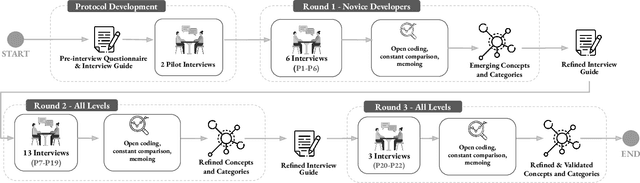

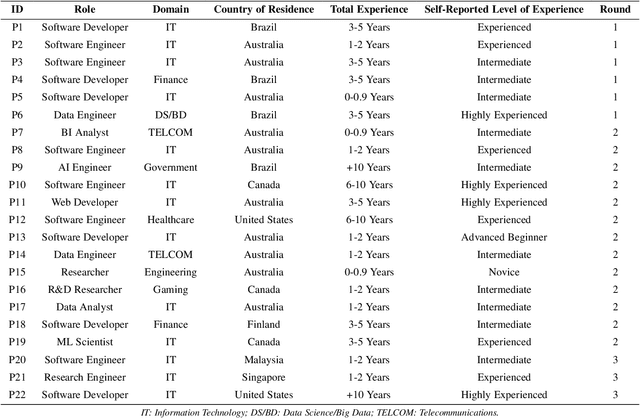

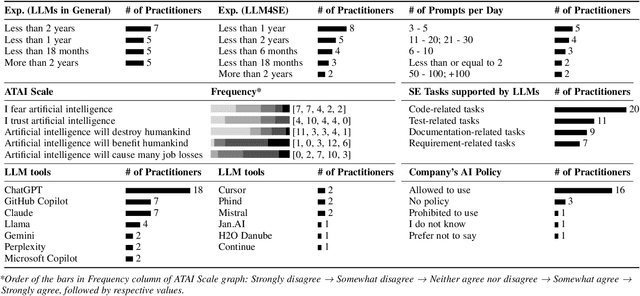

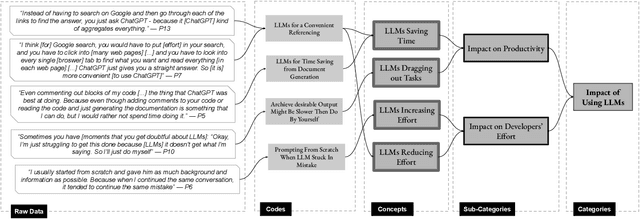

Abstract: Background: Large Language Models (LLMs) emerged with the potential of provoking a revolution in software development (e.g., automating processes, workforce transformation). Although studies have started to investigate the perceived impact of LLMs for software development, there is a need for empirical studies to comprehend how to balance forward and backward effects of using LLMs. Objective: We investigated how LLMs impact software development and how to manage the impact from a software developer's perspective. Method: We conducted 22 interviews with software practitioners across 3 rounds of data collection and analysis, between October 2024 and September 2025. We employed socio-technical grounded theory (STGT) to rigorously analyse interview participants' responses. Results: We identified the benefits (e.g., maintain software development flow, improve developers' mental model, and foster entrepreneurship) and disadvantages (e.g., negative impact on developers' personality and damage to developers' reputation) of using LLMs at individual, team, organisation, and society levels, as well as best practices on how to adopt LLMs. Conclusion: Critically, we present the trade-offs that software practitioners, teams, and organisations face in working with LLMs. Our findings are particularly useful for software team leaders and IT managers to assess the viability of LLMs within their specific context.

Junior Software Developers' Perspectives on Adopting LLMs for Software Engineering: a Systematic Literature Review

Mar 10, 2025

Abstract: Many studies exploring the adoption of Large Language Model-based tools for software development by junior developers have emerged in recent years. These studies have sought to understand developers' perspectives about using those tools, a fundamental pillar for successfully adopting LLM-based tools in Software Engineering. The aim of this paper is to provide an overview of junior software developers' perspectives and use of LLM-based tools for software engineering (LLM4SE). We conducted a systematic literature review (SLR) following guidelines by Kitchenham et al. on 56 primary studies, defining junior software developers as software developers with five years of experience or less, including Computer Science/Software Engineering students. We found that the majority of the studies focused on comprehending the different aspects of integrating AI tools in SE. Only 8.9% of the studies provide a clear definition for junior software developers, and there is no uniformity. Searching for relevant information is the most common task using LLM tools. ChatGPT was the most common LLM tool present in the studies (and experiments). A majority of the studies (83.9%) report both positive and negative perceptions about the impact of adopting LLM tools. We also found and categorised advantages, challenges, and recommendations regarding LLM adoption. Our results indicate that developers are using LLMs not just for code generation, but also to improve their development skills. Critically, they are not just experiencing the benefits of adopting LLM tools, but they are also aware of at least a few LLM limitations, such as the generation of wrong suggestions, potential data leaking, and AI hallucination. Our findings offer implications for software engineering researchers, educators, and developers.

Advice for Diabetes Self-Management by ChatGPT Models: Challenges and Recommendations

Jan 14, 2025

Abstract: Given their ability for advanced reasoning, extensive contextual understanding, and robust question-answering abilities, large language models have become prominent in healthcare management research. Despite adeptly handling a broad spectrum of healthcare inquiries, these models face significant challenges in delivering accurate and practical advice for chronic conditions such as diabetes. We evaluate the responses of ChatGPT versions 3.5 and 4 to diabetes patient queries, assessing their depth of medical knowledge and their capacity to deliver personalized, context-specific advice for diabetes self-management. Our findings reveal discrepancies in accuracy and embedded biases, emphasizing the models' limitations in providing tailored advice unless activated by sophisticated prompting techniques. Additionally, we observe that both models often provide advice without seeking necessary clarification, a practice that can result in potentially dangerous advice. This underscores the limited practical effectiveness of these models without human oversight in clinical settings. To address these issues, we propose a commonsense evaluation layer for prompt evaluation and incorporating disease-specific external memory using an advanced Retrieval Augmented Generation technique. This approach aims to improve information quality and reduce misinformation risks, contributing to more reliable AI applications in healthcare settings. Our findings seek to influence the future direction of AI in healthcare, enhancing both the scope and quality of its integration.

RepoTransBench: A Real-World Benchmark for Repository-Level Code Translation

Dec 23, 2024

Abstract: Repository-level code translation refers to translating an entire code repository from one programming language to another while preserving the functionality of the source repository. Many benchmarks have been proposed to evaluate the performance of such code translators. However, previous benchmarks mostly provide fine-grained samples, focusing on snippet-level, function-level, or file-level code translation. Such benchmarks do not accurately reflect real-world demands, where entire repositories often need to be translated, involving longer code length and more complex functionalities. To address this gap, we propose a new benchmark, named RepoTransBench, which is a real-world repository-level code translation benchmark with an automatically executable test suite. We conduct experiments on RepoTransBench to evaluate the translation performance of 11 advanced LLMs. We find that the Success@1 score (test success in one attempt) of the best-performing LLM is only 7.33%. To further explore the potential of LLMs for repository-level code translation, we provide LLMs with error-related feedback to perform iterative debugging and observe an average 7.09% improvement on Success@1. However, even with this improvement, the Success@1 score of the best-performing LLM is only 21%, which may not meet the need for reliable automatic repository-level code translation. Finally, we conduct a detailed error analysis and highlight current LLMs' deficiencies in repository-level code translation, which could provide a reference for further improvements.
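As a rough illustration of the Success@1 metric named in the abstract above, the following minimal sketch treats it as the fraction of repositories whose translated test suite passes on the first attempt. This reading is an assumption based only on the parenthetical "test success in one attempt"; the function name `success_at_1` and the sample outcomes are hypothetical and are not taken from the benchmark.

```python
from typing import Sequence


def success_at_1(first_attempt_passed: Sequence[bool]) -> float:
    """Fraction of repositories whose tests passed on the first attempt.

    Assumed definition for illustration only; the benchmark's own
    scoring script may differ.
    """
    if not first_attempt_passed:
        raise ValueError("need at least one repository result")
    return sum(first_attempt_passed) / len(first_attempt_passed)


# Hypothetical outcomes for 8 translated repositories (not data from the paper).
results = [True, False, False, False, True, False, False, False]
score = success_at_1(results)
print(f"Success@1 = {score:.2%}")
```

Under this reading, a score is computed per model over all repositories in the benchmark, so a single failing test suite counts the whole repository as unsuccessful.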

Requirements Engineering for Older Adult Digital Health Software: A Systematic Literature Review

Nov 06, 2024

Abstract: Growth of the older adult population has led to an increasing interest in technology-supported aged care. However, the area has some challenges such as a lack of caregivers and limitations in understanding the emotional, social, physical, and mental well-being needs of seniors. Furthermore, there is a gap in understanding between developers and ageing people regarding their requirements. Digital health can be important in supporting older adults' wellbeing, emotional requirements, and social needs. Requirements Engineering (RE) is a major software engineering field, which can help to identify, elicit and prioritize the requirements of stakeholders and ensure that the systems meet standards for performance, reliability, and usability. We carried out a systematic review of the literature on RE for older adult digital health software. This was necessary to capture the current state of understanding of the needs of older adults in aged care digital health. Using established guidelines outlined by the Kitchenham method and the PRISMA and PICO guidelines, we developed a protocol, followed by the systematic exploration of eight databases. This resulted in 69 primary studies of high relevance, which were subsequently subjected to data extraction, synthesis, and reporting. We highlight key RE processes in digital health software for ageing people. We explore the utilization of technology for older user well-being and care, and the evaluations of such solutions. The review also identified key limitations found in existing primary studies that inspire future research opportunities. The results indicate that requirement gathering and understanding vary significantly between studies. The differences are in the quality, depth, and techniques adopted for requirement gathering, and these differences are largely due to uneven adoption of RE methods.

Model-driven Engineering for Machine Learning Components: A Systematic Literature Review

Nov 01, 2023

Abstract: Context: Machine Learning (ML) has become widely adopted as a component in many modern software applications. Due to the large volumes of data available, organizations increasingly want to leverage their data to extract meaningful insights and enhance business profitability. ML components enable predictive capabilities, anomaly detection, recommendation, accurate image and text processing, and informed decision-making. However, developing systems with ML components is not trivial; it requires time, effort, knowledge, and expertise in ML, data processing, and software engineering. There have been several studies on the use of model-driven engineering (MDE) techniques to address these challenges when developing traditional software and cyber-physical systems. Recently, there has been a growing interest in applying MDE for systems with ML components. Objective: The goal of this study is to further explore the promising intersection of MDE with ML (MDE4ML) through a systematic literature review (SLR). Through this SLR, we wanted to analyze existing studies, including their motivations, MDE solutions, evaluation techniques, key benefits and limitations. Results: We analyzed selected studies with respect to several areas of interest and identified the following: 1) the key motivations behind using MDE4ML; 2) a variety of MDE solutions applied, such as modeling languages, model transformations, tool support, targeted ML aspects, contributions and more; 3) the evaluation techniques and metrics used; and 4) the limitations and directions for future work. We also discuss the gaps in existing literature and provide recommendations for future research. Conclusion: This SLR highlights current trends, gaps and future research directions in the field of MDE4ML, benefiting both researchers and practitioners.

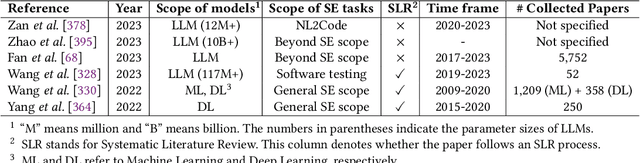

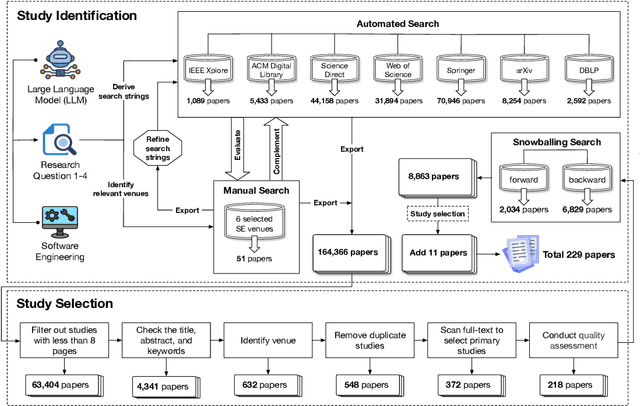

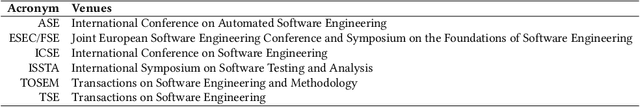

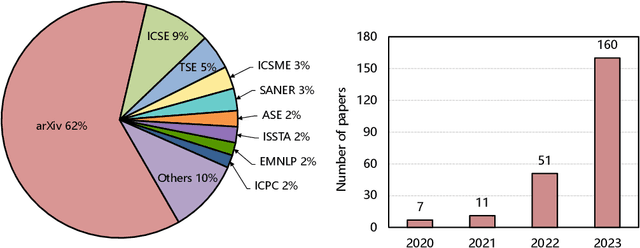

Large Language Models for Software Engineering: A Systematic Literature Review

Sep 12, 2023

Abstract: Large Language Models (LLMs) have significantly impacted numerous domains, including Software Engineering (SE). Many recent publications have explored LLMs applied to various SE tasks. Nevertheless, a comprehensive understanding of the application, effects, and possible limitations of LLMs on SE is still in its early stages. To bridge this gap, we conducted a systematic literature review on LLM4SE, with a particular focus on understanding how LLMs can be exploited to optimize processes and outcomes. We collect and analyze 229 research papers from 2017 to 2023 to answer four key research questions (RQs). In RQ1, we categorize different LLMs that have been employed in SE tasks, characterizing their distinctive features and uses. In RQ2, we analyze the methods used in data collection, preprocessing, and application, highlighting the role of well-curated datasets for successful LLM for SE implementation. RQ3 investigates the strategies employed to optimize and evaluate the performance of LLMs in SE. Finally, RQ4 examines the specific SE tasks where LLMs have shown success to date, illustrating their practical contributions to the field. From the answers to these RQs, we discuss the current state-of-the-art and trends, identifying gaps in existing research, and flagging promising areas for future study.

Requirements Framework for Engineering Human-centered Artificial Intelligence-Based Software Systems

Mar 06, 2023

Abstract: [Context] Artificial intelligence (AI) components used in building software solutions have substantially increased in recent years. However, many of these solutions end up focusing on technical aspects and ignore critical human-centered aspects. [Objective] Including human-centered aspects during requirements engineering (RE) when building AI-based software can help achieve more responsible, unbiased, and inclusive AI-based software solutions. [Method] In this paper, we present a new framework developed based on human-centered AI guidelines and a user survey to aid in collecting requirements for human-centered AI-based software. We provide a catalog to elicit these requirements and a conceptual model to present them visually. [Results] The framework is applied to a case study to elicit and model requirements for enhancing the quality of 360-degree videos intended for virtual reality (VR) users. [Conclusion] We found that our proposed approach helped the project team fully understand the needs of the project to deliver. Furthermore, the framework helped to understand what requirements need to be captured at the initial stages against later stages in the engineering process of AI-based software.

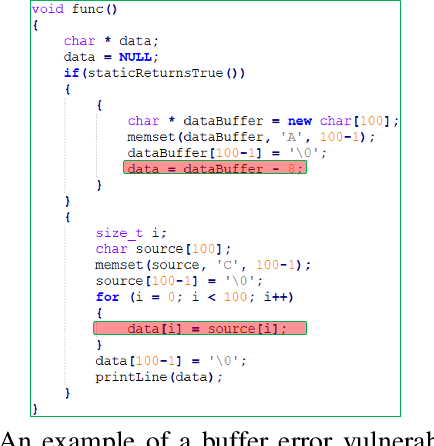

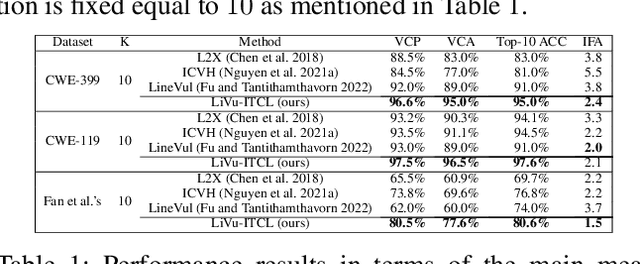

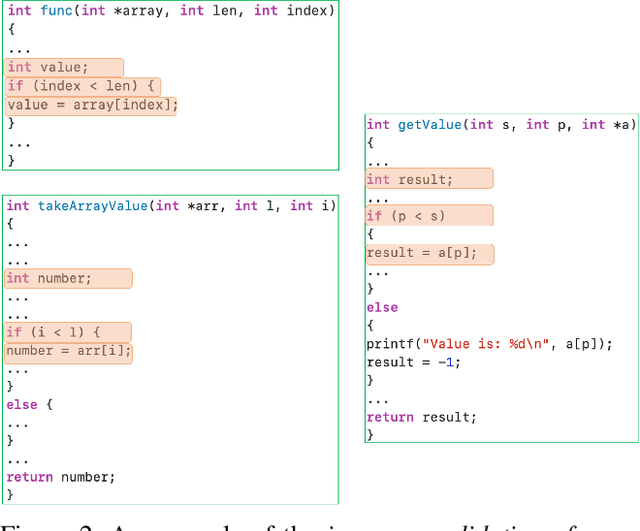

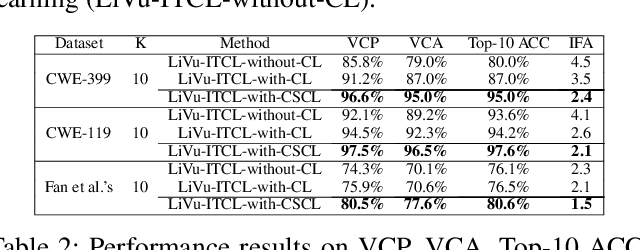

An Information-Theoretic and Contrastive Learning-based Approach for Identifying Code Statements Causing Software Vulnerability

Sep 20, 2022

Abstract: Software vulnerabilities existing in a program or function of computer systems are a serious and crucial concern. Typically, in a program or function consisting of hundreds or thousands of source code statements, there are only a few statements causing the corresponding vulnerabilities. Vulnerability labeling is currently done on a function or program level by experts with the assistance of machine learning tools. Extending this approach to the code statement level is much more costly and time-consuming and remains an open problem. In this paper we propose a novel end-to-end deep learning-based approach to identify the vulnerability-relevant code statements of a specific function. Inspired by the specific structures observed in real-world vulnerable code, we first leverage mutual information for learning a set of latent variables representing the relevance of the source code statements to the corresponding function's vulnerability. We then propose novel clustered spatial contrastive learning in order to further improve the representation learning and the robust selection process of vulnerability-relevant code statements. Experimental results on real-world datasets of 200k+ C/C++ functions show the superiority of our method over other state-of-the-art baselines. In general, our method obtains a higher performance in VCP, VCA, and Top-10 ACC measures of between 3% and 14% over the baselines when running on real-world datasets in an unsupervised setting. Our released source code samples are publicly available at https://github.com/vannguyennd/livuitcl

Cross Project Software Vulnerability Detection via Domain Adaptation and Max-Margin Principle

Sep 19, 2022

Abstract: Software vulnerabilities (SVs) have become a common, serious and crucial concern due to the ubiquity of computer software. Many machine learning-based approaches have been proposed to solve the software vulnerability detection (SVD) problem. However, there are still two open and significant issues for SVD in terms of i) learning automatic representations to improve the predictive performance of SVD, and ii) tackling the scarcity of labeled vulnerabilities datasets that conventionally need laborious labeling effort by experts. In this paper, we propose a novel end-to-end approach to tackle these two crucial issues. We first exploit the automatic representation learning with deep domain adaptation for software vulnerability detection. We then propose a novel cross-domain kernel classifier leveraging the max-margin principle to significantly improve the transfer learning process of software vulnerabilities from labeled projects into unlabeled ones. The experimental results on real-world software datasets show the superiority of our proposed method over state-of-the-art baselines. In short, our method obtains a higher performance on F1-measure, the most important measure in SVD, from 1.83% to 6.25% compared to the second highest method in the used datasets. Our released source code samples are publicly available at https://github.com/vannguyennd/dam2p
