Dilini Rajapaksha

LIMREF: Local Interpretable Model Agnostic Rule-based Explanations for Forecasting, with an Application to Electricity Smart Meter Data

Feb 15, 2022

Abstract: Accurate electricity demand forecasts play a crucial role in sustainable power systems. To enable better decision-making, especially for demand flexibility of the end-user, it is necessary to provide forecasts that are not only accurate but also understandable and actionable. Global Forecasting Models (GFM) trained across time series have recently shown superior results in many demand forecasting competitions and real-world applications compared with univariate forecasting approaches. We aim to fill the gap between accuracy and interpretability in global forecasting approaches. To explain the global model forecasts, we propose Local Interpretable Model-agnostic Rule-based Explanations for Forecasting (LIMREF), a local explainer framework that produces k-optimal impact rules for a particular forecast, treating the global forecasting model as a black box in a model-agnostic way. It provides different types of rules that explain the forecast of the global model, as well as counterfactual rules, which provide actionable insights into potential changes that would yield different outputs for given instances. We conduct experiments using a large-scale electricity demand dataset with exogenous features such as temperature and calendar effects. We evaluate the quality of the explanations produced by the LIMREF framework both qualitatively and quantitatively, in terms of accuracy, fidelity, and comprehensibility, and benchmark them against other local explainers.

LoMEF: A Framework to Produce Local Explanations for Global Model Time Series Forecasts

Nov 13, 2021

Abstract: Global Forecasting Models (GFM) that are trained across a set of multiple time series have shown superior results in many forecasting competitions and real-world applications compared with univariate forecasting approaches. One reason for the popularity of statistical forecasting models such as ETS and ARIMA is their relative simplicity and interpretability (in terms of relevant lags, trend, seasonality, and others), while GFMs typically lack interpretability, especially with respect to a particular time series. This reduces the trust and confidence of stakeholders, who must make decisions based on the forecasts without being able to understand the predictions. To mitigate this problem, we propose a novel local model-agnostic interpretability approach to explain the forecasts from GFMs. We train simpler univariate surrogate models that are considered interpretable (e.g., ETS) on the predictions of the GFM on samples within a neighbourhood, which we obtain either through bootstrapping or directly as the one-step-ahead global black-box model forecasts of the time series to be explained. We then evaluate the explanations for the forecasts of the global models both qualitatively and quantitatively, in terms of accuracy, fidelity, stability, and comprehensibility, and show the benefits of our approach.
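The surrogate idea described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: simple exponential smoothing stands in for ETS, the smoothing parameter is chosen by a plain grid search, and the names `ses_fitted`, `fit_surrogate`, and the input list of black-box forecasts are all hypothetical. The returned RMSE between surrogate and black-box forecasts is one possible way to express fidelity.

```python
from math import sqrt
from typing import List, Sequence, Tuple


def ses_fitted(series: Sequence[float], alpha: float) -> List[float]:
    """One-step-ahead simple exponential smoothing forecasts.

    fitted[t] is the forecast for series[t] made from series[:t];
    the level is initialised with the first observation (an assumption).
    """
    level = series[0]
    fitted = []
    for y in series:
        fitted.append(level)
        level = alpha * y + (1 - alpha) * level
    return fitted


def fit_surrogate(gfm_forecasts: Sequence[float]) -> Tuple[float, float]:
    """Fit the surrogate to the black-box forecasts of one series.

    Returns (best_alpha, rmse), where rmse measures how closely the
    surrogate reproduces the black-box forecasts (lower is more faithful).
    """
    if not gfm_forecasts:
        raise ValueError("gfm_forecasts must not be empty")
    best_alpha, best_rmse = 0.0, float("inf")
    for step in range(1, 21):  # candidate alphas 0.05, 0.10, ..., 1.00
        alpha = step / 20
        fitted = ses_fitted(gfm_forecasts, alpha)
        mse = sum((y - f) ** 2 for y, f in zip(gfm_forecasts, fitted)) / len(fitted)
        rmse = sqrt(mse)
        if rmse < best_rmse:
            best_alpha, best_rmse = alpha, rmse
    return best_alpha, best_rmse
```

In this sketch the fitted smoothing parameter plays the role of the interpretable explanation: a value near 1 indicates that the black-box forecasts for this series track the most recent values closely, while a small value indicates heavy smoothing.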

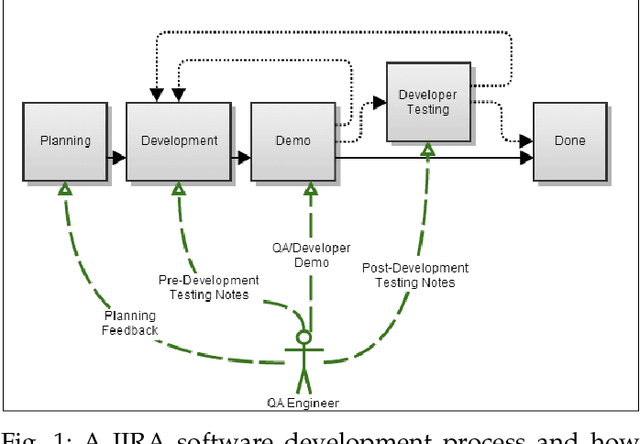

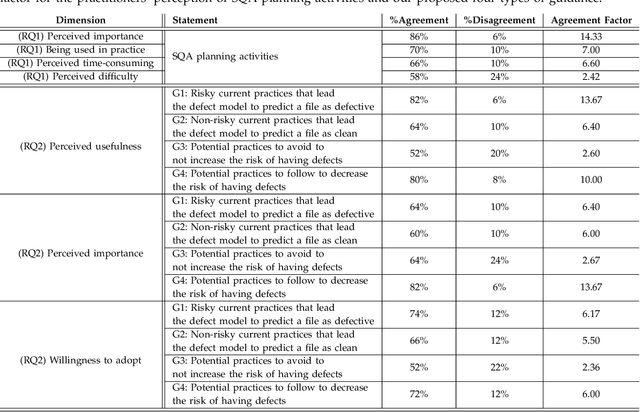

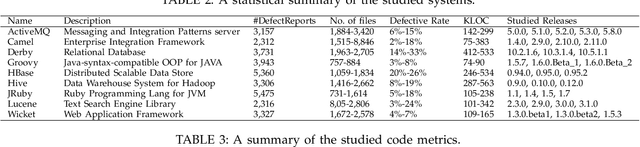

SQAPlanner: Generating Data-Informed Software Quality Improvement Plans

Feb 19, 2021

Abstract: Software Quality Assurance (SQA) planning aims to define proactive plans, such as defining a maximum file size, to prevent the occurrence of software defects in future releases. To aid this, defect prediction models have been proposed to generate insights such as the most important factors that are associated with software quality. Insights derived from traditional defect models are far from actionable, i.e., practitioners still do not know what they should do or avoid to decrease the risk of having defects, or what the risk threshold is for each metric. A lack of actionable guidance and risk thresholds can lead to inefficient and ineffective SQA planning processes. In this paper, we investigate practitioners' perceptions of current SQA planning activities and the current challenges of such activities, and propose four types of guidance to support SQA planning. We then propose and evaluate our AI-Driven SQAPlanner approach, a novel approach for generating four types of guidance and their associated risk thresholds in the form of rule-based explanations for the predictions of defect prediction models. Finally, we develop and evaluate an information visualization for our SQAPlanner approach. Through a qualitative survey and an empirical evaluation, our results lead us to conclude that SQAPlanner is needed, effective, stable, and practically applicable. We also find that 80% of our survey respondents perceived that our visualization is more actionable. Thus, our SQAPlanner paves the way for novel research in actionable software analytics, i.e., generating actionable guidance on what practitioners should and should not do to decrease the risk of having defects, in support of SQA planning.

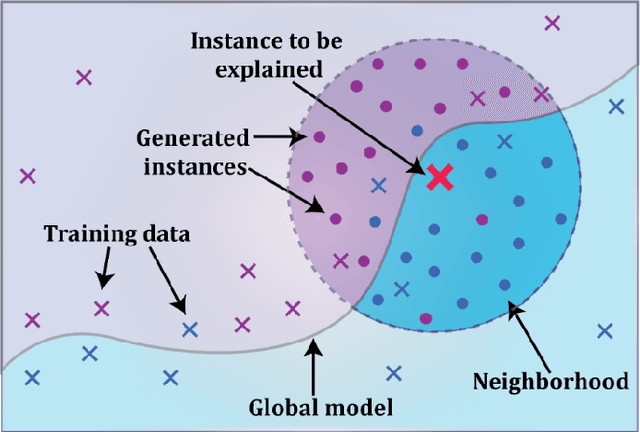

LoRMIkA: Local Rule-based Model Interpretability with k-optimal Associations

Aug 11, 2019

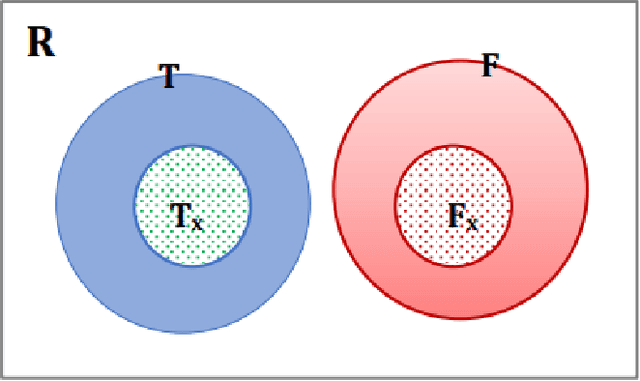

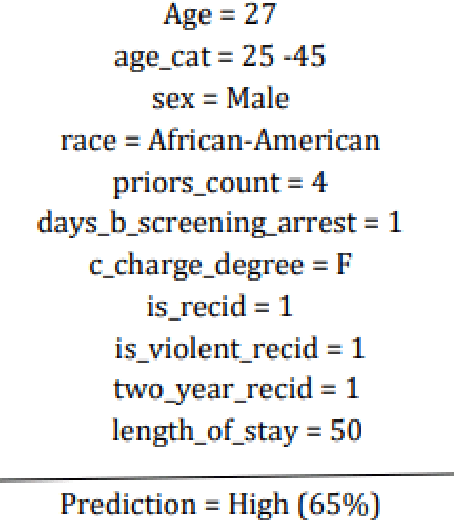

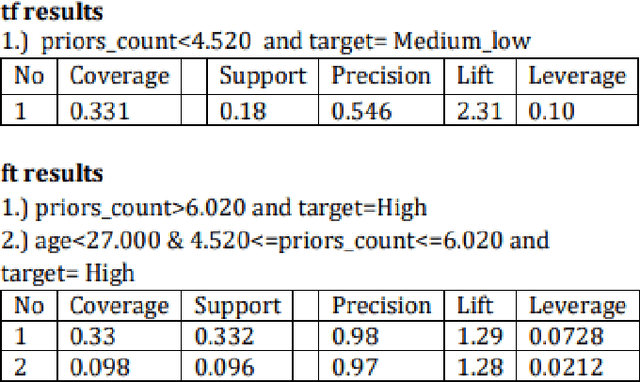

Abstract: As we rely more and more on machine learning models for real-life decision-making, being able to understand and trust the predictions becomes ever more important. Local explainer models have recently been introduced to explain the predictions of complex machine learning models at the instance level. In this paper, we propose Local Rule-based Model Interpretability with k-optimal Associations (LoRMIkA), a novel model-agnostic approach that obtains k-optimal association rules from a neighborhood of the instance to be explained. In contrast to other rule-based approaches in the literature, we argue that the most predictive rules are not necessarily the rules that provide the best explanations. Consequently, the LoRMIkA framework provides a flexible way to obtain predictive and interesting rules. It uses an efficient search algorithm guaranteed to find the k-optimal rules with respect to objectives such as strength, lift, leverage, coverage, and support. It also provides multiple rules that explain the decision, as well as counterfactual rules, which indicate potential changes that would yield different outputs for given instances. We compare our approach to other state-of-the-art approaches in local model interpretability on three different datasets, and achieve competitive results in terms of local accuracy and interpretability.
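The rule objectives named in this abstract have standard definitions in association-rule mining, which the following sketch computes for a single rule over a neighborhood sample. It only scores one rule and does not implement the k-optimal search itself; the function `rule_metrics`, the toy `neighborhood` data, and the feature names `temp` and `pred` are hypothetical, and "strength" is taken here to mean rule confidence.

```python
from typing import Callable, Dict, List

Row = Dict[str, str]


def rule_metrics(
    rows: List[Row],
    antecedent: Callable[[Row], bool],
    consequent: Callable[[Row], bool],
) -> Dict[str, float]:
    """Score the rule `antecedent -> consequent` on a set of rows."""
    n = len(rows)
    if n == 0:
        raise ValueError("rows must not be empty")
    n_a = sum(1 for r in rows if antecedent(r))
    n_c = sum(1 for r in rows if consequent(r))
    n_ac = sum(1 for r in rows if antecedent(r) and consequent(r))

    coverage = n_a / n                         # P(A)
    support = n_ac / n                         # P(A and C)
    p_c = n_c / n                              # P(C)
    strength = n_ac / n_a if n_a else 0.0      # P(C | A), i.e. confidence
    lift = strength / p_c if p_c else 0.0      # P(C | A) / P(C)
    leverage = support - coverage * p_c        # P(A and C) - P(A) P(C)
    return {
        "coverage": coverage,
        "support": support,
        "strength": strength,
        "lift": lift,
        "leverage": leverage,
    }


# Toy neighborhood: each row is a perturbed instance labelled with the
# black-box model's (made-up) prediction.
neighborhood: List[Row] = [
    {"temp": "high", "pred": "peak"},
    {"temp": "high", "pred": "peak"},
    {"temp": "high", "pred": "peak"},
    {"temp": "high", "pred": "normal"},
    {"temp": "low", "pred": "normal"},
    {"temp": "low", "pred": "normal"},
    {"temp": "low", "pred": "peak"},
    {"temp": "low", "pred": "normal"},
]
```

For example, `rule_metrics(neighborhood, lambda r: r["temp"] == "high", lambda r: r["pred"] == "peak")` scores the rule "temp = high implies pred = peak". A search procedure would rank candidate rules by whichever of these measures is chosen as the objective and keep the top k.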
