Rashina Hoda

Walking the Tightrope of LLMs for Software Development: A Practitioners' Perspective

Nov 09, 2025

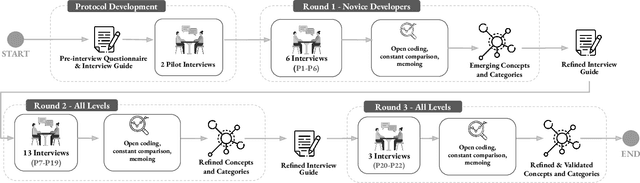

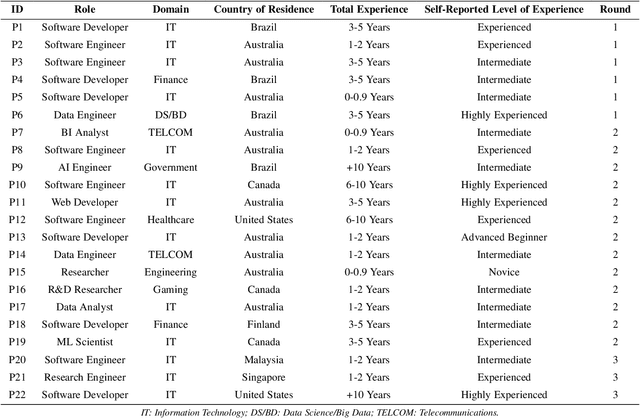

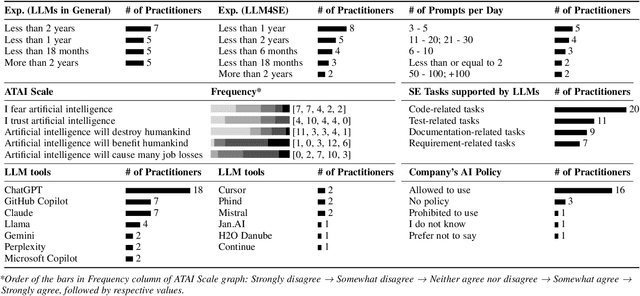

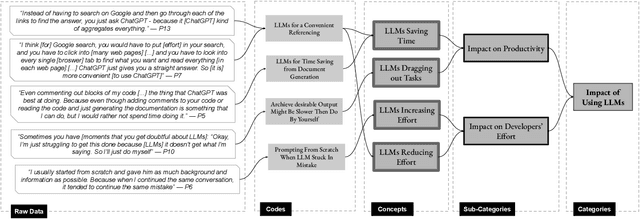

Abstract: Background: Large Language Models (LLMs) emerged with the potential to revolutionise software development (e.g., automating processes, workforce transformation). Although studies have started to investigate the perceived impact of LLMs on software development, empirical studies are needed to understand how to balance the forward and backward effects of using LLMs. Objective: We investigated how LLMs impact software development and how to manage that impact from a software developer's perspective. Method: We conducted 22 interviews with software practitioners across 3 rounds of data collection and analysis, between October 2024 and September 2025. We employed socio-technical grounded theory (STGT) to rigorously analyse interview participants' responses. Results: We identified the benefits (e.g., maintaining software development flow, improving developers' mental models, and fostering entrepreneurship) and disadvantages (e.g., negative impact on developers' personality and damage to developers' reputation) of using LLMs at the individual, team, organisation, and society levels, as well as best practices for adopting LLMs. Conclusion: Critically, we present the trade-offs that software practitioners, teams, and organisations face in working with LLMs. Our findings are particularly useful for software team leaders and IT managers assessing the viability of LLMs within their specific context.

Comparing Large Language Models and Traditional Machine Translation Tools for Translating Medical Consultation Summaries: A Pilot Study

Apr 23, 2025

Abstract: This study evaluates how well large language models (LLMs) and traditional machine translation (MT) tools translate medical consultation summaries from English into Arabic, Chinese, and Vietnamese. It assesses both patient-friendly and clinician-focused texts using standard automated metrics. Results showed that traditional MT tools generally performed better, especially for complex texts, while LLMs showed promise, particularly in Vietnamese and Chinese, when translating simpler summaries. Arabic translations improved with complexity due to the language's morphology. Overall, while LLMs offer contextual flexibility, they remain inconsistent, and current evaluation metrics fail to capture clinical relevance. The study highlights the need for domain-specific training, improved evaluation methods, and human oversight in medical translation.

Junior Software Developers' Perspectives on Adopting LLMs for Software Engineering: a Systematic Literature Review

Mar 10, 2025

Abstract: Many studies exploring the adoption of Large Language Model-based tools for software development by junior developers have emerged in recent years. These studies have sought to understand developers' perspectives on using those tools, a fundamental pillar for successfully adopting LLM-based tools in Software Engineering. The aim of this paper is to provide an overview of junior software developers' perspectives on and use of LLM-based tools for software engineering (LLM4SE). We conducted a systematic literature review (SLR) of 56 primary studies following the guidelines by Kitchenham et al., defining junior software developers as software developers with five or fewer years of experience, including Computer Science/Software Engineering students. We found that the majority of the studies focused on comprehending the different aspects of integrating AI tools in SE. Only 8.9% of the studies provide a clear definition of junior software developers, and the definitions are not uniform. Searching for relevant information is the most common task performed with LLM tools. ChatGPT was the most common LLM tool in the studies (and experiments). A majority of the studies (83.9%) report both positive and negative perceptions of the impact of adopting LLM tools. We also found and categorised advantages, challenges, and recommendations regarding LLM adoption. Our results indicate that developers are using LLMs not just for code generation, but also to improve their development skills. Critically, they are not just experiencing the benefits of adopting LLM tools, but are also aware of at least a few LLM limitations, such as the generation of wrong suggestions, potential data leakage, and AI hallucination. Our findings offer implications for software engineering researchers, educators, and developers.

Navigating Fairness: Practitioners' Understanding, Challenges, and Strategies in AI/ML Development

Mar 21, 2024

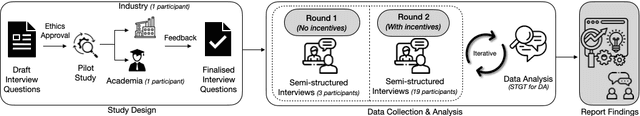

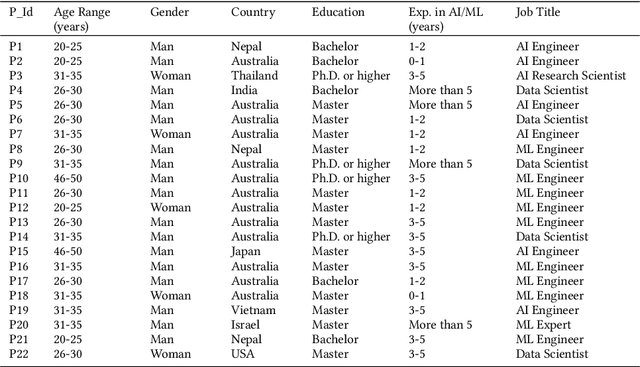

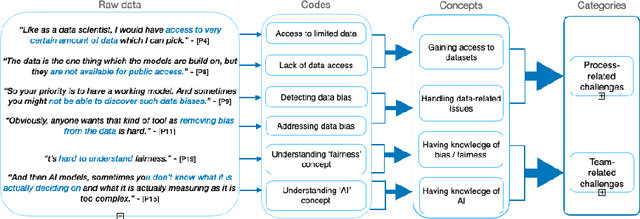

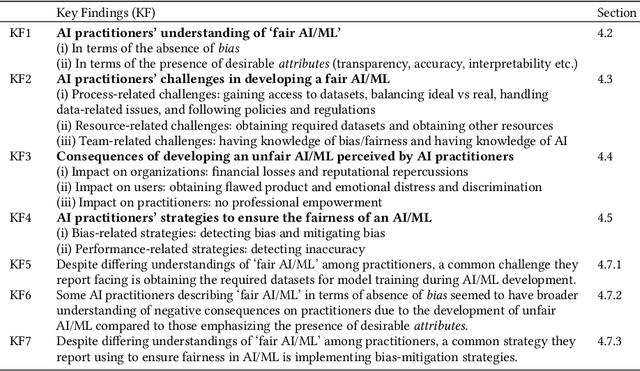

Abstract: The rise in the use of AI/ML applications across industries has sparked more discussion about the fairness of AI/ML in recent times. While prior research on the fairness of AI/ML exists, there is a lack of empirical studies focused on understanding the views and experiences of AI practitioners in developing fair AI/ML. Understanding AI practitioners' views and experiences on the fairness of AI/ML is important because they are directly involved in its development and deployment, and their insights can offer valuable real-world perspectives on the challenges associated with ensuring fairness in AI/ML. We conducted semi-structured interviews with 22 AI practitioners to investigate their understanding of what 'fair AI/ML' is, the challenges they face in developing fair AI/ML, the consequences of developing unfair AI/ML, and the strategies they employ to ensure AI/ML fairness. We developed a framework showcasing the relationship between AI practitioners' understanding of 'fair AI/ML' and (i) their challenges in its development, (ii) the consequences of developing unfair AI/ML, and (iii) the strategies used to ensure AI/ML fairness. We also identify areas for further investigation and offer recommendations to aid AI practitioners and AI companies in navigating fairness.

Ethics in the Age of AI: An Analysis of AI Practitioners' Awareness and Challenges

Jul 14, 2023

Abstract: Ethics in AI has become a debated topic of public and expert discourse in recent years. But what do people who build AI - AI practitioners - have to say about their understanding of AI ethics and the challenges associated with incorporating it in the AI-based systems they develop? Understanding AI practitioners' views on AI ethics is important as they are the ones closest to the AI systems and can bring about changes and improvements. We conducted a survey aimed at understanding AI practitioners' awareness of AI ethics and their challenges in incorporating ethics. Based on 100 AI practitioners' responses, our findings indicate that the majority of AI practitioners had a reasonable familiarity with the concept of AI ethics, primarily due to workplace rules and policies. Privacy protection and security was the ethical principle that the majority of them were aware of. Formal education/training was considered somewhat helpful in preparing practitioners to incorporate AI ethics. The challenges that AI practitioners faced in the development of ethical AI-based systems included (i) general challenges, (ii) technology-related challenges, and (iii) human-related challenges. We also identified areas needing further investigation and provided recommendations to assist AI practitioners and companies in incorporating ethics into AI development.
