Jie Pan

HCOMC: A Hierarchical Cooperative On-Ramp Merging Control Framework in Mixed Traffic Environment on Two-Lane Highways

Jul 15, 2025

Abstract:Highway on-ramp merging areas are common bottlenecks that cause traffic congestion and accidents. Currently, a cooperative control strategy based on connected and automated vehicles (CAVs) is a fundamental solution to this problem. While CAVs are not fully widespread, it is necessary to propose a hierarchical cooperative on-ramp merging control (HCOMC) framework for heterogeneous traffic flow on two-lane highways to address this gap. This paper extends longitudinal car-following models based on the intelligent driver model and lateral lane-changing models using the quintic polynomial curve to account for human-driven vehicles (HDVs) and CAVs, comprehensively considering human factors and cooperative adaptive cruise control. In addition, this paper proposes an HCOMC framework, consisting of a hierarchical cooperative planning model based on the modified virtual vehicle model, a discretionary lane-changing model based on game theory, and a multi-objective optimization model using the elitist non-dominated sorting genetic algorithm to ensure a safe, smooth, and efficient merging process. Then, the performance of our HCOMC is analyzed under different traffic densities and CAV penetration rates through simulation. The findings underscore our HCOMC's pronounced comprehensive advantages in enhancing the safety of group vehicles, stabilizing and expediting the merging process, optimizing traffic efficiency, and economizing fuel consumption compared with benchmarks.
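For readers unfamiliar with the intelligent driver model (IDM) mentioned in the abstract, the following is a minimal sketch of the standard, unextended IDM acceleration law. It is not the paper's extended model: the function name `idm_acceleration` and all default parameter values (desired speed, time headway, comfortable acceleration and braking, minimum gap) are illustrative assumptions chosen only to make the example runnable.

```python
import math


def idm_acceleration(v, gap, dv, v0=33.3, T=1.5, a_max=1.0, b=2.0, s0=2.0, delta=4.0):
    """Standard IDM acceleration (m/s^2) of a following vehicle.

    v     : follower speed (m/s)
    gap   : bumper-to-bumper distance to the leader (m), must be > 0
    dv    : approach rate, follower speed minus leader speed (m/s)
    v0, T, a_max, b, s0, delta : assumed illustrative parameters,
        not values taken from the paper.
    """
    # Desired dynamic gap: minimum gap plus headway and braking terms.
    s_star = s0 + max(0.0, v * T + v * dv / (2.0 * math.sqrt(a_max * b)))
    # Free-road term minus interaction term.
    return a_max * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)


# A vehicle closing quickly on a slower leader at short range brakes.
closing = idm_acceleration(v=30.0, gap=10.0, dv=10.0)
# A stationary vehicle on an empty road accelerates at roughly a_max.
free_road = idm_acceleration(v=0.0, gap=1e9, dv=0.0)
```

The free-road term pulls the speed toward `v0`, while the interaction term grows as the actual gap falls below the desired gap `s_star`, which is what produces braking when approaching a slower leader.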

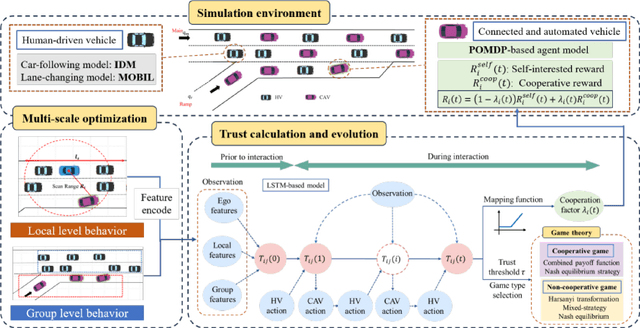

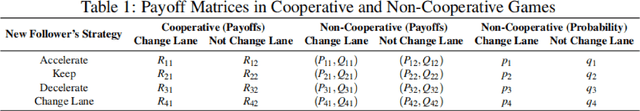

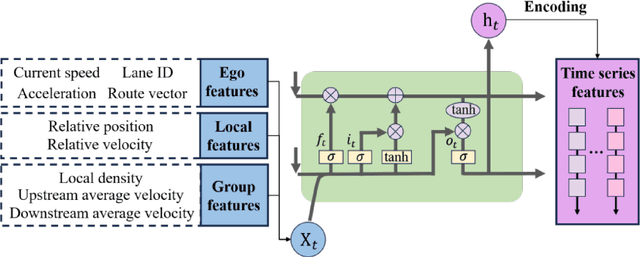

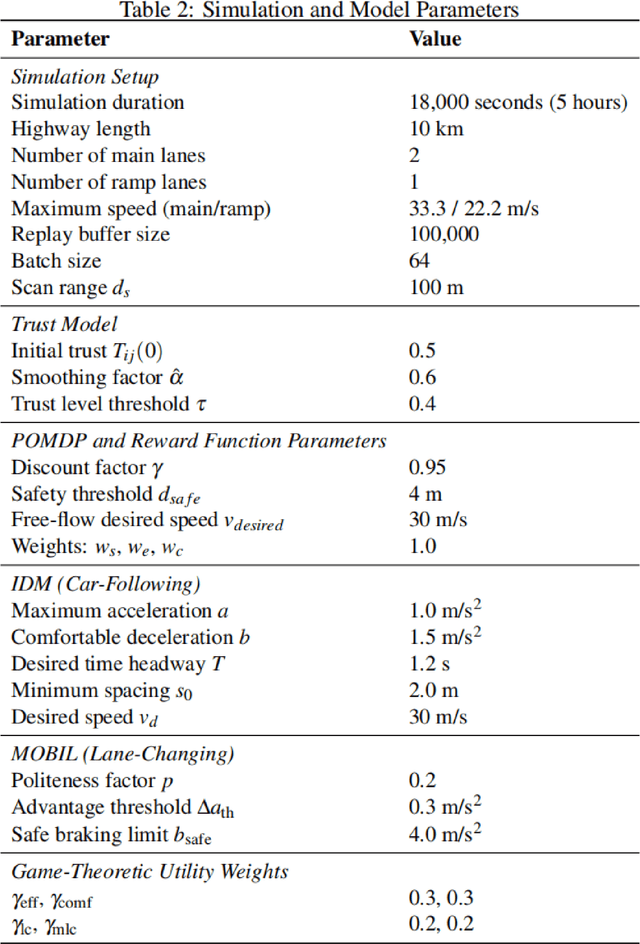

Trust-MARL: Trust-Based Multi-Agent Reinforcement Learning Framework for Cooperative On-Ramp Merging Control in Heterogeneous Traffic Flow

Jun 14, 2025

Abstract:Intelligent transportation systems require connected and automated vehicles (CAVs) to conduct safe and efficient cooperation with human-driven vehicles (HVs) in complex real-world traffic environments. However, the inherent unpredictability of human behaviour, especially at bottlenecks such as highway on-ramp merging areas, often disrupts traffic flow and compromises system performance. To address the challenge of cooperative on-ramp merging in heterogeneous traffic environments, this study proposes a trust-based multi-agent reinforcement learning (Trust-MARL) framework. At the macro level, Trust-MARL enhances global traffic efficiency by leveraging inter-agent trust to improve bottleneck throughput and mitigate traffic shockwaves through emergent group-level coordination. At the micro level, a dynamic trust mechanism is designed to enable CAVs to adjust their cooperative strategies in response to real-time behaviors and historical interactions with both HVs and other CAVs. Furthermore, a trust-triggered game-theoretic decision-making module is integrated to guide each CAV in adapting its cooperation factor and executing context-aware lane-changing decisions under safety, comfort, and efficiency constraints. An extensive set of ablation studies and comparative experiments validates the effectiveness of the proposed Trust-MARL approach, demonstrating significant improvements in safety, efficiency, comfort, and adaptability across varying CAV penetration rates and traffic densities.

Integrating Large Language Models with Human Expertise for Disease Detection in Electronic Health Records

Mar 31, 2025

Abstract:Objective: Electronic health records (EHR) are widely available to complement administrative data-based disease surveillance and healthcare performance evaluation. Defining conditions from EHR is labour-intensive and requires extensive manual labelling of disease outcomes. This study developed an efficient strategy based on advanced large language models to identify multiple conditions from EHR clinical notes. Methods: We linked a cardiac registry cohort in 2015 with an EHR system in Alberta, Canada. We developed a pipeline that leveraged a generative large language model (LLM) to analyze, understand, and interpret EHR notes by prompts based on specific diagnosis, treatment management, and clinical guidelines. The pipeline was applied to detect acute myocardial infarction (AMI), diabetes, and hypertension. The performance was compared against clinician-validated diagnoses as the reference standard and widely adopted International Classification of Diseases (ICD) code-based methods. Results: The study cohort comprised 3,088 patients and 551,095 clinical notes. The prevalence was 55.4%, 27.7%, and 65.9% for AMI, diabetes, and hypertension, respectively. The performance of the LLM-based pipeline for detecting conditions varied: AMI had 88% sensitivity, 63% specificity, and 77% positive predictive value (PPV); diabetes had 91% sensitivity, 86% specificity, and 71% PPV; and hypertension had 94% sensitivity, 32% specificity, and 72% PPV. Compared with ICD codes, the LLM-based method demonstrated improved sensitivity and negative predictive value across all conditions. The monthly percentage trends from the detected cases by LLM and reference standard showed consistent patterns.
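As a reminder of how the reported metrics relate to a confusion matrix, here is a minimal sketch. The function name `diagnostic_metrics` and the counts in the usage example are hypothetical and chosen for illustration only; they are not data from the study.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Compute standard diagnostic metrics from confusion-matrix counts.

    tp, fp, tn, fn: counts of true positives, false positives,
    true negatives, and false negatives against a reference standard.
    """
    return {
        "sensitivity": tp / (tp + fn),  # share of true cases detected
        "specificity": tn / (tn + fp),  # share of non-cases correctly ruled out
        "ppv": tp / (tp + fp),          # share of positive calls that are correct
        "npv": tn / (tn + fn),          # share of negative calls that are correct
    }


# Hypothetical counts, not taken from the study.
metrics = diagnostic_metrics(tp=90, fp=20, tn=80, fn=10)
```

High sensitivity combined with low specificity, as reported for hypertension, means the method misses few true cases but also flags many patients who do not have the condition.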

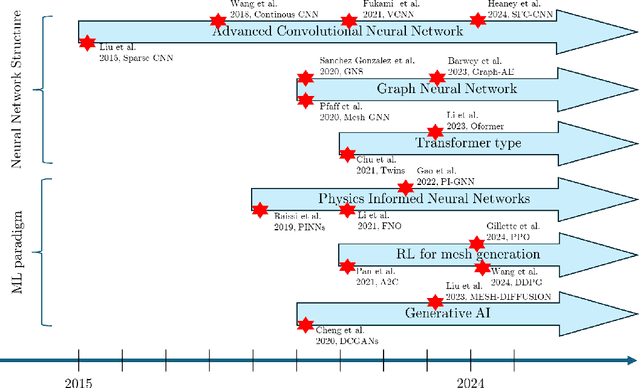

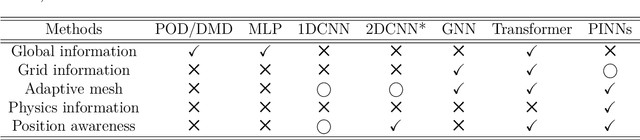

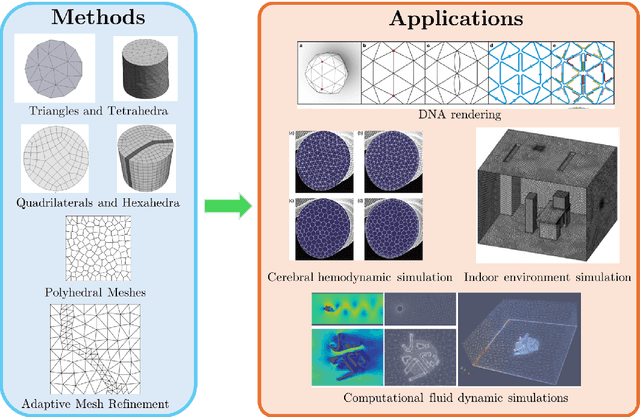

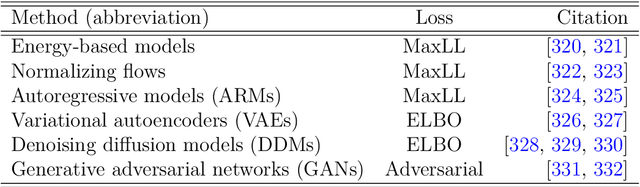

Machine learning for modelling unstructured grid data in computational physics: a review

Feb 13, 2025

Abstract:Unstructured grid data are essential for modelling complex geometries and dynamics in computational physics. Yet, their inherent irregularity presents significant challenges for conventional machine learning (ML) techniques. This paper provides a comprehensive review of advanced ML methodologies designed to handle unstructured grid data in high-dimensional dynamical systems. Key approaches discussed include graph neural networks, transformer models with spatial attention mechanisms, interpolation-integrated ML methods, and meshless techniques such as physics-informed neural networks. These methodologies have proven effective across diverse fields, including fluid dynamics and environmental simulations. This review is intended as a guidebook for computational scientists seeking to apply ML approaches to unstructured grid data in their domains, as well as for ML researchers looking to address challenges in computational physics. It places special focus on how ML methods can overcome the inherent limitations of traditional numerical techniques and, conversely, how insights from computational physics can inform ML development. To support benchmarking, this review also provides a summary of open-access datasets of unstructured grid data in computational physics. Finally, emerging directions such as generative models with unstructured data, reinforcement learning for mesh generation, and hybrid physics-data-driven paradigms are discussed to inspire future advancements in this evolving field.

Graph Structure Learning for Tumor Microenvironment with Cell Type Annotation from non-spatial scRNA-seq data

Feb 04, 2025

Abstract:The exploration of cellular heterogeneity within the tumor microenvironment (TME) via single-cell RNA sequencing (scRNA-seq) is essential for understanding cancer progression and response to therapy. Current scRNA-seq approaches, however, lack spatial context and rely on incomplete datasets of ligand-receptor interactions (LRIs), limiting accurate cell type annotation and cell-cell communication (CCC) inference. This study addresses these challenges using a novel graph neural network (GNN) model that enhances cell type prediction and cell interaction analysis. Our study utilized a dataset consisting of 49,020 cells from 19 patients across three cancer types: Leukemia, Breast Invasive Carcinoma, and Colorectal Cancer. The proposed scGSL model demonstrated robust performance, achieving an average accuracy of 84.83%, precision of 86.23%, recall of 81.51%, and an F1 score of 80.92% across all datasets. These metrics represent a significant enhancement over existing methods, which typically exhibit lower performance metrics. Additionally, by reviewing existing literature on gene interactions within the TME, the scGSL model is shown to robustly identify biologically meaningful gene interactions in an unsupervised manner, validated by significant expression differences in key gene pairs across various cancers. The source code and data used in this paper can be found at https://github.com/LiYuechao1998/scGSL.

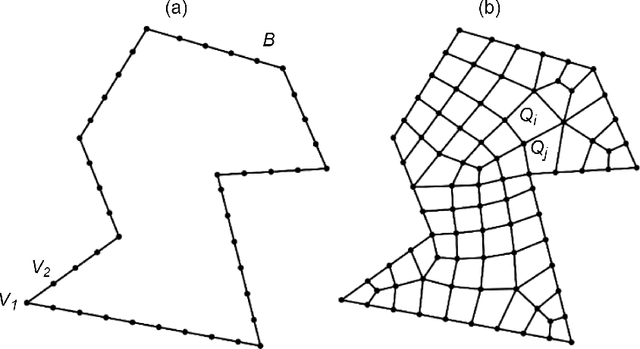

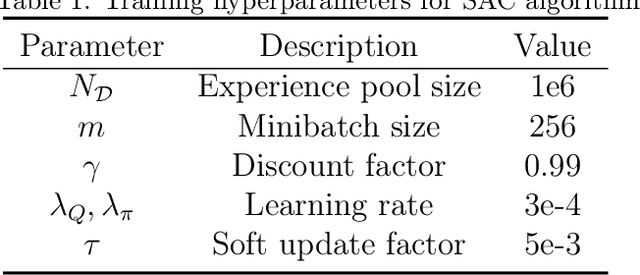

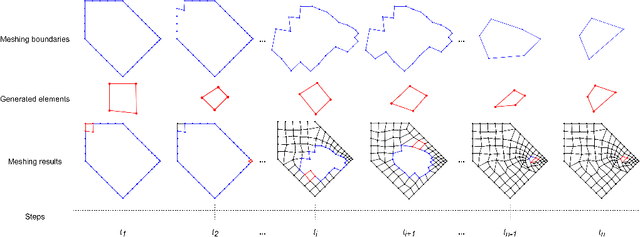

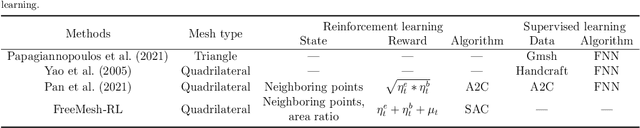

Reinforcement learning for automatic quadrilateral mesh generation: a soft actor-critic approach

Mar 19, 2022

Abstract:This paper proposes, implements, and evaluates a Reinforcement Learning (RL) based computational framework for automatic mesh generation. Mesh generation, as one of six basic research directions identified in NASA Vision 2030, is an important area in computational geometry and plays a fundamental role in numerical simulations in the area of finite element analysis (FEA) and computational fluid dynamics (CFD). Existing mesh generation methods suffer from high computational complexity, low mesh quality in complex geometries, and speed limitations. By formulating mesh generation as a Markov decision process (MDP) problem, we are able to use soft actor-critic, a state-of-the-art RL algorithm, to learn the meshing agent's policy from trials automatically, and achieve a fully automatic mesh generation system without human intervention or any extra clean-up operations, which are typically needed in current commercial software. In our experiments and comparison with several representative commercial software packages, our system demonstrates promising performance with respect to generalizability, robustness, and effectiveness.

RAPTOR: Robust and Perception-aware Trajectory Replanning for Quadrotor Fast Flight

Jul 06, 2020

Abstract:Recent advances in trajectory replanning have enabled quadrotors to navigate autonomously in unknown environments. However, high-speed navigation remains a significant challenge. Given very limited time, existing methods have no strong guarantee on the feasibility or quality of the solutions. Moreover, most methods do not consider environment perception, which is the key bottleneck to fast flight. In this paper, we present RAPTOR, a robust and perception-aware replanning framework to support fast and safe flight. A path-guided optimization (PGO) approach that incorporates multiple topological paths is devised to ensure finding feasible and high-quality trajectories in very limited time. We also introduce a perception-aware planning strategy to actively observe and avoid unknown obstacles. A risk-aware trajectory refinement ensures that unknown obstacles which may endanger the quadrotor can be observed earlier and avoided in time. The motion of the yaw angle is planned to actively explore the surrounding space that is relevant for safe navigation. The proposed methods are tested extensively. We will release our implementation as an open-source package for the community.

Robust Real-time UAV Replanning Using Guided Gradient-based Optimization and Topological Paths

Dec 29, 2019

Abstract:Gradient-based trajectory optimization (GTO) has gained wide popularity for quadrotor trajectory replanning. However, it suffers from local minima, which is not only fatal to safety but also unfavorable for smooth navigation. In this paper, we propose a replanning method based on GTO that addresses this issue systematically. A path-guided optimization (PGO) approach is devised to tackle infeasible local minima, which improves the replanning success rate significantly. A topological path searching algorithm is developed to capture a collection of distinct useful paths in 3-D environments, each of which then guides an independent trajectory optimization. It enables a more comprehensive exploration of the solution space and outputs superior replanned trajectories. Benchmark evaluation shows that our method outperforms state-of-the-art methods regarding replanning success rate and optimality. Challenging experiments of aggressive autonomous flight are presented to demonstrate the robustness of our method. We will release our implementation as an open-source package.

Teach-Repeat-Replan: A Complete and Robust System for Aggressive Flight in Complex Environments

Jul 01, 2019

Abstract:In this paper, we propose a complete and robust motion planning system for the aggressive flight of autonomous quadrotors. The proposed method is built upon a classical teach-and-repeat framework, which is widely adopted in infrastructure inspection, aerial transportation, and search-and-rescue. For these applications, human intention is essential to decide the topological structure of the flight trajectory of the drone. However, poor teaching trajectories and changing environments prevent a simple teach-and-repeat system from being applied flexibly and robustly. In this paper, instead of commanding the drone to precisely follow a teaching trajectory, we propose a method to automatically convert a human-piloted trajectory, which can be arbitrarily jerky, to a topologically equivalent one. The generated trajectory is guaranteed to be smooth, safe, and kinodynamically feasible, with a human-preferable aggressiveness. Also, to avoid unmapped or dynamic obstacles during flights, a sliding-window local perception and re-planning method is introduced into our system to generate safe local trajectories onboard. We name our system teach-repeat-replan. It can capture users' intention of a flight mission, convert an arbitrarily jerky teaching path to a smooth repeating trajectory, and generate safe local re-plans to avoid unmapped or moving obstacles. The proposed planning system is integrated into a complete autonomous quadrotor with global and local perception and localization sub-modules. Our system is validated by performing aggressive flights in challenging indoor/outdoor environments. We release all components in our quadrotor system as open-source ros-packages.

Real-Time Dense Stereo Embedded in A UAV for Road Inspection

Apr 12, 2019

Abstract:The condition assessment of road surfaces is essential to ensure their serviceability while still providing maximum road traffic safety. This paper presents a robust stereo vision system embedded in an unmanned aerial vehicle (UAV). The perspective view of the target image is first transformed into the reference view, and this not only improves the disparity accuracy, but also reduces the algorithm's computational complexity. The cost volumes generated from stereo matching are then filtered using a bilateral filter. The latter has been proved to be a feasible solution for the functional minimisation problem in a fully connected Markov random field model. Finally, the disparity maps are transformed by minimising an energy function with respect to the roll angle and disparity projection model. This makes the damaged road areas more distinguishable from the road surface. The proposed system is implemented on an NVIDIA Jetson TX2 GPU with CUDA for real-time purposes. It is demonstrated through experiments that the damaged road areas can be easily distinguished from the transformed disparity maps.
