Reinforcement learning for automatic quadrilateral mesh generation: a soft actor-critic approach


Mar 19, 2022

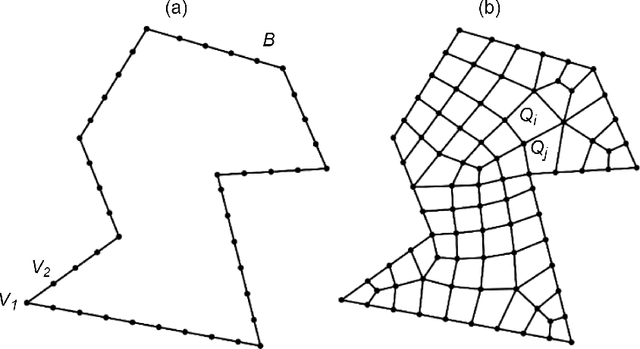

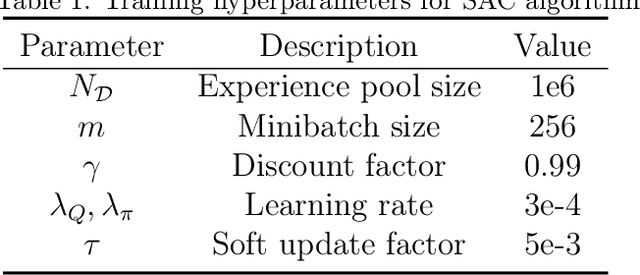

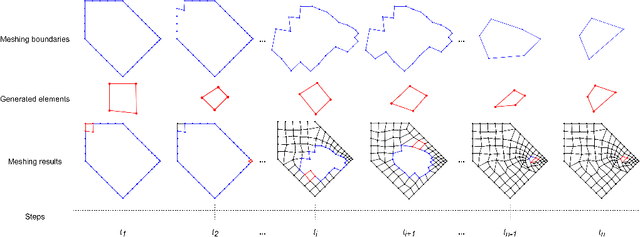

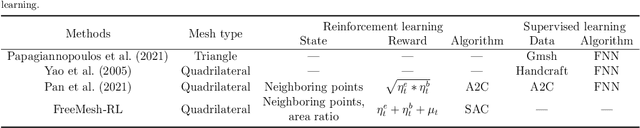

This paper proposes, implements, and evaluates a reinforcement learning (RL) based computational framework for automatic mesh generation. Mesh generation, one of six basic research directions identified in NASA Vision 2030, is an important area of computational geometry and plays a fundamental role in numerical simulation for finite element analysis (FEA) and computational fluid dynamics (CFD). Existing mesh generation methods suffer from high computational complexity, low mesh quality on complex geometries, and limited speed. By formulating mesh generation as a Markov decision process (MDP), we can use soft actor-critic, a state-of-the-art RL algorithm, to learn the meshing agent's policy automatically from trials. The result is a fully automatic mesh generation system that requires neither human intervention nor the extra clean-up operations typically needed in current commercial software. In experiments and comparisons with several representative commercial software packages, our system demonstrates promising performance in generalizability, robustness, and effectiveness.
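For readers unfamiliar with soft actor-critic, the standard maximum-entropy objective it optimizes can be written as follows. This is the general form from the usual SAC presentation, not a formula taken from this abstract: the symbols $s_t$, $a_t$, $r$, $\alpha$, and $\rho_\pi$ are the conventional notation, and the abstract does not specify how the meshing state, action, or reward are defined.

$$
J(\pi) = \sum_{t=0}^{T} \mathbb{E}_{(s_t, a_t) \sim \rho_\pi}\left[ r(s_t, a_t) + \alpha \, \mathcal{H}\big(\pi(\cdot \mid s_t)\big) \right]
$$

Here $\mathcal{H}$ is the entropy of the policy at state $s_t$ and $\alpha$ is a temperature that trades off reward against exploration. In a meshing setting, one would presumably read $s_t$ as the current partially meshed domain and $a_t$ as the placement of the next element, but that mapping is an assumption for illustration only.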
