Isabella Huang

Mobi-$\pi$: Mobilizing Your Robot Learning Policy

May 29, 2025

Abstract:Learned visuomotor policies are capable of performing increasingly complex manipulation tasks. However, most of these policies are trained on data collected from limited robot positions and camera viewpoints. This leads to poor generalization to novel robot positions, which limits the use of these policies on mobile platforms, especially for precise tasks like pressing buttons or turning faucets. In this work, we formulate the policy mobilization problem: find a mobile robot base pose in a novel environment that is in distribution with respect to a manipulation policy trained on a limited set of camera viewpoints. Compared to retraining the policy itself to be more robust to unseen robot base pose initializations, policy mobilization decouples navigation from manipulation and thus does not require additional demonstrations. Crucially, this problem formulation complements existing efforts to improve manipulation policy robustness to novel viewpoints and remains compatible with them. To study policy mobilization, we introduce the Mobi-$\pi$ framework, which includes: (1) metrics that quantify the difficulty of mobilizing a given policy, (2) a suite of simulated mobile manipulation tasks based on RoboCasa to evaluate policy mobilization, (3) visualization tools for analysis, and (4) several baseline methods. We also propose a novel approach that bridges navigation and manipulation by optimizing the robot's base pose to align with an in-distribution base pose for a learned policy. Our approach utilizes 3D Gaussian Splatting for novel view synthesis, a score function to evaluate pose suitability, and sampling-based optimization to identify optimal robot poses. We show that our approach outperforms baselines in both simulation and real-world environments, demonstrating its effectiveness for policy mobilization.
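The sampling-based search over base poses mentioned in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract does not say which sampler is used, so the cross-entropy method is swapped in here as one common choice, and `toy_score`, its `TARGET_POSE`, and all hyperparameters are hypothetical stand-ins for a learned score evaluated on rendered views.

```python
import numpy as np

# Hypothetical "in-distribution" base pose (x, y, heading); an assumption for illustration.
TARGET_POSE = np.array([1.0, -0.5, 0.3])

def toy_score(poses):
    """Hypothetical stand-in for a pose-suitability score (higher is better)."""
    diff = poses - TARGET_POSE
    # Wrap the heading error into [-pi, pi) so angles compare correctly.
    diff[:, 2] = (diff[:, 2] + np.pi) % (2 * np.pi) - np.pi
    return -np.sum(diff ** 2, axis=1)

def cem_optimize(score_fn, mean, std, n_samples=256, n_elite=32, n_iters=30, seed=0):
    """Cross-entropy method: sample poses, keep the best, refit the sampler."""
    rng = np.random.default_rng(seed)
    mean = np.asarray(mean, dtype=float)
    std = np.asarray(std, dtype=float)
    for _ in range(n_iters):
        samples = rng.normal(mean, std, size=(n_samples, mean.size))
        scores = score_fn(samples)
        elite = samples[np.argsort(scores)[-n_elite:]]  # highest-scoring poses
        mean = elite.mean(axis=0)
        std = elite.std(axis=0) + 1e-6  # small floor keeps sampling well-defined
    return mean

best_pose = cem_optimize(toy_score, mean=[0.0, 0.0, 0.0], std=[2.0, 2.0, 1.0])
print(best_pose)
```

In a real pipeline the score function would be far more expensive than this quadratic, so the sample and iteration counts would likely need tuning.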

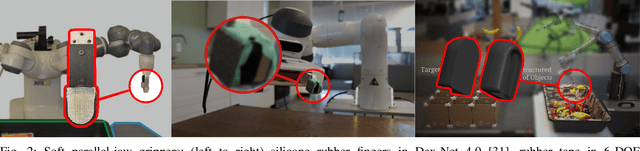

Practical Insights on Grasp Strategies for Mobile Manipulation in the Wild

Apr 16, 2025

Abstract:Mobile manipulation robots are continuously advancing, with their grasping capabilities rapidly progressing. However, there are still significant gaps preventing state-of-the-art mobile manipulators from widespread real-world deployments, including their ability to reliably grasp items in unstructured environments. To help bridge this gap, we developed SHOPPER, a mobile manipulation robot platform designed to push the boundaries of reliable and generalizable grasp strategies. We develop these grasp strategies and deploy them in a real-world grocery store -- an exceptionally challenging setting chosen for its vast diversity of manipulable items, fixtures, and layouts. In this work, we present our detailed approach to designing general grasp strategies towards picking any item in a real grocery store. Additionally, we provide an in-depth analysis of our latest real-world field test, discussing key findings related to fundamental failure modes over hundreds of distinct pick attempts. Through our detailed analysis, we aim to offer valuable practical insights and identify key grasping challenges, which can guide the robotics community towards pressing open problems in the field.

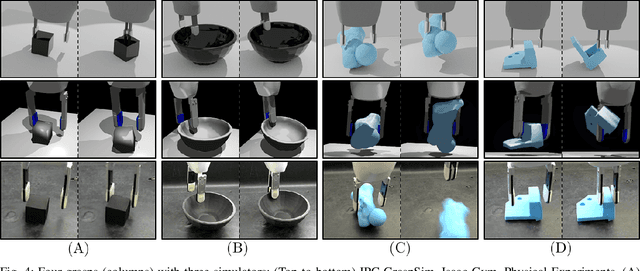

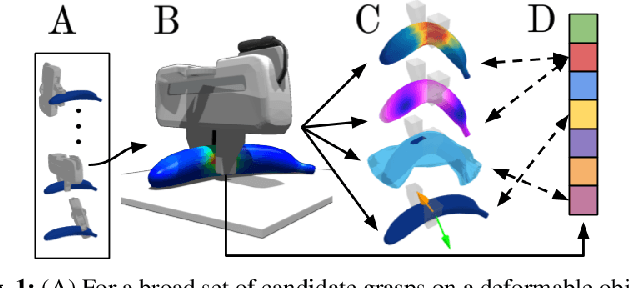

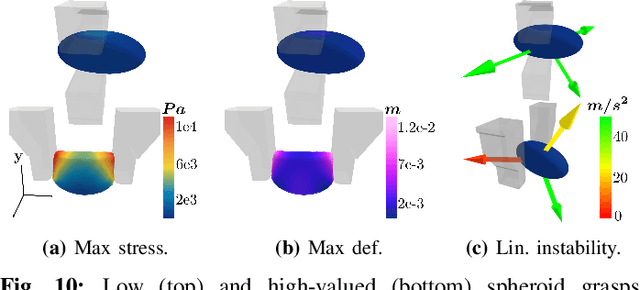

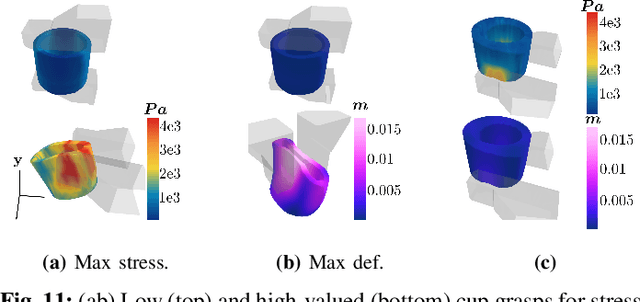

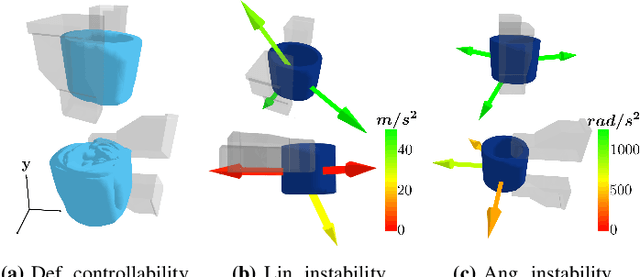

DefGraspNets: Grasp Planning on 3D Fields with Graph Neural Nets

Mar 28, 2023

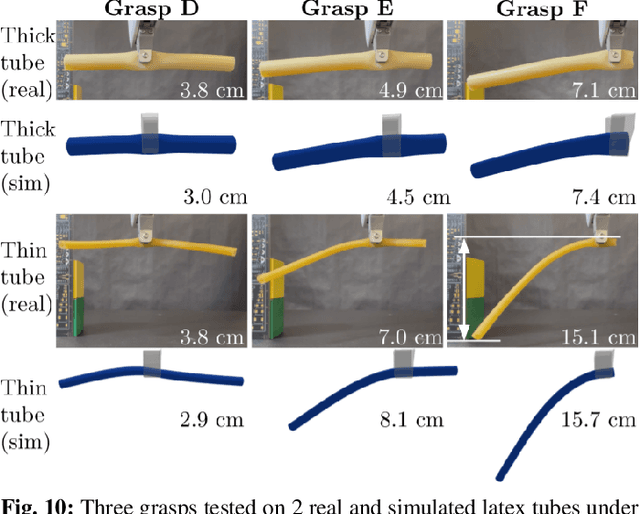

Abstract:Robotic grasping of 3D deformable objects is critical for real-world applications such as food handling and robotic surgery. Unlike rigid and articulated objects, 3D deformable objects have infinite degrees of freedom. Fully defining their state requires 3D deformation and stress fields, which are exceptionally difficult to analytically compute or experimentally measure. Thus, evaluating grasp candidates for grasp planning typically requires accurate, but slow 3D finite element method (FEM) simulation. Sampling-based grasp planning is often impractical, as it requires evaluation of a large number of grasp candidates. Gradient-based grasp planning can be more efficient, but requires a differentiable model to synthesize optimal grasps from initial candidates. Differentiable FEM simulators may fill this role, but are typically no faster than standard FEM. In this work, we propose learning a predictive graph neural network (GNN), DefGraspNets, to act as our differentiable model. We train DefGraspNets to predict 3D stress and deformation fields based on FEM-based grasp simulations. DefGraspNets not only runs up to 1500 times faster than the FEM simulator, but also enables fast gradient-based grasp optimization over 3D stress and deformation metrics. We design DefGraspNets to align with real-world grasp planning practices and demonstrate generalization across multiple test sets, including real-world experiments.
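To make the idea of gradient-based grasp refinement over a differentiable model concrete, here is a minimal sketch under strong assumptions. The quadratic `surrogate_stress`, its `CENTER` and `WEIGHTS`, and the three-parameter grasp vector are all hypothetical; in the paper the differentiable model is a trained GNN and its gradients would come from automatic differentiation rather than a hand-written formula.

```python
import numpy as np

# Hypothetical surrogate parameters; not taken from the paper.
CENTER = np.array([0.2, -0.1, 0.5])   # grasp parameters with the lowest predicted stress
WEIGHTS = np.array([3.0, 1.0, 2.0])   # sensitivity of stress to each parameter

def surrogate_stress(g):
    """Toy differentiable stand-in for a learned stress predictor."""
    return float(np.sum(WEIGHTS * (g - CENTER) ** 2) + 0.4)

def surrogate_grad(g):
    """Analytic gradient of surrogate_stress with respect to g."""
    return 2.0 * WEIGHTS * (g - CENTER)

def refine_grasp(g0, lr=0.1, n_steps=200):
    """Plain gradient descent from an initial grasp candidate."""
    g = np.asarray(g0, dtype=float).copy()
    for _ in range(n_steps):
        g -= lr * surrogate_grad(g)
    return g

initial = np.array([1.0, 1.0, -1.0])
refined = refine_grasp(initial)
print(surrogate_stress(initial), surrogate_stress(refined))
```

The contrast with sampling-based planning is that each candidate is improved by following the gradient instead of being evaluated and discarded, which is only practical when the model is fast to evaluate and differentiate.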

Design Project of an Open-Source, Low-Cost, and Lightweight Robotic Manipulator for High School Students

Feb 21, 2023

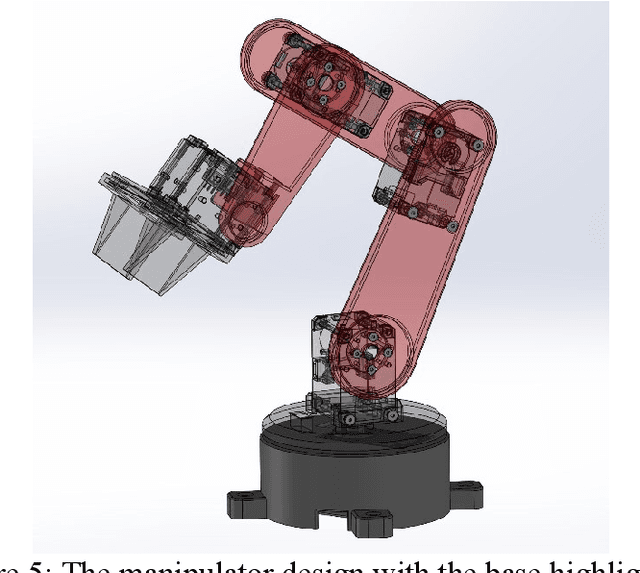

Abstract:In recent years, there has been increasing interest in high school robotics extracurriculars such as robotics clubs and robotics competitions. The growing demand is a result of more ubiquitous open-source software and affordable off-the-shelf hardware kits, which significantly help lower the barrier for entry-level robotics hobbyists. In this project, we present an open-source, low-cost, and lightweight robotic manipulator designed and developed by a high school researcher under the guidance of a university faculty member and a Ph.D. student. We believe the presented project is suitable for high school robotics research and educational activities. Our open-source package consists of mechanical design models, mechatronics specifications, and software source code. The mechanical design models include CAD (Computer Aided Design) files that are ready for prototyping via 3D printing and serve as an assembly guide accompanied by a complete bill of materials. Electrical wiring diagrams and low-level controllers are documented in detail as part of the open-source software package. The educational objective of this project is to enable high school student teams to replicate and build a robotic manipulator. The engineering experience that high school students acquire in the proposed project is full-stack, including mechanical design, mechatronics, and programming. The project significantly enriches their hands-on engineering experience in a project-based environment. Throughout this project, we discovered that the high school researcher was able to apply multidisciplinary knowledge from K-12 STEM courses to build the robotic manipulator. The researcher was able to go through a systems engineering design and development process and acquire the skills to use professional engineering tools including SolidWorks and Arduino microcontrollers.

DefGraspSim: Physics-based simulation of grasp outcomes for 3D deformable objects

Mar 21, 2022

Abstract:Robotic grasping of 3D deformable objects (e.g., fruits/vegetables, internal organs, bottles/boxes) is critical for real-world applications such as food processing, robotic surgery, and household automation. However, developing grasp strategies for such objects is uniquely challenging. Unlike rigid objects, deformable objects have infinite degrees of freedom and require field quantities (e.g., deformation, stress) to fully define their state. As these quantities are not easily accessible in the real world, we propose studying interaction with deformable objects through physics-based simulation. As such, we simulate grasps on a wide range of 3D deformable objects using a GPU-based implementation of the corotational finite element method (FEM). To facilitate future research, we open-source our simulated dataset (34 objects, 1e5 Pa elasticity range, 6800 grasp evaluations, 1.1M grasp measurements), as well as a code repository that allows researchers to run our full FEM-based grasp evaluation pipeline on arbitrary 3D object models of their choice. Finally, we demonstrate good correspondence between grasp outcomes on simulated objects and their real counterparts.

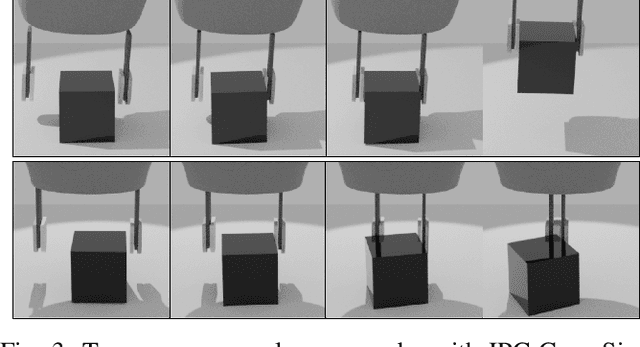

Simulation of Parallel-Jaw Grasping using Incremental Potential Contact Models

Nov 02, 2021

Abstract:Soft compliant jaw tips are almost universally used with parallel-jaw robot grippers due to their ability to increase contact area and friction between the jaws and the object to be manipulated. However, interactions between the compliant surfaces and rigid objects are notoriously difficult to model. We introduce IPC-GraspSim, a novel simulator using Incremental Potential Contact (IPC) - a deformation model developed in 2020 for computer graphics - that models both the dynamics and the deformation of compliant jaw tips during grasping. IPC-GraspSim is evaluated using a set of 2,000 physical grasps across 16 adversarial objects where standard analytic models perform poorly. In comparison to both analytic quasistatic contact models (soft point contact, REACH, 6DFC) and dynamic grasp simulators (Isaac Gym with FleX backend), results suggest that IPC-GraspSim more accurately models real-world grasps, increasing F1 score by 9%. All data, code, videos, and supplementary material are available at https://sites.google.com/berkeley.edu/ipcgraspsim.
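For readers unfamiliar with the F1 score used to compare simulators against physical grasps, the following sketch shows how it is computed from predicted and observed grasp outcomes. The outcome lists are invented for illustration and are not data from the paper.

```python
def f1_score(predicted, actual):
    """F1 = harmonic mean of precision and recall for boolean grasp outcomes."""
    tp = sum(p and a for p, a in zip(predicted, actual))        # predicted success, succeeded
    fp = sum(p and not a for p, a in zip(predicted, actual))    # predicted success, failed
    fn = sum(not p and a for p, a in zip(predicted, actual))    # predicted failure, succeeded
    if tp == 0:
        return 0.0  # avoids division by zero when nothing was predicted correctly
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

# Made-up example: simulator predictions vs. physical grasp results.
predicted = [True, True, False, True, False, False]
actual = [True, False, False, True, True, False]
score = f1_score(predicted, actual)
print(round(score, 3))  # 0.667
```

Here precision and recall are both 2/3, so the F1 score is also 2/3.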

DefGraspSim: Simulation-based grasping of 3D deformable objects

Jul 12, 2021

Abstract:Robotic grasping of 3D deformable objects (e.g., fruits/vegetables, internal organs, bottles/boxes) is critical for real-world applications such as food processing, robotic surgery, and household automation. However, developing grasp strategies for such objects is uniquely challenging. In this work, we efficiently simulate grasps on a wide range of 3D deformable objects using a GPU-based implementation of the corotational finite element method (FEM). To facilitate future research, we open-source our simulated dataset (34 objects, 1e5 Pa elasticity range, 6800 grasp evaluations, 1.1M grasp measurements), as well as a code repository that allows researchers to run our full FEM-based grasp evaluation pipeline on arbitrary 3D object models of their choice. We also provide a detailed analysis on 6 object primitives. For each primitive, we methodically describe the effects of different grasp strategies, compute a set of performance metrics (e.g., deformation, stress) that fully capture the object response, and identify simple grasp features (e.g., gripper displacement, contact area) measurable by robots prior to pickup and predictive of these performance metrics. Finally, we demonstrate good correspondence between grasps on simulated objects and their real-world counterparts.

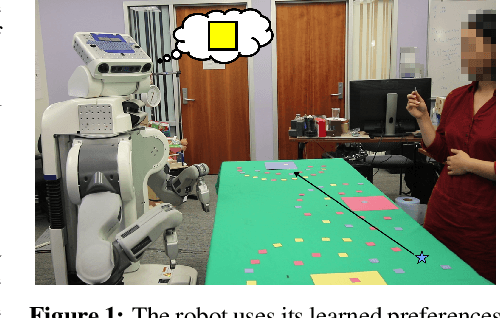

Nonverbal Robot Feedback for Human Teachers

Nov 06, 2019

Abstract:Robots can learn preferences from human demonstrations, but their success depends on how informative these demonstrations are. Being informative is unfortunately very challenging, because during teaching, people typically get no transparency into what the robot already knows or has learned so far. In contrast, human students naturally provide a wealth of nonverbal feedback that reveals their level of understanding and engagement. In this work, we study how a robot can similarly provide feedback that is minimally disruptive, yet gives human teachers a better mental model of the robot learner, and thus enables them to teach more effectively. Our idea is that at any point, the robot can indicate what it thinks the correct next action is, shedding light on its current estimate of the human's preferences. We analyze how useful this feedback is, both in theory and with two user studies---one with a virtual character that tests the feedback itself, and one with a PR2 robot that uses gaze as the feedback mechanism. We find that feedback can be useful for improving both the quality of teaching and teachers' understanding of the robot's capability.
