Mark Tjersland

GTR: Gaussian Splatting Tracking and Reconstruction of Unknown Objects Based on Appearance and Geometric Complexity

May 17, 2025

Abstract:We present a novel method for 6-DoF object tracking and high-quality 3D reconstruction from monocular RGBD video. Existing methods, while achieving impressive results, often struggle with complex objects, particularly those exhibiting symmetry, intricate geometry or complex appearance. To bridge these gaps, we introduce an adaptive method that combines 3D Gaussian Splatting, hybrid geometry/appearance tracking, and key frame selection to achieve robust tracking and accurate reconstructions across a diverse range of objects. Additionally, we present a benchmark covering these challenging object classes, providing high-quality annotations for evaluating both tracking and reconstruction performance. Our approach demonstrates strong capabilities in recovering high-fidelity object meshes, setting a new standard for single-sensor 3D reconstruction in open-world environments.

Practical Insights on Grasp Strategies for Mobile Manipulation in the Wild

Apr 16, 2025

Abstract:Mobile manipulation robots are continuously advancing, with their grasping capabilities rapidly progressing. However, there are still significant gaps preventing state-of-the-art mobile manipulators from widespread real-world deployments, including their ability to reliably grasp items in unstructured environments. To help bridge this gap, we developed SHOPPER, a mobile manipulation robot platform designed to push the boundaries of reliable and generalizable grasp strategies. We develop these grasp strategies and deploy them in a real-world grocery store -- an exceptionally challenging setting chosen for its vast diversity of manipulable items, fixtures, and layouts. In this work, we present our detailed approach to designing general grasp strategies towards picking any item in a real grocery store. Additionally, we provide an in-depth analysis of our latest real-world field test, discussing key findings related to fundamental failure modes over hundreds of distinct pick attempts. Through our detailed analysis, we aim to offer valuable practical insights and identify key grasping challenges, which can guide the robotics community towards pressing open problems in the field.

Demonstrating Mobile Manipulation in the Wild: A Metrics-Driven Approach

Jan 03, 2024

Abstract:We present our general-purpose mobile manipulation system consisting of a custom robot platform and key algorithms spanning perception and planning. To extensively test the system in the wild and benchmark its performance, we choose a grocery shopping scenario in an actual, unmodified grocery store. We derive key performance metrics from detailed robot log data collected during six week-long field tests, spread across 18 months. These objective metrics, gained from complex yet repeatable tests, drive the direction of our research efforts and let us continuously improve our system's performance. We find that thorough end-to-end system-level testing of a complex mobile manipulation system can serve as a reality check for state-of-the-art methods in robotics. This effectively grounds robotics research efforts in real-world needs and challenges, which we deem highly useful for the advancement of the field. To this end, we share our key insights and takeaways to inspire and accelerate similar system-level research projects.

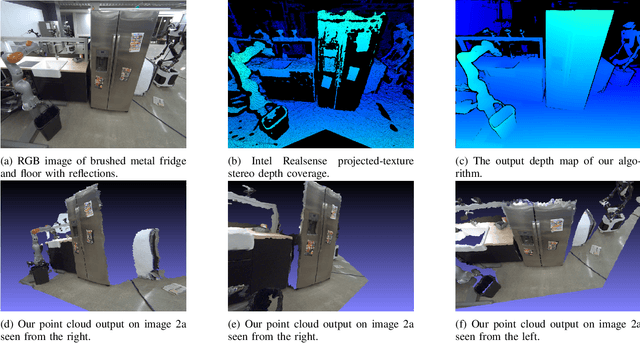

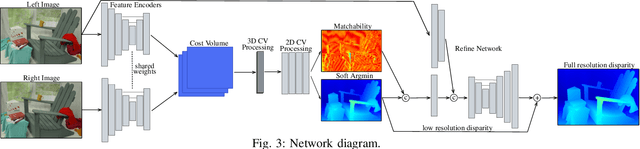

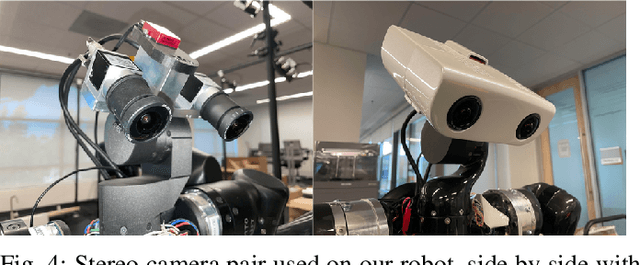

A Learned Stereo Depth System for Robotic Manipulation in Homes

Sep 23, 2021

Abstract:We present a passive stereo depth system that produces dense and accurate point clouds optimized for human environments, including dark, textureless, thin, reflective and specular surfaces and objects, at 2560x2048 resolution, with 384 disparities, in 30 ms. The system consists of an algorithm combining learned stereo matching with engineered filtering, a training and data-mixing methodology, and a sensor hardware design. Our architecture is 15x faster than approaches that perform similarly on the Middlebury and FlyingThings stereo benchmarks. To effectively supervise the training of this model, we combine real data labeled using off-the-shelf depth sensors, as well as a number of different rendered, simulated labeled datasets. We demonstrate the efficacy of our system by presenting a large number of qualitative results in the form of depth maps and point clouds, experiments validating the metric accuracy of our system and comparisons to other sensors on challenging objects and scenes. We also show the competitiveness of our algorithm compared to state-of-the-art learned models using the Middlebury and FlyingThings datasets.

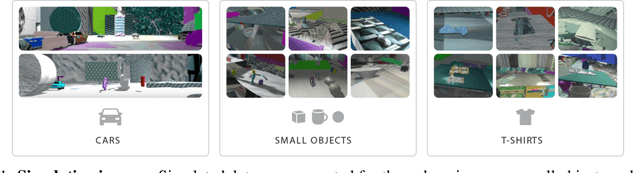

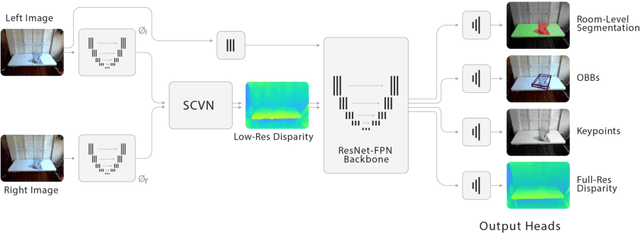

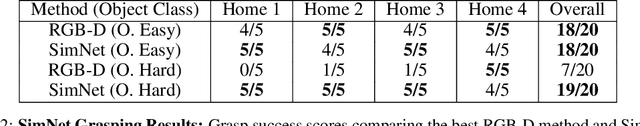

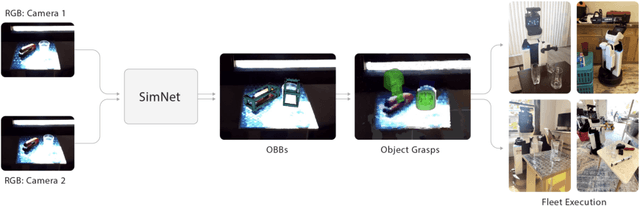

SimNet: Enabling Robust Unknown Object Manipulation from Pure Synthetic Data via Stereo

Jun 30, 2021

Abstract:Robot manipulation of unknown objects in unstructured environments is a challenging problem due to the variety of shapes, materials, arrangements and lighting conditions. Even with large-scale real-world data collection, robust perception and manipulation of transparent and reflective objects across various lighting conditions remain challenging. To address these challenges we propose an approach to performing sim-to-real transfer of robotic perception. The underlying model, SimNet, is trained as a single multi-headed neural network using simulated stereo data as input and simulated object segmentation masks, 3D oriented bounding boxes (OBBs), object keypoints, and disparity as output. A key component of SimNet is the incorporation of a learned stereo sub-network that predicts disparity. SimNet is evaluated on 2D car detection, unknown object detection, and deformable object keypoint detection and significantly outperforms a baseline that uses a structured light RGB-D sensor. By inferring grasp positions using the OBB and keypoint predictions, SimNet can be used to perform end-to-end manipulation of unknown objects in both easy and hard scenarios using our fleet of Toyota HSR robots in four home environments. In unknown object grasping experiments, the predictions from the baseline RGB-D network and SimNet enable successful grasps of most of the easy objects. However, the RGB-D baseline only grasps 35% of the hard (e.g., transparent) objects, while SimNet grasps 95%, suggesting that SimNet can enable robust manipulation of unknown objects, including transparent objects, in unknown environments.
