Sangwoon Kim

Practical Insights on Grasp Strategies for Mobile Manipulation in the Wild

Apr 16, 2025

Abstract:Mobile manipulation robots are continuously advancing, with their grasping capabilities rapidly progressing. However, there are still significant gaps preventing state-of-the-art mobile manipulators from widespread real-world deployments, including their ability to reliably grasp items in unstructured environments. To help bridge this gap, we developed SHOPPER, a mobile manipulation robot platform designed to push the boundaries of reliable and generalizable grasp strategies. We develop these grasp strategies and deploy them in a real-world grocery store -- an exceptionally challenging setting chosen for its vast diversity of manipulable items, fixtures, and layouts. In this work, we present our detailed approach to designing general grasp strategies towards picking any item in a real grocery store. Additionally, we provide an in-depth analysis of our latest real-world field test, discussing key findings related to fundamental failure modes over hundreds of distinct pick attempts. Through our detailed analysis, we aim to offer valuable practical insights and identify key grasping challenges, which can guide the robotics community towards pressing open problems in the field.

TEXterity -- Tactile Extrinsic deXterity: Simultaneous Tactile Estimation and Control for Extrinsic Dexterity

Mar 04, 2024

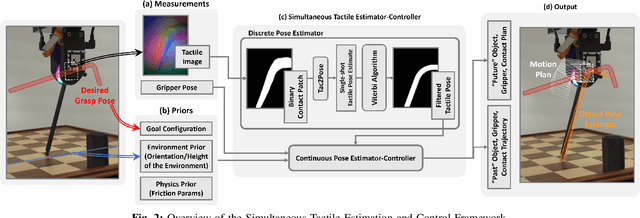

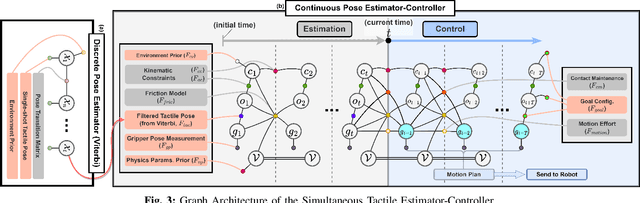

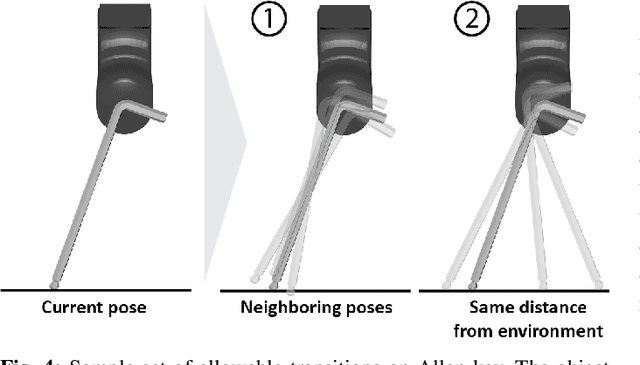

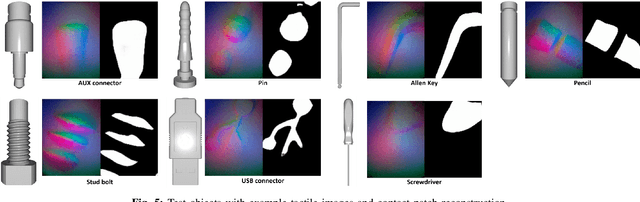

Abstract:We introduce a novel approach that combines tactile estimation and control for in-hand object manipulation. By integrating measurements from robot kinematics and an image-based tactile sensor, our framework estimates and tracks object pose while simultaneously generating motion plans in a receding horizon fashion to control the pose of a grasped object. This approach consists of a discrete pose estimator that tracks the most likely sequence of object poses in a coarsely discretized grid, and a continuous pose estimator-controller to refine the pose estimate and accurately manipulate the pose of the grasped object. Our method is tested on diverse objects and configurations, achieving desired manipulation objectives and outperforming single-shot methods in estimation accuracy. The proposed approach holds potential for tasks requiring precise manipulation and limited intrinsic in-hand dexterity under visual occlusion, laying the foundation for closed-loop behavior in applications such as regrasping, insertion, and tool use. Please see https://sites.google.com/view/texterity for videos of real-world demonstrations.

TEXterity: Tactile Extrinsic deXterity

Jan 22, 2024

Abstract:We introduce a novel approach that combines tactile estimation and control for in-hand object manipulation. By integrating measurements from robot kinematics and an image-based tactile sensor, our framework estimates and tracks object pose while simultaneously generating motion plans to control the pose of a grasped object. This approach consists of a discrete pose estimator that uses the Viterbi decoding algorithm to find the most likely sequence of object poses in a coarsely discretized grid, and a continuous pose estimator-controller to refine the pose estimate and accurately manipulate the pose of the grasped object. Our method is tested on diverse objects and configurations, achieving desired manipulation objectives and outperforming single-shot methods in estimation accuracy. The proposed approach holds potential for tasks requiring precise manipulation in scenarios where visual perception is limited, laying the foundation for closed-loop behavior applications such as assembly and tool use. Please see supplementary videos for real-world demonstration at https://sites.google.com/view/texterity.
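The discrete estimator described above relies on Viterbi decoding over a coarse pose grid. The following is a minimal, generic sketch of that algorithm in Python with NumPy, not the authors' implementation: the three pose bins, the transition matrix, and the per-step tactile likelihoods are all made-up illustrative values, and the function name `viterbi` is an assumption.

```python
import numpy as np

def viterbi(log_prior, log_trans, log_obs):
    """Most likely state sequence for a discrete hidden Markov model.

    log_prior: (S,)   log P(state at t=0)
    log_trans: (S, S) log P(next=j | current=i)
    log_obs:   (T, S) log-likelihood of each measurement under each state
    """
    T, S = log_obs.shape
    score = log_prior + log_obs[0]          # best log-score ending in each state
    back = np.zeros((T, S), dtype=int)      # best predecessor for each state
    for t in range(1, T):
        cand = score[:, None] + log_trans   # cand[i, j]: come from i, land in j
        back[t] = np.argmax(cand, axis=0)
        score = cand[back[t], np.arange(S)] + log_obs[t]
    # Backtrack from the best final state.
    path = [int(np.argmax(score))]
    for t in range(T - 1, 0, -1):
        path.append(int(back[t, path[-1]]))
    return path[::-1]

# Hypothetical example: 3 coarse pose bins, 4 time steps.
prior = np.full(3, 1.0 / 3.0)
trans = np.array([[0.80, 0.15, 0.05],      # poses tend to stay put or move
                  [0.10, 0.80, 0.10],      # to a neighbouring bin
                  [0.05, 0.15, 0.80]])
obs = np.array([[0.90, 0.05, 0.05],        # made-up measurement likelihoods
                [0.80, 0.10, 0.10],
                [0.10, 0.80, 0.10],
                [0.05, 0.15, 0.80]])

path = viterbi(np.log(prior), np.log(trans), np.log(obs))
per_step = [int(i) for i in np.argmax(obs, axis=1)]  # single-shot estimate per step
print(path, per_step)
```

The transition matrix acts as a smoothness prior, so the decoded sequence can differ from the per-step argmax when a single measurement disagrees with the motion model. In the abstract's pipeline, a continuous estimator-controller would then refine this coarse result.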

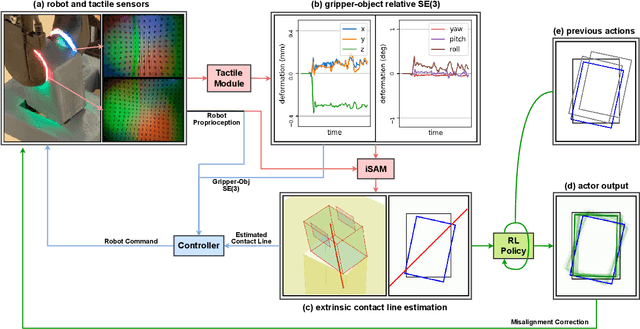

Simultaneous Tactile Estimation and Control of Extrinsic Contact

Mar 06, 2023

Abstract:We propose a method that simultaneously estimates and controls extrinsic contact with tactile feedback. The method enables challenging manipulation tasks that require controlling light forces and accurate motions in contact, such as balancing an unknown object on a thin rod standing upright. A factor graph-based framework fuses a sequence of tactile and kinematic measurements to estimate and control the interaction between gripper-object-environment, including the location and wrench at the extrinsic contact between the grasped object and the environment and the grasp wrench transferred from the gripper to the object. The same framework simultaneously plans the gripper motions that make it possible to estimate the state while satisfying regularizing control objectives to prevent slip, such as minimizing the grasp wrench and minimizing frictional force at the extrinsic contact. We show results with sub-millimeter contact localization error and good slip prevention even on slippery environments, for multiple contact formations (point, line, patch contact) and transitions between them. See supplementary video and results at https://sites.google.com/view/sim-tact.

Active Extrinsic Contact Sensing: Application to General Peg-in-Hole Insertion

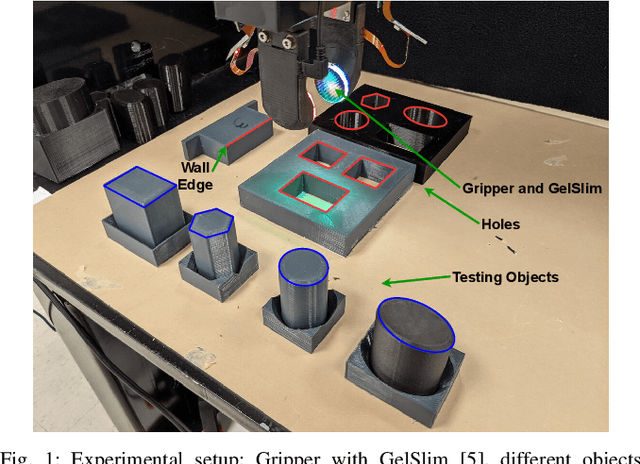

Oct 07, 2021

Abstract:We propose a method that actively estimates the location of contact between a grasped rigid object and its environment, and uses this as input to a peg-in-hole insertion policy. An estimation model and an active tactile feedback controller work collaboratively to get an accurate estimate of the external contacts. The controller helps the estimation model get a better estimate by regulating a consistent contact mode. The better estimate, in turn, makes it easier for the controller to regulate the contact. To utilize this contact estimate, we train an object-agnostic insertion policy that learns to make use of the series of contact estimates to guide the insertion. In contrast with previous works that learn a policy directly from tactile signals, this policy operates in contact configuration space and can therefore be learned directly in simulation. Lastly, we demonstrate and evaluate the active extrinsic contact line estimation and the trained insertion policy together in a real experiment. We show that the proposed method inserts various-shaped test objects with higher success rates and fewer insertion attempts than previous work with end-to-end approaches. See supplementary video and results at https://sites.google.com/view/active-extrinsic-contact.

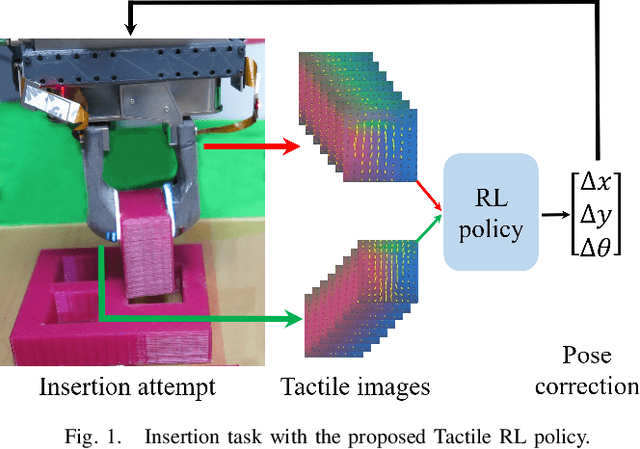

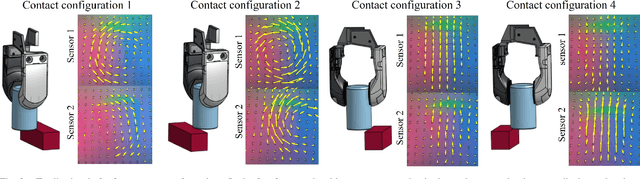

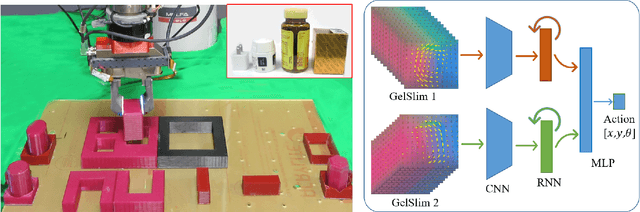

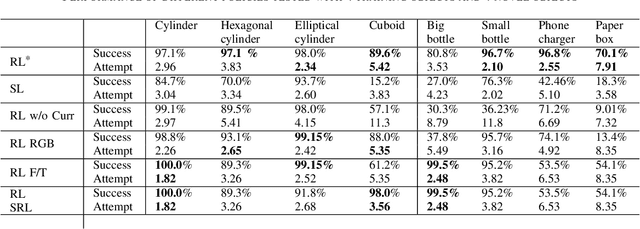

Tactile-RL for Insertion: Generalization to Objects of Unknown Geometry

Apr 02, 2021

Abstract:Object insertion is a classic contact-rich manipulation task. The task remains challenging, especially when considering general objects of unknown geometry, which significantly limits the ability to understand the contact configuration between the object and the environment. We study the problem of aligning the object and environment with a tactile-based feedback insertion policy. The insertion process is modeled as an episodic policy that iterates between insertion attempts followed by pose corrections. We explore different mechanisms to learn such a policy based on Reinforcement Learning. The key contributions of this paper are a demonstration that it is possible to learn a tactile insertion policy that generalizes across different object geometries, and an ablation study of the key design choices for the learning agent: 1) the type of learning scheme: supervised vs. reinforcement learning; 2) the type of learning schedule: unguided vs. curriculum learning; 3) the type of sensing modality: force/torque (F/T) vs. tactile; and 4) the type of tactile representation: tactile RGB vs. tactile flow. We show that the optimal configuration of the learning agent (RL + curriculum + tactile flow) exposed to 4 training objects yields an insertion policy that inserts 4 novel objects with over 85.0% success rate and within 3 to 4 attempts. Comparisons between F/T and tactile sensing show that while an F/T-based policy learns more efficiently, a tactile-based policy provides better generalization.
