Huan Song

A Real-Time Privacy-Preserving Behavior Recognition System via Edge-Cloud Collaboration

Jan 30, 2026

Abstract:As intelligent sensing expands into high-privacy environments such as restrooms and changing rooms, the field faces a critical privacy-security paradox. Traditional RGB surveillance raises significant concerns regarding visual recording and storage, while existing privacy-preserving methods, ranging from physical desensitization to traditional cryptographic or obfuscation techniques, often compromise semantic understanding capabilities or fail to guarantee mathematical irreversibility against reconstruction attacks. To address these challenges, this study presents a novel privacy-preserving perception technology based on the AI Flow theoretical framework and an edge-cloud collaborative architecture. The proposed methodology integrates source desensitization with irreversible feature mapping. Leveraging Information Bottleneck theory, the edge device performs millisecond-level processing to transform raw imagery into abstract feature vectors via non-linear mapping and stochastic noise injection. This process constructs a unidirectional information flow that strips identity-sensitive attributes, rendering the reconstruction of original images impossible. Subsequently, the cloud platform utilizes multimodal family models to perform joint inference solely on these abstract vectors to detect abnormal behaviors. This approach fundamentally severs the path to privacy leakage at the architectural level, achieving a breakthrough from video surveillance to de-identified behavior perception and offering a robust solution for risk management in high-sensitivity public spaces.

Theoretical Foundations of Scaling Law in Familial Models

Dec 29, 2025

Abstract:Neural scaling laws have become foundational for optimizing large language model (LLM) training, yet they typically assume a single dense model output. This limitation effectively overlooks "familial models", a transformative paradigm essential for realizing ubiquitous intelligence across heterogeneous device-edge-cloud hierarchies. Transcending static architectures, familial models integrate early exits with relay-style inference to spawn G deployable sub-models from a single shared backbone. In this work, we theoretically and empirically extend the scaling law to capture this "one-run, many-models" paradigm by introducing Granularity (G) as a fundamental scaling variable alongside model size (N) and training tokens (D). To rigorously quantify this relationship, we propose a unified functional form L(N, D, G) and parameterize it using large-scale empirical runs. Specifically, we employ a rigorous IsoFLOP experimental design to strictly isolate architectural impact from computational scale. Across fixed budgets, we systematically sweep model sizes (N) and granularities (G) while dynamically adjusting tokens (D). This approach effectively decouples the marginal cost of granularity from the benefits of scale, ensuring high-fidelity parameterization of our unified scaling law. Our results reveal that the granularity penalty follows a multiplicative power law with an extremely small exponent. Theoretically, this bridges fixed-compute training with dynamic architectures. Practically, it validates the "train once, deploy many" paradigm, demonstrating that deployment flexibility is achievable without compromising the compute-optimality of dense baselines.

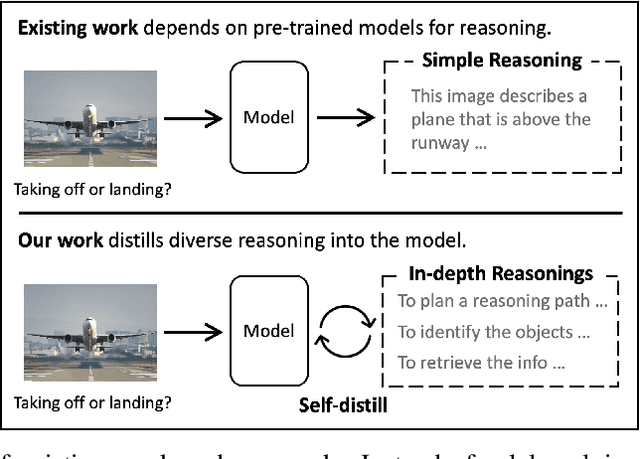

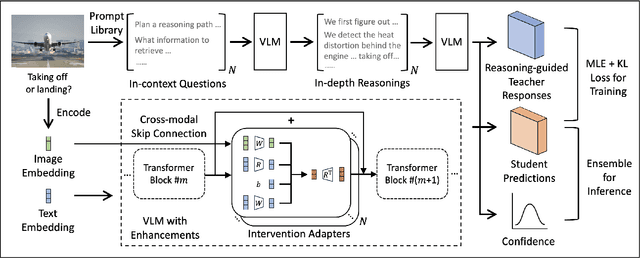

Enhancing Multi-hop Reasoning in Vision-Language Models via Self-Distillation with Multi-Prompt Ensembling

Mar 03, 2025

Abstract:Multi-modal large language models have seen rapid advancement alongside large language models. However, while language models can effectively leverage chain-of-thought prompting for zero or few-shot learning, similar prompting strategies are less effective for multi-modal LLMs due to modality gaps and task complexity. To address this challenge, we explore two prompting approaches: a dual-query method that separates multi-modal input analysis and answer generation into two prompting steps, and an ensemble prompting method that combines multiple prompt variations to arrive at the final answer. Although these approaches enhance the model's reasoning capabilities without fine-tuning, they introduce significant inference overhead. Therefore, building on top of these two prompting techniques, we propose a self-distillation framework such that the model can improve itself without any annotated data. Our self-distillation framework learns representation intervention modules from the reasoning traces collected from ensembled dual-query prompts, in the form of hidden representations. The lightweight intervention modules operate in parallel with the frozen original model, which makes it possible to maintain computational efficiency while significantly improving model capability. We evaluate our method on five widely-used VQA benchmarks, demonstrating its effectiveness in performing multi-hop reasoning for complex tasks.

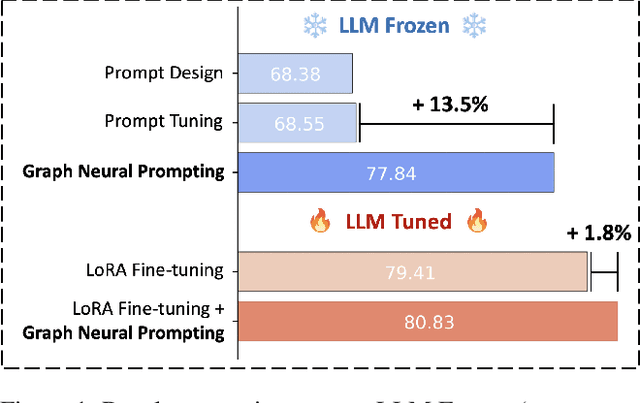

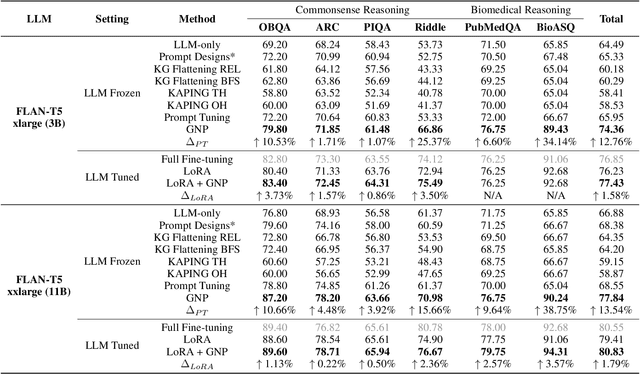

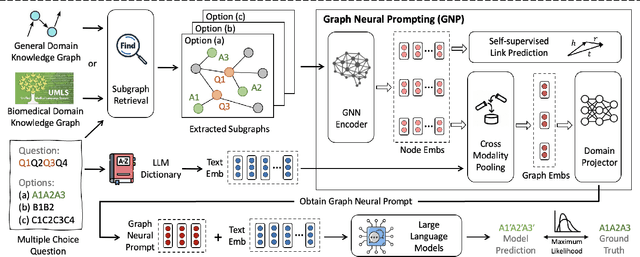

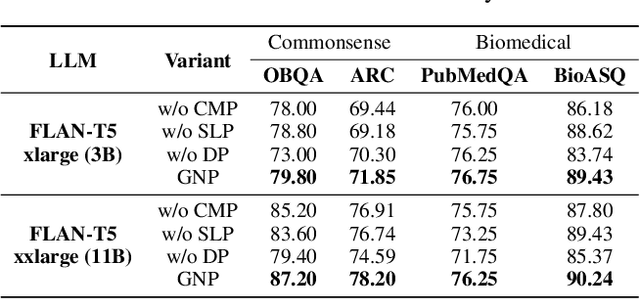

Graph Neural Prompting with Large Language Models

Sep 27, 2023

Abstract:Large Language Models (LLMs) have shown remarkable generalization capability with exceptional performance in various language modeling tasks. However, they still exhibit inherent limitations in precisely capturing and returning grounded knowledge. While existing work has explored utilizing knowledge graphs to enhance language modeling via joint training and customized model architectures, applying this to LLMs is problematic owing to their large number of parameters and high computational cost. In addition, how to leverage the pre-trained LLMs and avoid training a customized model from scratch remains an open question. In this work, we propose Graph Neural Prompting (GNP), a novel plug-and-play method to assist pre-trained LLMs in learning beneficial knowledge from KGs. GNP encompasses various designs, including a standard graph neural network encoder, a cross-modality pooling module, a domain projector, and a self-supervised link prediction objective. Extensive experiments on multiple datasets demonstrate the superiority of GNP on both commonsense and biomedical reasoning tasks across different LLM sizes and settings.

MedGPTEval: A Dataset and Benchmark to Evaluate Responses of Large Language Models in Medicine

May 12, 2023

Abstract:METHODS: First, a set of evaluation criteria is designed based on a comprehensive literature review. Second, the candidate criteria are refined using a Delphi method by five experts in medicine and engineering. Third, three clinical experts design a set of medical datasets to interact with LLMs. Finally, benchmarking experiments are conducted on the datasets. The responses generated by chatbots based on LLMs are recorded for blind evaluations by five licensed medical experts. RESULTS: The obtained evaluation criteria cover medical professional capabilities, social comprehensive capabilities, contextual capabilities, and computational robustness, with sixteen detailed indicators. The medical datasets include twenty-seven medical dialogues and seven case reports in Chinese. Three chatbots are evaluated: ChatGPT by OpenAI, ERNIE Bot by Baidu Inc., and Doctor PuJiang (Dr. PJ) by Shanghai Artificial Intelligence Laboratory. Experimental results show that Dr. PJ outperforms ChatGPT and ERNIE Bot in both multiple-turn medical dialogue and case report scenarios.

Interactive Visual Pattern Search on Graph Data via Graph Representation Learning

Feb 18, 2022

Abstract:Graphs are a ubiquitous data structure to model processes and relations in a wide range of domains. Examples include control-flow graphs in programs and semantic scene graphs in images. Identifying subgraph patterns in graphs is an important approach to understanding their structural properties. We propose a visual analytics system, GraphQ, to support human-in-the-loop, example-based subgraph pattern search in a database containing many individual graphs. To support fast, interactive queries, we use graph neural networks (GNNs) to encode a graph as a fixed-length latent vector representation, and perform subgraph matching in the latent space. Due to the complexity of the problem, it is still difficult to obtain the accurate one-to-one node correspondences in the matching results that are crucial for visualization and interpretation. We therefore propose a novel GNN for node alignment, called NeuroAlign, to facilitate easy validation and interpretation of the query results. GraphQ provides a visual query interface with a query editor and a multi-scale visualization of the results, as well as a user feedback mechanism for refining the results with additional constraints. We demonstrate GraphQ through two example usage scenarios: analyzing reusable subroutines in program workflows and semantic scene graph search in images. Quantitative experiments show that NeuroAlign achieves a 19-29% improvement in node-alignment accuracy compared to a baseline GNN and provides up to a 100x speedup compared to combinatorial algorithms. Our qualitative study with domain experts confirms the effectiveness for both usage scenarios.

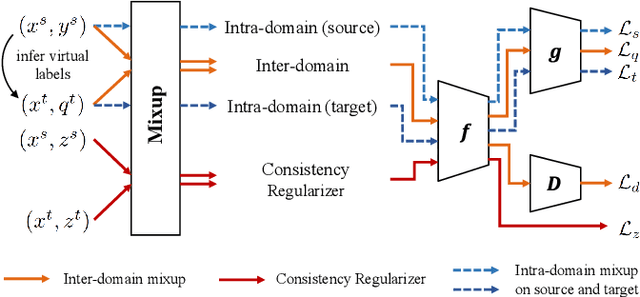

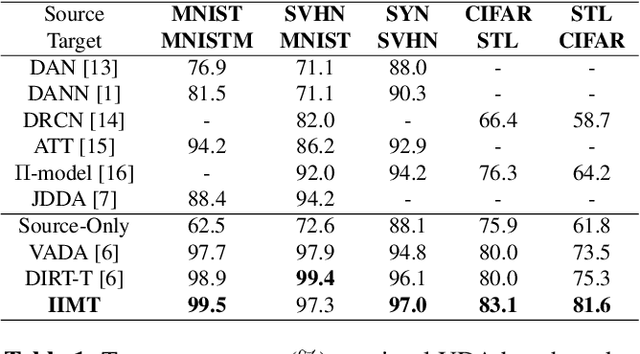

Improve Unsupervised Domain Adaptation with Mixup Training

Jan 03, 2020

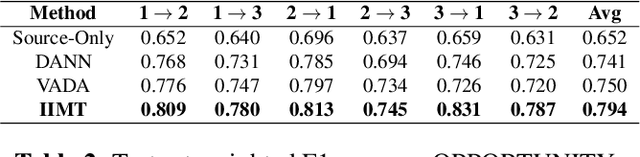

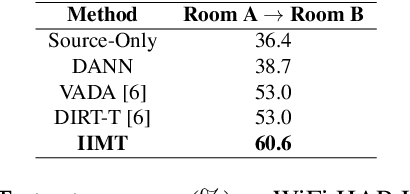

Abstract:Unsupervised domain adaptation studies the problem of utilizing a relevant source domain with abundant labels to build a predictive model for an unannotated target domain. Recent work observes that the popular adversarial approach of learning domain-invariant features is insufficient to achieve desirable target domain performance and thus introduces additional training constraints, e.g., the cluster assumption. However, these approaches impose the constraints on source and target domains individually, ignoring the important interplay between them. In this work, we propose to enforce training constraints across domains using a mixup formulation to directly address the generalization performance for target data. In order to tackle potentially huge domain discrepancy, we further propose a feature-level consistency regularizer to facilitate the inter-domain constraint. When adding intra-domain mixup and domain adversarial learning, our general framework significantly improves state-of-the-art performance on several important tasks from both image classification and human activity recognition.
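
For readers unfamiliar with mixup, the following is a minimal sketch of the generic mixup operation applied across two domains. It is not the paper's implementation: the function name `mixup`, the `alpha` default, and the use of soft target predictions as stand-in labels are all assumptions made for illustration, and the feature-level consistency regularizer and adversarial terms mentioned in the abstract are not shown.

```python
import numpy as np


def mixup(x_a, y_a, x_b, y_b, alpha=0.2, rng=None):
    """Convexly combine two batches and their (soft) labels.

    Hypothetical helper: x_a/y_a could be a labeled source batch and
    x_b/y_b a target batch with model-predicted soft labels (an assumption).
    """
    rng = np.random.default_rng() if rng is None else rng
    # Standard mixup draws the mixing ratio from a Beta(alpha, alpha) distribution.
    lam = rng.beta(alpha, alpha)
    x_mix = lam * x_a + (1.0 - lam) * x_b
    y_mix = lam * y_a + (1.0 - lam) * y_b
    return x_mix, y_mix, lam


# Toy batches: 4 samples, 3 features, 2 classes.
x_src = np.ones((4, 3))
y_src = np.tile([1.0, 0.0], (4, 1))   # one-hot source labels
x_tgt = np.zeros((4, 3))
y_tgt = np.tile([0.5, 0.5], (4, 1))   # assumed soft predictions on target data

x_mix, y_mix, lam = mixup(x_src, y_src, x_tgt, y_tgt, rng=np.random.default_rng(0))
```

Because both label batches are probability vectors and the combination is convex, each row of `y_mix` still sums to one, so it can be used directly as a soft training target.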

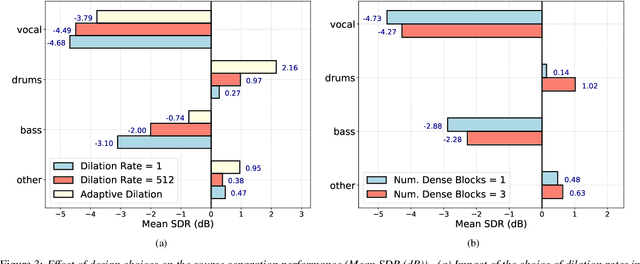

Audio Source Separation via Multi-Scale Learning with Dilated Dense U-Nets

Apr 08, 2019

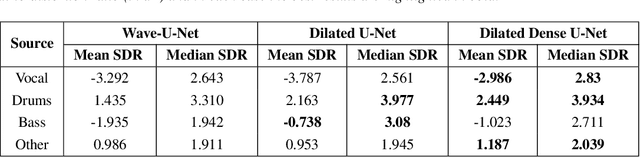

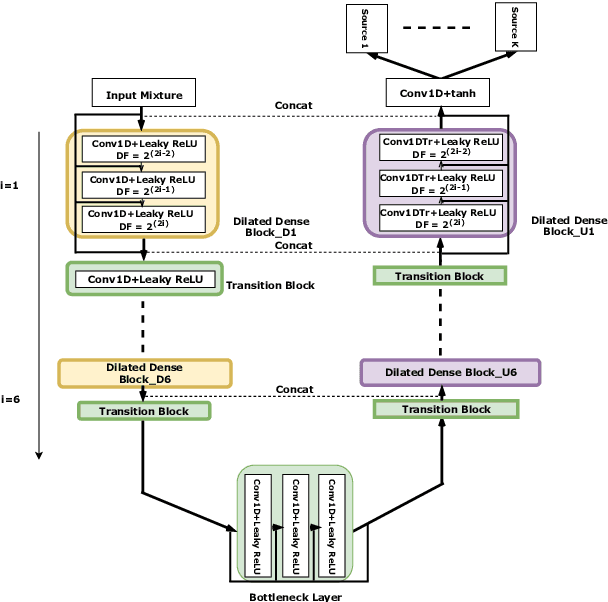

Abstract:Modern audio source separation techniques rely on optimizing sequence model architectures, such as 1D-CNNs, on mixture recordings to generalize well to unseen mixtures. Specifically, recent focus is on time-domain architectures such as Wave-U-Net, which exploit temporal context by extracting multi-scale features. However, the optimality of the feature extraction process in these architectures has not been well investigated. In this paper, we examine and recommend critical architectural changes that forge an optimal multi-scale feature extraction process. To this end, we replace regular 1-D convolutions with adaptive dilated convolutions that have an innate capability of capturing increased context by using large temporal receptive fields. We also investigate the impact of dense connections, which encourage feature reuse and better gradient flow, on the extraction process. The dense connections between the downsampling and upsampling paths of a U-Net architecture capture multi-resolution information, leading to improved temporal modelling. We evaluate the proposed approaches on the MUSDB test dataset. In addition to providing improved performance over the state of the art, we also provide insights on the impact of different architectural choices on complex data-driven solutions for source separation.
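
To illustrate why dilation enlarges the temporal receptive field, here is a small sketch with fixed dilation rates. The abstract describes adaptive dilated convolutions, which this sketch does not attempt to reproduce; the helper names `dilated_conv1d` and `receptive_field` are hypothetical, and stride 1 with no padding is assumed throughout.

```python
import numpy as np


def dilated_conv1d(signal, kernel, dilation=1):
    """Valid-mode 1-D cross-correlation with gaps of `dilation` between taps."""
    signal = np.asarray(signal, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    span = (len(kernel) - 1) * dilation      # samples covered beyond the first tap
    out_len = len(signal) - span
    if out_len <= 0:
        raise ValueError("signal is shorter than the dilated kernel")
    out = np.zeros(out_len)
    for j, w in enumerate(kernel):
        # Tap j reads the input shifted by j * dilation samples.
        out += w * signal[j * dilation : j * dilation + out_len]
    return out


def receptive_field(kernel_size, dilations):
    """Receptive field of stacked stride-1 layers sharing one kernel size."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


plain = receptive_field(3, [1, 1, 1, 1])     # four ordinary layers
dilated = receptive_field(3, [1, 2, 4, 8])   # four layers, doubling dilation
```

With kernel size 3, four ordinary layers see 9 input samples while four layers with dilations 1, 2, 4, 8 see 31, at the same parameter count per layer.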

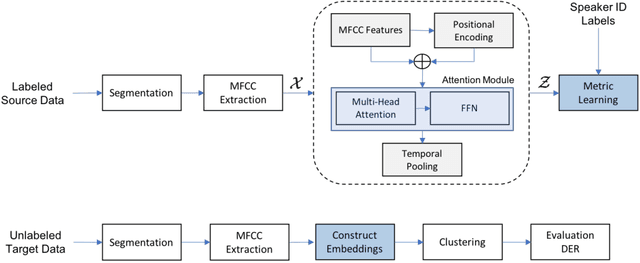

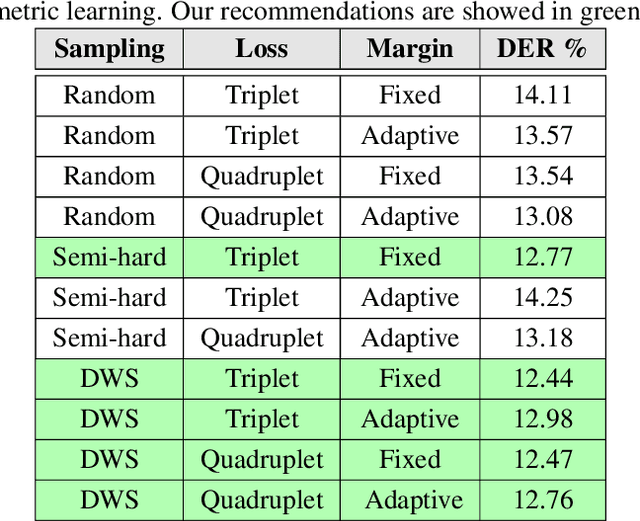

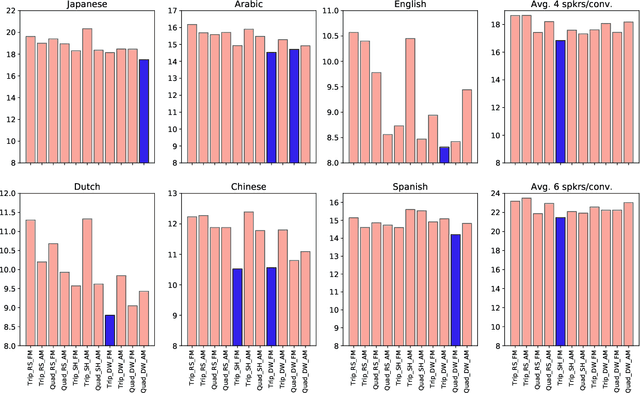

Designing an Effective Metric Learning Pipeline for Speaker Diarization

Nov 01, 2018

Abstract:State-of-the-art speaker diarization systems utilize knowledge from external data, in the form of a pre-trained distance metric, to effectively determine relative speaker identities in unseen data. However, much of the recent focus has been on choosing the appropriate feature extractor, ranging from pre-trained i-vectors to representations learned via different sequence modeling architectures (e.g., 1D-CNNs, LSTMs, attention models), while adopting off-the-shelf metric learning solutions. In this paper, we argue that, regardless of the feature extractor, it is crucial to carefully design a metric learning pipeline, namely the loss function, the sampling strategy, and the discriminative margin parameter, for building robust diarization systems. Furthermore, we propose to adopt a fine-grained validation process to obtain a comprehensive evaluation of the generalization power of metric learning pipelines. To this end, we measure diarization performance across speakers of different languages and across variations in the number of speakers in a recording. Using empirical studies, we provide interesting insights into the effectiveness of different design choices and make recommendations.

Attention Models with Random Features for Multi-layered Graph Embeddings

Oct 02, 2018

Abstract:Modern data analysis pipelines are becoming increasingly complex due to the presence of multi-view information sources. While graphs are effective in modeling complex relationships, in many scenarios a single graph is rarely sufficient to succinctly represent all interactions, and hence multi-layered graphs have become popular. Though this leads to richer representations, extending solutions from the single-graph case is not straightforward. Consequently, there is a strong need for novel solutions to solve classical problems, such as node classification, in the multi-layered case. In this paper, we consider the problem of semi-supervised learning with multi-layered graphs. Though deep network embeddings, e.g. DeepWalk, are widely adopted for community discovery, we argue that feature learning with random node attributes, using graph neural networks, can be more effective. To this end, we propose to use attention models for effective feature learning, and develop two novel architectures, GrAMME-SG and GrAMME-Fusion, that exploit the inter-layer dependencies for building multi-layered graph embeddings. Using empirical studies on several benchmark datasets, we evaluate the proposed approaches and demonstrate significant performance improvements in comparison to state-of-the-art network embedding strategies. The results also show that using simple random features is an effective choice, even in cases where explicit node attributes are not available.
