Andreas Spanias

SAR-RAG: ATR Visual Question Answering by Semantic Search, Retrieval, and MLLM Generation

Feb 04, 2026

Abstract:We present a visual-context image retrieval-augmented generation (ImageRAG) assisted AI agent for automatic target recognition (ATR) in synthetic aperture radar (SAR) imagery. SAR is a remote sensing method used in defense and security applications to detect and monitor the positions of military vehicles, which may appear indistinguishable in images. Researchers have extensively studied SAR ATR to improve the differentiation and identification of vehicle types, characteristics, and measurements. Test examples can be compared with known vehicle target types to improve recognition tasks. New methods enhance the capabilities of neural networks, transformer attention, and multimodal large language models. An agentic AI method may be developed to utilize a defined set of tools, such as searching through a library of similar examples. Our proposed method, SAR Retrieval-Augmented Generation (SAR-RAG), combines a multimodal large language model (MLLM) with a vector database of semantic embeddings to support contextual search for image exemplars with known qualities. By recovering past image examples with known true target types, our SAR-RAG system can compare similar vehicle categories, achieving improved ATR prediction accuracy. We evaluate this through search and retrieval metrics, categorical classification accuracy, and numeric regression of vehicle dimensions. These metrics all show improvements when SAR-RAG is added to an MLLM baseline method as an attached ATR memory bank.
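
The retrieval step described in this abstract can be illustrated with a minimal sketch: embeddings of labeled exemplars sit in a memory bank, a query embedding is compared against them by cosine similarity, and the top matches are returned as context. The embeddings, the labels (`vehicle_a`, etc.), and the function names below are hypothetical, and the majority vote stands in for the MLLM generation step, which is not modeled here.

```python
import math
from collections import Counter


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_exemplars(query, memory_bank, k=3):
    """Return the k (similarity, label) pairs most similar to the query."""
    scored = [(cosine_similarity(query, emb), label) for emb, label in memory_bank]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def majority_label(exemplars):
    """Most frequent label among retrieved exemplars (a stand-in for the MLLM)."""
    votes = Counter(label for _, label in exemplars)
    return votes.most_common(1)[0][0]


# Toy memory bank: (embedding, known target type). All values are made up.
memory_bank = [
    ([0.9, 0.1, 0.0], "vehicle_a"),
    ([0.8, 0.2, 0.1], "vehicle_a"),
    ([0.1, 0.9, 0.1], "vehicle_b"),
    ([0.0, 0.2, 0.9], "vehicle_c"),
]
query = [0.85, 0.15, 0.05]

top = retrieve_exemplars(query, memory_bank, k=3)
context_hint = majority_label(top)
```

In a fuller pipeline the retrieved exemplars and their known labels would be placed in the MLLM prompt alongside the query image rather than reduced to a vote.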

Infrared Computer Vision for Utility-Scale Photovoltaic Array Inspection

Jun 29, 2024

Abstract:Utility-scale solar arrays require specialized inspection methods for detecting faulty panels. Photovoltaic (PV) panel faults caused by weather, ground leakage, circuit issues, temperature, environment, age, and other damage can take many forms but often symptomatically exhibit temperature differences. Included is a mini survey to review these common faults and PV array fault detection approaches. Among these, infrared thermography cameras are a powerful tool for improving solar panel inspection in the field. These can be combined with other technologies, including image processing and machine learning. This position paper examines several computer vision algorithms that automate thermal anomaly detection in infrared imagery. We demonstrate our infrared thermography data collection approach, the PV thermal imagery benchmark dataset, and the measured performance of image processing transformations, including the Hough Transform for PV segmentation. The results of this implementation are presented with a discussion of future work.
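
As an illustration of the Hough Transform mentioned in this abstract, the sketch below votes edge pixels into a (rho, theta) accumulator and reads off the strongest straight line, which is the kind of primitive that could help locate panel borders. It is a plain textbook Hough line transform over a synthetic edge, not the paper's segmentation pipeline; the image size and edge points are assumptions.

```python
import math


def hough_lines(edge_points, width, height, n_theta=180):
    """Vote each edge pixel into a (rho, theta) accumulator."""
    diag = int(math.ceil(math.hypot(width, height)))
    # Rows are rho values shifted by +diag so that negative rho fits.
    acc = [[0] * n_theta for _ in range(2 * diag + 1)]
    for x, y in edge_points:
        for t in range(n_theta):
            theta = math.pi * t / n_theta
            rho = int(round(x * math.cos(theta) + y * math.sin(theta)))
            acc[rho + diag][t] += 1
    return acc, diag


def strongest_line(acc, diag, n_theta=180):
    """Return (votes, rho, theta in degrees) of the best-supported line."""
    votes, r, t = max(
        (acc[r][t], r, t) for r in range(len(acc)) for t in range(n_theta)
    )
    return votes, r - diag, 180.0 * t / n_theta


# Synthetic horizontal edge at y = 5 in a hypothetical 64 x 16 image.
edge_points = [(x, 5) for x in range(60)]
acc, diag = hough_lines(edge_points, width=64, height=16)
votes, rho, theta_deg = strongest_line(acc, diag)
```

Here a horizontal edge appears as a peak at theta = 90 degrees with rho equal to its row index.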

An L2-Normalized Spatial Attention Network For Accurate And Fast Classification Of Brain Tumors In 2D T1-Weighted CE-MRI Images

Aug 01, 2023

Abstract:We propose an accurate and fast network for classifying brain tumors in MRI images that outperforms all lightweight methods investigated in terms of accuracy. We test our model on a challenging 2D T1-weighted CE-MRI dataset containing three types of brain tumors: Meningioma, Glioma and Pituitary. We introduce an l2-normalized spatial attention mechanism that acts as a regularizer against overfitting during training. We compare our results against the state-of-the-art on this dataset and show that by integrating l2-normalized spatial attention into a baseline network we achieve a performance gain of 1.79 percentage points. Even better accuracy can be attained by combining our model in an ensemble with the pretrained VGG16 at the expense of execution speed. Our code is publicly available at https://github.com/juliadietlmeier/MRI_image_classification

Towards Live 3D Reconstruction from Wearable Video: An Evaluation of V-SLAM, NeRF, and Videogrammetry Techniques

Nov 21, 2022

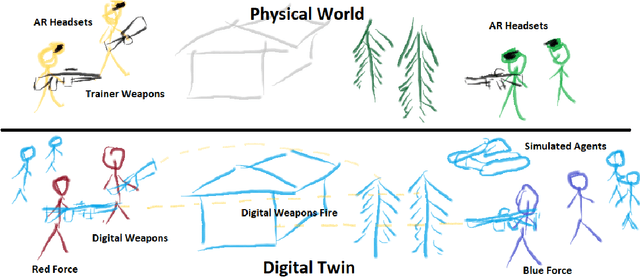

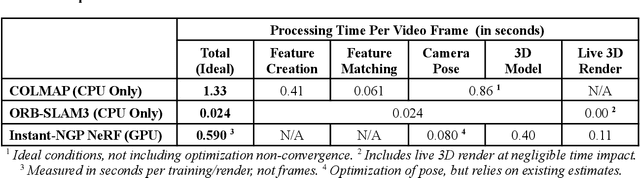

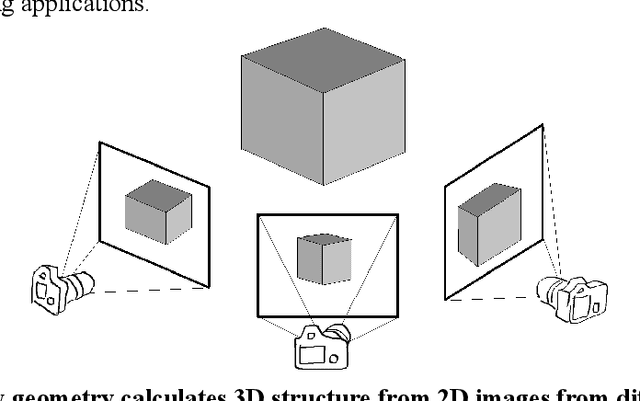

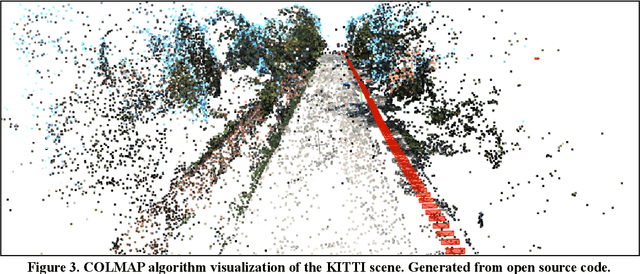

Abstract:Mixed reality (MR) is a key technology which promises to change the future of warfare. An MR hybrid of physical outdoor environments and virtual military training will enable engagements with long distance enemies, both real and simulated. To enable this technology, a large-scale 3D model of a physical environment must be maintained based on live sensor observations. 3D reconstruction algorithms should utilize the low cost and pervasiveness of video camera sensors, from both overhead and soldier-level perspectives. Mapping speed and 3D quality can be balanced to enable live MR training in dynamic environments. Given these requirements, we survey several 3D reconstruction algorithms for large-scale mapping for military applications given only live video. We measure 3D reconstruction performance from common structure from motion, visual-SLAM, and photogrammetry techniques. This includes the open source algorithms COLMAP, ORB-SLAM3, and NeRF using Instant-NGP. We utilize the autonomous driving academic benchmark KITTI, which includes both dashboard camera video and lidar produced 3D ground truth. With the KITTI data, our primary contribution is a quantitative evaluation of 3D reconstruction computational speed when considering live video.
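
One simple way to express computational speed relative to live video is the ratio of processing time to capture time. The sketch below uses that ratio, here called a real-time factor; the name, the threshold of 1.0, and the example numbers are assumptions for illustration and are not taken from the paper.

```python
def real_time_factor(num_frames, capture_fps, processing_seconds):
    """Processing time divided by the duration of the captured video."""
    capture_seconds = num_frames / capture_fps
    return processing_seconds / capture_seconds


def keeps_up_with_live_video(num_frames, capture_fps, processing_seconds):
    """True when reconstruction finishes no later than the video plays out."""
    return real_time_factor(num_frames, capture_fps, processing_seconds) <= 1.0


# Hypothetical run: 1000 frames captured at 10 frames per second (100 s of video).
slow = real_time_factor(1000, 10.0, 250.0)          # takes 2.5x the video length
fast_enough = keeps_up_with_live_video(1000, 10.0, 80.0)
```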

Revisiting Inlier and Outlier Specification for Improved Out-of-Distribution Detection

Jul 12, 2022

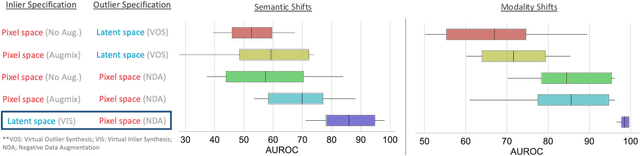

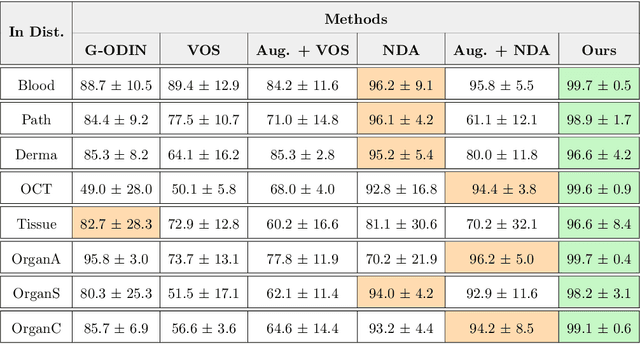

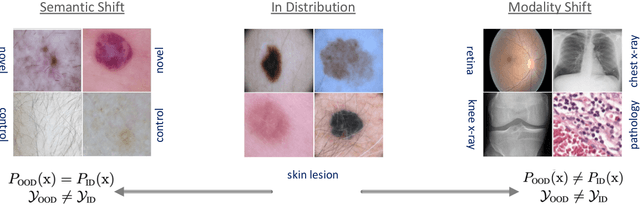

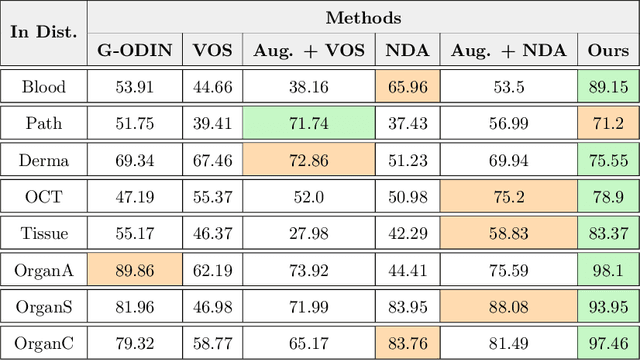

Abstract:Accurately detecting out-of-distribution (OOD) data with varying levels of semantic and covariate shifts with respect to the in-distribution (ID) data is critical for deployment of safe and reliable models. This is particularly the case when dealing with highly consequential applications (e.g., medical imaging and self-driving cars). The goal is to design a detector that can accept meaningful variations of the ID data, while also rejecting examples from OOD regimes. In practice, this dual objective can be realized by enforcing consistency using an appropriate scoring function (e.g., energy) and calibrating the detector to reject a curated set of OOD data (referred to as outlier exposure, or OE for short). While OE methods are widely adopted, assembling representative OOD datasets is both costly and challenging due to the unpredictability of real-world scenarios, hence the recent trend of designing OE-free detectors. In this paper, we make a surprising finding that controlled generalization to ID variations and exposure to diverse (synthetic) outlier examples are essential to simultaneously improving semantic and modality shift detection. In contrast to existing methods, our approach samples inliers in the latent space, and constructs outlier examples via negative data augmentation. Through a rigorous empirical study on medical imaging benchmarks (MedMNIST, ISIC2019 and NCT), we demonstrate significant performance gains ($15\% - 35\%$ in AUROC) over existing OE-free, OOD detection approaches under both semantic and modality shifts.
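
The energy scoring function mentioned in this abstract is commonly defined as the negative temperature-scaled log-sum-exp of a classifier's logits, with higher energy suggesting an OOD input. The sketch below implements that standard definition; the threshold and the example logits are hypothetical, and the paper's latent-space inlier sampling and negative data augmentation are not modeled.

```python
import math


def energy_score(logits, temperature=1.0):
    """E(x) = -T * log(sum_i exp(logit_i / T)), computed stably."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scaled))
    return -temperature * log_sum_exp


def is_ood(logits, threshold, temperature=1.0):
    """Flag an input as OOD when its energy exceeds the threshold."""
    return energy_score(logits, temperature) > threshold


# Hypothetical logits: one confident prediction, one flat (uncertain) prediction.
confident = [10.0, 0.0, 0.0]
uncertain = [0.0, 0.0, 0.0]
threshold = -5.0  # assumed value; in practice it is calibrated on held-out data
```

With these values the confident input has energy close to -10 and is accepted, while the flat input has energy of -log 3 and is rejected.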

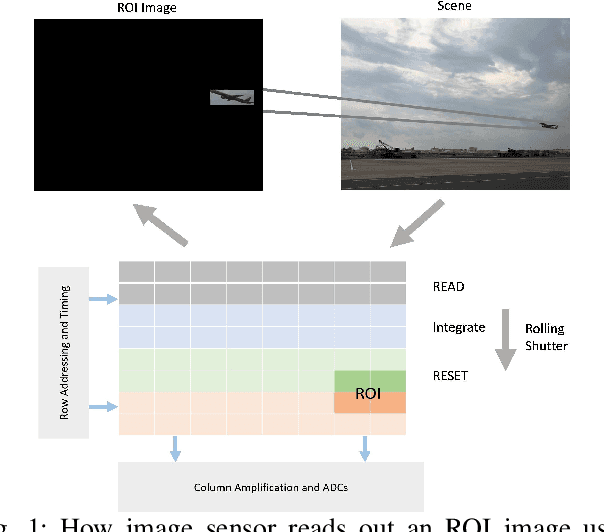

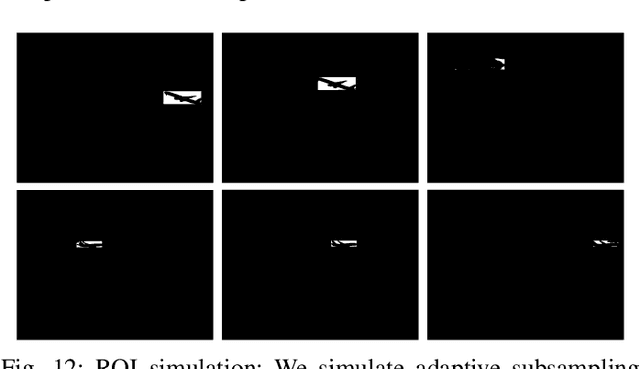

Adaptive Subsampling for ROI-based Visual Tracking: Algorithms and FPGA Implementation

Jan 17, 2022

Abstract:There is tremendous scope for improving the energy efficiency of embedded vision systems by incorporating programmable region-of-interest (ROI) readout in the image sensor design. In this work, we study how ROI programmability can be leveraged for tracking applications by anticipating where the ROI will be located in future frames and switching pixels off outside of this region. We refer to this process of ROI prediction and corresponding sensor configuration as adaptive subsampling. Our adaptive subsampling algorithms comprise an object detector and an ROI predictor (Kalman filter) which operate in conjunction to optimize the energy efficiency of the vision pipeline with the end task being object tracking. To further facilitate the implementation of our adaptive algorithms in real life, we select a candidate algorithm and map it onto an FPGA. Leveraging Xilinx Vitis AI tools, we designed and accelerated a YOLO object detector-based adaptive subsampling algorithm. In order to further improve the algorithm post-deployment, we evaluated several competing baselines on the OTB100 and LaSOT datasets. We found that coupling the ECO tracker with the Kalman filter achieves competitive AUC scores of 0.4568 and 0.3471 on the OTB100 and LaSOT datasets, respectively. Further, the power efficiency of this algorithm is on par with, and in a couple of instances superior to, the other baselines. The ECO-based algorithm incurs a power consumption of approximately 4 W averaged across both datasets, while the YOLO-based approach requires approximately 6 W (as per our power consumption model). In terms of accuracy-latency tradeoff, the ECO-based algorithm provides near-real-time performance (19.23 FPS) while managing to attain competitive tracking precision.
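
The ROI predictor idea can be sketched with a constant-velocity Kalman filter that tracks one coordinate of the ROI center and predicts where it will be in the next frame. The motion model, noise variances, detections, and ROI margin below are all assumptions made for illustration; the paper's actual filter design and sensor configuration are not reproduced.

```python
class ConstantVelocityKalman1D:
    """Tracks one ROI coordinate (for example the box center along x), in pixels."""

    def __init__(self, position, velocity=0.0, process_var=0.01, meas_var=1.0):
        self.p = float(position)             # position estimate
        self.v = float(velocity)             # velocity estimate
        self.P = [[1.0, 0.0], [0.0, 1.0]]    # state covariance
        self.q = process_var                 # process noise (assumed diagonal)
        self.r = meas_var                    # measurement noise

    def predict(self, dt=1.0):
        """Propagate state and covariance one step: P <- F P F^T + Q."""
        self.p += self.v * dt
        a, b = self.P[0]
        c, d = self.P[1]
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
            [c + dt * d, d + self.q],
        ]
        return self.p

    def update(self, measured_position):
        """Correct the state with a position measurement from the detector."""
        innovation = measured_position - self.p
        s = self.P[0][0] + self.r
        k0 = self.P[0][0] / s
        k1 = self.P[1][0] / s
        self.p += k0 * innovation
        self.v += k1 * innovation
        p00, p01 = self.P[0]
        p10, p11 = self.P[1]
        self.P = [
            [(1.0 - k0) * p00, (1.0 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]


# Hypothetical detections: a target moving 2 pixels per frame.
detections = [2.0 * t for t in range(20)]
kf = ConstantVelocityKalman1D(position=detections[0])
for z in detections[1:]:
    kf.predict()
    kf.update(z)

next_center = kf.predict()       # where to place the ROI in the next frame
half_width, margin = 16, 4       # assumed ROI size and safety margin
roi = (next_center - half_width - margin, next_center + half_width + margin)
```

Pixels outside `roi` would be the ones a programmable sensor could switch off for the next frame; a 2D tracker would run one such filter per axis or use a joint state.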

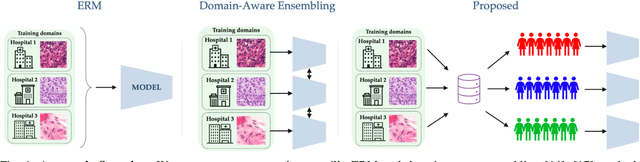

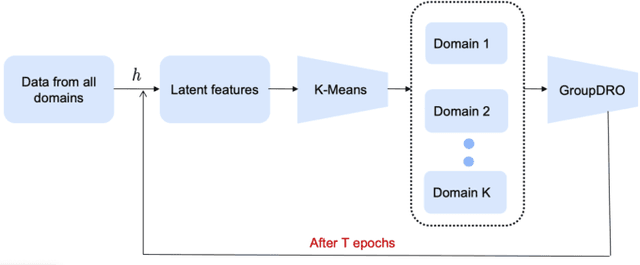

Improving Multi-Domain Generalization through Domain Re-labeling

Dec 17, 2021

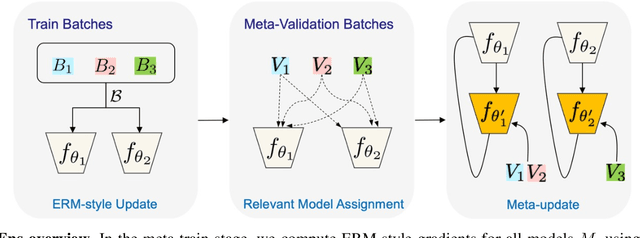

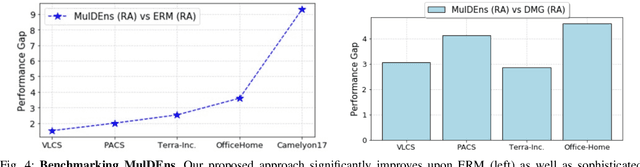

Abstract:Domain generalization (DG) methods aim to develop models that generalize to settings where the test distribution is different from the training data. In this paper, we focus on the challenging problem of multi-source zero-shot DG, where labeled training data from multiple source domains is available but with no access to data from the target domain. Though this problem has become an important topic of research, surprisingly, the simple solution of pooling all source data together and training a single classifier is highly competitive on standard benchmarks. More importantly, even sophisticated approaches that explicitly optimize for invariance across different domains do not necessarily provide non-trivial gains over ERM. In this paper, for the first time, we study the important link between pre-specified domain labels and the generalization performance. Using a motivating case-study and a new variant of a distributional robust optimization algorithm, GroupDRO++, we first demonstrate how inferring custom domain groups can lead to consistent improvements over the original domain labels that come with the dataset. Subsequently, we introduce a general approach for multi-domain generalization, MulDEns, that uses an ERM-based deep ensembling backbone and performs implicit domain re-labeling through a meta-optimization algorithm. Using empirical studies on multiple standard benchmarks, we show that MulDEns does not require tailoring the augmentation strategy or the training process specific to a dataset, consistently outperforms ERM by significant margins, and produces state-of-the-art generalization performance, even when compared to existing methods that exploit the domain labels.
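
For readers unfamiliar with distributionally robust optimization over groups, the sketch below shows the core of standard GroupDRO: group weights are multiplied by the exponential of each group's loss and renormalized, so the training objective shifts toward the worst-performing group. This is the baseline idea only, not GroupDRO++ or MulDEns; the losses and step size are hypothetical.

```python
import math


def update_group_weights(weights, group_losses, step_size=0.1):
    """Exponentiated-gradient step: upweight groups with higher loss."""
    raw = [w * math.exp(step_size * loss) for w, loss in zip(weights, group_losses)]
    total = sum(raw)
    return [r / total for r in raw]


def robust_loss(weights, group_losses):
    """Weighted objective that approaches the worst-group loss over time."""
    return sum(w * loss for w, loss in zip(weights, group_losses))


# Hypothetical per-domain losses for three domain groups.
group_losses = [0.2, 1.5, 0.4]
weights = [1.0 / 3.0] * 3
erm_loss = sum(group_losses) / len(group_losses)  # pooled average, as in ERM

for _ in range(50):
    weights = update_group_weights(weights, group_losses)

dro_loss = robust_loss(weights, group_losses)
```

With the losses held fixed, the weights concentrate on the second group, so `dro_loss` moves from the pooled average toward the worst-group loss of 1.5. In real training the model parameters are updated between weight steps, which changes the losses.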

Designing Counterfactual Generators using Deep Model Inversion

Oct 05, 2021

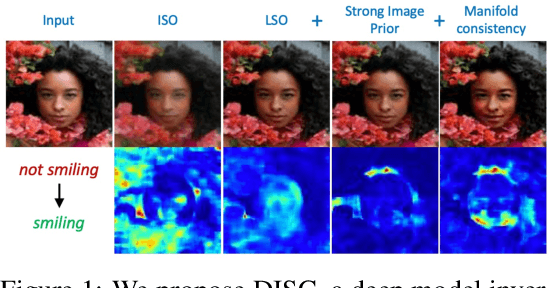

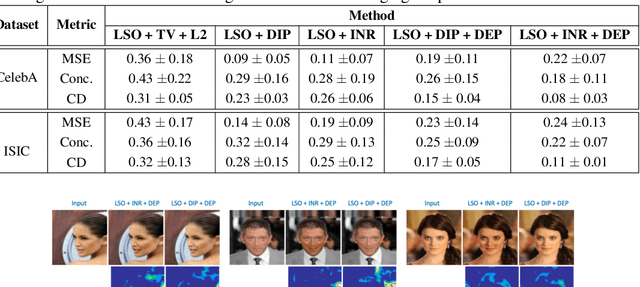

Abstract:Explanation techniques that synthesize small, interpretable changes to a given image while producing desired changes in the model prediction have become popular for introspecting black-box models. Commonly referred to as counterfactuals, the synthesized explanations are required to contain discernible changes (for easy interpretability) while also being realistic (consistent with the data manifold). In this paper, we focus on the case where we have access only to the trained deep classifier and not the actual training data. While the problem of inverting deep models to synthesize images from the training distribution has been explored, our goal is to develop a deep inversion approach to generate counterfactual explanations for a given query image. Despite their effectiveness in conditional image synthesis, we show that existing deep inversion methods are insufficient for producing meaningful counterfactuals. We propose DISC (Deep Inversion for Synthesizing Counterfactuals) that improves upon deep inversion by (a) utilizing stronger image priors, (b) incorporating a novel manifold consistency objective, and (c) adopting a progressive optimization strategy. We find that, in addition to producing visually meaningful explanations, the counterfactuals from DISC are effective at learning classifier decision boundaries and are robust to unknown test-time corruptions.

On the Design of Deep Priors for Unsupervised Audio Restoration

Apr 14, 2021

Abstract:Unsupervised deep learning methods for solving audio restoration problems extensively rely on carefully tailored neural architectures that carry strong inductive biases for defining priors in the time or spectral domain. In this context, a lot of recent success has been achieved with sophisticated convolutional network constructions that recover audio signals in the spectral domain. However, in practice, audio priors require careful engineering of the convolutional kernels to be effective at solving ill-posed restoration tasks, while also being easy to train. To this end, in this paper, we propose a new U-Net based prior that does not impact either the network complexity or convergence behavior of existing convolutional architectures, yet leads to significantly improved restoration. In particular, we advocate the use of carefully designed dilation schedules and dense connections in the U-Net architecture to obtain powerful audio priors. Using empirical studies on standard benchmarks and a variety of ill-posed restoration tasks, such as audio denoising, in-painting and source separation, we demonstrate that our proposed approach consistently outperforms widely adopted audio prior architectures.
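
To see why a dilation schedule matters without adding parameters, the sketch below computes the receptive field of a stack of stride-1 dilated convolutions, which grows by (kernel size - 1) x dilation per layer. The two schedules compared are hypothetical examples and are not the schedules used in the paper.

```python
def receptive_field(kernel_size, dilations):
    """Receptive field (in samples) of stacked stride-1 dilated convolutions."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


# Same depth and kernel size, hence the same parameter count per layer.
undilated = receptive_field(kernel_size=3, dilations=[1, 1, 1, 1])
exponential = receptive_field(kernel_size=3, dilations=[1, 2, 4, 8])
```

Four undilated layers cover 9 samples, while the exponential schedule covers 31 samples with the same number of weights.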

Loss Estimators Improve Model Generalization

Mar 05, 2021

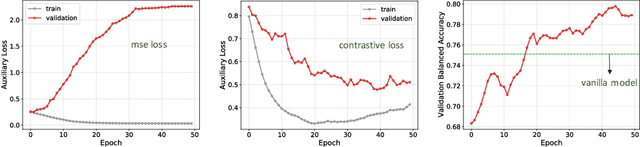

Abstract:With increased interest in adopting AI methods for clinical diagnosis, a vital step towards safe deployment of such tools is to ensure that the models not only produce accurate predictions but also do not generalize to data regimes where the training data provide no meaningful evidence. Existing approaches for ensuring that the distribution of model predictions is similar to the true distribution rely on explicit uncertainty estimators that are inherently hard to calibrate. In this paper, we propose to train a loss estimator alongside the predictive model, using a contrastive training objective, to directly estimate the prediction uncertainties. Interestingly, we find that, in addition to producing well-calibrated uncertainties, this approach improves the generalization behavior of the predictor. Using a dermatology use-case, we show the impact of loss estimators on model generalization, in terms of both its fidelity on in-distribution data and its ability to detect out-of-distribution samples or new classes unseen during training.
