Houde Liu

GEN3D: Generating Domain-Free 3D Scenes from a Single Image

Nov 18, 2025

Abstract:Despite recent advancements in neural 3D reconstruction, its dependence on dense multi-view captures restricts its broader applicability. Additionally, 3D scene generation is vital for advancing embodied AI and world models, which depend on diverse, high-quality scenes for learning and evaluation. In this work, we propose Gen3d, a novel method for generating high-quality, wide-scope, and generic 3D scenes from a single image. After the initial point cloud is created by lifting the RGBD image, Gen3d maintains and expands its world model. The 3D scene is finalized by optimizing a Gaussian splatting representation. Extensive experiments on diverse datasets demonstrate the strong generalization capability and superior performance of our method in generating a world model and synthesizing high-fidelity and consistent novel views.
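The "lifting" step mentioned in the abstract can be illustrated with standard pinhole back-projection. This is a minimal sketch, not Gen3d's implementation: the function name `lift_depth_to_points`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the rule that non-positive depth marks an invalid pixel are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def lift_depth_to_points(
    depth: Sequence[Sequence[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Back-project a depth map into camera-frame 3D points.

    Pinhole model (assumed): X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with non-positive depth are treated as invalid and skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):        # v indexes image rows
        for u, z in enumerate(row):        # u indexes image columns
            if z <= 0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


# Hypothetical 2x2 depth map (metres) and made-up intrinsics.
depth = [[1.0, 2.0], [0.0, 4.0]]
cloud = lift_depth_to_points(depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
```

In a full pipeline each point would also carry the RGB value of its source pixel; that is omitted here to keep the sketch short.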

Distribution Preference Optimization: A Fine-grained Perspective for LLM Unlearning

Oct 06, 2025

Abstract:As Large Language Models (LLMs) demonstrate remarkable capabilities learned from vast corpora, concerns regarding data privacy and safety are receiving increasing attention. LLM unlearning, which aims to remove the influence of specific data while preserving overall model utility, is becoming an important research area. One mainstream class of unlearning methods is optimization-based, achieving forgetting directly through fine-tuning, as exemplified by Negative Preference Optimization (NPO). However, NPO's effectiveness is limited by its inherent lack of explicit positive preference signals. Attempts to introduce such signals by constructing preferred responses often require domain-specific knowledge or well-designed prompts, fundamentally restricting their generalizability. In this paper, we shift the focus to the distribution level, directly targeting the next-token probability distribution instead of entire responses, and derive a novel unlearning algorithm termed Distribution Preference Optimization (DiPO). We show that the requisite preference distribution pairs for DiPO, which are distributions over the model's output tokens, can be constructed by selectively amplifying or suppressing the model's high-confidence output logits, thereby effectively overcoming NPO's limitations. We theoretically prove the consistency of DiPO's loss function with the desired unlearning direction. Extensive experiments demonstrate that DiPO achieves a strong trade-off between model utility and forget quality. Notably, DiPO attains the highest forget quality on the TOFU benchmark, and maintains leading scalability and sustainability in utility preservation on the MUSE benchmark.
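To make "amplifying or suppressing the model's high-confidence output logits" concrete, here is a small sketch of one way a pair of next-token distributions could be derived from a single logit vector. It is not the DiPO construction: the top-k selection, the additive shift `delta`, and the helper name `shifted_distribution` are assumptions, and the abstract does not say which of the two distributions serves as the preferred one.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def shifted_distribution(
    logits: Sequence[float], top_k: int, delta: float
) -> List[float]:
    """Add `delta` to the `top_k` largest logits, then renormalise.

    A positive delta amplifies the high-confidence tokens; a negative
    delta suppresses them. Both choices are illustrative assumptions.
    """
    order = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    shifted = list(logits)
    for i in order[:top_k]:
        shifted[i] += delta
    return softmax(shifted)


# Hypothetical logits over a four-token vocabulary.
logits = [2.0, 1.0, 0.0, -1.0]
base = softmax(logits)
amplified = shifted_distribution(logits, top_k=1, delta=2.0)
suppressed = shifted_distribution(logits, top_k=1, delta=-2.0)
```

The appeal of working at this level is that the pair is built from the model's own outputs, so no hand-written preferred responses are needed.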

DeepMF: Deep Motion Factorization for Closed-Loop Safety-Critical Driving Scenario Simulation

Dec 23, 2024

Abstract:Safety-critical traffic scenarios are of great practical relevance to evaluating the robustness of autonomous driving (AD) systems. Given that these long-tail events are extremely rare in real-world traffic data, there is a growing body of work dedicated to automatic traffic scenario generation. However, nearly all existing algorithms for generating safety-critical scenarios rely on snippets of previously recorded traffic events, transforming normal traffic flow into accident-prone situations directly. In other words, safety-critical traffic scenario generation operates in hindsight and is not applicable to newly encountered and open-ended traffic events. In this paper, we propose the Deep Motion Factorization (DeepMF) framework, which extends static safety-critical driving scenario generation to closed-loop and interactive adversarial traffic simulation. DeepMF casts safety-critical traffic simulation as a Bayesian factorization that includes the assignment of hazardous traffic participants, the motion prediction of the selected opponents, the reaction estimation of the autonomous vehicle (AV), and the probability estimation of accident occurrence. All the aforementioned terms are calculated using decoupled deep neural networks, with inputs limited to the current observation and historical states. Consequently, DeepMF can effectively and efficiently simulate safety-critical traffic scenarios at any triggered time and for any duration by maximizing the compounded posterior probability of traffic risk. Extensive experiments demonstrate that DeepMF excels in terms of risk management, flexibility, and diversity, showcasing outstanding performance in simulating a wide range of realistic, high-risk traffic scenarios.

Novelty-based Sample Reuse for Continuous Robotics Control

Oct 17, 2024

Abstract:In reinforcement learning, agents collect state information and rewards through environmental interactions, which are essential for policy refinement. This process is notably time-consuming, especially in complex robotic simulations and real-world applications. Traditional algorithms usually re-engage with the environment after processing a single batch of samples, thereby failing to fully capitalize on historical data. However, frequently observed states, with reliable value estimates, require minimal updates; in contrast, rarely observed states necessitate more intensive updates to achieve accurate value estimates. To address uneven sample utilization, we propose Novelty-guided Sample Reuse (NSR). NSR provides extra updates for infrequent, novel states and skips additional updates for frequent states, maximizing sample use before interacting with the environment again. Our experiments show that NSR improves the convergence rate and success rate of algorithms without significantly increasing time consumption. Our code is publicly available at https://github.com/ppksigs/NSR-DDPG-HER.
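The reuse rule described above (more updates for rare states, none for common ones) can be sketched with a simple visit counter. This is an illustration under stated assumptions, not the NSR implementation from the linked repository: measuring novelty by discretised visit counts, and the names `bucket`, `extra_updates`, `threshold`, and `max_extra`, are all hypothetical.

```python
from collections import Counter
from typing import Sequence, Tuple


def bucket(state: Sequence[float], resolution: float = 0.5) -> Tuple[int, ...]:
    """Discretise a continuous state so that visits can be counted."""
    return tuple(round(x / resolution) for x in state)


def extra_updates(visit_count: int, threshold: int = 10, max_extra: int = 4) -> int:
    """Map a visit count to a number of additional gradient updates.

    States seen at least `threshold` times get no extra updates; rarer
    states get proportionally more, capped at `max_extra`.
    """
    if visit_count >= threshold:
        return 0
    remaining = threshold - visit_count
    # Integer ceiling of max_extra * remaining / threshold.
    return -(-max_extra * remaining // threshold)


# Hypothetical visit history: one common state, one rarely seen state.
counts: Counter = Counter()
for state in [(0.0, 0.0)] * 12 + [(3.0, 1.0)]:
    counts[bucket(state)] += 1

common_extra = extra_updates(counts[bucket((0.0, 0.0))])
novel_extra = extra_updates(counts[bucket((3.0, 1.0))])
```

In a training loop, a sampled batch would be replayed `extra_updates(...)` additional times before the agent returns to the environment, which is how the sketch trades extra computation for fewer interactions.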

MBC: Multi-Brain Collaborative Control for Quadruped Robots

Sep 24, 2024

Abstract:In quadruped robot locomotion, the Blind Policy and the Perceptive Policy each have their own advantages and limitations. The Blind Policy relies on preset sensor information and algorithms and is suitable for known and structured environments, but it lacks adaptability in complex or unknown environments. The Perceptive Policy uses visual sensors to obtain detailed environmental information, allowing it to adapt to complex terrains, but its effectiveness is limited under occluded conditions, especially when perception fails. Unlike the Blind Policy, the Perceptive Policy is not as robust under these conditions. To address these challenges, we propose MBC, a Multi-Brain Collaborative system that incorporates the concepts of Multi-Agent Reinforcement Learning and introduces collaboration between the Blind Policy and the Perceptive Policy. By applying this multi-policy collaborative model to a quadruped robot, the robot can maintain stable locomotion even when the perceptual system is impaired or observational data is incomplete. Our simulations and real-world experiments demonstrate that this system significantly improves the robot's passability and robustness against perception failures in complex environments, validating the effectiveness of multi-policy collaboration in enhancing robotic motion performance.

Range-SLAM: Ultra-Wideband-Based Smoke-Resistant Real-Time Localization and Mapping

Sep 15, 2024

Abstract:This paper presents Range-SLAM, a real-time, lightweight SLAM system designed to address the challenges of localization and mapping in environments with smoke and other harsh conditions using Ultra-Wideband (UWB) signals. While optical sensors like LiDAR and cameras struggle in low-visibility environments, UWB signals provide a robust alternative for real-time positioning. The proposed system uses general UWB devices to achieve accurate mapping and localization without relying on expensive LiDAR or other dedicated hardware. Using only the distance and Received Signal Strength Indicator (RSSI) provided by UWB sensors in relation to anchors, we combine the motion of the tag-carrying agent with a raycasting algorithm to construct a 2D occupancy grid map in real time. To enhance localization in challenging conditions, a Weighted Least Squares (WLS) method is employed. Extensive real-world experiments, including smoke-filled environments and simulated

Structural Optimization of Lightweight Bipedal Robot via SERL

Aug 28, 2024

Abstract:Designing a bipedal robot is a complex and challenging task, especially when dealing with a multitude of structural parameters. Traditional design methods often rely on human intuition and experience. However, such approaches are time-consuming and labor-intensive, lack theoretical guidance, and struggle to reach optimal designs within vast design spaces, thus failing to fully exploit the inherent performance potential of robots. In this context, this paper introduces the SERL (Structure Evolution Reinforcement Learning) algorithm, which combines reinforcement learning for locomotion tasks with evolutionary algorithms. The aim is to identify the optimal parameter combinations within a given multidimensional design space. Using the SERL algorithm, we successfully designed a bipedal robot named Wow Orin, whose optimal leg lengths are obtained through optimization based on body structure and motor torque. We have experimentally validated the effectiveness of the SERL algorithm, which is capable of finding the best structure within the specified design space and task conditions. Additionally, to assess the performance gap between our designed robot and current state-of-the-art robots, we compared Wow Orin with the mainstream bipedal robots Cassie and Unitree H1. A series of experimental results demonstrate the outstanding energy efficiency and performance of Wow Orin, further validating the feasibility of applying the SERL algorithm to practical design.

Quadruped robot traversing 3D complex environments with limited perception

Apr 30, 2024

Abstract:Traversing 3-D complex environments has always been a significant challenge for legged locomotion. Existing methods typically rely on external sensors such as vision and lidar to preemptively react to obstacles by acquiring environmental information. However, in scenarios like nighttime or dense forests, external sensors often fail to function properly, necessitating robots to rely on proprioceptive sensors to perceive diverse obstacles in the environment and respond promptly. This task is undeniably challenging. Our research finds that methods based on collision detection can enhance a robot's perception of environmental obstacles. In this work, we propose an end-to-end learning-based quadruped robot motion controller that relies solely on proprioceptive sensing. This controller can accurately detect, localize, and agilely respond to collisions in unknown and complex 3D environments, thereby improving the robot's traversability in complex environments. We demonstrate in both simulation and real-world experiments that our method enables quadruped robots to successfully traverse challenging obstacles in various complex environments.

Agile and versatile bipedal robot tracking control through reinforcement learning

Apr 12, 2024

Abstract:The remarkable athletic intelligence displayed by humans in complex dynamic movements such as dancing and gymnastics suggests that the balance mechanism in biological beings is decoupled from specific movement patterns. This decoupling allows for the execution of both learned and unlearned movements under certain constraints while maintaining balance through minor whole-body coordination. To replicate this balance ability and body agility, this paper proposes a versatile controller for bipedal robots. This controller achieves ankle and body trajectory tracking across a wide range of gaits using a single small-scale neural network, which is based on a model-based IK solver and reinforcement learning. We consider a single step as the smallest control unit and design a universally applicable control input form suitable for any single-step variation. Highly flexible gait control can be achieved by combining these minimal control units with high-level policy through our extensible control interface. To enhance the trajectory-tracking capability of our controller, we utilize a three-stage training curriculum. After training, the robot can move freely between target footholds at varying distances and heights. The robot can also maintain static balance without repeated stepping to adjust posture. Finally, we evaluate the tracking accuracy of our controller on various bipedal tasks, and the effectiveness of our control framework is verified in the simulation environment.

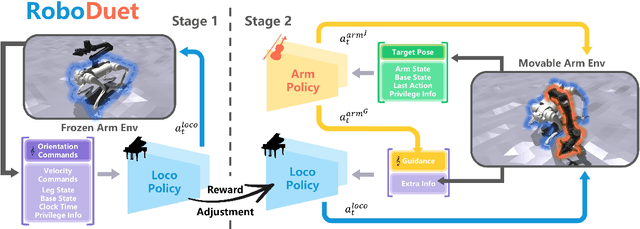

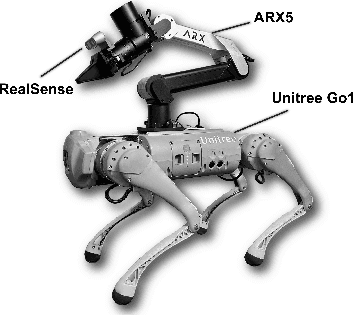

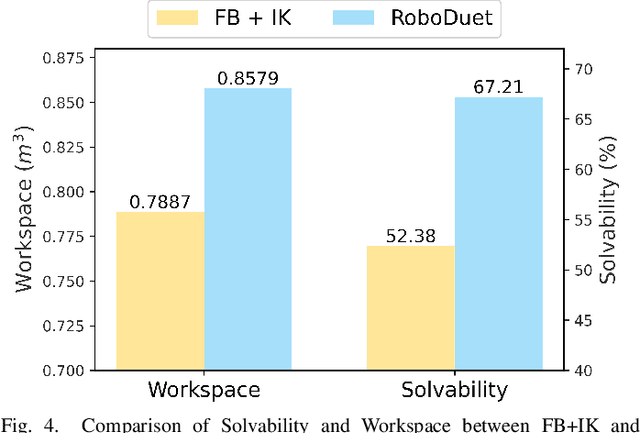

RoboDuet: A Framework Affording Mobile-Manipulation and Cross-Embodiment

Mar 27, 2024

Abstract:Combining the mobility of legged robots with the manipulation skills of arms has the potential to significantly expand the operational range and enhance the capabilities of robotic systems in performing various mobile manipulation tasks. Existing approaches are confined to imprecise six-degree-of-freedom (DoF) manipulation and possess a limited arm workspace. In this paper, we propose a novel framework, RoboDuet, which employs two collaborative policies to realize locomotion and manipulation simultaneously, achieving whole-body control through interaction between the two. Surprisingly, going beyond large-range pose tracking, we find that the two-policy framework may enable cross-embodiment deployment, such as using different quadrupedal robots or other arms. Our experiments demonstrate that the policies trained through RoboDuet can accomplish stable gaits, agile 6D end-effector pose tracking, and zero-shot exchange of legged robots, and can be deployed in the real world to perform various mobile manipulation tasks. Our project page with demo videos is at https://locomanip-duet.github.io.
