Hongbin Sun

Expand Your SCOPE: Semantic Cognition over Potential-Based Exploration for Embodied Visual Navigation

Nov 12, 2025

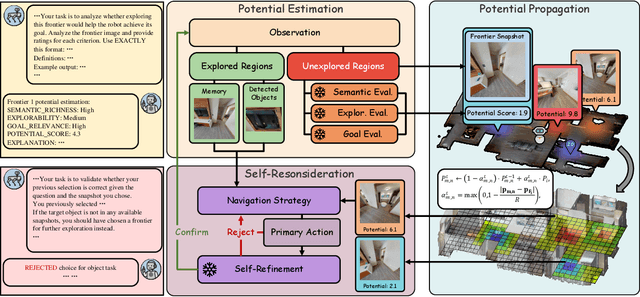

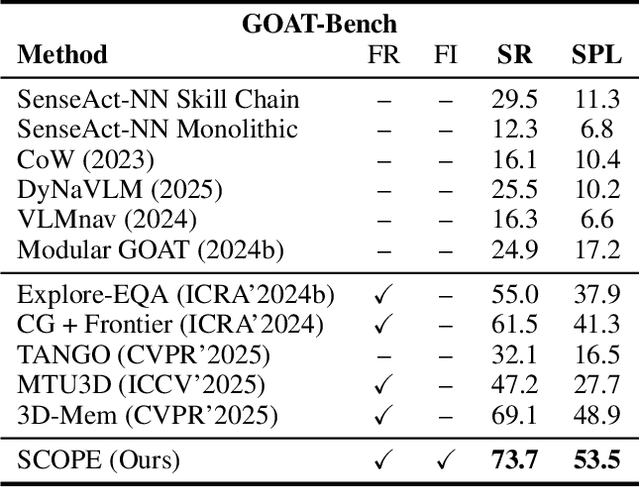

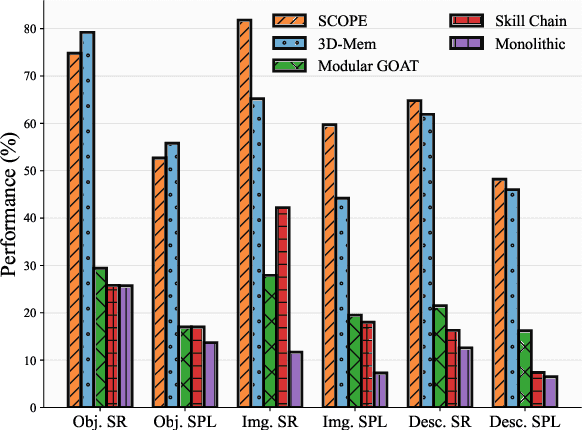

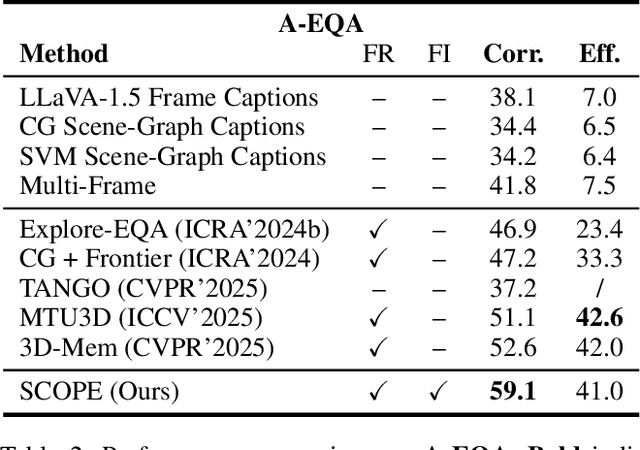

Abstract: Embodied visual navigation remains a challenging task, as agents must explore unknown environments with limited knowledge. Existing zero-shot studies have shown that incorporating memory mechanisms to support goal-directed behavior can improve long-horizon planning performance. However, they overlook visual frontier boundaries, which fundamentally dictate future trajectories and observations, and fall short of inferring the relationship between partial visual observations and navigation goals. In this paper, we propose Semantic Cognition Over Potential-based Exploration (SCOPE), a zero-shot framework that explicitly leverages frontier information to drive potential-based exploration, enabling more informed and goal-relevant decisions. SCOPE estimates exploration potential with a Vision-Language Model and organizes it into a spatio-temporal potential graph, capturing boundary dynamics to support long-horizon planning. In addition, SCOPE incorporates a self-reconsideration mechanism that revisits and refines prior decisions, enhancing reliability and reducing overconfident errors. Experimental results on two diverse embodied navigation tasks show that SCOPE outperforms state-of-the-art baselines by 4.6% in accuracy. Further analysis demonstrates that its core components lead to improved calibration, stronger generalization, and higher decision quality.

TensorAR: Refinement is All You Need in Autoregressive Image Generation

May 22, 2025

Abstract: Autoregressive (AR) image generators offer a language-model-friendly approach to image generation by predicting discrete image tokens in a causal sequence. However, unlike diffusion models, AR models lack a mechanism to refine previous predictions, limiting their generation quality. In this paper, we introduce TensorAR, a new AR paradigm that reformulates image generation from next-token prediction to next-tensor prediction. By generating overlapping windows of image patches (tensors) in a sliding fashion, TensorAR enables iterative refinement of previously generated content. To prevent information leakage during training, we propose a discrete tensor noising scheme, which perturbs input tokens via codebook-indexed noise. TensorAR is implemented as a plug-and-play module compatible with existing AR models. Extensive experiments on LlamaGEN, Open-MAGVIT2, and RAR demonstrate that TensorAR significantly improves the generation performance of autoregressive models.
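To make the "overlapping windows" idea concrete, here is a minimal decoding sketch of one plausible reading of the abstract. It is not the TensorAR implementation: `predict_window` is a hypothetical stand-in for the model, the window size `k` and the rule "a later window overwrites earlier guesses for the same position" are assumptions, and the noising scheme used in training is not modeled.

```python
from typing import Callable, List, Tuple


def sliding_window_decode(
    predict_window: Callable[[List[int], int], List[int]],
    length: int,
    k: int,
) -> Tuple[List[int], List[List[int]]]:
    """Decode `length` tokens, predicting a window of `k` tokens per step.

    At step t the (hypothetical) model sees the tokens before position t and
    predicts positions t .. t+k-1. Positions already guessed by an earlier
    window are overwritten, so each position is refined up to k times.
    Returns the final tokens and, per position, every guess made for it.
    """
    tokens: List[int] = []
    history: List[List[int]] = [[] for _ in range(length)]
    for t in range(length):
        window = predict_window(tokens[:t], k)
        for offset, tok in enumerate(window):
            pos = t + offset
            if pos >= length:
                break
            if pos < len(tokens):
                tokens[pos] = tok      # refine an earlier guess
            else:
                tokens.append(tok)     # first guess for this position
            history[pos].append(tok)
    return tokens, history


# Toy predictor (assumption): encodes the step index so refinements are visible.
def toy_predictor(prefix: List[int], k: int) -> List[int]:
    return [len(prefix) * 10 + j for j in range(k)]


final_tokens, guess_history = sliding_window_decode(toy_predictor, length=4, k=3)
```

With `k = 1` the loop reduces to ordinary next-token decoding, which is the sense in which a window-based scheme can wrap an existing AR model.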

Neuc-MDS: Non-Euclidean Multidimensional Scaling Through Bilinear Forms

Nov 16, 2024

Abstract: We introduce Non-Euclidean-MDS (Neuc-MDS), an extension of classical Multidimensional Scaling (MDS) that accommodates non-Euclidean and non-metric inputs. The main idea is to generalize the standard inner product to symmetric bilinear forms to utilize the negative eigenvalues of dissimilarity Gram matrices. Neuc-MDS efficiently optimizes the choice of (both positive and negative) eigenvalues of the dissimilarity Gram matrix to reduce STRESS, the sum of squared pairwise error. We provide an in-depth error analysis and proofs of the optimality in minimizing lower bounds of STRESS. We demonstrate Neuc-MDS's ability to address limitations of classical MDS raised by prior research, and test it on various synthetic and real-world datasets in comparison with both linear and non-linear dimension reduction methods.
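The role of negative eigenvalues can be illustrated with a short NumPy sketch. It follows the classical MDS recipe (double-centered Gram matrix, eigendecomposition) but keeps a sign per coordinate so that the embedding lives under a symmetric bilinear form. The selection rule used here, keeping the `k` eigenvalues of largest magnitude, is a simplifying assumption and not the optimized selection the paper describes; the function names are hypothetical.

```python
import numpy as np


def bilinear_mds(D, k):
    """Embed points from an n x n dissimilarity matrix D into k coordinates.

    Returns (X, signs). X has shape (n, k); signs[i] is +1 or -1 and is the
    sign of coordinate i in the form <x, y> = sum_i signs[i] * x_i * y_i.
    """
    D = np.asarray(D, dtype=float)
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n       # centering matrix
    B = -0.5 * J @ (D ** 2) @ J               # Gram matrix of the dissimilarities
    evals, evecs = np.linalg.eigh(B)          # B is symmetric
    # Assumption: keep the k eigenvalues of largest magnitude, whatever their sign.
    idx = np.argsort(-np.abs(evals))[:k]
    lam = evals[idx]
    X = evecs[:, idx] * np.sqrt(np.abs(lam))
    return X, np.sign(lam)


def squared_dissimilarities(X, signs):
    """Pairwise 'squared distances' under the signed bilinear form."""
    diff = X[:, None, :] - X[None, :, :]
    return np.einsum("ijk,k->ij", diff ** 2, signs)


# Three points that violate the triangle inequality (1 + 1 < 3),
# so no Euclidean embedding reproduces them exactly.
D = np.array([[0.0, 1.0, 3.0],
              [1.0, 0.0, 1.0],
              [3.0, 1.0, 0.0]])
X, signs = bilinear_mds(D, k=2)
recovered = squared_dissimilarities(X, signs)
```

For this input the Gram matrix has one positive and one negative eigenvalue, and keeping both reproduces the squared dissimilarities exactly. Classical MDS would drop the negative one and incur error.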

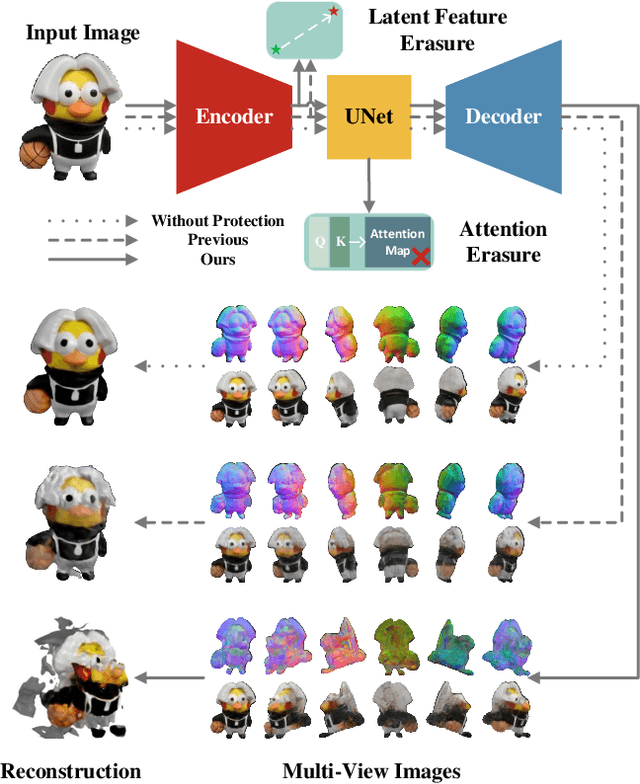

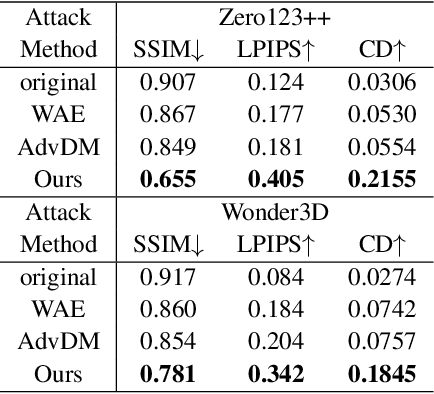

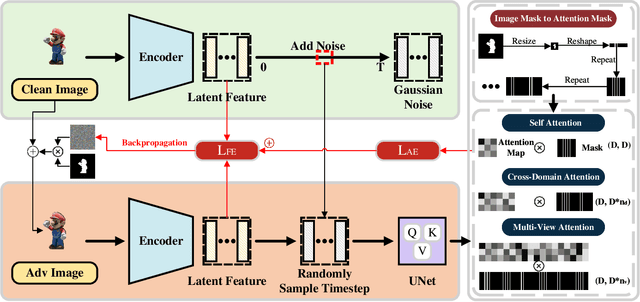

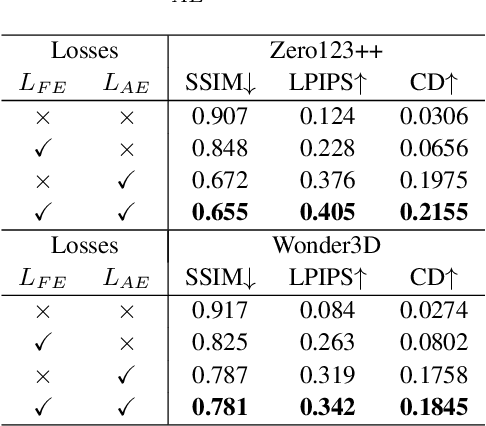

Latent Feature and Attention Dual Erasure Attack against Multi-View Diffusion Models for 3D Assets Protection

Aug 21, 2024

Abstract: Multi-View Diffusion Models (MVDMs) enable remarkable improvements in the field of 3D geometric reconstruction, but the issue regarding intellectual property has received increasing attention due to unauthorized imitation. Recently, some works have utilized adversarial attacks to protect copyright. However, all these works focus on single-image generation tasks which only need to consider the inner feature of images. Previous methods are inefficient in attacking MVDMs because they lack the consideration of disrupting the geometric and visual consistency among the generated multi-view images. This paper is the first to address the intellectual property infringement issue arising from MVDMs. Accordingly, we propose a novel latent feature and attention dual erasure attack to disrupt the distribution of latent feature and the consistency across the generated images from multi-view and multi-domain simultaneously. The experiments conducted on SOTA MVDMs indicate that our approach achieves superior performances in terms of attack effectiveness, transferability, and robustness against defense methods. Therefore, this paper provides an efficient solution to protect 3D assets from MVDMs-based 3D geometry reconstruction.

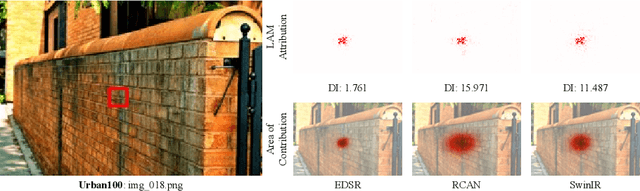

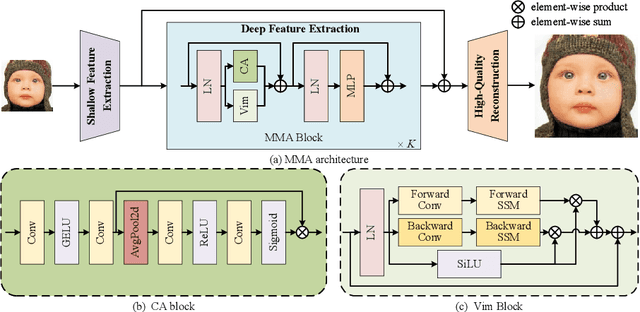

Activating Wider Areas in Image Super-Resolution

Mar 13, 2024

Abstract: The prevalence of convolution neural networks (CNNs) and vision transformers (ViTs) has markedly revolutionized the area of single-image super-resolution (SISR). To further boost the SR performances, several techniques, such as residual learning and attention mechanism, are introduced, which can be largely attributed to a wider range of activated area, that is, the input pixels that strongly influence the SR results. However, the possibility of further improving SR performance through another versatile vision backbone remains an unresolved challenge. To address this issue, in this paper, we unleash the representation potential of the modern state space model, i.e., Vision Mamba (Vim), in the context of SISR. Specifically, we present three recipes for better utilization of Vim-based models: 1) Integration into a MetaFormer-style block; 2) Pre-training on a larger and broader dataset; 3) Employing complementary attention mechanism, upon which we introduce the MMA. The resulting network MMA is capable of finding the most relevant and representative input pixels to reconstruct the corresponding high-resolution images. Comprehensive experimental analysis reveals that MMA not only achieves competitive or even superior performance compared to state-of-the-art SISR methods but also maintains relatively low memory and computational overheads (e.g., +0.5 dB PSNR elevation on Manga109 dataset with 19.8 M parameters at the scale of 2). Furthermore, MMA proves its versatility in lightweight SR applications. Through this work, we aim to illuminate the potential applications of state space models in the broader realm of image processing rather than SISR, encouraging further exploration in this innovative direction.
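For readers who want to interpret the "+0.5 dB PSNR" figure, the sketch below computes PSNR from its standard definition, 10·log10(MAX² / MSE), and converts a dB gain into the implied ratio of mean squared errors. The function names and the 8-bit `max_value` default are assumptions for illustration. Super-resolution benchmarks often evaluate on the luminance channel with cropped borders, and this sketch does neither.

```python
import numpy as np


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")                   # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


def mse_ratio_for_gain(gain_db):
    """Ratio new_MSE / old_MSE implied by a PSNR improvement of gain_db."""
    return 10.0 ** (-gain_db / 10.0)


ratio = mse_ratio_for_gain(0.5)               # MSE ratio implied by +0.5 dB
```

A gain of 0.5 dB corresponds to an MSE ratio of about 0.89, that is, roughly 11% lower mean squared error at the same peak value.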

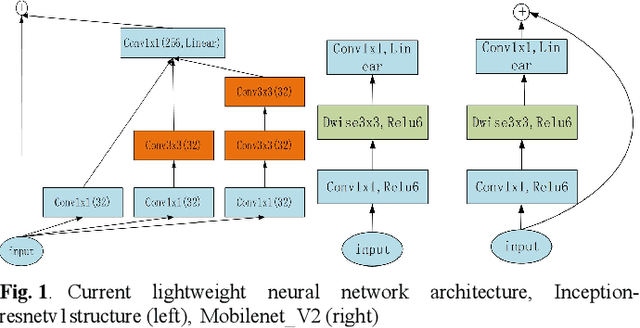

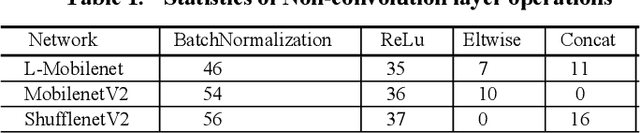

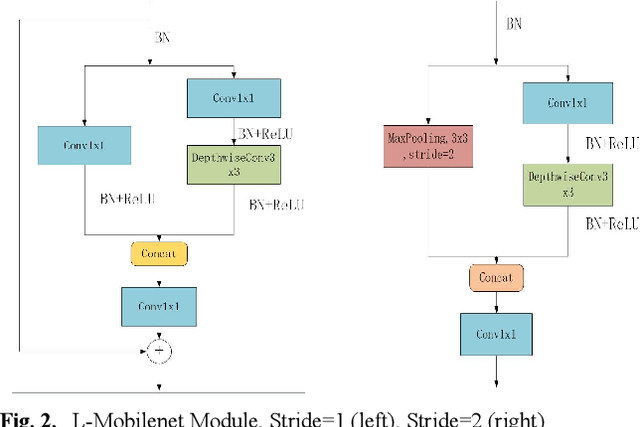

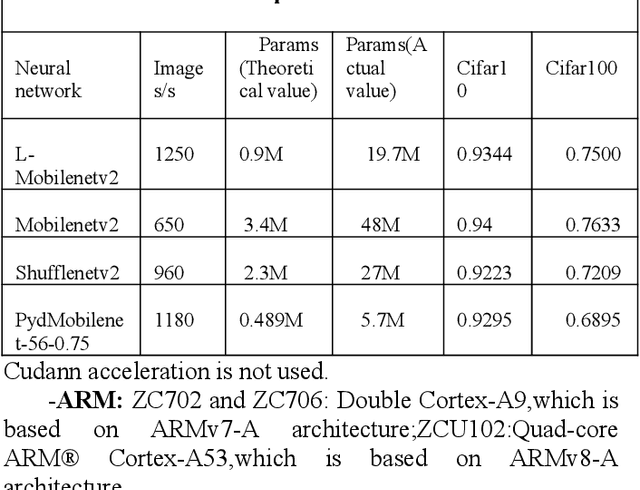

Exploring Hardware Friendly Bottleneck Architecture in CNN for Embedded Computing Systems

Mar 11, 2024

Abstract: In this paper, we explore how to design lightweight CNN architecture for embedded computing systems. We propose L-Mobilenet model for ZYNQ based hardware platform. L-Mobilenet can adapt well to the hardware computing and accelerating, and its network structure is inspired by the state-of-the-art work of Inception-ResnetV1 and MobilenetV2, which can effectively reduce parameters and delay while maintaining the accuracy of inference. We deploy our L-Mobilenet model to ZYNQ embedded platform for fully evaluating the performance of our design. By measuring in cifar10 and cifar100 datasets, L-Mobilenet model is able to gain 3x speed up and 3.7x fewer parameters than MobileNetV2 while maintaining a similar accuracy. It also can obtain 2x speed up and 1.5x fewer parameters than ShufflenetV2 while maintaining the same accuracy. Experiments show that our network model can obtain better performance because of the special considerations for hardware accelerating and software-hardware co-design strategies in our L-Mobilenet bottleneck architecture.

Networked Multiagent Safe Reinforcement Learning for Low-carbon Demand Management in Distribution Network

Nov 27, 2023

Abstract: This paper proposes a multiagent based bi-level operation framework for the low-carbon demand management in distribution networks considering the carbon emission allowance on the demand side. In the upper level, the aggregate load agents optimize the control signals for various types of loads to maximize the profits; in the lower level, the distribution network operator makes optimal dispatching decisions to minimize the operational costs and calculates the distribution locational marginal price and carbon intensity. The distributed flexible load agent has only incomplete information of the distribution network and cooperates with other agents using networked communication. Finally, the problem is formulated into a networked multi-agent constrained Markov decision process, which is solved using a safe reinforcement learning algorithm called consensus multi-agent constrained policy optimization considering the carbon emission allowance for each agent. Case studies with the IEEE 33-bus and 123-bus distribution network systems demonstrate the effectiveness of the proposed approach, in terms of satisfying the carbon emission constraint on demand side, ensuring the safe operation of the distribution network and preserving privacy of both sides.

Meta-Adapter: An Online Few-shot Learner for Vision-Language Model

Nov 07, 2023

Abstract: The contrastive vision-language pre-training, known as CLIP, demonstrates remarkable potential in perceiving open-world visual concepts, enabling effective zero-shot image recognition. Nevertheless, few-shot learning methods based on CLIP typically require offline fine-tuning of the parameters on few-shot samples, resulting in longer inference time and the risk of over-fitting in certain domains. To tackle these challenges, we propose the Meta-Adapter, a lightweight residual-style adapter, to refine the CLIP features guided by the few-shot samples in an online manner. With a few training samples, our method can enable effective few-shot learning capabilities and generalize to unseen data or tasks without additional fine-tuning, achieving competitive performance and high efficiency. Without bells and whistles, our approach outperforms the state-of-the-art online few-shot learning method by an average of 3.6% on eight image classification datasets with higher inference speed. Furthermore, our model is simple and flexible, serving as a plug-and-play module directly applicable to downstream tasks. Without further fine-tuning, Meta-Adapter obtains notable performance improvements in open-vocabulary object detection and segmentation tasks.
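The phrase "residual-style adapter ... guided by the few-shot samples" can be pictured with a small NumPy sketch. Everything below is an assumption made for illustration: the attention of each class embedding over its own support features, the mixing weight `alpha`, the `temperature`, and the function names. The abstract does not specify the adapter's architecture, and a real adapter would contain learned parameters, which this sketch omits.

```python
import numpy as np


def l2_normalize(a, axis=-1):
    """Scale vectors along `axis` to unit Euclidean norm."""
    return a / np.linalg.norm(a, axis=axis, keepdims=True)


def refine_class_embeddings(text_emb, support_feats, alpha=0.5, temperature=0.1):
    """Refine class embeddings with few-shot features via a residual update.

    text_emb:      (C, d) one embedding per class (e.g. from a text encoder).
    support_feats: (C, K, d) K few-shot image features per class.
    Returns refined, unit-norm class embeddings of shape (C, d).
    """
    text_emb = l2_normalize(np.asarray(text_emb, dtype=float))
    support_feats = l2_normalize(np.asarray(support_feats, dtype=float))
    # Softmax attention of each class embedding over its K support features.
    scores = np.einsum("cd,ckd->ck", text_emb, support_feats) / temperature
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    attended = np.einsum("ck,ckd->cd", weights, support_feats)
    # Residual connection: alpha = 0 leaves the original embeddings unchanged.
    return l2_normalize(text_emb + alpha * attended)


rng = np.random.default_rng(0)
text = rng.normal(size=(3, 8))          # 3 classes, 8-dim features (toy sizes)
support = rng.normal(size=(3, 4, 8))    # 4 shots per class
refined = refine_class_embeddings(text, support)
```

Because the update is a forward pass with no gradient steps on the few-shot samples, it can be applied at inference time, which is the sense of "online" in this sketch.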

SoTaNa: The Open-Source Software Development Assistant

Aug 25, 2023

Abstract: Software development plays a crucial role in driving innovation and efficiency across modern societies. To meet the demands of this dynamic field, there is a growing need for an effective software development assistant. However, existing large language models represented by ChatGPT suffer from limited accessibility, including training data and model weights. Although other large open-source models like LLaMA have shown promise, they still struggle with understanding human intent. In this paper, we present SoTaNa, an open-source software development assistant. SoTaNa utilizes ChatGPT to generate high-quality instruction-based data for the domain of software engineering and employs a parameter-efficient fine-tuning approach to enhance the open-source foundation model, LLaMA. We evaluate the effectiveness of SoTaNa in answering Stack Overflow questions and demonstrate its capabilities. Additionally, we discuss its capabilities in code summarization and generation, as well as the impact of varying the volume of generated data on model performance. Notably, SoTaNa can run on a single GPU, making it accessible to a broader range of researchers. Our code, model weights, and data are public at https://github.com/DeepSoftwareAnalytics/SoTaNa.

An Adaptive Approach for Probabilistic Wind Power Forecasting Based on Meta-Learning

Aug 15, 2023

Abstract: This paper studies an adaptive approach for probabilistic wind power forecasting (WPF) including offline and online learning procedures. In the offline learning stage, a base forecast model is trained via inner and outer loop updates of meta-learning, which endows the base forecast model with excellent adaptability to different forecast tasks, i.e., probabilistic WPF with different lead times or locations. In the online learning stage, the base forecast model is applied to online forecasting combined with incremental learning techniques. On this basis, the online forecast takes full advantage of recent information and the adaptability of the base forecast model. Two applications are developed based on our proposed approach concerning forecasting with different lead times (temporal adaptation) and forecasting for newly established wind farms (spatial adaptation), respectively. Numerical tests were conducted on real-world wind power data sets. Simulation results validate the advantages in adaptivity of the proposed methods compared with existing alternatives.
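The inner/outer loop structure mentioned in the abstract can be sketched on a toy problem. The code below is a first-order, MAML-style update on scalar linear regression tasks. It is a stand-in chosen for brevity, not the paper's probabilistic forecaster: the model `y_hat = w * x`, the step sizes `alpha` and `beta`, and the reuse of the same data for the inner and outer steps are all assumptions.

```python
import numpy as np


def grad(w, x, y):
    """Gradient of the mean squared error for the scalar model y_hat = w * x."""
    return float(np.mean(2.0 * (w * x - y) * x))


def adapt(w, x, y, alpha, steps=1):
    """Inner loop / online step: a few gradient steps on one task's data."""
    for _ in range(steps):
        w = w - alpha * grad(w, x, y)
    return w


def meta_train(tasks, w=0.0, alpha=0.05, beta=0.05, iters=100):
    """Outer loop: move the shared initialization using first-order gradients
    evaluated at each task's adapted parameter."""
    for _ in range(iters):
        outer = 0.0
        for x, y in tasks:
            w_task = adapt(w, x, y, alpha)     # inner-loop adaptation
            outer += grad(w_task, x, y)        # first-order outer gradient
        w = w - beta * outer / len(tasks)
    return w


x = np.array([1.0, 2.0, 3.0])
tasks = [(x, 2.0 * x), (x, 4.0 * x)]           # offline tasks: slopes 2 and 4
w0 = meta_train(tasks)                         # learned initialization

# "Online" stage on an unseen task (slope 5): one adaptation step from w0.
y_new = 5.0 * x
w_new = adapt(w0, x, y_new, alpha=0.05)
loss_before = float(np.mean((w0 * x - y_new) ** 2))
loss_after = float(np.mean((w_new * x - y_new) ** 2))
```

In this toy setting the learned initialization settles at the mean task slope, and a single adaptation step on the new task lowers its error. The analogy to the abstract is that offline meta-training supplies a starting point from which online updates on recent data adapt quickly.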
